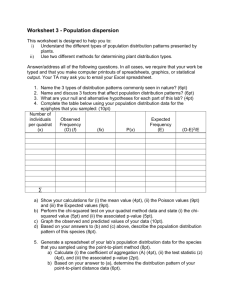

2008 Probability / Mathematical Statistics Comprehensive Exam (100 Points)


1. Suppose $P(A') = 0.4$, $P(B') = 0.7$, and $P(A \cap B) = 0.2$, where $A'$ denotes the complement of $A$. Find:

(a) (2pt) $P(A \cup B)$

(b) (3pt) $P(B \mid A)$

(c) (3pt) $P(B \mid A')$

(d) (2pt) Are events $A$ and $B$ independent? Justify your answer.

2. $X$ is a continuous random variable with pdf

$$f(x) = \begin{cases} (x - 1)/4 & \text{if } 2 \le x \le 4 \\ 0 & \text{otherwise} \end{cases}$$

(a) (4pt) Find $E(X)$.

(b) (4pt) Find the CDF $F(x)$. Be sure to define $F(x)$ for all real numbers.

3. (3pt) A box contains 10 balls that are identical except for color: 4 are white and 6 are red. If I pick a white ball, I win a prize. If I pick a red ball, I pay $2.00. The ball is replaced after being selected. Let X be the number of times I play until (and including) the second time I win. What is the distribution of X, and what are the associated parameter values?

4. (3pt) In a manufacturing process that makes light bulbs, there is a 0.01 probability that any particular bulb produced is defective. Assume this probability is constant over time. The bulbs are put into boxes containing 100 bulbs. Let X be the number of defective bulbs in a randomly selected box of bulbs. What is the distribution of X, and what are the associated parameter values?

5. (4pt) $X$ and $Y$ are random variables with joint pdf

$$f(x, y) = \begin{cases} \frac{1}{2}xy & \text{for } 0 < y < x < 2 \\ 0 & \text{otherwise} \end{cases}$$

Find $f_X(x)$, the marginal pdf for $X$.

6. Two discrete random variables $X$ and $Y$ have joint pdf defined by the following table:

|         | $Y = 1$ | $Y = 2$ |
|---------|---------|---------|
| $X = 1$ | .10     | .30     |
| $X = 2$ | .15     | .45     |

(a) (2pt) What is the marginal pdf $f_Y(y)$?

(b) (2pt) What is the conditional pdf $f(Y \mid X = 1)$?

(c) (2pt) What is $E(Y \mid X = 1)$?

(d) (2pt) Are $X$ and $Y$ independent? Justify your answer.

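7. (6pt) Find the pdf of $Z = \dfrac{1}{X}$ if $X$ is a random variable with pdf and CDF

$$f_X(x) = \begin{cases} \dfrac{2}{x^3} & \text{for } 1 < x < \infty \\ 0 & \text{otherwise} \end{cases} \qquad F_X(x) = \begin{cases} 1 - \dfrac{1}{x^2} & \text{for } 1 < x < \infty \\ 0 & \text{otherwise} \end{cases}$$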

8. Suppose $X_1 \sim \text{EXP}(3)$, $X_2 \sim \text{GAMMA}(3, 4)$, and $X_1$ and $X_2$ are independent.

(a) (5pt) What is the moment generating function of $Y = X_1 + X_2$?

(b) (3pt) What is the distribution of $Y$?

9. (8pt) Verify that $\bar{X}$ is a UMVUE of $\mu$ for a Poisson($\mu$) distribution based on a random sample of size $n$.

10. Let $X_1, X_2, \ldots, X_n$ be a random sample from $f(x; \theta) = \theta x^{\theta - 1}$ with support $0 < x < 1$ and with $\theta > 0$. Note this is the Beta($\theta$, 1) pdf.

(a) (3pt) Find the method of moments estimator (MME) of $\theta$.

(b) (6pt) Find $\hat{\theta}$, the maximum likelihood estimator (MLE) of $\theta$.

(c) (2pt) What is the MLE of $\dfrac{1}{\theta + 1}$?

11. (8pt) Suppose $X$ and $Y$ are independent EXP(1) random variables. Find the joint pdf of $S = X + Y$ and $T = X$.

12. (5pt) Find a complete sufficient statistic if we have a random sample $X_1, X_2, \ldots, X_n$ from a distribution with pdf

$$f(x; \theta) = \frac{(\ln \theta)\,\theta^{x}}{\theta - 1} \quad \text{for } 0 < x < 1 \text{ and } \theta > 1.$$

13. (7pt) Let $X_1, X_2, \ldots, X_n$ be a random sample from a Gamma(2, 1/$\theta$) pdf. It can be shown that $2\theta X_i \sim \chi^2(4)$. Determine an equal-tailed $100\gamma\%$ interval estimator for $\theta$ based on $Y = \sum_{i=1}^{n} 2\theta X_i$.

14. (5pt) Suppose $X_1, \ldots, X_{20}$ are a random sample from an EXP(2) distribution. Approximate the probability that $\bar{X} \ge 2.5$.

15. (6pt) Suppose $X$ and $Y$ are a random sample from an EXP(1) distribution. Find the joint pdf of $S = X + Y$ and $T = X$.

Probability / Math Stat Handout to Accompany Exam

• If $T$ is an unbiased estimator of $\tau(\theta)$, then the Cramer-Rao lower bound (CRLB) (given a random sample) is

$$\text{Var}(T) \ge \frac{[\tau'(\theta)]^2}{n\,E\left[\left(\dfrac{\partial}{\partial\theta} \ln f(X; \theta)\right)^{2}\right]}$$

assuming (i) the derivatives exist and (ii) they can be passed into the integral (continuous $X$) or summation (discrete $X$) when calculating expectations.
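The bound can be checked numerically on a small case. The sketch below is an illustration only and is not part of the handout: it assumes a Bernoulli($p$) model with $\tau(p) = p$, and the function names `bernoulli_fisher_information` and `crlb` are hypothetical helpers introduced here.

```python
def bernoulli_fisher_information(p: float) -> float:
    """E[(d/dp ln f(X; p))^2] for f(x; p) = p^x (1 - p)^(1 - x), x in {0, 1}."""
    total = 0.0
    for x in (0, 1):
        pmf = p if x == 1 else 1.0 - p
        # Score: derivative of ln f(x; p) with respect to p.
        score = x / p - (1 - x) / (1.0 - p)
        total += score ** 2 * pmf
    return total


def crlb(tau_prime: float, info_per_observation: float, n: int) -> float:
    """[tau'(theta)]^2 / (n * E[(d/dtheta ln f)^2])."""
    return tau_prime ** 2 / (n * info_per_observation)


# Assumed illustration values: p = 0.3, n = 25, tau(p) = p so tau'(p) = 1.
p, n = 0.3, 25
bound = crlb(1.0, bernoulli_fisher_information(p), n)
variance_of_sample_mean = p * (1 - p) / n
```

In this assumed example `bound` and `variance_of_sample_mean` agree, so the sample mean attains the bound for the Bernoulli model.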

• Let $S_i = S_i(X_1, \ldots, X_n)$ be statistics and $s_i = S_i(x_1, \ldots, x_n)$ for $i = 1, \ldots, k$. Let $S(x_1, \ldots, x_n)$ be the vector of functions whose $j$th coordinate is $S_j(x_1, \ldots, x_n)$. Then, mathematically, if the conditional pdf of $X$ given $S = s$,

$$f_{X|s}(x_1, x_2, \ldots, x_n) = \begin{cases} \dfrac{f(x_1, x_2, \ldots, x_n; \theta)}{f_S(s; \theta)} & \text{if } S(x_1, \ldots, x_n) = s \\ 0 & \text{otherwise} \end{cases}$$

does not depend on $\theta$, then $S$ is a set of sufficient statistics.

• Theorem 10.2.1 (Factorization Criterion): If $X_1, \ldots, X_n$ is a random sample having joint pdf $f(x_1, \ldots, x_n; \theta)$, and if $S = (S_1, \ldots, S_k)$, then $S$ is jointly sufficient for $\theta$ if and only if

$$f(x_1, \ldots, x_n; \theta) = g(s; \theta)\, h(x_1, \ldots, x_n)$$

where $g(s; \theta)$ does not depend on the observations $x_1, \ldots, x_n$ except through $s$, and $h(x_1, \ldots, x_n)$ does not involve $\theta$.
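As an illustration that is not part of the original handout, consider a random sample from a Bernoulli($p$) distribution (an assumed example). With $s = \sum_{i=1}^{n} x_i$, the joint pdf factors as

$$f(x_1, \ldots, x_n; p) = \prod_{i=1}^{n} p^{x_i}(1 - p)^{1 - x_i} = \underbrace{p^{s}(1 - p)^{n - s}}_{g(s;\, p)} \cdot \underbrace{1}_{h(x_1, \ldots, x_n)}$$

Here $g$ depends on the data only through $s$ and $h$ is free of $p$, so under this assumed model $S = \sum_{i=1}^{n} X_i$ is sufficient for $p$.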

• Theorem 10.3.1: If $S_1, \ldots, S_k$ are jointly sufficient for $\theta$ and if $\hat{\theta}$ is a unique MLE of $\theta$, then $\hat{\theta}$ is a function of $S = (S_1, \ldots, S_k)$.

• A density function is a member of the regular exponential class (REC) if it can be expressed in the form

$$f(x; \theta) = c(\theta)\, h(x) \exp\left[\sum_{j=1}^{k} q_j(\theta)\, t_j(x)\right] \quad \text{for } x \in A \text{ (support)}$$

and is 0 otherwise, where $\theta = (\theta_1, \ldots, \theta_k)$ is a vector of unknown parameters, the parameter space $\Omega$ has the form

$$\Omega = \{\theta \mid a_i < \theta_i < b_i,\ i = 1, \ldots, k\}$$

(Note: $a_i = -\infty$ and $b_i = \infty$ are allowable values.)

and regularity conditions are satisfied.
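To see the form in a concrete case, take the Bernoulli($p$) pdf as an assumed example (it is not discussed in the handout). For $x \in A = \{0, 1\}$ and $0 < p < 1$,

$$f(x; p) = p^{x}(1 - p)^{1 - x} = (1 - p)\exp\left[x \ln\frac{p}{1 - p}\right]$$

which matches the REC form with $k = 1$, $c(p) = 1 - p$, $h(x) = 1$, $q_1(p) = \ln\frac{p}{1 - p}$, and $t_1(x) = x$. The regularity conditions are not checked here.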

• Theorem 10.4.2: If $X_1, \ldots, X_n$ is a random sample from a member of the REC($q_1, \ldots, q_k$), then the statistics

$$S_1 = \sum_{i=1}^{n} t_1(X_i), \quad S_2 = \sum_{i=1}^{n} t_2(X_i), \quad \ldots, \quad S_k = \sum_{i=1}^{n} t_k(X_i)$$

are a minimal set of complete sufficient statistics for $\theta_1, \ldots, \theta_k$.
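Computing the statistics in this theorem amounts to summing each $t_j$ over the sample. The sketch below is a minimal illustration, not part of the handout: `rec_statistics` is a hypothetical helper, and the choice $t_1(x) = x$, $t_2(x) = x^2$ assumes a normal model with both mean and variance unknown, which has that REC representation.

```python
from typing import Callable, List, Sequence


def rec_statistics(
    sample: Sequence[float],
    t_functions: Sequence[Callable[[float], float]],
) -> List[float]:
    """Return [S_1, ..., S_k], where S_j is the sum of t_j(x_i) over the sample."""
    return [sum(t(x) for x in sample) for t in t_functions]


# Assumed model: normal with unknown mean and variance,
# for which t_1(x) = x and t_2(x) = x^2.
normal_t_functions = [lambda x: x, lambda x: x * x]

sample = [1.0, 2.0, 4.0]  # made-up observations for illustration
s1, s2 = rec_statistics(sample, normal_t_functions)
```

For this made-up sample, `s1` is the sum of the observations and `s2` is the sum of their squares.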