January 2009 Probability / Math Stat Comprehensive Exam (100 Points)


1. Suppose P (A) = P (B) = 1/3 and P (A ∩ B) = 1/10. Find:

(a) (2pt) P (A ∪ B)

(b) (3pt) P (B|A)

(c) (3pt) P (A ∪ B′)

2. X is a continuous random variable with pdf

$$f(x) = \begin{cases} 3/x^4 & \text{if } 1 < x < \infty \\ 0 & \text{otherwise} \end{cases}$$

(a) (4pt) Find E(X).

(b) (4pt) Find the CDF F (x). Be sure to define F (x) for all real numbers.

3. (9pt) The probability that a firecracker successfully explodes when ignited is 0.9. Consider the following

random variables (X, Y, and Z).

• Let X be the number of firecrackers tested when the first firecracker tested successfully explodes.

• Let Y be the number of firecrackers tested when the third firecracker tested successfully explodes.

• Let Z be the number of firecrackers that successfully explode in a random sample of 10 firecrackers.

State the distributions of X, Y , and Z. Be sure to specify the associated parameter values.

4. (4pt) X and Y are random variables with joint pdf

$$f(x, y) = \begin{cases} 8xy & \text{for } 0 < x < y < 1 \\ 0 & \text{otherwise} \end{cases}$$

(a) (2pt) Sketch and shade in the region of support for the joint pdf.

(b) (2pt) Find E(XY ).

(c) (2pt) Find fX (x), the marginal pdf for X.

(d) (2pt) Find fY |X (y|x), the conditional pdf for Y |X.

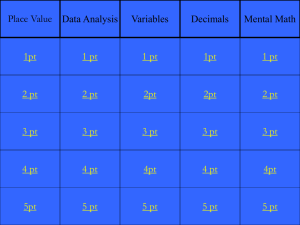

5. Two discrete random variables X and Y have joint pdf defined by the following table:

| | Y = 1 | Y = 2 |
|---|---|---|
| X = 1 | .20 | .05 |
| X = 2 | .60 | .15 |

(a) (2pt) What is the marginal pdf fY (y)?

(b) (2pt) What is the conditional pdf f (Y |X = 1)?

(c) (2pt) Are X and Y independent? Justify your answer.

6. (6pt) Find the pdf of $Y = X^4$ if X is a random variable with pdf

$f_X(x) = 4x^3$ for 0 < x < 1

(and is 0 otherwise).

7. Suppose X1 ∼ EXP(4), X2 ∼ GAMMA(4, 4), and X1 and X2 are independent.

(a) (3pt) What is the moment generating function of Y = X1 + X2?

(b) (3pt) Identify the distribution of Y.

8. Let X1 , X2 , . . . , Xn be a random sample from a Weibull WEI(θ, 1/2) distribution.

(a) (4pt) Find the method of moments estimator (MME) of θ.

(b) (5pt) Verify the maximum likelihood estimator (MLE) of θ is

$$\hat{\theta} = \left( \frac{\sum_{i=1}^{n} \sqrt{x_i}}{n} \right)^2.$$

(You do not need to verify that it is a maximum with a second derivative test.)

(c) (2pt) What is the MLE of $\sqrt{\theta}$?

9. Let X1 , X2 , . . . , Xn be a random sample from a geometric GEO(p) distribution.

(a) (5pt) Determine the Cramer-Rao lower bound (CRLB) for $\bar{X}$, which is an unbiased estimator of $\tau(p) = 1/p$. Hint:

$$\frac{\partial}{\partial p} \ln f(x; p) = \frac{x - \frac{1}{p}}{p - 1}.$$

(b) (3pt) Verify that $\bar{X}$ is a UMVUE of $1/p$ using the CRLB in (a).

10. Let X1 , . . . , X25 be a random sample from an EXP(2) distribution.

(a) (5pt) In this example we could use the Central Limit Theorem to approximate the distribution of $\bar{X}$. What is the approximate distribution of $\bar{X}$ based on the Central Limit Theorem? Be sure to state the values of the associated parameters.

(b) (4pt) Suppose X1 , . . . , X25 is a random sample from an EXP(2) distribution. Approximate the probability that $\bar{X} \geq 2.5$.

11. (8pt) Suppose X and Y are independent EXP(1) random variables. Find the joint pdf of S = X + Y

and T = X.

12. (6pt) Find a complete sufficient statistic if we have a random sample X1 , X2 , . . . , Xn from a distribution with pdf

$$f(x; \theta) = \frac{(\ln \theta)\,\theta^{x}}{\theta - 1} \quad \text{for } 0 < x < 1 \text{ and } \theta > 1.$$

13. (7pt) Let X1 , X2 , X3 , X4 , X5 be a random sample of size n = 5 from a Gamma(2, 1/θ) pdf. It can be shown that $2\theta X_i \sim \chi^2(4)$. Determine an equal-tailed 90% interval estimator for θ based on

$$Y = \sum_{i=1}^{5} 2\theta X_i.$$

Probability / Math Stat Handout to Accompany Exam

• If T is an unbiased estimator of τ(θ), then the Cramer-Rao lower bound (CRLB) (given a random sample) is

$$\mathrm{Var}(T) \geq \frac{[\tau'(\theta)]^2}{n\,E\left[ \left( \frac{\partial}{\partial\theta} \ln f(X; \theta) \right)^2 \right]}$$

assuming (i) the derivatives exist and (ii) they can be passed into the integral (continuous X) or summation (discrete X) when calculating expectations.
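As an illustration of how the bound above is evaluated, the following minimal sketch computes it for a Bernoulli(p) sample with T equal to the sample mean and τ(p) = p. The Bernoulli model, the values p = 3/10 and n = 20, and the helper names `fisher_information_bernoulli` and `crlb` are assumptions chosen for this example; they do not come from the exam.

```python
from fractions import Fraction


def fisher_information_bernoulli(p):
    """E[(d/dp ln f(X; p))^2] for one Bernoulli(p) observation (hypothetical helper).

    The expectation is a two-term sum because X takes only the values 0 and 1.
    """
    info = Fraction(0)
    for x in (0, 1):
        prob = p if x == 1 else 1 - p  # f(x; p) = p^x (1 - p)^(1 - x)
        score = Fraction(x) / p - Fraction(1 - x) / (1 - p)  # d/dp ln f(x; p)
        info += prob * score ** 2
    return info


def crlb(tau_prime, n, info):
    """Right-hand side of the bound: [tau'(theta)]^2 / (n * E[score^2])."""
    return tau_prime ** 2 / (n * info)


# Assumed illustrative values (not taken from the exam).
p = Fraction(3, 10)
n = 20

# tau(p) = p, so tau'(p) = 1.
bound = crlb(Fraction(1), n, fisher_information_bernoulli(p))
var_xbar = p * (1 - p) / n  # exact variance of the sample mean

print(bound, var_xbar)
```

In this assumed setting the variance of the sample mean equals the bound exactly, which is the kind of comparison used when verifying that an unbiased estimator attains the CRLB.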

• Let statistic Si = Si (X1 , . . . , Xn ) and si = Si (x1 , . . . , xn ) for i = 1, . . . , k. Let S(x1 , . . . , xn ) be the vector of functions whose jth coordinate is Sj (x1 , . . . , xn ). Then, mathematically, if the conditional pdf of X given S = s,

$$f_{X|s}(x_1, x_2, \ldots, x_n) = \begin{cases} \dfrac{f(x_1, x_2, \ldots, x_n; \theta)}{f_S(s; \theta)} & \text{if } S(x_1, \ldots, x_n) = s \\ 0 & \text{otherwise} \end{cases}$$

does not depend on θ, then S is a set of sufficient statistics.

• Theorem 10.2.1 (Factorization Criterion): If X1 , . . . , Xn is a random sample having

joint pdf f (x1 , . . . , xn ; θ), and if S = (S1 , . . . , Sk ), then S is jointly sufficient for θ if and only

if

f (x1 , . . . , xn ; θ) = g(s; θ) h(x1 , . . . , xn )

where g(s; θ) does not depend on observations x1 , . . . , xn except through s and h(x1 , . . . , xn )

does not involve θ.
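A short worked instance of the factorization criterion may help. It assumes a Bernoulli(p) random sample, a model picked only for illustration and not taken from the exam, with s denoting the sum of the observations.

```latex
% Assumed model: X_1, ..., X_n iid Bernoulli(p), with s = \sum_{i=1}^{n} x_i.
f(x_1, \ldots, x_n; p)
  = \prod_{i=1}^{n} p^{x_i} (1 - p)^{1 - x_i}
  = \underbrace{p^{s} (1 - p)^{n - s}}_{g(s;\, p)}
    \cdot \underbrace{1}_{h(x_1, \ldots, x_n)}
```

Here g depends on the observations only through s, and h does not involve p, so under this assumed model S, the sum of the Xi, is sufficient for p.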

• Theorem 10.3.1: If S1 , . . . , Sk are jointly sufficient for θ and if $\hat{\theta}$ is a unique MLE of θ, then $\hat{\theta}$ is a function of S = (S1 , . . . , Sk ).

• A density function is a member of the regular exponential class (REC) if it can be expressed in the form

$$f(x; \theta) = c(\theta)\, h(x) \exp\left[ \sum_{j=1}^{k} q_j(\theta)\, t_j(x) \right] \quad \text{for } x \in A \text{ (support)}$$

and is 0 otherwise, where θ = (θ1 , . . . , θk ) is a vector of unknown parameters and the parameter space Ω has the form

Ω = {θ | ai < θi < bi , i = 1, . . . , k}

(Note: ai = −∞ and bi = ∞ are allowable values.)

and regularity conditions are satisfied.

• Theorem 10.4.2: If X1 , . . . , Xn is a random sample from a member of the REC(q1 , . . . , qk ), then the statistics

$$S_1 = \sum_{i=1}^{n} t_1(X_i), \quad S_2 = \sum_{i=1}^{n} t_2(X_i), \quad \ldots, \quad S_k = \sum_{i=1}^{n} t_k(X_i)$$

are a minimal set of complete sufficient statistics for θ1 , . . . , θk .
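To show how the REC form and Theorem 10.4.2 fit together, here is a sketch for a Poisson(μ) sample. The Poisson model is an assumption made for illustration and is not one of the exam problems.

```latex
% Assumed model: X ~ Poisson(\mu), with 0 < \mu < \infty and x = 0, 1, 2, \ldots
f(x; \mu) = \frac{e^{-\mu} \mu^{x}}{x!}
          = \underbrace{e^{-\mu}}_{c(\mu)}
            \cdot \underbrace{\frac{1}{x!}}_{h(x)}
            \cdot \exp\bigl[\, \underbrace{\ln \mu}_{q_1(\mu)} \cdot \underbrace{x}_{t_1(x)} \,\bigr]
% Here k = 1 and \Omega = \{ \mu \mid 0 < \mu < \infty \}, so by Theorem 10.4.2
S_1 = \sum_{i=1}^{n} t_1(X_i) = \sum_{i=1}^{n} X_i
```

Under this assumed model, the sum of the observations is a complete sufficient statistic for μ.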