GAO INFORMATION TECHNOLOGY DHS Needs to

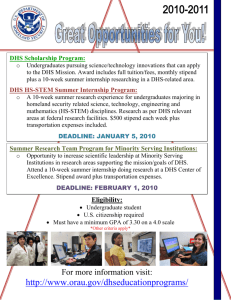
