HOMELAND SECURITY ACQUISITIONS Major Program

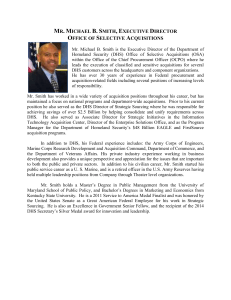

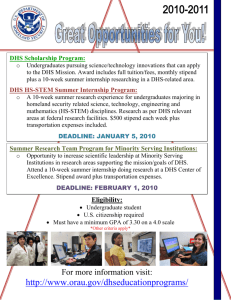
