GAO SECURE BORDER INITIATIVE DHS Needs to

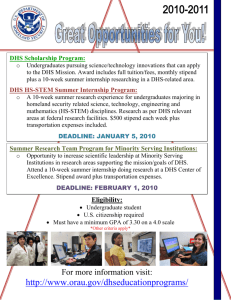
