CARESS Working Paper #98-01

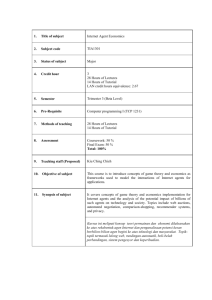
