CASPR Research Report 2006-02 USING TEXT ANALYSIS SOFTWARE IN SCHIZOPHRENIA RESEARCH

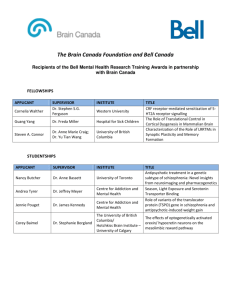
