Document 11162702

advertisement

UXT LIBRARIES - OEWEY

Digitized by the Internet Archive

in

2011 with funding from

Boston Library Consortium

Member

Libraries

http://www.archive.org/details/misclassificatioOOhaus

HB31

.M415

Dewey

@

no.

qq.

^

working paper

department

of economics

MISCLASSIFICATION OF A DEPENDENT VARIABLE

IN A DISCRETE RESPONSE SETTING

Jerry A. Hausman

Fiona M. Scott Morton

massachusetts

institute of

technology

50 memorial drive

Cambridge, mass. 02139

MISCLASSIFICATION OF A DEPENDENT VARIABLE

IN A DISCRETE RESPONSE SETTING

Jerry A. Hausman

Fiona M. Scott Morton

94-19

June 1994

M.I.T.

JUL

LIBRARIES

1 2 1994

RECEIVED

First Draft

December 1993

Misclassification of a

in a Discrete

Dependent Variable

Response Setting

Hausman'

Fiona M. Scott Morton

Jerry A.

and NBER

June 1994

MIT

We

would like to thank Y. Ait-Sahalia, G. Chamberlain, S. Cosslett, Z. Griliches, B. Honore,

W. Newey, P. Phillips, T. Stoker, and L. Ubeda Rives, for helpful comments. Thanks to Jason

Abrevaya for outstanding research assistance. Hausman thanks the NSF for research support.

Address for correspondence: E52-271A, Department of Economics, MIT, Cambridge, MA, 02139.

'

Introduction

A

dependent variable which

inconsistent in a probit or logit

we mean

variable

happen

a discrete response causes the estimated coefficients to be

is

model when misclassification

that the response is reported or recorded in the

recorded as a one when

is

it

present.

By

'misciassification'

wrong category;

should have the value zero.

for example, a

This mistake might easily

an interview setting where the respondent misunderstands the question or the

in

interviewer simply checks the wrong box.

measurement

error,

dependent variable

likelihood function

We

in the data.

misclassification

and

Other data sources where the researcher suspects

such as historical data, certainly exist as well.

is

we

We

show

that

when a

misclassified in a probit or logit setting, the resulting coefficients are

However,

biased and inconsistent.

distribution

is

the researcher can correct the

problem by employing the

derive below, and can explicidy estimate the extent of misclassification

also discuss a semi-parametric

method of estimating both the probability of

and the unknown slope coefficients which does not depend on an assumed error

is

also robust to misclassification of the dependent variable.

Each of these

departures from the usual qualitative response model specifications creates inconsistent estimates.

We apply our methodology to a commonly used data set,

the Current Population Survey,

where we consider the probability of individuals changing jobs. This type of question

known

for

its

potential misclassification.

is

well-

Both our parametric and semiparametric estimates

demonstrate conclusively that significant misclassification exists

the probability of misclassification

is

not the

in the

sample.

same across observed response

Furthermore,

classes.

A much

higher probability exists for misclassification of reported individual job changes than the

probability of misclassification of individuals

I.

Qualitative Response

We

we

present

Let

y

.

Model with

who

are reported not to have changed jobs.

Mlsclassiflcation

use the usual latent variable specification of the qualitative choice model; for the

consider the binomial response model,

c.f.

Greene (1990) or MacFadden (1984).

be the latent variable:

y."

=

X,/3

+

e,

(1)

The observed

data correspond

yi=l

ifX,/3

+ ei>0

=

if Xi/8

+

Yi

For now,

x be

let

and constant

the assumption that

where $(.)

is

6;

<

Assume

The model

sample for both types of responses.

that

t

is

distributed as N(0,1), the usual probit assumption:

=

T.pr(y,*>0)

=

x.*(Xi^)

+

+

=

1-T

+

independent of

specification follows

is

e,

X

from

(l-x).pr(y;<0)

(l-x).(l-'i'(Xi^))

the standard normal cumulative function.

E(yi|X)

(2)

the probability of correct classification.

in the

pr(yi=l!X)

to:

We

(3)

then calculate the expectation of

y/.

(2t-1)-*(X,/3)

This equation can be estimated consistently in the form,

=a

y,

where

q;

+

(l-2a).<i.(X,^) +7,,

= 1-t.

Alternatively,

we

could approach the problem using the other response,

pr(yi=0|X)

= T.pr(y;<0) +

(l-T).pr(yi->0)

=

T-(2x-l).4.(X./3)

=

(l-a)-(l-2a)-$(Xi/3)

In terms of a:

Note

(4)

that as

above we

find:

(5)

= l-{x +

E(yi|X)

If

(1-2t).<|.(X,^)}

one estimates a probit specification as

misclassification

if

(6)

were no measurement error when

there

present, the estimates will be biased and inconsistent.

is

contrast to a linear regression

where

classical

measurement error

in the

in fact

This result

is in

dependent variable leaves

the coefficient estimates consistent, but leads to reduced precision in coefficient estimates.

The

log likelihood for the probit specification with misclassification

L =

2i

a=0

In the case of

+

{yi-ln[a+(l-2a)-4»(Xi^)]

there

is

no

(1981) shows that

if

a=0

classification error

this log likelihood is

concave functions. Unfortunately, the

logit or probit functional forms.

result

as follows:

(l-yi)-ln[(l-a) + (2a-l)-#(X/?)]}

(7)

and the log likelihood will collapse

In order for the equation to be estimated,

usual case.

is

a must be

less than

one

to the

half.^

Pratt

everywhere concave, being the sum of two

does not hold for our log likelihood for either the

The appendix gives

details of the conditions for concavity.

also demonstrate in the appendix that the expected Fisher information matrix

is

We

not block

diagonal in the parameters (a,/3).^

Notice that the linear probability model does not allow separate identification of the

and x.

coefficients

pr(yi

= l|X)

Instead the estimated coefficients are linear combinations of

=

x.pr(y,->0)

= x-X^ +

^

+

x and

/3.

(l-x).pr(y;<0)

(8)

(l-x).(l-Xi/3)

In the case of Qf> .5, the data are so misleading that the researcher might

want

to

abandon the

project.

^

Thus, previous papers which assume they know

Summers (1993)

assumed a

is

inconsistent.

suffer

correct.

In certain special cases of multiple interviews,

identification of misclassification probabilities

However, generally they must make

identification.

a from exogenous

sources, e.g., Poterba and

from two defects. Their estimates are likely to be inconsistent unless their

But even with a correct a the standard errors of their coefficient estimates are

is

Chua and

Fuller (1987) demonstrate that

possible, given a sufficient

special assumptions

number of

interviews.

on the form of misclassification

to achieve

=

ECyJX)

[1-T

+

(2ir-l)./3o]

+

(2t-1).(X,^,)

This example show that identification of the true coefficients in probit and logit comes from their

To

non-linearity.

results

address this concern,

do not depend on

An

we

shall introduce a

distribution assumptions.

interesting feature of the misclassification

even for a small amount of misclassification.

below.

The

first

term

is

semiparametric approach so other

The

problem

is that

usual probit

first

observations where y =

summed over

inconsistency can be large

1

,

order condition

written

is

the second for those

where

y=0,

y.^t^

where

^,

=

and similarly for

^(Xj/J),

misclassification is as follows.

the likelihood

misclassified).

-

When

j.

(.-„.E^

The

=

(9)

intuitive reasoning for the inconsistency

misclassified observations are present, they are

due

added

into

and the score through the incorrect term (because the dependent variable

The

to

large inconsistency in the estimated parameters arises if probit (or logit)

is

is

used because misclassified observations will predict the opposite result from that actually

observed.

For example, an observation with a large index value

probability 4»(Xj/3) close to one.

value will be a zero.

However,

The observation

will

if that

observation

be included

in the

is

will predict a

misclassified,

second term

its

one with

observed y

equation (9); a

in

^(XJ3) close to one will cause the denominator to approach zero, so the whole term will

approach

The same problem

infinity.

Therefore, the

sum of

and the estimated

/3's

first

exists for observations with very

order conditions in the case of misclassification can

can be strongly inconsistent as a

result.

It is

somewhat

inconsistency will increase as the model gets "better" because there will be

are misclassified and therefore

Another way

that

maximum

*

low index values.

more

become

large,

ironic that the

more good

fits that

large terms in the log likelihood.'*

to think about the inconsistency caused

by misclassification

is to

use the fact

likelihood sets the score equal to zero under the situation of no misclassification.

See Table lb for a simulated demonstration of

5

this property.

Taking expectations and using the probabilities *; and 1-$;

in the case

However,

causes the denominators to cancel and the expectation to equal zero.

misclassification, the probabilities change.

y=l and

The "ones term"

[(l-a)'l'+a(l-*)]

-^

l-4»

-

$

misclassification

to the usual probit case.

is

If

present,

a

?i

a =

in the case

of

used for observations that have

are not misclassified and also for observations that have

^

When no

is

of the normal probit

y=0

and are misclassified:

[(l-a)(l-$)+a*]

=0

(10)

and the expectation of the equation above collapses

0, then the expectation

of the score

is,

in general, not equal

to zero.

We

are particularly interested in the inconsistency in probit or logit coefficients

only small amounts of misclassification are present, since this might be the most

We

facing a researcher.

when

common

case

can use the modified score above to evaluate the change in the

estimated coefficients with respect to the extent of misclassification at zero misclassification.

The

derivation

full

is in

the appendix.

In the case of the probit, the derivative will equal:

d&

Again, notice that

(f)(Xfi)

goes

to

in the case

zero

also.

1

where an observation has ^(Xfi) close

Therefore,

considerable inconsistency to the estimated

estimated

A

/3's

when

- 2^{Xfi)

misclassification

is

to either zero or one, then

even a single observation can theoretically add

jS's.

In general, the

amount of inconsistency

present will depend on the distribution of X's.^

Table

reports the value of this derivative for the job change dataset used later in this paper.

crucial feature of misclassification

the table

'

shows

that the bias in

is

/3

immediately apparent. The

"maximum

derivative"

in the

The

row of

caused by a specific misclassified observation can be very

Distributions such as the uniform lead the average derivative to be relatively small, whereas a

distribution with

unbounded support

will cause

it

to

be

larger.

Averaged across observations, the change

large.

in

is

/3

not nearly so large, indicating that

particular observations contribute heavily to the inconsistency of the estimated

jS.

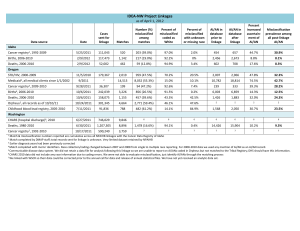

Table A:

Derivative of estimated

jS

with respect to the fraction of misclassification in the

dataset, evaluated at zero misclassification using

Job change dataset from the CPS.*

N=5221

Married

Grade

Age

Union

Earnings

West

Average

519

65.5

19.5

535

0.961

839

179,666

27,640

7,486

179,666

360

359,332

-3.60

-0.200

-0.136

-3.60

-1.70

-3.48

Derivative

Max

Derivative

Min

Derivative

n. Simulation

In order to assess the empirical importance of misclassifications,

using

random number

The

generators.

lognormal distribution, the second, Xj,

third probability,

y' and

y;

and X3

is

is

hand side variable, X,,

first right

a

dummy

distributed uniformly,

we

create a sample

is

drawn from a

variable that takes the value one with a one

e is

drawn from a normal

(0,1) distribution.

are defined to be:

y;

=

yi=

/3o

+

1

X,^,

if

+

y;

X,fi,

+

X3J83

+

y;

called

=

€i

X;0

+

(12)

6;

>

otherwise

Next we create a misclassified version of

a = l-T

*

)

is

misclassified

The dummy

variables

compute the derivative.

on a random

were rescaled

y;.

A

proportion

a of

the sample (recall that

basis.

to equal

1

and 2 and the regressions rerun

in

order to

Table

I

reports the

the first

if

We

results.

For comparison, the

coefficients 146 times.

estimated

Monte Carlo

create the sample and estimate the

column reports

first

the parameters that

5%

misclassification; the second

row has 20%.

amount of

misclassification, ordinary probit can

problem

is

intensified as the

likely to

be a more severe problem when the model

function

we

amount of

propose does very well

Even

produce very inconsistent coefficients.

As discussed above,

misclassification grows.

fits

well, as

it

at estimating the "true" coefficients

assuming a

the case

is

random sample generation

already known.

where a

is

estimated.

Again,

MLE

However,

if

only.

includes a

It

The

does here.

The

this is

likelihood

The variance

and a.

column

in

of a small

in the case

of the estimates rises as the extent of misclassification in the sample increases.

estimates from one

would be

The sample design used

ordinary probit were run on these misclassified data.

row has

MLE

Table

11

reports

that estimates the

/3

a

does well, and standard errors are lower than in

is

not truly known, but treated as

if it is

known,

e.g. Poterba

and Summers (1993), these estimated standard errors will be downward biased.

In the fourth

column are

as estimates of

/3.

the

MLE estimates

of the misclassification error parameter, a, as well

Notice again that the estimates of

/3

are closer to the true parameters than the

estimates obtained without correcting for likely misclassification error (column 2).

As previously

noted, the standard errors in the probit specification using misclassified

data are relatively small.

The researcher depending on

model

the incorrectly estimated

think that the reported coefficients are precisely estimated, although misclassification

a problem in the data.

Although the

MLE

method

be able to estimate very precise coefficients

if

the

that accounts for misclassification

model does not

standard errors that reflect the true imprecision of the estimates.

quite precisely estimated in cases

absolute size of the

We

The

test

jS's,

where the data

fit

is

the

fit

well,

it

will

actually

may

still

Also, the estimates of

model well, as

in

Table

II.

will

not

give

a

are

Not just the

but their ratios vary from the true to the inconsistent case.^

our theoretical model two additional ways.

When

there

is

no misclassification

Xs are drawn from normal

The appendix includes a table which reports

the results of simulations run using normally distributed Xs. Ruud (1983) discusses reasons for this

result. The ratio of the estimated betas differs between probit and MLE when the Xs are distributed

^

ratios of the betas will stay constant if the simulated

distributions, even

when each

individual beta

is

biased.

uniformly or log normally.

8

in the sample, a regression

expected (see equation

to

of

By

4).

yj

on a constant and *(X/3) leads

contrast, the

altered

by nonlinear

somewhat from

the

least squares.

MLE case.

and

same regression with dependent variable

have 10% misclassification error gives coefficients

also be estimated

to the coefficients

We

.1

and

y;

1

as

created

The model can

.8, as predicted.

minimize a quadratic error term which

is

Instead of estimating a, the equation allows the constant

term and the coefficient on ^(X0) to differ from zero and one, respectively:

yi

We

=

+ dr^(X^) +

5o

(13)

Vi

use White standard errors here; they are calculated using the derivative of the nonlinear

The NLS method

function with respect to the parameters in place of the usual regressors, X.

results in the following estimates for 5o, 5,,

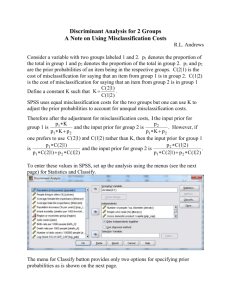

Table B:

One sample

The simulated X's

/3,

Design a =0.1

=

and the

/3's.

generated.

are

drawn from a standard normal

distribution.

1

Probit: correct y

NLS

y misclassified

N=2000

5,

5o

-1.99

(.141)

The

.099

(.059)

(.024)

results here are close to the

5,=(l-25o):

Thus,

test

0.995

NLS

.802

MLE results and

= l-(2-.099) =

/?!

/3o

.804

-2.05

1.03

(.028)

(.623)

(.278)

they satisfy the overidentifying restriction that

.804.

leads to consistent estimates in the presence of misclassification.

of the restriction of independent misclassification error

made

In a situation requiring misclassification treatment,

It

also permits a

in the basic specification.

the researcher

may

suspect the

probabilities of misclassification

from one category

to another are not

the basic model, modified to allow for this difference.

symmetric*

Below

is

Simulation results follow.

To = probability of correct classification of O's.

Let

T,

We can

=

probability of correct classification of I's.

then evaluate the probability of observing given responses under different probabilities

of misclassification:

pr(yi

= l|X)

pr(y;=0 X)

1

The

ir,.pr(y;>0)

=

(l-7ro)

=

To

-

+

(T,

this

hold,

To

-

1)

•

(14)

l)-#(X/3)

*(X/3).

is:

equation to be identified, Tj

it is

+

To must be greater than one.

likely that the data are sufficiently "noisy" that

practice, the condition should not

'

(l-TQ).pr(y;<0)

+ To-

(T,

+

+

log likelihood function for the specification which allows for different probabilities of

misclassification

For

=

be a

If the condition

model estimation

is

does not

not warranted; in

restrictive requirement.

This problem displays a loose relationship to the switching regression problem, e.g. Porter and

Lee (1984).

regime

However, the switching regression framework uses the

hand side variable.

shift as a right

10

discrete variable to represent a

One Sample Generated

Table C:

The simulated X's

^,

=

are

drawn from a standard normal

1

Design

Probit:

T'S

correct y

^0

00

^i

:

using

^0

/3.

MLE:

solving for Tq and x,

design x's

To

iS.

T,

/So

|8i

-2.11 1.03

-.770 .373

-2.18

1.02

.978

.975

(.107)(.044)

(.044)(.011)

(.155)

(.064)

(.007)(.004)(.145)(.061)

MLE

However, note

specification

It is

that

we

can no longer

when we allow

overidentifying restriction of the misclassification

for different probabilities of misclassification.

where he or she suspects

that

correlated with the yi*'s in a continuous fashion:

is

+

=

x(y-).pr(y.->0)

=

x(X,/3,)-<^(X2A)

=

Suppose

.969

(l-x(y-)).pr(y;<0)

+

(15)

(l-x(X,^.)).(l-<i.(X3A))

= E[l-x(X,A)|X,] + E[(2iriX,fi,yi)'^(XM\X,]

E(y;|X)

logit

l-x(X,ii3,)

E(y.|X)

=

X

1-A(X,5)

condition on the X's,

we do

+

(2x(X,^,)-l)-*(X2i/32)

were the monotonic function ranging from

the expected value of y; given

to estimate;

test the

also likely that a researcher could encounter a situation

pr(y.= l|X)

.970 -1.98

able to estimate the parameters both accurately and quite precisely.

is

the probability of misclassification

we

MLE

Probit: y

misclassified

.950

Thus, again

If

distribution.

all

to

1

that

determined x. Then

could be written,

+

(2A(X,5)-l)'4>(Xi)3)

the previous results hold.

not provide simulations here.

11

(16)

This case

is

much more complicated

Logit case

The

logit functional

form can also be analyzed

error in the dependent variable.

First,

we

in the case

of suspected measurement

calculate the probabilities of the observed data given

misclassification:

pr(yi=l|X)

pr(y-0|X)

+

=

T.pr(y,'>0)

=

T.[exp(X^)/(H-exp(X/8))]

= x-pr(y;<0) +

=

T-

(1-T).pr(y;<0)

+

(17)

(l-x).[l/(l+exp(X^))]

(1-T).pr(y;>0)

[1/(1 +exp(X/3))]

+

(1-x)

We then calculate the expectation of the left hand

•

[exp(X^)/(l +exp(X^))]

side variable conditional

on the X's and given

the probabilities of misclassification:

=

E(yi|X)

=

[(1-T)

[a

+

+

T.exp(X/3)]/[l+exp(X/3)]

(l-a)-exp(X/3)]/[l+exp(X/3)]

We now demonstrate that the case of misclassification leads

to a

case of endogenous sampling, e.g., the papers in Manski and

very different outcome than the

MacFadden

of endogenous sampling the slope coefficients are estimated consistently by

constant

is

incorrectly estimated.

(1981).

In the case

MLB logit;

only the

Thus, estimation refinements are concerned with increasing

asymptotic efficiency. Here, in the situation of misclassification,

estimates of both the slope coefficients and the constant term.

MLE logit leads to inconsistent

This result follows easily from

a comparison of the (Berkson) log odds form of the logit in the case of measurement error and

endogenous sampling. Without misclassification we have:

+

X)

=

exp(x,/3)/(exp(X<^

pr(yi=0|X)

=

exp(X,^)/(exp(X(^)+exp(X^)).

pr(yi=

1

1

Thus, the expectation of the log odds

exp(X,/S))

is:

12

(18)

EOn(s,/So))

=

Enn(exp(X,/3)/exp(X<;3))]

With endogenous sampling where we redefine X

+

to

=

(X,

=

Xexp(X,/3)/(exp(X<^)

pr(yi=OjX)

=

(l-X).exp(X<^)/(expCM)

E(ln(s,/So))

=

ln(Xexp(X,^)[(l-X) • exp(X<y3)]-')

=

(ln(X/(l-X))

Thus, only the constant term

is

+

/3o)

-

Xo)^.

be the weighting constant we

pr(yi= 1 X)

1

-

find:

exp(X,^))

+

(19)

exp(X,^))

(XrXo)^,

inconsistent with

(20)

endogenous sampling. Using the expressions

derived above for the case of misclassification, where as before

t

is

the probability of correct

classification:

Ean(s,/So))

If

we

=

+

In [(x

(l-T).exppC,^))/(l-T

express the problem in a log odds form,

it is

+ r'txp(XMl

(21)

easier to see that the misclassification will

induce inconsistency in both the constant and the slope coefficient estimates.

of misclassification

feature

inconsistency.

is

The dependent

that

it

prevents one observation

variable for any one observation can

small as the proportion of ones approaches one or zero.

a terms prevent

When

An

interesting

from causing unbounded

become

arbitrarily large or

misclassification

is

present, the

the limit from reaching infinity.

Simulation results analogous to those using a probit functional form appear in Tables in

and IV.

Similar results hold

responses.

Furthermore,

the case of three or

if

more

if

we

the probabilities of misclassification are not equal across both

use the log odds specification,

we

categories of qualitative responses

probabilities differ both across response category

holds a simple treatment of this topic.

13

can extend our approach to

where the misclassification

and within response categories. The appendix

m.

Semiparametric Analysis

The assumption of normally

probit (logit) specifications

distributed (or extreme value) disturbances required

not necessary to solve for the

is

amount of

by the

One

misclassification.

can use semiparametric methods which estimate the extent of misclassification in the dependent

We

variable and estimate the slope coefficients consistentiy.

technique here.

The

kernel regression uses an Epinechnakov kernel, as

The window width,

efficiency properties.'

weighted average, operates;

we

h*

One could

employ a kernel regression

also determine h*

h, defines the intervals

over which the kernel, or

use Silverman's rule-of-thumb method as a

=

1.06ffn

has desirable

it

first

approximation:

•1/5

by cross-validation, a computationally intensive procedure which

minimizes the integrated square error over

h.

However, past experience leads us

to expect

very

similar results.

Our

kernel regression

kernel one-dimensional.

is

of the simplest form as

We calculate the index

I=X/3, where the

procedure which will be discussed subsequentiy.

range of the index

is

chosen.

The

kernel estimator

with each observation falling in the

window

uses an index function to

it

Then a

is

set

/3's

make

the

are found in a first-stage

of evaluation points covering the

the weighted average of the y's associated

centered at the point of evaluation:

/-/.

/(04e^:(I^)

where

I is

the index, h

is

the

window

Epinechnakov kernel.

The cumulative

dividing the weighted

sum of

'

in the

width, n

is

the

(22)

number of

observations, and K(.)

distribution of the response function (cdf)

y's by the total

number of

points falling in the

is

is

the

formed by

window of

the

Silverman (1986), p. 42. describes how the Epinechnakov kernel is the most "efficient" kernel

sense of minimizing the mean integrated square error if the window width is chosen optimally.

14

kernel.

We plot the cdf using

the probit coefficients

from above

to construct the index.

We use

simulated data with a misclassification probability, a, of 0.07, which approximately corresponds

to the estimated probability

Figure

la.

Standard

we

find in our empirical

CDF

example below with the CPS

Figure lb.

MM !—

a

o

CDF

data:'°

with Misclassification

.w

•

«

o

o

o

o

«

o

o

6

o

a

o

o

o

^^ooooooooo

o

o

a -0

-0,2

S

fi

10

1

4

index

index

.07 y misclassified: simulated data

true y: simulated data

Above

0.2

are two graphs, one using misclassified data and one using true data.

The method

produces the smooth curves of Figures la and lb, where the misclassification problem shows

up

clearly.

For example, a .07 misclassification

rate

would cause the cdf of the dependent

variable to begin at .07 and asymptote at a value of only .93.

there

is

always a seven percent chance

y will be one.

in the

cdf s. Note

increases.

'°

A

that the observation will

No

matter

how

small

X^

is,

be misclassified and observed

kernel regression performed on simulated data, demonstrates the difference

that in Figure la the cdf

However,

The simulated

in

goes between the values of 0.0 and 1.0 as the index

Figure lb the beginning value

is

data consist of 2,000 observations of the

es.

15

about .07 while the cdf asymptotes to

sum of normally

distributed X's and

about .93.

Using the cdf

in

this

manner allows

for identification of the probability of

misclassification using semiparametric or nonparametric techniques so long as the probability

of misclassification

is

independent of the X's.

We experienced

problem of how

two major problems with the kernel regression. The

to handle the tails

critical to the discussion

regression as far out into the

the

if

is

as possible because the

tails

window width becomes very

obscured

Yet,

low.

the classic

In this case, the behavior of the tails is

of the distribution.

of misclassification.

first is

it

misleading to continue the kernel

number of observations

falling within

In fact, the asymptotic behavior of the cdf

estimation continues to the extreme points.

In Figure lb

above

we

becomes

use the points

one bandwidth from the extreme index values as truncation points for the regression.

Other

solutions include variable kernel and nearest neighbor methods.

We

use the nearest neighbor algorithm as an alternative method to investigate the

behavior of the cdf in the

tails.

The

nearest neighbor

closest points to the evaluation point.

become

further

away

use

k=100 and

tail

exhibit similar

considered above.

is

As observations begin

rather than fewer in

methods have on our particular

method

number.

We

looked

a weighted average of the k

to thin out, nearest

at the effect nearest

neighbors

neighbor

problem using several different k values. The graphs below

tail

behavior as the truncated kernel regressions which

Again, the probit coefficients are used to construct the index.

16

we

Nearest Neighbor Methcxl

Figure 2a:

Standard

CDF

CDFs

Figure 2b:

Misclassified

CDF

o

*.«^JJUUUPULW.«^.«.»

OooO°°ooooooooo

oO

o

oo

«

o

f,^

a

o°

»

o

•n

a

o

o

o

^

a

o

o

t^

o

o°

-

oooooooOo

o

.

-0.6

o

o

nnnnfinnnPOO

-0.2

0-2

10

6

1

-O.e

is

more

severe:

how

to find the starting jS's.

to use the probit regression coefficients in the construction

we

are clearly

0.6

1.0

l.»

1

a

.07 y misclassified: simulated data

true y: simulated data

problem,

0.2

index

index

The second problem

-O.J

not freeing

the data

of the index.

One method

While

from an assumed error

importantly, while the results will demonstrate that misclassification

is

is

simply

illustrating the

structure.

More

present because of the

behavior of the cdf, the estimates of misclassification will not, in general, be consistent

estimates.

rv.

Application to a Model of Job Change

We now

to

consider an application where misclassification has previously been considered

be a potentially serious problem.

We

estimate a model of the probability of individuals

changing jobs over the past year. Because of the possible confusion of changing positions versus

17

changing employers, respondents

that job

we

well

make a mistake

in their answers.

We

would expect

changers have a higher probability of misclassification than do non-job changers. Thus,

consider models both with the same and different probabilities of misclassification, and

test for equality

the January 1987 Current Population Survey of the Census Bureau.

extracted the approximately 5,000 complete personal records of

25 and 55 whose wages are reported.

respondent's job tenure.

Among

may have

men between

the questions in the survey

This type of question

confuse position with job or

is

well-known for

its

is

the ages of

one asking for the

misclassification; people

Those respondents who give

re-entered the labor force.

tenure as 12 months or fewer are classified as having changed jobs in the last year.

individuals

who answer more

means of the data are

We

in

V

estimate a probit specification on the variable for changing jobs, which

is

of Freeman (1984).

misclassification.

We

t-statistic

Thus,

of about 8.3.

misclassification of the response

Next,

is

allowed

for.

change also becomes much greater

we

maximize the log likelihood

we

estimate the probability to be

we

find quite strong evidence of

is

Likewise, the effect of higher earnings in

in the misclassification specification.

allow for different probabilities of misclassification as in equation (15) since

In the third

the probability of misclassification if the observed response

is

CK,,

For

found to be much higher when possible

non-job changers are less likely to misreport their status.

from

Note

of the probit coefficients also change by substantial amounts.

instance, the effect of unions in deterring job changes

affecting job

We

present the results in Table VI.

for the probability of misclassification

Many

call

same explanatory variables but allowing a,

the probability of misclassification, to be non-zero.

0.058, with an asymptotic

we

quite similar to specifications previous used in the applied labor

literature, c.f. the specification

when we allow

The

below.

function presented initially in equation (7) using the

that

Those

than one year are classified as not having changed jobs.

Table

Jobchange. Our specification

economics

we

of the probabilities.

Our data come from

We

may

column of

no job change,

the probability of misclassification if the observed response

allow the misclassification probabilities to differ across the responses.

is

is

the table, oq,

allowed to differ

a job change. Thus,

we

Freeing the a parameters

produces a markedly different value for a, than in the case where the two are constrained to be

equal,

a,

jumps

to 0.31 while ao

remains

at

0.06 The difference between the two estimates of

18

a

in

1.51,

column 3

which

is

is

.248 and has a standard error of .164, giving

it

an asymptotic

t-statistic

of

not quite significant. However, the effect of both union and earnings in deterring

job change are even greater than previously estimated. Thus, allowing for different probabilities

of misclassification again leads to quite different results than in the original probit specification.

We now

perform kernel regression

misclassification exists in the responses.

the situation clearly.

We

find the

0.6.

These

The graph below

minimum

determine

to

The

if

it

also demonstrates that response

kernel regression results in Figure 3 demonstrate

uses the probit coefficients to create an index function.

probability of job change to be around .06 with an increase up to about

results are extremely close to the

MLE probit results of Table VI

where we estimate

the respective probabilities to be .06 and 0.7 with an asymptotic standard error of 0.17.

Semiparametric estimation of the cdf can reveal the a' s by showing different asymptotes

at

each end of the function. In our case, the lower asymptote

much

less well defined, is in the

50-60%

to

the upper one, though

not totally satisfactory.

is

We cannot achieve

model parameters nor can we estimate the sampling

Thus,

distribution of the misclassification probabilities.

methodology which allows us

6-7% and

However, semiparametric estimation of the

range.

probability of misclassification by visual inspection

consistent estimates of the job change

is

overcome

we now develop

a semi-parametric

shortcomings of our previous approach.

the

Nevertheless, our results up to this point do demonstrate quite convincingly that misclassification

of job changes does exist in the

Figure

f»»^.

>»•.

17

3:

TI.M

n

CPS

data.

Probit Coefficients; Jobchange Data

IMJ

O

r

o

.

in

o

o

rn

O

o

(N

o

O

o

o

o

OOOOq

^oooooooO

o

o -26

-22

-'8

-11

-'0

-06

-0-2

2

V. Consistent Semiparametric Estimates

We now derive a methodology which permits consistent estimates of the misclassification

We first use the Maximum Rank

probabilities and the unknown slope coefficients."

(MRC)

Correlation

Han

estimator described in

This binary response estimator

(1987).

straightforward to calculate even in the instance of multiple explanatory variables and

MRC

variables.

operates on the principle that for observations where the index Xj3

a given estimate of

MRC

)3,

we would

expect that

indicator variable a "one" in cases

Thus, the

MRC

function which

An

is

over

all distinct

advantage of using

required.

> yj)nx]0 >

1(.)

used.

The

MRC

=

Xj0) +

(i,j)

to I, but instead is

problem remains monotonic

in the "correct" order.

may

1(.)

i

=

=

<

is

Ij

=

(1,...,N).

the normality assumption is not

/3's in

when

just such a case; the cdf

Xj/3,

probit

the index in the case of

any

no longer

However,

Thus the

Manski (1985) discusses use of the maximum score estimator when a single misclassification

probability exists for the data.

20

the

so long as the probabilities of

correct classification, Xq and t,, exceed 0.5 each and do not depend on the X's.

"

(23)

otherwise and the

probabilities of misclassification.

in the indicator variable,

y)]

lead to inconsistent results

allow estimation of the

bounded by the

yj)l{x.^

while

is that

monotonic transformation of the index. Misclassification

runs from

constructed by giving an

is

from the sample

instead of probit (or logit)

to

for

The

see equation (14).

1;

<

/(>'..

if (.) is true,

1

pairs of elements

was designed

Xj/3 for

is:

Violation of the distributional assumption

is

(or logit)

MRC

>

x,

Xfi and then a sum

maximized

an indicator function with

1(.) is

summation

E[^(>'.

+

where the dependent variables are also

is

''

S^ (0) =

=

dummy

The necessary monotonicity property

yj.

holds under misclassification so long as Tq

observations are ordered by the index ^

where

>

y;

>

is

/3's

MRC

from

by Han (1987) and asymptotic normality of the

and

MRC

coefficients

Cavanaugh and Sherman (1992). However, we

in

The consistency

estimation are consistent in the case of misclassification.

proved

is

proved by Sherman (1992)

is

need a semiparametric, consistent

still

estimate of the misclassification probabilities, ao and a,.

Misclassification probabilities are given

The

technique gives estimates of those asymptotes.

would be kernel regression on each

we can

construct such a cdf.) However,

of a distribution.

last

tail

Where does

by the asymptotes of the

first

of the cdf.

it is

method

that

not obvious

how

to

the estimated

data

become very

thin

so

tails

and the

observation becomes disproportionately important.

Instead, in order to solve for the

as

semiparametrically,

we

use the technique of isotonic

regression (IR). IR nonparametrically estimates a cdf using ranked index values.

is

|8's

weight observations in the

The

the "tail" begin, for example.

a researcher might choose

we know

(Recall that

the chosen

cdf;

useful for our problem because the final estimate

is in

The technique

the form of a step function; the lowest

and highest "steps" provide consistent estimates of oq and 1-a,. The combination of

MRC

and

IR techniques achieves consistent estimates of both the coefficients on the explanatory variables

and an estimate of the amount of misclassification

in

The

the data.

Isotonic Regression

technique involves the following basic steps.

First,

N"^

we

consistent.

use the coefficient estimates from the

We

want

p

.

MRC

Next, the indices are ordered so that

respect to the index v

is

procedure of Han (1983) which are

v,

estimated

<

Vj

<

The index

/3's.

Vfj.

An

any non-decreasing function defined on the

isotonic regression of y if

of the dependent variable

to estimate the probability distribution

conditional on an index constructed from the

Xj

MRC

is

defined

Vj

=

isotonic function, F, with

N

index values.

F

is

an

minimizes

it

N

Jliy, -^(v,))^

i-l

In addition,

decreasing,

F

so

(v)

F

=

(Vj.,)

if

<

Groeneboom (1993) proves

v

<

F

v,

(Vi).

and

F

Under

(v)

=

this

that the point estimates,

21

1

if

v

>

Vn.

specification for

F

(v)

F(.)

must also be non-

known index

are N"^ consistent for

values

v,

F

€

(v)

(0,1).

We

Han's estimator of v leads

will demonstrate that using

Performing isotonic regression

index values into pools, where each pool

upon the index being

to the index values

V;

distinct index value.

the algorithm

indexed pool. If the guess for the

are left intact; the second pool

first

is

is

just the average of the

initial set

pool

is

less than the

guess for the second pool, the pools

used for the next comparison.

(Vj) is

Otherwise, the pools are

This process

is

Otherwise, the regression

v,

at the

However,

it is

critical to realize that the

is

is

complete.

end of the algorithm.

In order to observe the lower asymptote of the cdf, the

Similarly for the upper

there are no observations at high index values, the estimated cdf will end before

those values are reached.

value

continued

estimated a's can only be

researcher must have observations with very low index values.

final

is

described completely by the guesses associated

the guess for the pool containing

identified using nonparametric methods.

if

values corresponding

highest steps resulting from the isotonic regression are consistent

estimates of Uq and ai.

asymptote;

>»;

compares the lowest-indexed pool with the next lowest-

isotonic regression of y with resi>ect of v

The lowest and

to organize the

Finally, if any combinations of pools occurred during the last

pools are exhausted.

with the final set of pools;

is

of pools has each pool corresponding to a

sequential comparison of the pools, repeat the process.

The

idea

result.

assigned a "best guess" for the value of y conditional

used for the next comparison.

is

combined, and the combined pool

until the

The

in the pool.'^

Then

is

The "guess"

in the pool.

The

quite straightforward.'^

is

same

to the

The researcher must ask

the question, does the cdf end because the

the "true" asymptote or because there are

no data points

in that area?

In order to

solve the problem, the data would have to contain observations with index values which

approach negative or positive

However,

infinity.

approximately constant at some non-zero a, that

be a problem

in

the data.

Certainly

is

if the

data display a long

good evidence

further investigation

tail

that is

that misclassification could

would be warranted; perhaps

resampling techniques could be employed to find out more about the problem.

Once we have

'^

See Barlow

the

et. al.

MRC

estimates and the IR estimate of the cdf,

(1972) or Robertson

et. al.

we

find the asymptotic

(1988) for detailed discussions of IR and the

algorithms used for estimation.

'^

The non-decreasing property

constrains pools to contain only adjacent index values.

22

distribution of the

IR estimator. To date no asymptotic distribution has been found for methods

which estimate both the unknown

slope

coefficients

from the

insight follows

we

of the form

as

known

fact that

We

use

MRC

use with step functions

the

However, we are able

semiparametric models of discrete response.

of both sets of coefficients here.

and

/3

known

results

cdf simultaneously

to derive the distribution

combined with a basic

insight.

Groeneboom's (1993)

is

N"' consistent, so we can

(The proof

results.

The

estimates are N"^ consistent while isotonic regression

treat the

MRC

deriving the asymptotic distribution for the isotonic regression

in

in

is

coefficients

applying

in

given in the Appendix V.).

Estimation of the Asymptotic Distribution for the Isotonic Regression Step Function

We

a step function where

parameters which

I

is

the index,

come from

I

=

We

X/3.

estimating the model by the

estimator (or other N"'^ estimator),

MRC

we would be

technique in

from

x,,

...^ and

F^

estimate

.

of the steps of

known v

s.t.

the

We

F„

F(v)

.

E

The asymptotic

where /is the derivative of the

its

the last time

maximum. Thus,

/3),

we

we

(1987) and

did not use the

//,...,/„

find the

distribution

(derived

IR functional

was found by Groeneboom (1993): For any

the

( 1

- F(v))

-F(v)

)

^^ TH

^^

^^

(24)

^(v)j

true distribution F,

g

is

the derivative of the distribution of the

where two-sided Brownian motion minus the parabola

Groeneboom technique

to estimate the

However, our approach which

23

utilizes

v^

reaches

asymptotic distribution cannot

be applied to the Cosslett-type IR estimator because the slope coefficients

along with the cdf.

Han

(0,1),

a

is

with

are interested in the asymptotic standard errors associated with the height

^- F(v)

Z

|7)

unable to derive the asymptotic

N"^ consistent parameter estimates of

n'^iFAv)

index, and

If

Using the index observations

distribution of the misclassification probabilities.

Pr()'=l

use the N"^ consistent estimates of these

then do an isotonic regression using these estimates for the index value.

MRC

=

use the method of isotonic regression to estimate the c.d.f. F(/)

/3

are being estimated

an N"^ estimator for

jS

allows the

parameter vector

The

to

be treated as known for purposes of estimating the cdf.

distribution of

Z can

be written (Groeneboom (1984)) as

= l.s(v)s(-v),

h(v)

^

V €

St

(25)

2

where the function

s has a Fourier transform

9 1/3

H^)

^

=

(26)

i4j(2-"^H'i)

and where A/

is

the Airy Function and

Z will

Solving for

symmetry of

integration,

h^.

we

Using

I

= V-

1

allow us to estimate the variance of F^

HAT. We have E(Z) =

from

Also, via numerical approximation of the Fourier transform and numerical

estimate Var(Z)

(23),

we

=

0.26.

then have

^

^.(v)(l--^n(^))/(v)

VariF(v)

- F(v))

= 1.04

f

(27)

2ngiv)

where we use the numerical estimate of the index density for

g(v).

use the numerical derivative of F„(v) for/(v) since the derivative of a step function

at

a

fmue number of

points (where

"slope approximation" arrived at by looking at the

jumps from or

defined to be the slope of the straight line from the point half

current step and the point half

way up

The

density of the index

sensitive to the choice of

the step.

The process

is

window

we

calculate

estimated using a

width.

The

it

assuming

of the

/3's

we

use a

this definition

of the

that the next step level is one.

point at which g{v)

24

zero except

the vertical rise to the

Because

window width of

that estimates the standard errors

cannot

to the adjacent steps. J{v) is

way up

the rise to the next step.

slope does not exist for the highest step,

is

Instead, for a given step level,

is infinity).

it

we

Unfortunately,

is

0.

1

;

the results are not

estimated

uses a kernel

is

the middle of

window of

a*n'

"^

where a

number of

observations.

IX use a kernel window of 0.05. Again,

the estimates

the standard deviation of the index function and n

is

The standard

errors for the a's in Table

window

are not greatly affected by the choice of

width.

Consistent Semiparametric Estimates of the Job

We now

data from the

MRC

apply the combined

GPS. The

results

Change Model

and isotonic regression estimators

MRC/IR

length one.

Thus, the absolute magnitude of the coefficients

MRC/IR

MRC/IR.

In order to

compare

MRC/IR

Western stays fixed across methods;

it is

which will convert the

MRC/IR

by the same

is

indeterminate; only the ratios

coefficients.

We

also the coefficient

assume

which

In this

is

assumed

way we

obtain a scaling factor

MRC/IR

was seen before

in

Thus, when

we

procedure gives results that always

use the combined

MRC/IR

can

significantly

it

between the

correcting for

we

find that the

Both are estimated quite

reject the hypothesis that the probabilities

of misclassification are

changes the estimate of a^; only 3.5% of observed non-jobchangers have a

misclassified response.

As mentioned above,

amount of data we have

range

lie in

Freeing the misclassification model from the assumption of a normal error distribution

equal.

the

now

However,

MLE

approach

estimated probabilities of misclassification are 0.035 and 0.395.

MLE produces

Table VI.

estimates obtained from ordinary probit, ignoring misclassifications, and

we

be fixed in

to

factor.

estimating the model using the

and

on

coefficient into the probit coefficient and multiply the remaining

point estimates which differ from those of probit as

precisely,

with

that the coefficient

Table VIII restates the earlier results (Table VI) for ease of comparison.

misclassification.

MLE

from probit and

the results

calculating the asymptotic semiparametric standard errors.

coefficients

job change

produces coefficients which are scaled so that each coefficient vector has

necessary to scale the

it is

to the

of using the estimators on the job change data are reported in

Table VIII.

can be found from

the

is

is

seems

in that

the accuracy of the upper asymptote depends

index range.

Since the number of datapoints in the upper

small, the estimates of a, should be perhaps be viewed with

to us that the

lower asymptote

is

well established.

low values of the index and the lowest step

is

some

caution.

However,

There are plenty of observations

relatively long.

25

on

at

Figure 4

In

we

misspecification and also the

Figure 5 shows the

in either direction.

the cdf

is

the

plot

MRC/IR

The confidence

we smooth

comparison of the

MRC/IR

when

becomes non-monotonic

is

at the

upper

of our data.

tail

MRC/IR

quite different from the

One can

also include a

Figure 6b

tries

Figure 6a

estimate.

an alternative

Again, the upper

Kernel regression techniques are

where data become

sparse.

assumption of normally distributed errors were correct for our data

expect to see the

In

see that the kernel

to construct the kernel estimate.

basically not suited to estimating the asymptotes of a cdf

If the

We

small.

problematic for observations at the ends of the distribution

approach by using the 200 nearest neighbors

of the cdf

becomes

the size of the step function

because there are only observations on one side of the point.

tail

IR step function estimate of

estimated cdf with a standard kernel estimate of the cdf.

is

for

cdf with a confidence interval of two standard errors

the estimation of the confidence interval.

uses a fixed-window kernel; this

estimate

model which allows

results are reasonably similar.

interval demonstrates that the

estimated accurately, except

these situations,

The

estimate of the cdf.

MRC/IR estimate of the

MLE

cdf from the

estimated

MRC coefficients come very close to the MLE coefficients.

set,

Some

we would

coefficients,

such as Union, match closely, but others such as Last Grade Attended and Married, do not. The

differences could well arise from a failure of the underlying probit

model assumptions of

normality.

To

MRC/IR

explore further the accuracy of the

conducted another simulation analysis.

We generated

approach compared

to probit,

we

5,000 observations of simulated data with

three explanatory variables (none distributed normally), a constant, and a normally distributed

error.

The

Ten percent of

results

the binary response dependent variable

was purposefully

misclassified.

MLE

correcting for

of the three estimation techniques are reported in Table IX.

misclassification should return the coefficients used to construct the data, as should

no special correction. The conclusion of

are not

It is

met by our job change

also interesting to note

data,

this exercise is that the

and

that the

from Table VIII

that the

misclassification probabilities quite accurately

formed by the

earlier semiparametric

and

MRC

and

MLE

with

assumptions required for probit

estimator provides superior estimates.

MRC/IR

estimator

is

able to estimate the

their values are close to

analysis.

26

MRC

our expectations

VI. Conclusions

The

discussion above shows that ignoring potential misclassification of a dependent

when

variable can result in substantially biased and inconsistent coefficient estimates

The researcher can use our maximum

or logit specifications.

above

likelihood procedure described

of misclassification and estimate consistent coefficients.

to estimate the extent

misclassification probabilities are asymmetric across groups, they

However, should the

errors in the data not

may

be estimated

amount of

misclassification

Furthermore, the isotonic regression techniques detailed above provide a procedure

will give consistent estimates of the misclassification percentages in the data.

to derive

easily.

MRC technique of Han (1987)

will yield consistent estimates of the coefficients regardless of the

which

still

If the

be normally distributed, these coefficients may

nevertheless be inconsistent. Semiparametric regression using the

in the data.

using probit

We are able

and estimate the asymptotic distribution for both the slope parameters and for the

misclassification probabilities.

Other model specification problems may exist besides misclassification: e.g. nonnormality or heteroscedasticity. Misclassification in particular need not be the problem in a case

where the probit model does not

fit.

However,

the types of results

misclassification can be a serious problem; results that suggest

we

its

achieve here suggests that

existence certainly justify

looking more closely at the data to determine what error structure does exist.

We

apply our econometric techniques to job change data from the CPS.

misclassification exists in the data.

depending on the response.

We

We

find that

Furthermore, the probabilities of misclassification differ

are able to estimate the parameters by the

MLE

parametric

approach and by the distribution free semi-parametric approach; the estimated parameters are

reasonably similar although they are estimated with only moderate levels of precision.

approach

is

quite straightforward to use on discrete response models that are

applied research.

Thus,

we hope our approach

data —especially in probit and logit models.

27

will

Our

commonly used

in

be useful to others working with discrete

References

Barlow, R.

et.al.

(1972), Statistical Inference under Order Restrictions

.

(New York, John

Wiley).

Cavanagh, Chris and Sherman, Robert (1992), "Rank Estimators for Monotone Index Models,"

Bellcore mimeo.

Chua, Tin Chiu and Wayne Fuller (1987), "A Model for Mulitnomial Response Error Applied

Labor Flows," Journal of the American Statistical Association 82:397:46-51.

Maximum

Cosslett, Stephen, R. (1983), "Distribution-Free

to

Likelihood Estimator of the Binary Choice

Model," Econometrica 51:3:765-782.

Freeman, Richard, (1984), " Longitudinal Analyses of the Effects of Trade Unions" Journal of Labor

Economics, vol. 2, no. 1, 1-26.

Greene, William (1990), Econometric Analysis

Groeneboom,

Piet (1985), "Estimating

.

(New York, Macmillan).

Monotone Density", in L.M. Le Cam and R.A. Olshen, eds.,

in Honor of Jerzy Neyman and Jack Keifer vol H,

Proceedings of the Berkeley Conference

.

(Hay ward, CA: Wadsworth).

Groeneboom,

Censored Observations", mimeo.

Piet (1993), "Asymptotics for Incomplete

Han, Aaron (1987), "Non-Parametric Analysis of a Generalized Regression Model: The

Rank Correlation Estimator," Journal of Econometrics 35:303-316.

Maximum

Han, Aaron, and Hausman, Jerry A., (1990), "Flexible Parametric Estimation of Duration and

Competing Risk Models," Journal of Applied Econometrics, 5, 1-28.

Hardle, W., (1990), Applied Nonparametric Regression

Klein, R., and Spady, R., (1993),

"An

.

Cambridge University

Press, Cambridge.

Efficient Semiparametric Estimator for Binary

Response

Models," Econometrica 61:2.

Manski, Charles F., (1985), "Semiparametric Analysis of Discrete Response: Asymptotic Properties

of the Maximum Score Estimator," Journal of Econometrics 27:313-333.

Manski, Charles and Daniel McFadden (1981), Structural Analysis of Discrete Data with Econometric

A pplications (Cambridge, MIT Press).

.

MacFadden, Daniel (1984), "Econometric Analysis of Qualitative Response Models,", in Z. Griliches

and M. Intrilligator, eds.. Handbook of Econometrics vol. 2, (Amsterdam: North Holland).

,

and Lee, Lung Fei (1984), "Switching Regression Models with Imperfect Sample

Separation Information with an Application on Cartel Stability" Econometrica 52(2):391-418.

Porter, Robert

28

Poterba,

J.

and L. Summers (1993), "Unemployment Benefits, Labor Market Transitions, and

A Multinomial Logit Model with Errors in Classification" MIT mimeo.

Spurious Flows:

Pratt,

John W., (1981), "Concavity of the Log Likelihood," Journal of the American

Association, 76, No. 373, 103-106.

Robertson, T.

et. al.,

(1988), Order Restricted Statistical Inference

.

(New York, John

Statistical

Wiley).

Ruud, Paul, (1983), "Sufficient Conditions for the Consistency of MLE Despite Misspecifications of

Distribution in Multinomial Discrete Choice Models," Econometrica 51:1:225-28.

Sherman, Robert P., (1993), "The Limiting Distribution of the

mimeo.

Stock, James,

Thomas

Coefficients,"

Stoker, and James Powell (1989),

Maximum Rank Correlation

Estimator"

"Semiparametric Estimation of Index

Econometrica 57:6:1403-30.

Silverman, B.W., (1986), Density Estimation for Statistics and Data Analysis

London,

29

.

Chapman

&

Hall,

Table

la:

Simulations

drawn from a lognormal distribution

Xj is a dummy variable drawn from a uniform

Xjis drawn from a uniform distribution

X,

is

Sample

Repetitions

=

Design

146

n=5000

Probit: y

misclassified

a

0.02

—

00

-1.0

-0.787

^1

0.20

02

1.5

/?3

-0.60

distribution

ratio to

constant

—

MLE:

solving

for

a

ratio to

constant

0.0192

(.0054)(.0051)

-0.990

1

(.068)(.077)

(.069)(.066)

0.158

0.20

(.001)(.005)

1.49

1.61

0.66

—

00

-1.0

-0.567

/3,

0.20

0.0497

—

1

(.0076)(.0075)

-1.007

1

0.20

1.06

1.87

0.76

-0.431

(.019)(.019)

n=5000

a

0.20

—

00

-1.0

-0.163

0^

0.20

02

1.5

0,

-0.60

0.20

1.504

1.50

(.082)(.089)

(.051)(.048)

-0.60

0.201

(.OlOK.Oll)

(.010)(.004)

03

0.60

(.084)(.090)

0.114

1.5

-0.598

—

(.073)(.058)

02

1.51

(.026)(.028)

(.023)(.021)

0.05

0.20

(.075)(.073)

-0.518

a

0.199

(.008)(.009)

1.27

(.063)(.054)

n=5000

1

-0.599

0.60

(.032)(.034)

—

—

0.198

(.014)(.013)

-0.991

1

(.168)(.159)

(.061)(.050)

0.037

0.23

0.554

3.40

0.20

1.48

1.49

(.182)(.169)

(.045)(.041)

-0.228

1.40

-0.592

0.60

(.072)(.064)

(.018)(.016)

left

0.198

(.023)(.022)

(.005)(.002)

(The

1

hand parentheses contain the standard deviation of the coefficient across 146 Monte Carlo

The right hand parentheses contain the average (n= 146) standard error calculated from

simulations.

MLE. The

similarity of the

two show

that the

maximum

30

likelihood asymptotics

work

well.)

Table lb: Simulations from Table la in Ratios

X,

is

Xj

X3

is

is

Repetitions

drawn from a lognornial distribution

a dummy variable drawn from a uniform

drawn from a uniform distribution

=

Design

146

n=5000

00

a

solving for

1

small

e

large

1

1

e

small

1

e

large

1

0.2

0.20

0.20

0.20

0.20

02

1.5

1.61

1.58

1.51

1.48

/3,

0.6

0.66

0.63

0.60

0.58

.02

n=5000

a =

MLE:

Probit: y misclassified

Ratios

e

a =

distiibution

^0

1

1

1

1

0.2

0.20

0.20

0.20

0.19

02

1.5

1.87

1.67

1.50

1.47

03

0.6

0.76

0.66

0.60

0.57

n=5000

00

1

a = .20

0:

0.2

0.23

0.24

0.20

0.21

02

1.5

3.40

2.49

1.49

1.50

03

0.6

1.40

1.04

0.60

0.63

.05

1

1

31

1

Simulation

Table

11:

vector

=

Simulations:

One Sample Generated

(-3, 0.3, 0.2)

X, drawn from a normal distribution

Xj a

true

dummy

a

variable

drawn from a uniform

True Probit

X and y

sample size

distribution

Probit: y

misclassified

MLE

using

true

a

MLE:

for

solving

a

a

a = .025

^0

n=2000

/32

a = .05

/3o

n=2000

^2

a = .l

/3o

n=2000

02

a = .2

00

n=2000

02

Note:

The

-2.958

-2.443

-2.937

-3.170

(.138)

(.119)

(.166)

(.289)

.295

.240

.290

.314

(.012)

(.010)

(.015)

(.028)

.204

.208

.224

.236

(.080)

(.075)

(.088)

(.095)

-3.048

-2.396

-3.266

-3.210

.046

(.137)

(.114)

(.200)

(.291)

(.014)

.296

.233

.316

.311

(.012)

(.009)

(.018)

(.027)

.296

.168

.290

(.079)

(.074)

(.097)

(.100)

-3.007

-1.901

-3.191

-2.961

.085

(.137)

MOl)

(.243)

(.359)

(.020)

(.013)

.282

.296

.187

.316

.293

(.012)

(.008)

(.022)

(.054)

.235

.143

.238

.218

(.077)

(.069)

(.110)

(.105)

-3.270

-1.186

-3.480

-3.282

.190

(.151)

(.086)

(.401)

(.532)

(.019)

.328

.122

.370

.348

(.013)

(.006)

(.040)

(.056)

.164

.051

-.0379

-.020

(.082)

(.065)

(.173)

(.163)

coefficients are different in each

generated for each row; thus

.0397

it is

row of column one because only one random sample

/3's would match exactly.

unlikely that the

32

is

Table

ill:

vector =(-2,

Logit Simulations

:

One Sample Generated

1)

Xi drawn from a normal distribution

True a

Sample

size

True Logit

X and y

00

Logit: y

misclassified

^0

/3i

.05

-1.990 1.012

-.967

n=

2000

(.153) (.054)

(.095)(.023)

n=

a=

n=

.1

2000

.2

2000

true

^.

a=

a=

MLE

.504

with

a known

/3o

-1.897

^1

.933

/3o

solving for

jS

/3,

-1.830 .901

a

.045

(.207)(.080)(.008)

-2.100

-2.115 1.079 .101

-.612

(.159) (.058)

(.081) (.017)

(.257) (.107)

-2.057 1.045

-.399

-2.338 1.146

(.154)(.056)

(.071) (.012)

.201

a and

(.192) (.070)

-2.131 1.065

.342

MLE

1.071

(.401) (.178)