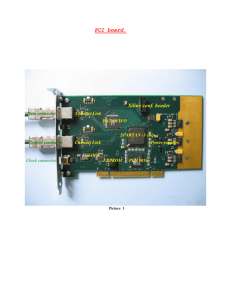

The Emonator: A Novel Musical Interface
