MIT Sloan School of Management

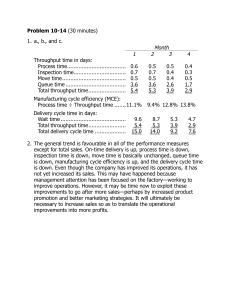

advertisement