Experimental data analysis Lecture 4: Model Selection Dodo Das


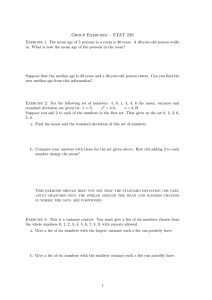

Review of lecture 3: asymptotic confidence intervals (nlparci), bootstrap confidence intervals (bootstrp), and bootstrap hypothesis testing.

Figure 6: Bootstrap distributions for comparing the two datasets shown in Figure 5.

How to select the best model?

Case study: Fitting data with models of varying complexity. Simulate data from a known model and fit it with 4 different models.

Single dataset fits

How to rank models?

The model with the highest number of parameters will typically give the best fit. But introducing more parameters increases model complexity and requires us to estimate the values of all the additional parameters from limited data. Increasing the number of parameters also makes the model more susceptible to noise.

Quantifying model complexity: Defining Risk

4.1 The Bias–Variance Tradeoff

Let $\hat{f}_n(x)$ be an estimate of a function $f(x)$. The squared error (or $L_2$) loss function is

$$L\big(f(x), \hat{f}_n(x)\big) = \big(f(x) - \hat{f}_n(x)\big)^2. \tag{4.8}$$

This is the squared error at a point. The average of this loss over many experiments is called the risk or mean squared error (mse) and is denoted by

$$\text{mse} = R\big(f(x), \hat{f}_n(x)\big) = E\Big[L\big(f(x), \hat{f}_n(x)\big)\Big]. \tag{4.9}$$

The random variable in equation (4.9) is the function $\hat{f}_n$, which implicitly depends on the observed data. We will use the terms risk and mse interchangeably.
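As a rough illustration of the risk as an average of the squared error over many experiments, the sketch below approximates it by simulation. Everything in it is an assumption made for illustration and does not come from the lecture: the constant true value, the noise level, the deliberately biased "shrunken mean" estimator, and the choice of Python.

```python
import random


def estimate_risk(true_value, estimator, n_obs, noise_sd, n_experiments, seed=0):
    """Monte Carlo estimate of the risk E[(f - f_hat)^2] at a single point.

    Each simulated experiment draws n_obs noisy observations of true_value,
    applies the estimator, and records the squared error of the estimate.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    total = 0.0
    for _ in range(n_experiments):
        sample = [true_value + rng.gauss(0.0, noise_sd) for _ in range(n_obs)]
        total += (true_value - estimator(sample)) ** 2
    return total / n_experiments


def shrunken_mean(sample, shrink=0.8):
    """Hypothetical estimator: the sample mean pulled toward zero (biased)."""
    return shrink * sum(sample) / len(sample)


# Hypothetical settings: true value 2.0, unit noise, 10 observations per experiment.
mse = estimate_risk(2.0, shrunken_mean, n_obs=10, noise_sd=1.0, n_experiments=20000)
print(f"estimated risk (mse): {mse:.3f}")
```

Swapping in a different estimator function gives a quick way to compare how two estimators trade accuracy at this point, under the same simulated noise.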
A simple calculation (Exercise 2) shows that

$$R\big(f(x), \hat{f}_n(x)\big) = \text{bias}_x^2 + V_x,$$

where

$$\text{bias}_x = E\big(\hat{f}_n(x)\big) - f(x)$$

is the bias of $\hat{f}_n(x)$ and

$$V_x = V\big(\hat{f}_n(x)\big)$$

is the variance of $\hat{f}_n(x)$. In words: risk = mse = bias² + variance.

These definitions refer to the risk at a point x. Over different values of x, the corresponding summary is the integrated risk, or integrated mean squared error.

Bias-Variance tradeoff

[Figure 4.9: The bias–variance tradeoff. Curves for risk, bias squared, and variance, plotted from less smoothing (more complexity) through optimal smoothing to more smoothing (less complexity). The bias increases and the variance decreases with more smoothing.]

Bias-variance tradeoff for the simulated data

Simulate many independent replicates, and fit them individually.

The guiding principle: 'Parsimony'

Even though the 5th order polynomial fits the data the best (lowest SSR), it has high variance. In contrast, the linear model has the lowest variance, but a high bias. The goal is to pick the model that does the best job with the fewest parameters.

Methods for choosing an optimal model

In general, we don't know the 'true' model, so we can't calculate the risk. Therefore, we need some way to rank models. The general idea is to give models preference if they reduce SSR, but penalize them if they have too many parameters. Two main techniques:

1. Akaike's information criterion (AIC): Based on an information-theoretic approximation for risk.
2. F-test: Based on asymptotic statistical theory of the distribution of normal errors.

Akaike's information criterion

$$\text{AIC} = -2\ln(L) + 2k + \frac{2k(k+1)}{n-k-1}$$

For least squares regression (with normally distributed errors, and up to an additive constant that is the same for every model fitted to the same data):

$$\text{AIC} = n\ln\!\left(\frac{\text{SSR}}{n}\right) + 2k + \frac{2k(k+1)}{n-k-1}$$

Here L is the maximized likelihood, n = number of data points, and k = number of parameters. Lower AIC is better.
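A minimal sketch of the least-squares AIC calculation follows, assuming the standard n·ln(SSR/n) form for the likelihood term together with the small-sample correction 2k(k+1)/(n−k−1). The function name, the SSR values, and the parameter counts are hypothetical and are not results from the lecture; Python is used only for convenience.

```python
import math


def aic_least_squares(ssr, n, k):
    """Corrected AIC for a least-squares fit.

    ssr: sum of squared residuals, n: number of data points,
    k: number of fitted parameters. Assumes normally distributed errors.
    """
    if ssr <= 0:
        raise ValueError("ssr must be positive")
    if n - k - 1 <= 0:
        raise ValueError("need n > k + 1 for the small-sample correction")
    return n * math.log(ssr / n) + 2 * k + 2 * k * (k + 1) / (n - k - 1)


# Hypothetical fits to one dataset of 20 points: name -> (parameters, SSR).
n_points = 20
fits = {"linear": (2, 4.10), "quadratic": (3, 1.25), "quintic": (6, 1.05)}

scores = {name: aic_least_squares(ssr, n_points, k) for name, (k, ssr) in fits.items()}
best = min(scores, key=scores.get)  # lower AIC is better

for name, score in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{name:10s} AIC = {score:8.2f}")
print("preferred model:", best)
```

In this made-up example the quintic model has the lowest SSR, yet the penalty terms make the quadratic model the preferred one, which is the parsimony principle at work.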
For the i-th model among the candidate models:

$$\Delta_i = \text{AIC}_i - \text{AIC}_{\min}, \qquad w_i = \frac{\exp(-\Delta_i/2)}{\sum_j \exp(-\Delta_j/2)}$$

F-test

The F-test can only be used for nested models (e.g., the linear, quadratic, and quintic polynomial models). The saturable model cannot be compared with any of these using the F-test.

Null hypothesis: Simple model is sufficient.

Compute the F-statistic

$$F = \frac{SSR_0 - SSR_1}{SSR_1} \cdot \frac{N - m_1}{m_1 - m_0},$$

where the simple model has $m_0$ parameters and sum of squared residuals $SSR_0$, the more complicated model has $m_1$ parameters and sum of squared residuals $SSR_1$, and N is the number of data points.

Look up the associated p-value from the F-distribution with $(m_1 - m_0,\ N - m_1)$ degrees of freedom. If p < 0.05, reject the null hypothesis at the 5% significance level.

[Tail of a preceding table in the excerpt: F-score 89.23, p-value 3.96 × 10⁻⁹, p < 0.05: yes, reject null hypothesis.]

Example: Application of F test

As a more general application of the F-test, consider the simulated dose-response data in Fig. 8. We wish to determine whether this data can be adequately captured by a fixed-slope Hill function (equation 2, with two parameters, EC50 and Emax), or if it is fitted better with the variable-slope Hill function (equation 1, with the additional parameter n). As before, the null hypothesis is that the simpler of the two models is sufficient to fit the data, and the alternative hypothesis is that the more complicated model fits the data significantly better. The F-score is defined by the F-statistic above. We compute the F-score and a p-value based on the F-distribution with $(m_1 - m_0,\ N - m_1)$ degrees of freedom:

| Quantity | Value |
| --- | --- |
| Number of data points, N | 20 |
| Number of model parameters | m0 = 2, m1 = 3 |
| Sum of squared residuals | SSR0 = 0.881, SSR1 = 0.318 |
| F-score | 30.1 |
| p-value | 7.45 × 10⁻⁶ |
| Is p < 0.05? | Yes |
| Conclusion | Reject null hypothesis |

[Figure 8: Comparing the fit of two models to a single data set. Axes: x (dose), y (response). Fit annotations: R² = 0.81 (m = 2) and R² = 0.93 (m = 3). Simulated data (blue circles) is obtained by adding … distributed noise to a dose-response curve with B = 0, Emax = 1, EC50 = 10^(−1.0), and … The two models we will consider are the reduced Hill function (equation 2), which contains two fitting parameters (EC50 and Emax) with two fixed parameters (B = 0 and n = 1), and a slightly more complicated model that contains three fitting parameters (EC50, Emax, and n) with one fixed parameter (B = 0). Given …]
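The F-score in the example can be checked by plugging the tabulated values into the F-statistic. The sketch below does only that arithmetic. The function name and the choice of Python are assumptions, and the p-value lookup from the F-distribution is not reproduced here.

```python
def f_statistic(ssr0, ssr1, n, m0, m1):
    """F-statistic for comparing two nested least-squares models.

    ssr0, m0: sum of squared residuals and parameter count of the simple model.
    ssr1, m1: the same quantities for the more complicated model.
    n: number of data points.
    """
    if m1 <= m0:
        raise ValueError("the complicated model must have more parameters")
    if n <= m1:
        raise ValueError("need more data points than parameters")
    return (ssr0 - ssr1) / ssr1 * (n - m1) / (m1 - m0)


# Values from the example: N = 20, m0 = 2, m1 = 3, SSR0 = 0.881, SSR1 = 0.318.
f_score = f_statistic(ssr0=0.881, ssr1=0.318, n=20, m0=2, m1=3)
degrees_of_freedom = (3 - 2, 20 - 3)  # (m1 - m0, N - m1)

print(f"F-score: {f_score:.1f}")
print("degrees of freedom:", degrees_of_freedom)
```

The result rounds to the tabulated F-score of 30.1, with (1, 17) degrees of freedom for the p-value lookup.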