Chapter 7 (Model Assessment and Selection)

Presentation date: July 15, 2004

Presenter: 정보혜

Contents

Model Assessment and Selection

1. Introduction

2. Bias, Variance and Model Complexity

3. The Bias-Variance Decomposition

4. Optimism of the Training Error Rate

5. Estimates of In-Sample Prediction Error

6. The Effective Number of Parameters

7. The Bayesian Approach and BIC

8. Minimum Description Length

9. Vapnik-Chervonenkis Dimension

10. Cross-Validation

11. Bootstrap Methods

1. Introduction

◆ Model Assessment and Selection

- Model selection: estimating the performance of different models in order to choose the (approximately) best one.

- Model assessment: having chosen a final model, estimating its prediction error (generalization error) on new data.

Assessment of performance guides the choice of learning method or model, and gives us a measure of the quality of the ultimately chosen model.

2. Bias, Variance and Model Complexity

◆ Test error (generalization error): the expected prediction error over an independent test sample, $\mathrm{Err} = \mathrm{E}[L(Y, \hat{f}(X))]$.

◆ Training error: the average loss over the training sample, $\mathrm{err} = \frac{1}{N}\sum_{i=1}^{N} L(y_i, \hat{f}(x_i))$.

- As the model becomes more complex, the bias decreases and the variance increases.

In between there is an optimal model complexity that gives minimum test error.
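A small simulation can make this tradeoff concrete. The sketch below is only an illustration under assumed settings that are not in the slides: a sine target function, Gaussian noise, and polynomial degree as the measure of model complexity.

```python
import numpy as np

def train_test_error(degrees, n_train=50, n_test=2000, noise=0.3, seed=0):
    """Return (degree, training error, test error) for polynomial least squares."""
    rng = np.random.default_rng(seed)
    f = lambda x: np.sin(2 * np.pi * x)           # assumed true function
    x_tr = rng.uniform(0, 1, n_train)
    y_tr = f(x_tr) + noise * rng.normal(size=n_train)
    x_te = rng.uniform(0, 1, n_test)              # independent test sample
    y_te = f(x_te) + noise * rng.normal(size=n_test)
    rows = []
    for d in degrees:
        X_tr = np.vander(x_tr, d + 1, increasing=True)
        X_te = np.vander(x_te, d + 1, increasing=True)
        beta, *_ = np.linalg.lstsq(X_tr, y_tr, rcond=None)
        err_train = np.mean((y_tr - X_tr @ beta) ** 2)   # training error err
        err_test = np.mean((y_te - X_te @ beta) ** 2)    # estimate of test error Err
        rows.append((d, err_train, err_test))
    return rows

for d, err_train, err_test in train_test_error(range(0, 9)):
    print(f"degree={d}  err={err_train:.3f}  Err~{err_test:.3f}")
```

The training error can only go down as the degree grows, because the models are nested; the test error need not.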


3. The Bias-Variance Decomposition

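Assume $Y = f(X) + \varepsilon$ with $\mathrm{E}(\varepsilon) = 0$ and $\mathrm{Var}(\varepsilon) = \sigma_\varepsilon^2$. The expected prediction error of a regression fit $\hat{f}(X)$ at an input point $X = x_0$, using squared-error loss:

$$\mathrm{Err}(x_0) = \mathrm{E}[(Y - \hat{f}(x_0))^2 \mid X = x_0] = \sigma_\varepsilon^2 + [\mathrm{E}\hat{f}(x_0) - f(x_0)]^2 + \mathrm{E}[\hat{f}(x_0) - \mathrm{E}\hat{f}(x_0)]^2$$

▶ Err(x0) = Irreducible Error + Bias² + Variance

- For the k-nearest-neighbor regression fit:

$$\mathrm{Err}(x_0) = \sigma_\varepsilon^2 + \Big[f(x_0) - \frac{1}{k}\sum_{\ell=1}^{k} f(x_{(\ell)})\Big]^2 + \frac{\sigma_\varepsilon^2}{k}$$

The number of neighbors k is inversely related to the model complexity.

As k increases (complexity decreases), the bias increases and the variance decreases.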
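The k-nearest-neighbor case can be evaluated directly when the true function is known. This is a minimal sketch assuming fixed one-dimensional training inputs and the model y_i = f(x_i) + noise with variance sigma2; the function `knn_error_terms` and the quadratic `f` are hypothetical names chosen for illustration.

```python
import numpy as np

def knn_error_terms(x_train, f, x0, k, sigma2):
    """Irreducible error, squared bias and variance of k-NN regression at x0.

    Assumes fixed training inputs and y_i = f(x_i) + noise with variance sigma2.
    """
    nearest = np.argsort(np.abs(x_train - x0))[:k]    # indices of the k nearest inputs
    bias2 = (f(x0) - np.mean(f(x_train[nearest]))) ** 2
    variance = sigma2 / k
    return sigma2, bias2, variance

x_train = np.linspace(0.0, 1.0, 21)
f = lambda x: x ** 2                                   # hypothetical true function
for k in (1, 5, 21):
    irreducible, bias2, variance = knn_error_terms(x_train, f, 0.5, k, sigma2=1.0)
    print(k, irreducible + bias2 + variance, bias2, variance)
```

With k = 1 the variance term equals the full noise variance; with k = N the variance is smallest but the bias term is largest.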


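- For a linear model fit by least squares, $\hat{f}_p(x) = x^T\hat\beta$:

$$\mathrm{Err}(x_0) = \sigma_\varepsilon^2 + [f(x_0) - \mathrm{E}\hat{f}_p(x_0)]^2 + \|h(x_0)\|^2\sigma_\varepsilon^2$$

Here $h(x_0) = X(X^TX)^{-1}x_0$ is the N-vector of linear weights that produce the fit $\hat{f}_p(x_0) = x_0^T(X^TX)^{-1}X^Ty$, and hence $\mathrm{Var}[\hat{f}_p(x_0)] = \|h(x_0)\|^2\sigma_\varepsilon^2$.

This variance changes with $x_0$; its average over the training inputs $x_i$ is $(p/N)\sigma_\varepsilon^2$.

▶ The in-sample error is

$$\frac{1}{N}\sum_{i=1}^{N}\mathrm{Err}(x_i) = \sigma_\varepsilon^2 + \frac{1}{N}\sum_{i=1}^{N}[f(x_i) - \mathrm{E}\hat{f}_p(x_i)]^2 + \frac{p}{N}\sigma_\varepsilon^2$$

Model complexity is directly related to the number of parameters p.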


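- For a ridge regression fit $\hat{f}_\alpha(x_0)$:

Variance term: the linear weights are different, $h(x_0) = X(X^TX + \alpha I)^{-1}x_0$.

Bias term: let $\beta_*$ denote the parameters of the best-fitting linear approximation to f:

$$\beta_* = \arg\min_\beta \mathrm{E}\,(f(X) - X^T\beta)^2$$

The average squared bias:

$$\mathrm{E}_{x_0}[f(x_0) - \mathrm{E}\hat{f}_\alpha(x_0)]^2 = \mathrm{E}_{x_0}[f(x_0) - x_0^T\beta_*]^2 + \mathrm{E}_{x_0}[x_0^T\beta_* - \mathrm{E}\,x_0^T\hat\beta_\alpha]^2$$

$$= \mathrm{Ave}[\text{Model Bias}]^2 + \mathrm{Ave}[\text{Estimation Bias}]^2$$

The average squared model bias is the error between the best-fitting linear approximation and the true function. The average squared estimation bias is the error between the average estimate $\mathrm{E}(x_0^T\hat\beta_\alpha)$ and the best-fitting linear approximation.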


The figure shows the bias-variance tradeoff.

The model space is the set of all linear predictions from p inputs. The “closest fit” is marked with a black dot.

The large yellow circle indicates variance.

A shrunken or regularized fit (fitting a model with fewer predictors, or regularizing the coefficients by shrinking them toward zero) has an additional estimation bias, but it has smaller variance.


3.1 Example: Bias-Variance Tradeoff

Prediction error (red), squared bias (green) and variance (blue).

The top row is regression with squared error loss; the bottom row is classification with 0-1 loss.

The variance and bias curves are the same in regression and classification, but the prediction error curve is different. This means that the best choices of tuning parameters may differ in the two settings.


4. Optimism of the Training Error Rate

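Typically err < Err, because the same data is being used to fit the method and assess its error.

In-sample error:

$$\mathrm{Err_{in}} = \frac{1}{N}\sum_{i=1}^{N}\mathrm{E}_{Y}\,\mathrm{E}_{Y^{new}}\,L(Y_i^{new}, \hat{f}(x_i))$$

The $Y^{new}$ notation indicates that we observe N new response values at each of the training points $x_i$, i = 1, 2, …, N.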

Err is “extra-sample” error.

Err= E(Errin)

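Optimism: $\mathrm{op} \equiv \mathrm{Err_{in}} - \mathrm{E}_y(\mathrm{err})$

For squared error, 0-1, and other loss functions: $\mathrm{op} = \frac{2}{N}\sum_{i=1}^{N}\mathrm{Cov}(\hat{y}_i, y_i)$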


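In summary,

$$\mathrm{Err_{in}} = \mathrm{E}_y(\mathrm{err}) + \frac{2}{N}\sum_{i=1}^{N}\mathrm{Cov}(\hat{y}_i, y_i)$$

- Example (least squares linear fit with d inputs or basis functions):

▶ $\sum_{i=1}^{N}\mathrm{Cov}(\hat{y}_i, y_i) = d\,\sigma_\varepsilon^2$

And so,

$$\mathrm{Err_{in}} = \mathrm{E}_y(\mathrm{err}) + 2\cdot\frac{d}{N}\sigma_\varepsilon^2$$

d ↑ ⇒ op ↑

N ↑ ⇒ op ↓

- An obvious way to estimate prediction error is to estimate the optimism and then add it to the training error rate err (AIC, BIC and others).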
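The covariance form of the optimism can be checked numerically for a least squares fit. The sketch below is a Monte Carlo illustration under assumed settings (fixed Gaussian design, a linear true mean, unit noise); `simulated_optimism` is a hypothetical helper, and the sample covariance uses a 1/R rather than 1/(R-1) normalization, which is negligible at this number of replications.

```python
import numpy as np

def simulated_optimism(X, f_true, sigma, n_rep=4000, seed=0):
    """Monte Carlo estimate of (2/N) * sum_i Cov(yhat_i, y_i) for least squares."""
    rng = np.random.default_rng(seed)
    N = X.shape[0]
    H = X @ np.linalg.solve(X.T @ X, X.T)             # hat matrix, yhat = H y
    Y = f_true + sigma * rng.normal(size=(n_rep, N))  # each row is one response vector
    Y_hat = Y @ H                                     # H is symmetric, so row r equals H @ Y[r]
    cov = np.mean((Y_hat - Y_hat.mean(axis=0)) * (Y - Y.mean(axis=0)), axis=0)
    return 2.0 / N * cov.sum()

rng = np.random.default_rng(1)
N, d, sigma = 30, 4, 1.0
X = rng.normal(size=(N, d))
f_true = X @ np.ones(d)
print(simulated_optimism(X, f_true, sigma), 2 * d * sigma**2 / N)
```

The two printed numbers should be close: the simulated optimism and the closed form 2·(d/N)·σ².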


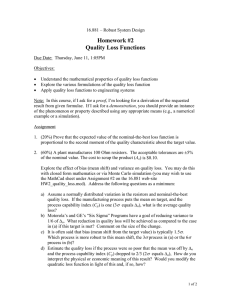

5. Estimates of In-Sample Prediction Error

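The general form of the in-sample estimates:

$$\widehat{\mathrm{Err}}_{in} = \mathrm{err} + \widehat{\mathrm{op}}$$

Cp (fit under squared error loss):

$$C_p = \mathrm{err} + 2\cdot\frac{d}{N}\hat\sigma_\varepsilon^2$$

Here $\hat\sigma_\varepsilon^2$ is an estimate of the noise variance, obtained from the mean-squared error of a low-bias model.

AIC (fit under log-likelihood loss):

$$-2\,\mathrm{E}[\log \mathrm{Pr}_{\hat\theta}(Y)] \approx -\frac{2}{N}\,\mathrm{E}[\mathrm{loglik}] + 2\cdot\frac{d}{N}$$

Here $\mathrm{Pr}_\theta(Y)$ is a family of densities for Y (containing the “true” density), $\hat\theta$ is the maximum-likelihood estimate of $\theta$, and “loglik” is the maximized log-likelihood:

$$\mathrm{loglik} = \sum_{i=1}^{N}\log \mathrm{Pr}_{\hat\theta}(y_i)$$

It relies on a relationship similar to (7.20) that holds asymptotically as $N \to \infty$.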

- For the Gaussian model (with variance $\sigma_\varepsilon^2 = \hat\sigma_\varepsilon^2$ assumed known), the AIC statistic is equivalent to Cp. ▶ Choose the model giving the smallest AIC over the set of models considered.

For nonlinear and other complex models, replace d by some measure of model complexity.
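As a rough illustration of selecting a model by the smallest Cp, the sketch below scores a fixed, nested sequence of least squares models (the first d columns of X). The nesting order, the simulated data and the helper names `cp_statistic` and `cp_path` are assumptions made for this example; the noise variance is estimated from the full model, taken here as the low-bias model.

```python
import numpy as np

def cp_statistic(err, d, N, sigma2_hat):
    """Cp = err + 2 * (d / N) * sigma2_hat."""
    return err + 2.0 * d / N * sigma2_hat

def cp_path(X, y):
    """Cp for the nested least squares models that use the first d columns of X."""
    N, p = X.shape
    # Noise variance estimated from the full (low-bias) model.
    beta_full, *_ = np.linalg.lstsq(X, y, rcond=None)
    sigma2_hat = np.sum((y - X @ beta_full) ** 2) / (N - p)
    scores = []
    for d in range(1, p + 1):
        beta, *_ = np.linalg.lstsq(X[:, :d], y, rcond=None)
        err = np.mean((y - X[:, :d] @ beta) ** 2)     # training error of model d
        scores.append(cp_statistic(err, d, N, sigma2_hat))
    return np.array(scores)

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 8))
y = X[:, :3] @ np.array([2.0, -1.5, 1.0]) + rng.normal(size=200)  # only 3 inputs matter
scores = cp_path(X, y)
print("chosen d =", int(np.argmin(scores)) + 1)
```

Because the sequence of models is fixed in advance rather than searched over, the simple 2·(d/N)·σ² penalty is appropriate in this sketch.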

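Given a set of models $f_\alpha(x)$ indexed by a tuning parameter $\alpha$, denote by $\mathrm{err}(\alpha)$ and $d(\alpha)$ the training error and number of parameters for each model. We define

$$\mathrm{AIC}(\alpha) = \mathrm{err}(\alpha) + 2\cdot\frac{d(\alpha)}{N}\hat\sigma_\varepsilon^2$$

If we have a total of p inputs, and we choose the best-fitting linear model with d < p inputs, the optimism will exceed $(2d/N)\sigma_\varepsilon^2$.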


6. The Effective Number of Parameters

A linear fitting method:

$$\hat{y} = Sy$$

where S is an N × N matrix depending on the input vectors $x_i$ but not on the $y_i$.

Then the effective number of parameters is defined as

$$d(S) = \mathrm{trace}(S)$$

- If S is an orthogonal-projection matrix onto a basis set spanned by M features, then trace(S) = M.

trace(S) is exactly the correct quantity to replace d as the number of parameters in the Cp statistic.
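Ridge regression is one linear fitting method where trace(S) is easy to compute. The following is a minimal sketch, assuming the ridge smoother S = X(XᵀX + λI)⁻¹Xᵀ with a hypothetical penalty parameter `lam` and random inputs chosen only for illustration.

```python
import numpy as np

def ridge_effective_df(X, lam):
    """trace(S) for the ridge smoother S = X (X^T X + lam I)^{-1} X^T."""
    p = X.shape[1]
    S = X @ np.linalg.solve(X.T @ X + lam * np.eye(p), X.T)
    return np.trace(S)

rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
for lam in (0.0, 1.0, 10.0, 100.0):
    print(lam, ridge_effective_df(X, lam))
```

With lam = 0 the smoother is the least squares projection and the trace equals the number of columns; larger penalties shrink the effective number of parameters below that.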


7. The Bayesian Approach and BIC

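BIC (the Bayesian information criterion):

$$\mathrm{BIC} = -2\cdot\mathrm{loglik} + (\log N)\cdot d$$

- Under the Gaussian model (assuming the variance $\sigma_\varepsilon^2$ is known), $-2\cdot\mathrm{loglik}$ equals (up to a constant) $\sum_i (y_i - \hat{f}(x_i))^2/\sigma_\varepsilon^2$, which is $N\cdot\mathrm{err}/\sigma_\varepsilon^2$ for squared error loss. Hence

$$\mathrm{BIC} = \frac{N}{\sigma_\varepsilon^2}\Big[\mathrm{err} + (\log N)\cdot\frac{d}{N}\sigma_\varepsilon^2\Big]$$

BIC ∝ AIC (Cp) with the factor 2 replaced by log N.

BIC tends to penalize complex models more heavily than AIC when $N > e^2 \approx 7.4$.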


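The Bayesian approach to model selection

Suppose we have a set of candidate models $M_m$, m = 1, …, M, and corresponding model parameters $\theta_m$, and we wish to choose a best model from among them. Assume we have a prior distribution $\mathrm{Pr}(\theta_m \mid M_m)$ for the parameters of each model $M_m$.

- The posterior probability of a given model:

$$\mathrm{Pr}(M_m \mid Z) \propto \mathrm{Pr}(M_m)\cdot\mathrm{Pr}(Z \mid M_m) \propto \mathrm{Pr}(M_m)\int \mathrm{Pr}(Z \mid \theta_m, M_m)\,\mathrm{Pr}(\theta_m \mid M_m)\,d\theta_m$$

where Z represents the training data $\{x_i, y_i\}_1^N$.

- The posterior odds:

$$\frac{\mathrm{Pr}(M_m \mid Z)}{\mathrm{Pr}(M_\ell \mid Z)} = \frac{\mathrm{Pr}(M_m)}{\mathrm{Pr}(M_\ell)}\cdot\frac{\mathrm{Pr}(Z \mid M_m)}{\mathrm{Pr}(Z \mid M_\ell)}$$

If the odds are greater than 1, choose model m; otherwise choose model ℓ.

The contribution of the data toward the posterior odds is the Bayes factor:

$$\mathrm{BF}(Z) = \frac{\mathrm{Pr}(Z \mid M_m)}{\mathrm{Pr}(Z \mid M_\ell)}$$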


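Approximating $\mathrm{Pr}(Z \mid M_m)$:

$$\log \mathrm{Pr}(Z \mid M_m) = \log \mathrm{Pr}(Z \mid \hat\theta_m, M_m) - \frac{d_m}{2}\cdot\log N + O(1)$$

as $N \to \infty$, under some regularity conditions. Here $\hat\theta_m$ is the maximum-likelihood estimate and $d_m$ is the number of free parameters in model $M_m$.

For the loss function $-2\log \mathrm{Pr}(Z \mid \hat\theta_m, M_m)$, this is equivalent to the BIC criterion.

∴ Choosing the model with minimum BIC is equivalent to choosing the model with largest (approximate) posterior probability.

AIC vs BIC

As N → ∞, the probability that BIC selects the correct model approaches one.

As N → ∞, AIC tends to choose models which are too complex.

But, for finite samples, BIC often chooses models that are too simple, because of its heavy penalty on complexity.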
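The different penalties can be seen on a toy comparison. In this sketch both criteria are written on the −2·loglik scale (that is, AIC is multiplied by N relative to the per-observation form), and the log-likelihood values are hypothetical numbers invented for the example.

```python
import math

def aic(loglik, d):
    """AIC on the -2*loglik scale: -2 * loglik + 2 * d."""
    return -2.0 * loglik + 2.0 * d

def bic(loglik, d, N):
    """BIC = -2 * loglik + log(N) * d."""
    return -2.0 * loglik + math.log(N) * d

# Hypothetical maximized log-likelihoods for a simple and a complex model.
N = 100
simple = {"loglik": -152.0, "d": 2}
complex_ = {"loglik": -148.5, "d": 5}
for name, score in (("AIC", aic), ("BIC", lambda ll, d: bic(ll, d, N))):
    s = score(simple["loglik"], simple["d"])
    c = score(complex_["loglik"], complex_["d"])
    print(name, "prefers the", "simple" if s < c else "complex", "model")
```

With these numbers AIC prefers the complex model and BIC the simple one, since log(100) ≈ 4.6 per parameter is a heavier charge than 2.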


8. Minimum Description Length

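The theory of coding for data

If messages are sent with probabilities $\mathrm{Pr}(z_i)$, i = 1, 2, …, use code lengths $l_i = -\log_2 \mathrm{Pr}(z_i)$, and

$$\mathrm{E}(\text{length}) \ge -\sum_i \mathrm{Pr}(z_i)\log_2 \mathrm{Pr}(z_i)$$

Model selection

We have a model M with parameters $\theta$, and data $Z = (X, y)$ consisting of both inputs and outputs. Let the probability of the outputs under the model be $\mathrm{Pr}(y \mid \theta, M, X)$.

$$\text{length} = -\log \mathrm{Pr}(y \mid \theta, M, X) - \log \mathrm{Pr}(\theta \mid M)$$

- Choose the model that minimizes the length.

Minimizing description length is equivalent to maximizing posterior probability.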
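The coding rule above can be illustrated with a four-message alphabet. The probabilities below are hypothetical and chosen as powers of one half so that the code lengths come out as whole bits.

```python
import math

def code_lengths(probs):
    """Shannon code lengths l_i = -log2 Pr(z_i), in bits."""
    return [-math.log2(p) for p in probs]

def expected_length(probs, lengths):
    """Average message length under the given probabilities."""
    return sum(p * l for p, l in zip(probs, lengths))

probs = [0.5, 0.25, 0.125, 0.125]           # hypothetical message probabilities
lengths = code_lengths(probs)
entropy = -sum(p * math.log2(p) for p in probs)
print(lengths, expected_length(probs, lengths), entropy)
```

Frequent messages get short codes, and the expected length meets the entropy lower bound here; a fixed 2-bit code for all four messages would average 2 bits instead.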

9. Vapnik-Chervonenkis Dimension

Definition of the VC dimension

The VC dimension of the class {f(x, α)} is defined to be the largest number of points (in some configuration) that can be shattered by members of {f(x, α)}.

The VC dimension is a way of measuring the complexity of a class of functions by assessing how wiggly its members can be.

The function sin(αx) is very wiggly and gets even rougher as the frequency α increases, but it has only one parameter.

In general, a linear indicator function in p dimensions has VC dimension p + 1, which is also the number of free parameters.


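- If we fit N training points using a class of functions {f(x, α)} having VC dimension h, then with probability at least 1 − η over training sets:

$$\mathrm{Err} \le \mathrm{err} + \frac{\epsilon}{2}\Big(1 + \sqrt{1 + \frac{4\cdot\mathrm{err}}{\epsilon}}\Big) \quad \text{(binary classification)}$$

$$\mathrm{Err} \le \frac{\mathrm{err}}{(1 - c\sqrt{\epsilon})_+} \quad \text{(regression)}$$

$$\text{where } \epsilon = a_1\,\frac{h[\log(a_2 N/h) + 1] - \log(\eta/4)}{N}$$

These bounds are in qualitative agreement with the AIC.

However, the results in (7.41) are stronger.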

- The SRM (structural risk minimization) approach fits a nested sequence of models of increasing VC dimensions h1 < h2 < …, and then chooses the model with the smallest value of the upper bound.

- A drawback of the SRM approach is the difficulty in calculating the VC dimension of a class of functions.


◆ 9.1 Example

Boxplots show the distribution of the relative error

$$100 \times \frac{\mathrm{Err}(\hat\alpha) - \min_\alpha \mathrm{Err}(\alpha)}{\max_\alpha \mathrm{Err}(\alpha) - \min_\alpha \mathrm{Err}(\alpha)}$$

over the four scenarios of Figure 7.3.

This is the error in using the chosen model relative to the best model.

AIC seems to work well in all four scenarios, despite the lack of theoretical support with 0-1 loss. BIC does nearly as well, while the performance of SRM is mixed.


10. Cross-Validation

A method of estimating the extra-sample error Err directly.

K-fold cross-validation

Let $\kappa: \{1, \dots, N\} \to \{1, \dots, K\}$ be an indexing function that indicates the partition to which observation i is allocated by the randomization.

$\hat{f}^{-k}(x)$ is the fitted function with the kth part of the data removed.

- The cross-validation estimate of prediction error:

$$\mathrm{CV} = \frac{1}{N}\sum_{i=1}^{N} L(y_i, \hat{f}^{-\kappa(i)}(x_i))$$

The case K = N is known as leave-one-out cross-validation.

What value should we choose for K?

Smaller K: more bias, less variance.

Larger K: less bias, more variance.
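The K-fold procedure can be sketched in a few lines. This is an illustrative implementation under squared error loss, not the presenter's code: `kfold_cv`, the `fit` and `predict` callables and the least squares learner are all hypothetical choices, and the fold assignment plays the role of the indexing function κ.

```python
import numpy as np

def kfold_cv(X, y, fit, predict, K, seed=0):
    """K-fold cross-validation estimate of prediction error under squared error loss."""
    N = len(y)
    rng = np.random.default_rng(seed)
    kappa = rng.permutation(N) % K                # kappa[i] = fold of observation i
    losses = np.empty(N)
    for k in range(K):
        held_out = kappa == k
        model = fit(X[~held_out], y[~held_out])   # fit with the kth part removed
        losses[held_out] = (y[held_out] - predict(model, X[held_out])) ** 2
    return losses.mean()

# Hypothetical learner: ordinary least squares.
fit = lambda X, y: np.linalg.lstsq(X, y, rcond=None)[0]
predict = lambda beta, X: X @ beta

rng = np.random.default_rng(1)
X = rng.normal(size=(60, 3))
y = X @ np.array([1.0, -2.0, 0.5]) + rng.normal(size=60)
print(kfold_cv(X, y, fit, predict, K=5), kfold_cv(X, y, fit, predict, K=60))
```

Setting K equal to the number of observations gives leave-one-out cross-validation, at the cost of one fit per observation.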


The prediction error and tenfold cross-validation curve estimated from a single training set.

Both curves have minima at p = 10, although the CV curve is rather flat beyond 10.

“One-standard-error” rule: choose the most parsimonious model whose error is no more than one standard error above the error of the best model.

Here it looks like a model with about p = 9 predictors would be chosen, while the true model uses p = 10.
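The one-standard-error rule is simple to state in code. The sketch below assumes that a CV mean and standard error are already available for each candidate model; the numbers are hypothetical and are not read off the figure.

```python
import numpy as np

def one_standard_error_rule(complexity, cv_mean, cv_se):
    """Return the smallest complexity whose CV error is within one SE of the minimum."""
    complexity = np.asarray(complexity)
    cv_mean = np.asarray(cv_mean)
    cv_se = np.asarray(cv_se)
    best = np.argmin(cv_mean)
    threshold = cv_mean[best] + cv_se[best]       # error of best model plus one SE
    eligible = complexity[cv_mean <= threshold]
    return eligible.min()

# Hypothetical CV results for subset sizes p = 7..12.
p = [7, 8, 9, 10, 11, 12]
cv_mean = [0.40, 0.33, 0.27, 0.25, 0.26, 0.26]
cv_se = [0.03, 0.03, 0.03, 0.03, 0.03, 0.03]
print(one_standard_error_rule(p, cv_mean, cv_se))
```

Here the minimum CV error is at p = 10, but p = 9 is within one standard error of it and is therefore the model the rule selects.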


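Generalized cross-validation

- Linear predictor: $\hat{y} = Sy$

For many linear fitting methods:

$$\frac{1}{N}\sum_{i=1}^{N}[y_i - \hat{f}^{-i}(x_i)]^2 = \frac{1}{N}\sum_{i=1}^{N}\Big[\frac{y_i - \hat{f}(x_i)}{1 - S_{ii}}\Big]^2$$

- The GCV approximation:

$$\mathrm{GCV} = \frac{1}{N}\sum_{i=1}^{N}\Big[\frac{y_i - \hat{f}(x_i)}{1 - \mathrm{trace}(S)/N}\Big]^2$$

as an approximation to the leave-one-out (K = N) cross-validation for the linear predictor under squared error loss.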
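For least squares, the leave-one-out identity and the GCV approximation can be compared against brute-force refitting. This is a minimal sketch on simulated data; the helper names `loocv_and_gcv` and `loocv_brute_force` are hypothetical.

```python
import numpy as np

def loocv_and_gcv(X, y):
    """Leave-one-out CV (via the S_ii shortcut) and GCV for least squares."""
    N = len(y)
    S = X @ np.linalg.solve(X.T @ X, X.T)         # yhat = S y
    resid = y - S @ y
    loocv = np.mean((resid / (1.0 - np.diag(S))) ** 2)
    gcv = np.mean((resid / (1.0 - np.trace(S) / N)) ** 2)
    return loocv, gcv

def loocv_brute_force(X, y):
    """Refit N times, leaving out one observation each time."""
    N = len(y)
    errs = []
    for i in range(N):
        keep = np.arange(N) != i
        beta, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        errs.append((y[i] - X[i] @ beta) ** 2)
    return np.mean(errs)

rng = np.random.default_rng(0)
X = rng.normal(size=(40, 4))
y = X @ np.array([1.0, 0.0, -1.0, 2.0]) + rng.normal(size=40)
print(loocv_and_gcv(X, y), loocv_brute_force(X, y))
```

The shortcut reproduces the brute-force value from a single fit, and GCV replaces each S_ii by the average trace(S)/N.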

The similarity between GCV and AIC can be seen from the approximation $1/(1 - x)^2 \approx 1 + 2x$.

11. Bootstrap Methods

◆ The Bootstrap process

We wish to assess the statistical accuracy of a quantity S(Z) computed from our dataset.

B training sets $Z^{*b}$, b = 1, …, B, each of size N, are drawn with replacement from the original dataset. The quantity of interest S(Z) is computed from each bootstrap training set, and the values $S(Z^{*1}), \dots, S(Z^{*B})$ are used to assess the statistical accuracy of S(Z).


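- For example, an estimate of the variance of S(Z):

$$\widehat{\mathrm{Var}}[S(Z)] = \frac{1}{B - 1}\sum_{b=1}^{B}(S(Z^{*b}) - \bar{S}^*)^2$$

where $\bar{S}^* = \sum_b S(Z^{*b})/B$.

This is a Monte Carlo estimate of the variance of S(Z) under sampling from the empirical distribution function $\hat{F}$ for the data $(z_1, z_2, \dots, z_N)$.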
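The bootstrap variance estimate might be computed along these lines. The sketch assumes a one-dimensional dataset and uses the sample mean as the statistic S(Z) purely for illustration; `bootstrap_variance` is a hypothetical helper name.

```python
import numpy as np

def bootstrap_variance(z, statistic, B=2000, seed=0):
    """Bootstrap estimate of Var[S(Z)] from B resamples drawn with replacement."""
    rng = np.random.default_rng(seed)
    N = len(z)
    values = np.empty(B)
    for b in range(B):
        z_star = z[rng.integers(0, N, size=N)]    # bootstrap dataset Z*b
        values[b] = statistic(z_star)
    return values.var(ddof=1)                     # 1/(B-1) normalization

rng = np.random.default_rng(1)
z = rng.normal(size=100)                          # hypothetical dataset
print(bootstrap_variance(z, np.mean), z.var() / len(z))
```

For the sample mean the bootstrap estimate should be close to the plug-in value (empirical variance divided by N), which is what the second printed number shows.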

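◆ Bootstrapping the prediction error

If $\hat{f}^{*b}(x_i)$ is the predicted value at $x_i$ from the model fitted to the bth bootstrap dataset,

$$\widehat{\mathrm{Err}}_{boot} = \frac{1}{B}\frac{1}{N}\sum_{b=1}^{B}\sum_{i=1}^{N} L(y_i, \hat{f}^{*b}(x_i))$$

- Bootstrap samples act as the ‘training’ sample.

- The original sample acts as the ‘test’ sample.

$\widehat{\mathrm{Err}}_{boot}$ underestimates Err because of the overlap between the ‘training’ sample and the ‘test’ sample.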


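◆ Leave-one-out bootstrap

$$\widehat{\mathrm{Err}}^{(1)} = \frac{1}{N}\sum_{i=1}^{N}\frac{1}{|C^{-i}|}\sum_{b \in C^{-i}} L(y_i, \hat{f}^{*b}(x_i))$$

Here $C^{-i}$ is the set of indices of the bootstrap samples b that do not contain observation i, and $|C^{-i}|$ is the number of such samples.

$\widehat{\mathrm{Err}}^{(1)}$ is biased upward as an estimate of Err.

The average number of distinct observations in each bootstrap sample is about 0.632·N.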
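The 0.632 figure follows from a short calculation, written out here for convenience: each of the N draws misses observation i with probability 1 − 1/N, so

```latex
\Pr\{\text{observation } i \in \text{bootstrap sample } b\}
  = 1 - \left(1 - \frac{1}{N}\right)^{N}
  \approx 1 - e^{-1} = 0.632
```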

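◆ 0.632 bootstrap estimate

$$\widehat{\mathrm{Err}}^{(.632)} = .368\cdot\mathrm{err} + .632\cdot\widehat{\mathrm{Err}}^{(1)}$$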
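A possible implementation of the leave-one-out bootstrap and the 0.632 estimate is sketched below, assuming squared error loss and a least squares learner. The function `bootstrap_632` and the `fit`/`predict` callables are hypothetical; observations that happen to appear in every bootstrap sample are skipped in the average.

```python
import numpy as np

def bootstrap_632(X, y, fit, predict, B=200, seed=0):
    """Return (err, Err1, Err632) under squared error loss."""
    rng = np.random.default_rng(seed)
    N = len(y)
    loss_sum = np.zeros(N)       # summed loss for i over samples that omit i
    count = np.zeros(N)          # |C^{-i}|, number of samples that omit i
    for b in range(B):
        idx = rng.integers(0, N, size=N)
        out = np.ones(N, dtype=bool)
        out[idx] = False         # observations not in bootstrap sample b
        if not out.any():
            continue
        model = fit(X[idx], y[idx])
        loss_sum[out] += (y[out] - predict(model, X[out])) ** 2
        count[out] += 1
    seen = count > 0             # skip any i that appears in every sample
    err1 = np.mean(loss_sum[seen] / count[seen])
    err = np.mean((y - predict(fit(X, y), X)) ** 2)   # training error
    return err, err1, 0.368 * err + 0.632 * err1

# Hypothetical learner: ordinary least squares.
fit = lambda X, y: np.linalg.lstsq(X, y, rcond=None)[0]
predict = lambda beta, X: X @ beta

rng = np.random.default_rng(1)
X = rng.normal(size=(40, 3))
y = X @ np.array([1.0, -1.0, 0.5]) + rng.normal(size=40)
print(bootstrap_632(X, y, fit, predict))
```

The 0.632 estimate is a weighted average that pulls the leave-one-out bootstrap error toward the training error.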


The figure shows the results of fivefold cross-validation and the 0.632+ bootstrap estimate in the same four problems of Figure 7.7.

Both measures perform well overall, perhaps the same or slightly worse than the AIC in Figure 7.7.