Models and Mechanisms for Tangible User ...

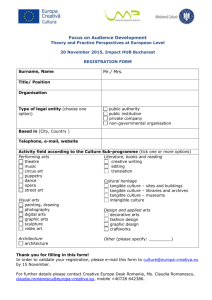
