Document 11143353

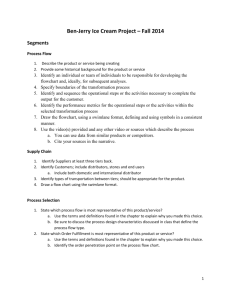
