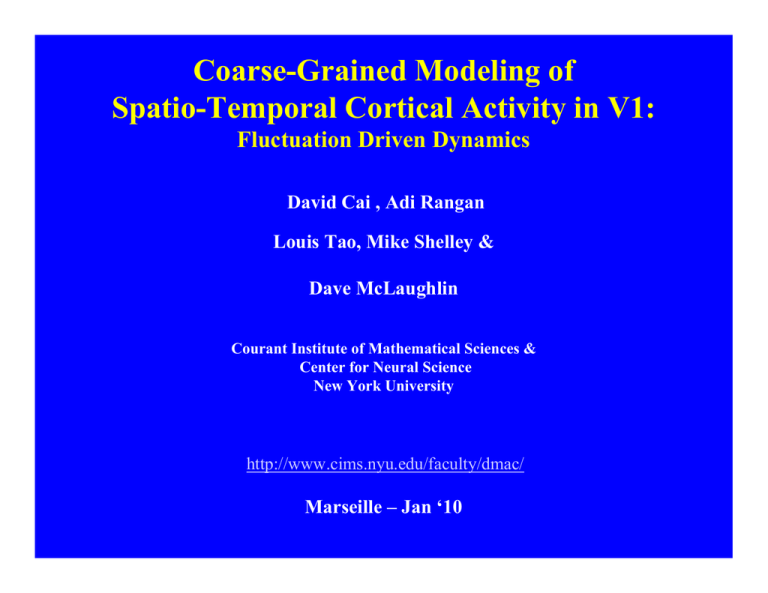

Coarse-Grained Modeling of Spatio-Temporal Cortical Activity in V1: Fluctuation Driven Dynamics


David Cai, Adi Rangan, Louis Tao, Mike Shelley & Dave McLaughlin

Courant Institute of Mathematical Sciences & Center for Neural Science, New York University

http://www.cims.nyu.edu/faculty/dmac/

Marseille – Jan ‘10

Background

For the past decade, we have been studying the “front end” of the visual system, V1 (the primary visual cortex), through large-scale computational modeling.

Visual Pathway: Retina --> LGN --> V1 --> Beyond

The “primary visual cortex (V1)” is a “layered structure”, with O(10,000) neurons per square mm, per layer.

Our Model (~16 mm², 64 pinwheels)

5 × 10^5 coupled Integrate & Fire (I&F), conductance-based point neurons

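$$\tau \frac{dV_E(x)}{dt} = -\left[V_E(x) - \varepsilon_R\right] - g_{EE}(x,t)\left[V_E(x) - \varepsilon_E\right] - g_{EI}(x,t)\left[V_E(x) - \varepsilon_I\right]$$

$$g_{EE}(x,t) = F_{LGN}(x) + f_{noise}(x) + \underbrace{S^{S}_{EE}\sum_{x'} K^{S}_{x,x'}\sum_{l} G^{S}_{E}\left(t - T^{l}_{f}(x')\right)}_{\text{Local}} + \underbrace{S^{L}_{EE}\sum_{x'} K^{L}_{x,x'}\sum_{l} G^{L}_{E}\left(t - T^{l}_{f}(x')\right)}_{\text{Long Range}}$$

Spontaneous activity: $F_{LGN} = 0$.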
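Conductance Time Course:

[Figure: conductance time course $G(t - T^{l}_{f})$ after a spike at time $T^{l}_{f}$, shown with the membrane potential between $\varepsilon_R$ and the threshold $V_T$.]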

Nonlinearity from spike-threshold:

Whenever $V(x,t) = V_T$, the neuron “fires”, its spike time $T^{l}_{f}$ is recorded, and $V(x,t)$ is reset to $\varepsilon_R$, where it is held for an absolute refractory period $\tau_{ref}$.
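The threshold-reset-refractory rule can be illustrated on a single neuron with constant conductances. This is a minimal sketch, not the model's integrator. It assumes dimensionless voltages with $\varepsilon_R = 0$ and $V_T = 1$, and hypothetical reversal potentials $\varepsilon_E = 14/3$ and $\varepsilon_I = -2/3$. It also uses an exact exponential step, which is valid only while the conductances are held constant.

```python
import math

def simulate_if(g_E, g_I=0.0, tau=0.02, eps_R=0.0, eps_E=14/3, eps_I=-2/3,
                V_T=1.0, tau_ref=0.002, T=1.0, dt=1e-5):
    """Spike times (s) of one conductance-based I&F neuron with constant g_E, g_I.

    Assumed (hypothetical) dimensionless values: eps_R = 0, V_T = 1,
    eps_E = 14/3, eps_I = -2/3; tau and tau_ref in seconds.
    """
    g_tot = 1.0 + g_E + g_I
    # effective reversal potential that V relaxes toward between spikes
    v_inf = (eps_R + g_E * eps_E + g_I * eps_I) / g_tot
    decay = math.exp(-g_tot * dt / tau)      # exact relaxation over one step
    ref_steps = int(round(tau_ref / dt))
    v, hold, spikes = eps_R, 0, []
    for k in range(int(round(T / dt))):
        if hold > 0:                         # held at eps_R during the refractory period
            hold -= 1
            continue
        v = v_inf + (v - v_inf) * decay
        if v >= V_T:                         # threshold: record spike, reset, start refractory hold
            spikes.append((k + 1) * dt)
            v, hold = eps_R, ref_steps
    return spikes
```

With `g_E = 1.0`, the neuron fires regularly at roughly 130 Hz. With `g_E = 0.2`, the effective reversal potential stays below threshold, and the neuron is silent.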

Local:

$$G^{S}(t) = \frac{f}{\sigma_d - \sigma_r}\left(\exp\left[-\frac{t}{\sigma_d}\right] - \exp\left[-\frac{t}{\sigma_r}\right]\right)\theta(t)$$

Long-range:

$$G^{L}_{E}(t) = (1 - \Lambda)\, G_{AMPA}(t) + \Lambda\, G_{NMDA}(t)$$

AMPA: ~5 ms; NMDA: ~80-100 ms
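The two conductance time courses can be evaluated directly. In this sketch, the AMPA and NMDA components are both assumed to have the same difference-of-exponentials shape as $G^S$. The decay times (5 ms and 90 ms) follow the slide. The rise times (1 ms and 5 ms) and $\Lambda = 0.5$ are hypothetical placeholders. A useful property of the normalization is that the time integral of $G^S$ equals $f$.

```python
import numpy as np

def g_local(t, f=1.0, sigma_r=0.001, sigma_d=0.005):
    """G^S(t) = f/(sigma_d - sigma_r) (exp(-t/sigma_d) - exp(-t/sigma_r)) theta(t).

    Clipping t at 0 implements theta(t), because the bracket vanishes at t = 0.
    The time integral of G^S equals f.
    """
    t = np.maximum(np.asarray(t, dtype=float), 0.0)
    return f / (sigma_d - sigma_r) * (np.exp(-t / sigma_d) - np.exp(-t / sigma_r))

def g_long(t, lam=0.5, f=1.0):
    """G^L(t) = (1 - Lambda) G_AMPA(t) + Lambda G_NMDA(t).

    Decay times 5 ms (AMPA) and 90 ms (NMDA) follow the slide; the rise times
    (1 ms, 5 ms) and the default lam = 0.5 are hypothetical.
    """
    g_ampa = g_local(t, f, sigma_r=0.001, sigma_d=0.005)
    g_nmda = g_local(t, f, sigma_r=0.005, sigma_d=0.090)
    return (1.0 - lam) * g_ampa + lam * g_nmda
```

Because both components integrate to $f$, $\Lambda$ shifts charge from the fast AMPA time scale to the slow NMDA time scale without changing the total charge per spike.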

Summary Description of the Model

• Large scale, single layer model (16 mm²; 64 orientation pinwheels)
• Fast integrator (advance in computational technology)
• Conductance based, I&F point neurons
  – O(10^5) neurons
  – Simple and complex cells
  – Excitatory & Inhibitory neurons
• Local connections
  – Isotropic
  – Inhibition: GABA_A;
  – Excitation: AMPA & NMDA;
  – Excitatory length scales longer than (comparable with) inhibitory
• Long range connections
  – Excitatory
  – NMDA & AMPA
  – Orientation Specific
• All connections sparse

Network Architecture

Tiling of Orientation Hypercolumns

Lateral Connections and Orientation -- Tree Shrew (Bosking, Zhang, Schofield & Fitzpatrick, J. Neuroscience, 1997)

Short Range (Excitatory and inhibitory):

$$K^{S}_{x,x'} = \frac{1}{2\pi\sigma_{EE}^{2}}\exp\left(-\frac{|x - x'|^{2}}{2\sigma_{EE}^{2}}\right), \qquad \sigma_{EE},\ \sigma_{EI} \sim 100\text{–}300\ \mu\text{m}$$

Long Range, Excitatory Connections:
1. Gaussian, $\sigma_L \sim 1500\ \mu\text{m}$
2. NMDA + AMPA
3. Orientation-specific, anisotropic
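A sparse, isotropic short-range connectivity can be sketched by sampling presynaptic partners with probability proportional to the Gaussian kernel. This assumes the normalized form $K^S = \exp(-|x - x'|^2 / 2\sigma^2) / (2\pi\sigma^2)$. The positions, $\sigma = 0.2$ mm, and the fixed number of synapses per cell are hypothetical choices for illustration, not the model's wiring algorithm.

```python
import numpy as np

def short_range_kernel(dx, dy, sigma):
    """Normalized isotropic 2-D Gaussian K^S for a displacement (dx, dy), same units as sigma."""
    r2 = dx**2 + dy**2
    return np.exp(-r2 / (2.0 * sigma**2)) / (2.0 * np.pi * sigma**2)

def sparse_connections(pos_post, pos_pre, sigma, n_syn, rng):
    """For each postsynaptic position, draw n_syn distinct presynaptic indices
    with probability proportional to K^S (hypothetical sparse wiring rule)."""
    conns = []
    for p in pos_post:
        d = pos_pre - p
        w = short_range_kernel(d[:, 0], d[:, 1], sigma)
        conns.append(rng.choice(len(pos_pre), size=n_syn, replace=False, p=w / w.sum()))
    return conns
```

Sparseness comes from `n_syn` being much smaller than the number of candidate cells. The kernel only sets how likely nearby versus distant cells are to be chosen.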

Summary Performance of the Model

• Not balanced, but over-inhibited, with large conductances
• Primarily a feed-forward network,
  – but with strong lateral recurrent connections
• Intermittent desuppressed state (IDS)
  – High gain, but “pre-hysteresis” regime
  – In which fluctuations dominate
• One model, when in (from) the IDS state, reproduces in detail
  – Linear/nonlinear behavior of simple/complex cells
  – Orientation selectivity
  – Voltage sensitive dye observations:
    • Spontaneous activity
    • Response to line motion illusion stimuli

Coarse-Grained Asymptotic Representations

Needed for computational efficiency, to “scale up” to
• Larger lateral area
• Multiple layers

• All methods for coarse-graining begin by first tiling the cortical layer with coarse-grained (CG) patches.

Most Common Coarse-Grained Reduction: Average firing rate models
[Cowan & Wilson (’72); …; Shelley & McLaughlin (’02)]

The average firing rate of an excitatory (inhibitory) neuron within the coarse-grained patch located at x in the cortical layer is $m_\sigma(x,t)$, σ = E, I.

Such mean-field approaches cannot capture fluctuation-driven dynamics, that is, dynamics driven by temporal fluctuations.

Cortical networks have very “noisy” dynamics

• Strong temporal fluctuations

• On synaptic timescale

• Fluctuation driven spiking

Experimental Observation

Fluctuations in Orientation Tuning (Cat data from Ferster’s Lab)
Ref: Anderson, Lampl, Gillespie, Ferster, Science, 1968-72 (2000)

[Figure: fluctuation-driven spiking (very noisy dynamics, on the synaptic time scale). Solid: average (over 72 cycles); dashed: 10 temporal trajectories.]

• To describe these networks accurately and efficiently, temporal fluctuations must be retained in a coarse-grained representation.
• “Pdf” representations, $\rho_\sigma(v,g;x,t)$, σ = E, I, will retain temporal fluctuations,
• but will not be very efficient numerically.
• Needed: a reduction which retains the temporal
  1. Means &
  2. Variances

Kinetic Theory provides this representation.
Ref: Cai, Tao, Shelley & McLaughlin, PNAS, pp 7757-7762 (2004)

Kinetic Theory begins from PDF representations $\rho_\sigma(v,g;x,t)$, σ = E, I
• Knight & Sirovich;
• Nykamp & Tranchina, Neural Comp (2001);
• Haskell, Nykamp & Tranchina, Network (2001);

• For convenience of presentation, I’ll sketch the derivation for a single CG patch with 200 excitatory Integrate & Fire neurons.
• The results extend to interacting CG cells which include inhibition, as well as different cell types such as “simple” & “complex” cells.
• First, replace the 200 neurons in this CG patch by an equivalent pdf representation.
• Then derive kinetic theory from the pdf representation.

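• N excitatory neurons (within one CG cell)
• Random coupling throughout the CG cell;
• AMPA synapses (with a short time scale σ)

$$\tau\,\partial_t v_i = -(v_i - V_R) - g_i\,(v_i - V_E)$$

$$\sigma\,\partial_t g_i = -g_i + \sum_l f\,\delta(t - t_l) + \frac{S_a}{N}\sum_{l,k}\delta(t - t_{lk})$$

$$v_i(t_{ik}) = 1; \qquad v_i(t = t_{ik}^{+}) = 0$$

$$\rho(g,v,t) \equiv N^{-1}\sum_{i=1}^{N}\mathbb{E}\left\{\delta[v - v_i(t)]\,\delta[g - g_i(t)]\right\},$$

with the expectation $\mathbb{E}$ taken over the Poisson spike train $\{t_l\}$.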


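Evolution of the pdf ρ(g,v,t), assuming (i) N ≫ 1 and (ii) the total input to each neuron is a (modulated) Poisson spike train:

$$\partial_t\rho = \tau^{-1}\partial_v\left\{\left[(v - V_R) + g\,(v - V_E)\right]\rho\right\} + \partial_g\left\{\frac{g}{\sigma}\,\rho\right\} + \nu_0(t)\left[\rho\left(v, g - \frac{f}{\sigma}, t\right) - \rho(v,g,t)\right] + N m(t)\left[\rho\left(v, g - \frac{S_a}{N\sigma}, t\right) - \rho(v,g,t)\right],$$

where $\nu_0(t)$ is the modulated rate of the incoming Poisson spike train and $m(t)$ is the average firing rate of the neurons in the CG cell:

$$m(t) = \int J^{(v)}(v,g;\rho)\Big|_{v=1}\,dg, \qquad J^{(v)}(v,g;\rho) = -\tau^{-1}\left[(v - V_R) + g\,(v - V_E)\right]\rho.$$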

For N ≫ 1, f ≪ 1, and $\nu_0 f = O(1)$, expanding the jump terms gives the diffusion approximation:

$$\partial_t\rho = \tau^{-1}\partial_v\left\{\left[(v - V_R) + g\,(v - V_E)\right]\rho\right\} + \partial_g\left\{\frac{g - G(t)}{\sigma}\,\rho\right\} + \frac{\sigma_g^2}{\sigma}\,\partial_{gg}\rho + \dots$$

where

$$\sigma_g^2 = \frac{\nu_0(t)\,f^2}{2\sigma} + \frac{m(t)\,S_a^2}{2N\sigma}, \qquad G(t) = \nu_0(t)\,f + m(t)\,S_a.$$
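The external-input parts of $G(t)$ and $\sigma_g^2$ can be checked numerically. This check relies on Campbell's theorem: shot noise $\sigma\,dg/dt = -g + f\sum_l \delta(t - t_l)$ driven by Poisson spikes at rate $\nu_0$ has mean $\nu_0 f$ and variance $\nu_0 f^2/(2\sigma)$. The sketch assumes a constant rate and no recurrent ($S_a$) input. The parameter values are hypothetical.

```python
import math
import numpy as np

def shot_noise_conductance(nu0, f, sigma, T=20.0, dt=5e-5, seed=0):
    """Sample path of sigma dg/dt = -g + f * sum_l delta(t - t_l), Poisson input at rate nu0 (Hz)."""
    rng = np.random.default_rng(seed)
    n = int(round(T / dt))
    kicks = rng.poisson(nu0 * dt, size=n).tolist()   # input spikes arriving in each step
    decay = math.exp(-dt / sigma)                    # exact decay of g over one step
    g = np.empty(n)
    g_cur = nu0 * f                                  # start at the predicted mean G
    for k in range(n):
        g_cur = g_cur * decay + kicks[k] * f / sigma # each input spike raises g by f / sigma
        g[k] = g_cur
    return g
```

With `nu0 = 1000` Hz, `f = 1e-3` s and `sigma = 5e-3` s, the predictions are $G = 1$ and $\sigma_g^2 = 0.1$. The sample mean and variance land close to these values, up to small time-step and sampling errors.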

Kinetic Theory Begins from Moments

• $\rho(g,v,t)$
• $\rho^{(g)}(g,t) = \int \rho(g,v,t)\,dv$
• $\rho^{(v)}(v,t) = \int \rho(g,v,t)\,dg$
• $\mu_1^{(v)}(v,t) = \int g\,\rho(g,t\,|\,v)\,dg$, where $\rho(g,v,t) = \rho(g,t\,|\,v)\,\rho^{(v)}(v,t)$.

First, integrating the ρ(g,v,t) equation over v yields:

$$\sigma\,\partial_t\rho^{(g)} = \partial_g\left\{\left[g - G(t)\right]\rho^{(g)}\right\} + \sigma_g^2\,\partial_{gg}\rho^{(g)}$$

Fluctuations in g are Gaussian

[Figure: PDF of g, $P(g_{EXC})$ vs. $g_{EXC}$ (sec⁻¹), compared with a Gaussian.]
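The Gaussian shape follows from the $\rho^{(g)}$ equation itself. Setting $\partial_t = 0$, with $G$ and $\sigma_g^2$ held constant and zero probability flux as $g \to \pm\infty$, gives:

$$
\begin{aligned}
0 &= \partial_g\left\{\left[g - G\right]\rho^{(g)}_\infty + \sigma_g^2\,\partial_g\rho^{(g)}_\infty\right\}
\;\Rightarrow\; \left[g - G\right]\rho^{(g)}_\infty + \sigma_g^2\,\partial_g\rho^{(g)}_\infty = 0,\\
\rho^{(g)}_\infty(g) &= \frac{1}{\sqrt{2\pi\sigma_g^2}}\exp\left[-\frac{(g - G)^2}{2\sigma_g^2}\right].
\end{aligned}
$$

So the stationary conductance distribution has mean $G$ and variance exactly $\sigma_g^2$. Its mean relaxes toward $G$ on the synaptic time scale σ.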

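Integrating the ρ(g,v,t) equation over g yields:

$$\partial_t\rho^{(v)} = \tau^{-1}\partial_v\left[(v - V_R)\,\rho^{(v)} + \mu_1^{(v)}\,(v - V_E)\,\rho^{(v)}\right]$$

Multiplying the ρ(g,v,t) equation by g and integrating over g yields an equation for $\mu_1^{(v)}(v,t) = \int g\,\rho(g,t\,|\,v)\,dg$, where $\rho(g,v,t) = \rho(g,t\,|\,v)\,\rho^{(v)}(v,t)$:

$$\partial_t\mu_1^{(v)} = -\sigma^{-1}\left[\mu_1^{(v)} - G(t)\right] + \tau^{-1}\left\{\left[(v - V_R) + \mu_1^{(v)}(v - V_E)\right]\partial_v\mu_1^{(v)}\right\} + \frac{\Sigma^2(v)}{\tau\,\rho^{(v)}}\,\partial_v\left[(v - V_E)\,\rho^{(v)}\right] + \tau^{-1}(v - V_E)\,\partial_v\Sigma^2(v),$$

where $\Sigma^2(v) = \mu_2^{(v)} - \left(\mu_1^{(v)}\right)^2$.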

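Closure:

(i) $\partial_v\Sigma^2(v) = 0$; (ii) $\Sigma^2(v) = \sigma_g^2$.

One obtains:

$$\partial_t\rho^{(v)} = \tau^{-1}\partial_v\left[(v - V_R)\,\rho^{(v)} + \mu_1^{(v)}(v - V_E)\,\rho^{(v)}\right]$$

$$\partial_t\mu_1^{(v)} = -\sigma^{-1}\left[\mu_1^{(v)} - G(t)\right] + \tau^{-1}\left\{\left[(v - V_R) + \mu_1^{(v)}(v - V_E)\right]\partial_v\mu_1^{(v)}\right\} + \frac{\sigma_g^2}{\tau\,\rho^{(v)}}\,\partial_v\left[(v - V_E)\,\rho^{(v)}\right]$$

These are solved together with a diffusion equation for $\rho^{(g)}(g,t)$:

$$\sigma\,\partial_t\rho^{(g)} = \partial_g\left\{\left[g - G(t)\right]\rho^{(g)}\right\} + \sigma_g^2\,\partial_{gg}\rho^{(g)}$$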
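As a consistency check, consider the mean-driven limit $\sigma_g^2 \to 0$ with constant G. This check assumes $V_R = 0$, so the leak reversal coincides with the reset value 0. It also assumes $v_s = G V_E/(1+G) > 1$. Then $\mu_1^{(v)} \equiv G$ solves the $\mu_1$ equation. The steady $\rho^{(v)}$ carries a constant flux between reset and threshold, and that flux equals the firing rate m (ignoring the refractory period):

$$
\begin{aligned}
m &= -\tau^{-1}\left[(1+G)\,v - G V_E\right]\rho^{(v)}(v), \qquad 0 < v < 1,\\
1 &= \int_0^1 \rho^{(v)}\,dv = m\tau\int_0^1 \frac{dv}{G V_E - (1+G)\,v} = \frac{m\tau}{1+G}\,\ln\frac{v_s}{v_s - 1},\\
m &= \frac{1+G}{\tau\,\ln\left[v_s/(v_s - 1)\right]}.
\end{aligned}
$$

When $v_s \le 1$, the voltage never reaches threshold and m = 0. This is the hard-thresholded, mean-driven rate.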

Fluctuation-Driven Dynamics

[Figure: (a) PDF of v, ρ(V) vs. membrane potential V, for N = 75: I&F simulation (solid) vs. kinetic theory and Fokker-Planck; inset: ρ(g_EXC) vs. g_EXC (sec⁻¹). (b) Firing rate (Hz) vs. G_input (sec⁻¹): simulation, theory, zero-σ theory, and the mean-driven limit (N → ∞, f → 0; hard thresholding). Parameters: N = 75, σ = 5 msec, S = 0.05, f = 0.01.]
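The key point of this figure is that a neuron can fire even when the mean conductance lies below the mean-driven threshold. This can be reproduced in a minimal sketch. The sketch assumes the single-patch model with external input only (no recurrent $S_a$ term), $V_R = 0$, and hypothetical values $V_E = 14/3$, τ = 20 ms, and σ = 5 ms. The jump parameter f is also hypothetical, chosen so that the conductance fluctuations are sizable. The mean conductance $G = \nu_0 f = 0.25$ is set below $G^* = 1/(V_E - 1) \approx 0.27$, so the hard-thresholded rate is zero. The simulated neuron still fires, because fluctuations in g carry v across threshold.

```python
import math
import numpy as np

def fluctuation_driven_rate(G=0.25, f=4e-4, sigma=0.005, tau=0.02, V_E=14/3,
                            T=5.0, dt=5e-5, seed=1):
    """Firing rate (Hz) of one I&F neuron driven by Poisson shot-noise conductance with mean G.

    Hypothetical parameters; V_R = 0, threshold 1, reset 0 as in the patch model.
    """
    rng = np.random.default_rng(seed)
    nu0 = G / f                                          # input rate chosen so that nu0 * f = G
    n_steps = int(round(T / dt))
    kicks = rng.poisson(nu0 * dt, size=n_steps).tolist() # input spikes in each time step
    decay = math.exp(-dt / sigma)
    v, g, n_spikes = 0.0, G, 0
    for k in range(n_steps):
        g = g * decay + kicks[k] * f / sigma             # exact decay plus jumps of f / sigma
        v += dt / tau * (-v - g * (v - V_E))             # forward Euler step, V_R = 0
        if v >= 1.0:                                     # threshold 1, reset to 0
            n_spikes += 1
            v = 0.0
    return n_spikes / T

# mean-driven threshold: the steady potential G V_E / (1 + G) exceeds 1 only if G > G_star
G_star = 1.0 / (14/3 - 1.0)
```

Lowering f while keeping $\nu_0 f$ fixed shrinks $\sigma_g^2$ toward the mean-driven limit, and the rate falls toward zero.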

Bistability and Hysteresis

• Network of Simple, Excitatory only

[Figure: fluctuation-driven hysteresis for relatively strong cortical coupling. Firing rate (Hz) vs. G_input for the mean-driven (N → ∞) and fluctuation-driven (N = 16) networks; a second panel zooms in on G_input between 8 and 9.]
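In the mean-driven limit, a neuron's rate is a hard-thresholded function m = F(G) of its total mean conductance: $m = (1+G)/(\tau\ln[v_s/(v_s - 1)])$ when $v_s = G V_E/(1+G) > 1$, and 0 otherwise. A strongly coupled excitatory patch must therefore satisfy the fixed-point condition $m = F(G_{input} + S\,m)$. The sketch below counts the solutions of this condition by scanning for sign changes. It assumes $V_R = 0$, $V_E = 14/3$, τ = 20 ms, a hypothetical 2 ms refractory period (so $F = 1/(\tau_{ref} + T_{up})$), and a hypothetical coupling S in conductance units per Hz. Three solutions (quiescent, unstable, and high-rate) signal bistability. Sweeping $G_{input}$ up and down then follows different branches, which produces the hysteresis loop.

```python
import math

def mean_driven_rate(G, tau=0.02, tau_ref=0.002, V_E=14/3):
    """Hard-thresholded rate (Hz) of tau dv/dt = -v - G (v - V_E), threshold 1, reset 0."""
    v_s = G * V_E / (1.0 + G)                  # steady potential the voltage relaxes toward
    if v_s <= 1.0:
        return 0.0                             # never reaches threshold
    T_up = tau / (1.0 + G) * math.log(v_s / (v_s - 1.0))
    return 1.0 / (tau_ref + T_up)

def fixed_points(G_in, S, m_max=500.0, n=50_000):
    """Approximate solutions of m = F(G_in + S * m) on [0, m_max] (Hz)."""
    def h(m):
        return mean_driven_rate(G_in + S * m) - m
    roots = [0.0] if mean_driven_rate(G_in) == 0.0 else []   # quiescent state
    dm = m_max / n
    prev = h(0.0)
    for k in range(1, n + 1):
        m = k * dm
        cur = h(m)
        if (prev < 0.0 <= cur) or (prev > 0.0 >= cur):       # sign change in (m - dm, m]
            roots.append(m - 0.5 * dm)
        prev = cur
    return roots
```

With S = 0.01, `fixed_points(0.2, 0.01)` returns three rates: 0, roughly 7 Hz (unstable), and roughly 265 Hz. `fixed_points(1.0, 0.01)` returns only the high-rate state.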

Realistic Extensions

Extensions to
• many interacting coarse-grained local patches,
• with excitatory and inhibitory neurons,
• and with neurons of different types (simple & complex).

The pdf then takes the form $\rho_{\sigma,\nu}(v,g;x,t)$, where x is the coarse-grained label, σ = E, I, and ν labels the cell type.

Three Dynamic Regimes of Cortical Amplification (Kinetic Theory):
1) Weak Cortical Amplification: no bistability/hysteresis
2) Near-Critical Cortical Amplification
3) Strong Cortical Amplification: bistability/hysteresis

[Figure: fluctuation theory, effect of C_ee. Firing rate (Hz) vs. G_input (sec⁻¹) for regimes (1), (2) and (3).]

O(100) cells; 25% inhibitory; 75% excitatory.
Excitatory cells shown: complex cells (solid lines); simple cells (dotted lines).

[Figure: Integrate & Fire networks. Complex-cell firing rate (spikes/sec) vs. G_input (sec⁻¹) for N = 25, 50, 100 and 200.]

Firing rate vs. input conductance for 4 networks with varying pN: 25 (blue), 50 (magenta), 100 (black), 200 (red). Hysteresis occurs for pN = 100 and 200. Fixed synaptic coupling S_exc/pN.

Summary

• Kinetic Theory is a numerically efficient (10^3 to 10^5 times more efficient than I&F) and remarkably accurate method for “scale-up”.

Ref: PNAS, pp 7757-7762 (2004)

• Kinetic Theory introduces no new free parameters into the model, and has a large dynamic range, from the rapid-firing “mean-driven” regime to a fluctuation-driven regime.

Too good to be true? What’s missing?

• As in many moment closures:
  – The zeroth moment is more accurate than the first moment;
  – Existence can fail (Ly & Tranchina, 2007);
• That is, at low but realistic firing rates, the equations are too rigid to have steady-state solutions which satisfy the boundary conditions;
• Diffusion (in v) fixes this existence problem by introducing boundary layers;
• But a far more serious problem: Kinetic Theory does not capture detailed “spike-timing” information.


Most likely, on very short time scales, the cortex works through neurons correlated by detailed spike timing.

Recent experimental and computational results have brought into focus the importance of space-time patterns of cortical activity caused by sequential firing events.

For example, imaging with voltage-sensitive dyes:

[Figure: voltage-sensitive dye imaging of V1. Stimulus; dye signal (ΔF/F %, with a membrane-potential scale from −72 to −66 mV) vs. time (seconds); single-cell firing rate vs. stimulus angle (0 to 180); LGN input.]

Voltage Sensitive Dyes: Indicators of spatiotemporal activity

• Experiments in Grinvald’s lab have shown that, in (anesthetized) cat primary visual cortex, patterns of spontaneous cortical activity are very similar to patterns of activity evoked by external stimuli, except that
  • the patterns of spontaneous activity are only “meta-stable”, drifting as well as cycling irregularly between distinct patterns.

(Tsodyks, Kenet, Grinvald & Arieli; Science (1999))

• PCS (Preferred Cortical State) of a neuron: $V_P(x;\theta_{op}) \equiv \sum_i V(x, T_f^i;\theta_{op})/N_f$ under optimal drive.
• Spike-triggered Spontaneous Pattern vs. Evoked: $V_{st}(x) \equiv \sum_i V(x, T_f^i)/N_f$
• SI (Similarity Index): the correlation coefficient $\rho(\theta_{op}; t)$ between the instantaneous $V(x,t)$ and the PCS $V_P(x;\theta_{op})$.
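The spike-triggered pattern and the similarity index can be sketched in NumPy. This assumes the dye-imaging data are stored in a hypothetical array `frames` of shape (time, height, width), and that the spike times $T_f^i$ have already been converted to frame indices. The SI is computed here as the ordinary Pearson correlation over pixels, one frame at a time. The function names are illustrative.

```python
import numpy as np

def spike_triggered_pattern(frames, spike_frames):
    """V_st(x) = sum_i V(x, T_f^i) / N_f: the average imaging frame at the spike times."""
    return frames[np.asarray(spike_frames)].mean(axis=0)

def similarity_index(frames, pcs):
    """rho(t): Pearson correlation over pixels between each frame V(x, t) and the PCS map."""
    a = frames.reshape(len(frames), -1).astype(float)
    a -= a.mean(axis=1, keepdims=True)           # remove each frame's spatial mean
    b = pcs.ravel().astype(float)
    b -= b.mean()
    return (a @ b) / (np.linalg.norm(a, axis=1) * np.linalg.norm(b))
```

The PCS itself would be obtained the same way, by applying `spike_triggered_pattern` to frames recorded under optimal drive.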

Cortical mechanisms behind IDS State

Ref: Cai, Rangan, McLaughlin, PNAS ‘05

• Sparse connections; strong local inhibition

-- which yield fluctuation dominated dynamics

• Long range (LR) orientation specific excitatory

connections, of moderate strength

-- which set spatial and orientation patterns

• LR connections with NMDA receptors

-- which set the time scales of the patterns

• Key point: Sparse intermittent firings, at low firing rates

-- which recruit nearby packets of neurons to fire

-- which cause sequential long distance firing events

-- which in turn generate strongly space-time

correlated NMDA conductance patterns

Line-Motion Illusion:

Similarly, in computer models, sequential firing events over long lateral distances cause spatiotemporal patterns of cortical activity like those observed (in voltage-sensitive dye experiments in anesthetized cats) after the stimulation which causes the perception of the line motion illusion.

Again, in the computer simulations (PNAS, 2005), these

patterns of cortical activity are caused by sequential firing

events, over long lateral cortical distances.

Experiment: Line Motion Illusion

• Input: present the stimulus
• Output: image the cortical response

[Figure: stimulus sequence and voltage-sensitive dye images vs. time (Grinvald et al.).]

Cortical mechanisms which produce the cortical

activity of the “line motion illusion”

Ref: Cai, Rangan, McLaughlin; PNAS ‘05

• From the IDS cortical operating point

• Flashed square sets up localized cortical activity – a new

cortical operating point

• From which the cortex responds to the flashed bar

-- LR orientation specific excitatory connections

-- on the 80 ms NMDA time scale

-- interacting with local fast inhibition

-- at “high conductance” g ~ v

• To produce the illusion of motion – the “line motion

illusion”

• Key Point: sparse, correlated firing events acting in sequence, together with NMDA conductances

• Coarse-grained theories involve local averaging in both space and time.
• Hence, coarse-grained theories average out detailed spike-timing information.
• This is OK for “rate codes”, but if spike-timing statistics are to be studied, the coarse-grained approach must be modified.

PT #2: Embedded point neurons will capture these statistical firing properties
[Ref: Cai, Tao & McLaughlin, PNAS (2004)]

• For “scale-up”: computer efficiency,
• Yet maintaining the statistical firing properties of multiple neurons
• Model especially relevant for biologically distinguished sparse, strong sub-networks, perhaps such as long-range connections
• Point neurons: embedded in, and fully interacting with, coarse-grained kinetic theory,
  or, when kinetic theory is accurate by itself, embedded as “test neurons”

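• Kinetic equations for the coarse-grained (B) populations, λ = E, I:

$$\frac{\partial}{\partial t}\rho_\lambda^{B}(v) = \frac{\partial}{\partial v}\left[U_\lambda\left(v, \mu_{\lambda E}^{B}, \mu_{\lambda I}^{B}\right)\rho_\lambda^{B}(v)\right]$$

$$\frac{\partial}{\partial t}\mu_{\lambda E}^{B}(v) = U_\lambda\left(v, \mu_{\lambda E}^{B}, \mu_{\lambda I}^{B}\right)\frac{\partial}{\partial v}\mu_{\lambda E}^{B}(v) - \frac{1}{\sigma_E}\left[\mu_{\lambda E}^{B}(v) - g_{\lambda E}^{B}(t)\right] + \frac{\left(\sigma_{\lambda E}^{B}\right)^2}{\rho_\lambda^{B}(v)}\frac{\partial}{\partial v}\left[\left(\frac{v - \varepsilon_E}{\tau}\right)\rho_\lambda^{B}(v)\right]$$

$$\frac{\partial}{\partial t}\mu_{\lambda I}^{B}(v) = U_\lambda\left(v, \mu_{\lambda E}^{B}, \mu_{\lambda I}^{B}\right)\frac{\partial}{\partial v}\mu_{\lambda I}^{B}(v) - \frac{1}{\sigma_I}\left[\mu_{\lambda I}^{B}(v) - g_{\lambda I}^{B}(t)\right] + \frac{\left(\sigma_{\lambda I}^{B}\right)^2}{\rho_\lambda^{B}(v)}\frac{\partial}{\partial v}\left[\left(\frac{v - \varepsilon_I}{\tau}\right)\rho_\lambda^{B}(v)\right]$$

with mean conductances

$$g_{\lambda E}^{B}(t) = f_E^{B}\,\nu_{0E}^{B}(t) + S_{\lambda E}^{B}\,m_E^{B}(t) + \frac{S_{\lambda E}^{BD}}{N_E^{D}}\int_{-\infty}^{t} e^{-\frac{t-s}{\sigma_E}}\sum_{j\in P_E^{D}}\sum_\mu p_j^\mu\,\delta\left(s - t_j^\mu\right)ds,$$

$$g_{\lambda I}^{B}(t) = f_I^{B}\,\nu_{0I}^{B}(t) + S_{\lambda I}^{B}\,m_I^{B}(t) + \frac{S_{\lambda I}^{BD}}{N_I^{D}}\int_{-\infty}^{t} e^{-\frac{t-s}{\sigma_I}}\sum_{k\in P_I^{D}}\sum_\mu p_k^\mu\,\delta\left(s - T_k^\mu\right)ds,$$

and variances

$$\left(\sigma_{\lambda E}^{B}\right)^2(t) = \frac{1}{2\sigma_E}\left[\left(f_E^{B}\right)^2\nu_{0E}^{B}(t) + \frac{\left(S_{\lambda E}^{B}\right)^2}{pN_E^{B}}\,m_E^{B}(t) + \frac{\left(S_{\lambda E}^{BD}\right)^2}{pN_E^{D}}\int_{-\infty}^{t} e^{-\frac{t-s}{\sigma_E}}\sum_{j\in P_E^{D}}\sum_\mu p_j^\mu\,\delta\left(s - t_j^\mu\right)ds\right],$$

$$\left(\sigma_{\lambda I}^{B}\right)^2(t) = \frac{1}{2\sigma_I}\left[\left(f_I^{B}\right)^2\nu_{0I}^{B}(t) + \frac{\left(S_{\lambda I}^{B}\right)^2}{pN_I^{B}}\,m_I^{B}(t) + \frac{\left(S_{\lambda I}^{BD}\right)^2}{pN_I^{D}}\int_{-\infty}^{t} e^{-\frac{t-s}{\sigma_I}}\sum_{k\in P_I^{D}}\sum_\mu p_k^\mu\,\delta\left(s - T_k^\mu\right)ds\right].$$

• Embedded point neurons (D):

$$\tau\frac{dV_i^{\lambda D}}{dt} = -\left(V_i^{\lambda D} - \varepsilon_r\right) - G_i^{\lambda E,D}(t)\left(V_i^{\lambda D} - \varepsilon_E\right) - G_i^{\lambda I,D}(t)\left(V_i^{\lambda D} - \varepsilon_I\right),$$

$$\sigma_E\frac{dG_i^{\lambda E,D}}{dt} = -G_i^{\lambda E,D} + f_E\sum_\mu\delta\left(t - t_\mu\right) + \frac{S_{\lambda E}^{D}}{N_E^{D}}\sum_{j\in P_E^{D}}\sum_\mu p_j^\mu\,\delta\left(t - t_j^\mu\right) + S_{\lambda E}^{DB}\sum_{j\in P_E^{B}}\sum_\mu p_j^\mu\,\delta\left(t - t'^{\mu}_{j}\right),$$

$$\sigma_I\frac{dG_i^{\lambda I,D}}{dt} = -G_i^{\lambda I,D} + f_I\sum_\mu\delta\left(t - T_\mu\right) + \frac{S_{\lambda I}^{D}}{N_I^{D}}\sum_{k\in P_I^{D}}\sum_\mu p_k^\mu\,\delta\left(t - T_k^\mu\right) + S_{\lambda I}^{DB}\sum_{k\in P_I^{B}}\sum_\mu p_k^\mu\,\delta\left(t - T'^{\mu}_{k}\right),$$

with λ = E, I; α = simple, complex; $\beta_\alpha = 1$ for α = simple and $\beta_\alpha = 0$ for α = complex.

Poisson spike trains $\{t'^{\mu\alpha'}_{j}\}$, $\{T'^{\mu\alpha'}_{j}\}$ are reconstructed from the rates $m_E^{\alpha' B} N_E^{\alpha' B}$, $m_I^{\alpha' B} N_I^{\alpha' B}$.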
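The last step replaces each coarse-grained background population by Poisson spikes at total rate $m^B N^B$. It can be sketched as follows, as an illustration under stated assumptions. The sketch uses one excitatory background population, a time-binned rate array, all weights $p_j^\mu$ set to 1, and hypothetical values for $S^{DB}$, $\sigma_E$, and the rates. The reconstructed spikes then drive an embedded neuron's conductance through $\sigma_E\,dG/dt = -G + S^{DB}\sum\delta(t - t')$, so each background spike raises G by $S^{DB}/\sigma_E$.

```python
import math
import numpy as np

def background_spike_counts(m_B, N_B, dt, rng):
    """Poisson spike counts per time bin for N_B background cells firing at rate m_B(t) (Hz)."""
    return rng.poisson(np.asarray(m_B, dtype=float) * N_B * dt)

def embedded_conductance(counts, S_DB, sigma_E, dt):
    """Integrate sigma_E dG/dt = -G + S_DB * sum delta(t - t') driven by binned background spikes.

    All synaptic weights p_j^mu are taken to be 1 (assumption).
    """
    decay = math.exp(-dt / sigma_E)            # exact decay of G over one bin
    G = np.empty(len(counts))
    g = 0.0
    for k, c in enumerate(counts.tolist()):
        g = g * decay + c * S_DB / sigma_E     # each background spike raises G by S_DB / sigma_E
        G[k] = g
    return G
```

After a transient of a few $\sigma_E$, the time-averaged conductance approaches $S^{DB} m^B N^B$. This is the same mean the coarse-grained description would supply, but the embedded neuron now sees individual spike times.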

I&F vs. Embedded Network Spike Rasters

a) I&F Network: 50 “Simple” cells, 50 “Complex” cells. “Simple” cells driven at 10 Hz

b)-d) Embedded I&F Networks: b) 25 “Complex” cells replaced by single kinetic equation;

c) 25 “Simple” cells replaced by single kinetic equation; d) 25 “Simple” and 25 “Complex”

cells replaced by kinetic equations. In all panels, cells 1-50 are “Simple” and cells 51-100

are “Complex”. Rasters shown for 5 stimulus periods.

[Figure: rasters (neuron label vs. time, 4.5 to 5 sec), cross-correlations (vs. t_i − t_j, sec) and ISI distributions (vs. ISI, sec, log scale) for the embedded network (upper row) and the full I&F network (lower row).]

Raster Plots, Cross-correlation and ISI distributions.

(Upper panels) KT of a neuronal patch with strongly coupled embedded neurons;

(Lower panels) Full I&F Network.

Shown is the sub-network, with neurons 1-6 excitatory; neurons 7-8 inhibitory;

EPSP time constant 3 ms; IPSP time constant 10 ms.
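The ISI distributions and cross-correlations in these panels can be computed from spike times as follows. This is a generic sketch, not the code used for the figure. The function names, the ±0.25 s lag window (read off the figure axes), and the 5 ms bin width are assumptions.

```python
import numpy as np

def isi(spike_times):
    """Inter-spike intervals of one neuron."""
    return np.diff(np.sort(np.asarray(spike_times, dtype=float)))

def cross_correlogram(t_i, t_j, max_lag=0.25, bin_width=0.005):
    """Histogram of all pairwise lags t_i - t_j with |lag| <= max_lag."""
    t_i = np.asarray(t_i, dtype=float)
    t_j = np.asarray(t_j, dtype=float)
    lags = (t_i[:, None] - t_j[None, :]).ravel()
    lags = lags[np.abs(lags) <= max_lag]
    n_bins = int(round(2 * max_lag / bin_width))
    edges = np.linspace(-max_lag, max_lag, n_bins + 1)
    counts, _ = np.histogram(lags, bins=edges)
    return counts, edges
```

Histogramming `isi(...)` on logarithmic bins (`np.logspace`) gives the log-scaled ISI panels. The all-pairs lag matrix grows as the product of the two spike counts, so long recordings would call for a windowed version.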

Reverse-time correlation for a simple cell in a ring model for the orientation tuning dynamics of V1 neurons

Cai D et al. PNAS 2004;101:14288-14293

©2004 by National Academy of Sciences

Computational Efficiency

• For statistical accuracy in these CG patch settings, Kinetic Theory is 10^3 to 10^5 times more efficient than I&F;
• The cost of the embedded sub-network scales as N², where N = # of embedded point neurons (e.g., reducing N from 100 to 20 reduces the cost from 10,000 to 400).

Conclusion:

Coarse-grained, scale-up methods

• Kinetic Theory is
  – numerically efficient (10^3 to 10^5 times more efficient than I&F);
  – remarkably accurate;
  – accurate in capturing firing rates in fluctuation-dominated systems;
• No purely coarse-grained theory can capture, with scale-up efficiency, correlations caused by the detailed spike timing of sequences of neurons, which is likely how the cortex frequently works.
• Most promising method for scale-up: neuronal sub-networks
  – embedded within a coarse-grained representation which describes fluctuation-driven dynamics,
  – fully interacting with that coarse-grained representation.

Conclusion:

Coarse-grained, scale-up methods (Cont)

In addition,

– we’ve developed fast “multipole” algorithms for integrate & fire

systems [Cai and Rangan, J Comp Neural Sci (2007)];

– And we’ve developed a method for data analysis of sequential

firing events – event tree chains [Rangan, Cai & McLaughlin, PNAS

(2008)]

II. Our Large-Scale Model of V1

• A detailed, large-scale model of the input layer of Primary Visual Cortex (V1);
• Realistically constrained by experimental data;

Refs from our group:

(Today) =>

-----------

PNAS (2000) {Our original model, orientation tuning}

J Neural Science (2001) {Simple cells}

J Comp Neural Sci (2002) {Reduction to firing rate eqs}

PNAS (2004) {High conductance operating point}

PNAS (2004) {Single model, simple & complex cells}

---------

PNAS (2004) {Fluctuations & Pdf – kinetic theory}

PNAS(2004) {Embedded Sub-Network}

PNAS (2005) {Space-time spontan. cortical activity}

PNAS (2005) {Line-motion illusion}

--- J Comp Phys (2007) {Numerical algorithm for kinetic eqs}

--- J Comp Neural Sci (2007) {Fast algorithm, integrate & fire eqs}

--- PNAS (2008) {Event tree data analysis}

--- Phys Rev Lett (2009) {Diagrammatic pdf analysis}

--- http://www.cims.nyu.edu/faculty/dmac/

Modeled at :

Courant Institute of Math. Sciences

& Center for Neural Science, NYU

In collaboration with:

*** Robert Shapley (Neural Science)
** David Cai (Physics & Appl Math)
** Michael Shelley (Computational Sci & Neural Sci)
** Aaditya Rangan (Computational Sci)
** Louis Tao (PKU; Computational Sci)
Tony Guillamon (Former post doc; Mathematics)
Jacob Wielaard (Former Post doc; Physics & CompSci)
David Lorentz (Former UG, Math-Neural Science)
Mainik Patel (Grad Student, MD-PhD)

Simple System

[Figure: a simple system of integrate-and-fire type excitatory cells and Hodgkin-Huxley type inhibitory cells, driven by stimulus S1, S2, or an unknown stimulus S?; 1024 ms window; power spectrum.]

Event-tree level 0, Firing Rate (Stimulus 1)

[Figure: firing rates (spikes/second) of the inhibitory and excitatory cells.]

Event-tree level 1 (Stimulus 1): chains α → β and β → α among the cells α, β, γ.

β → α is more frequent than α → β (although β does not synapse on α).

Event-tree level 2 (Stimulus 1): chains such as β → α → γ and γ → β → α.

Event-tree level 3 (Stimulus 1)
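Event chains can be counted directly from spike times. The sketch below assumes this convention: a chain n0 → n1 → … occurs whenever each successive neuron fires within a window τ after the previous spike in the chain. The window τ, the dictionary layout of `trains`, and the function name are illustrative assumptions, not the exact definitions of the cited work.

```python
from bisect import bisect_right

def count_chain(trains, chain, tau):
    """Count occurrences of the event chain (n0 -> n1 -> ...) in spike trains.

    trains: dict mapping neuron label -> sorted list of spike times.
    A step counts when the next neuron fires in (t, t + tau] after the previous spike at t
    (assumed convention).
    """
    def extend(t, rest):
        if not rest:
            return 1
        times = trains[rest[0]]
        lo = bisect_right(times, t)           # first spike strictly after t
        hi = bisect_right(times, t + tau)     # spikes up to and including t + tau
        return sum(extend(s, rest[1:]) for s in times[lo:hi])
    return sum(extend(t, list(chain[1:])) for t in trains[chain[0]])
```

A single-neuron chain simply counts spikes, which matches level 0 (firing rate). Level m collects the counts of all chains of length m + 1.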


[Figure: event-tree level 1 for Stimulus 1 vs. Stimulus 2.]

Short Observation Times: T_obs = O(100 ms)

• But these event-trees were for long observation times;
• Can information be carried by, and extracted from, this coarse-grained event-tree projection over short but realistic observation times of O(100 ms)?
• Over short times, differences in the occurrences of specific event-chains in the event-tree will depend both upon the differences in signal and upon the firing irregularities due to the short observation times.

Discriminate stimuli? Within a SHORT time?

[Figure: responses to S1 and S2 over long (∞) observation times, vs. an unknown stimulus S? observed for T ~ 200 ms.]

Which characteristics of short-time observations of the dynamics reflect the stimulus?

Probabilistic Representation

• For a given stimulus, we estimate the T_obs probability distribution for each event chain within the event tree;
• That is, for each stimulus and each event chain, we obtain the probabilities that the chain occurs k times within T_obs, k = 0, 1, 2, …;
• The probabilities are estimated by running the experiment over and over again.

Probabilistic Representation

• We use these probabilities to estimate, from one (short) T_obs, which stimulus drove the response.
  – For some specific chains, the pdf’s for different stimuli will be quite distinct; these individual chains could then be used to distinguish between stimuli. However, which ones to use will not be known in advance. Hence,
  – Each chain in the tree “has a vote” for which stimulus, with that chain’s vote weighted by how well its pdf’s are separated for the stimuli:
  – $A = \tfrac{1}{2}\int \max(P_1, P_2)\,dn$; $B = 1 - A$; $I = A/B$
  – Weight $= \log(I)$
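The weighting rule can be sketched for two stimuli. The sketch assumes chain counts per trial are stored as integer arrays, with counts above a cutoff k_max lumped together. It also assumes each chain votes for whichever stimulus makes the observed count more probable, and puts a small floor on B. The vote direction, the cutoff, and the floor are assumptions; the slide specifies only the weight. Since counts are integers, the integral over n becomes a sum.

```python
import numpy as np

EPS = 1e-12  # floor on B so log(A/B) stays finite when the two pmfs do not overlap (assumption)

def empirical_pmf(counts, k_max):
    """P(k), k = 0..k_max, estimated from per-trial chain counts (counts > k_max lumped into k_max)."""
    counts = np.minimum(np.asarray(counts, dtype=int), k_max)
    return np.bincount(counts, minlength=k_max + 1) / len(counts)

def chain_weight(p1, p2):
    """log(A/B) with A = 1/2 sum_k max(P1(k), P2(k)) and B = 1 - A."""
    A = 0.5 * np.maximum(p1, p2).sum()
    B = max(1.0 - A, EPS)
    return np.log(A / B)

def classify(observed, pmfs1, pmfs2):
    """Weighted vote over chains; observed[c] is chain c's count in one short trial. Returns 1 or 2."""
    score = 0.0
    for k, p1, p2 in zip(observed, pmfs1, pmfs2):
        k = min(int(k), len(p1) - 1)
        score += chain_weight(p1, p2) * np.sign(p1[k] - p2[k])
    return 1 if score > 0 else 2
```

Identical pmfs give A = B = ½ and weight 0, so uninformative chains drop out automatically. Well-separated chains dominate the vote.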

Sensitive to the Dynamical State of the Cortex

• Event chains and trees capture sequential firing events, over time and cortical location.
• As such, they are sensitive to the dynamical state of the cortex (but their construction does not depend upon specific architectures).
• The success of event trees in fine discrimination depends upon the dynamical state of the cortex.

[Figure panels: near synchrony; bursty; near asynchrony.]

Realistic Example: Orientation Selectivity in V1

Other applications: Visual Cortex (V1)

Estimate the orientation θ of the stimulus, based only on the cortical response over a short time (200 ms).

[Figure: two stimuli of slightly different orientation, each presented for 200 ms.]

The cat itself can tell if the stimuli are ~2° apart, whereas humans can tell ~20′ apart.

Experimental results using “state analysis” (AB Bonds et al.):
1. If Δθ is large, the firing rate is sufficient to discriminate.
2. If Δθ is small, correlations between several neurons are needed to discriminate.

Orientation discrimination in visual cortex

[Figure: discriminability (50% to 100%) vs. m_max (1 to 4) for Δθ = 18° and Δθ = 6°; ~1 mm scale.]

Summary

• Event tree coding is a coding scheme which is
  – extended in cortical space and time
  – swift
  – reliable
• It works for recurrent networks, and depends upon their dynamical state
• It can swiftly discriminate between fine differences in stimuli, when firing rate alone cannot discriminate
• As it uses only firing events, it seems to avoid “curses of dimensionality”
• We have shown that it works well with large scale computer models,
• And thus, it provides a possible means for cortical encoding and information carrying

Ref: Rangan, Cai & McLaughlin, PNAS (2008)

Next: Begin to analyze data from multi-electrode grids