Mathematics Academies 2011-2013 Cohort 1 Evaluation Study

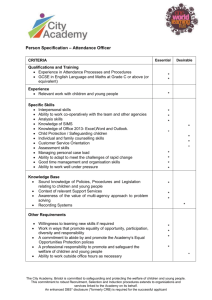
