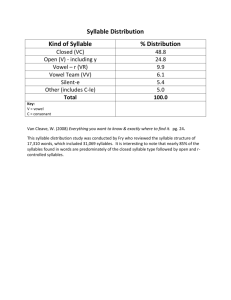

OCT 1 LIBRARIES 2008
