USING DIBELS NEXT READING FLUENCY OR TEXT READING GIFTED STUDENTS

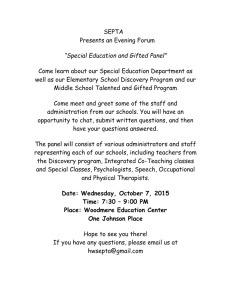
