Autonomy and adaptiveness: a architectures

Sanjoy K. Mitter

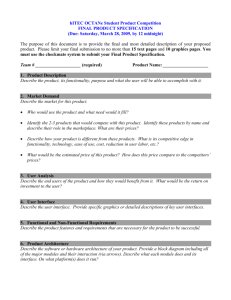
