Prediction of Rainfall in Saudi Arabia


A THESIS

SUBMITTED TO THE GRADUATE EDUCATIONAL POLICIES COUNCIL

IN PARTIAL FULFILLMENT OF THE REQUIREMENTS

For the degree

MASTER OF SCIENCE

By

Hadi Obaid M Alshammari

Adviser Dr. Rahmatullah Imon

Ball State University

Muncie, Indiana

May 2015

ACKNOWLEDGEMENTS

I would like to gratefully and sincerely thank my supervisor Professor Dr. Rahmatullah Imon for

the patient guidance, encouragement and advice he has provided throughout my time as his

student. I have been extremely lucky to have a supervisor who cared so much about my work,

and who responded to my questions and queries so promptly. His mentorship was paramount in

providing a well-rounded experience consistent with my long-term career goals. For everything

you’ve done for me Dr. Imon, thank you. I would like also to thank the rest of my thesis

committee: Dr. Rich Stankewitz and Dr. Yayuan Xiao for their encouragement, insightful

comments and patience.

Finally, I would like to thank my family: my mother, my brothers and sisters, for supporting me

throughout my life.

Hadi Obaid M Alshammari

March 30, 2015

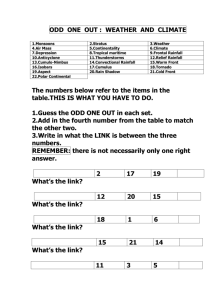

Table of Contents

CHAPTER 1
INTRODUCTION
1.1 Saudi Arabia Climate Data
1.2 Outline of the Study

CHAPTER 2
ESTIMATION OF MISSING VALUES IN SAUDI ARABIA CLIMATE DATA
2.1 Imputation Methods
2.2 Expectation Maximization Algorithm
2.3 Estimation with Trend Models
2.4 Estimation with Smoothing Techniques
2.5 Robust Methods
2.6 Nonparametric Bootstrap
2.7 Estimation of Missing Values for Saudi Arabia Climate Data
2.8 Trend of Climate Data for Saudi Arabia

CHAPTER 3
MODELING AND FITTING OF DATA USING REGRESSION AND MEDIATION METHODS
3.1 Classical Regression Analysis
3.2 Mediation
3.3 Accuracy Measures
3.4 A Comparison of Regression and Mediation Fits for Gizan

CHAPTER 4
FORECASTING WITH ARIMA MODELS
4.1 The Box-Jenkins Methodology
4.2 Stationary and Nonstationary Time Series
4.3 Test for Significance of a White Noise Autocorrelation Function
4.4 ARIMA Models
4.5 Estimation and Specification of ARIMA Models
4.6 Diagnostic Checking
4.7 Computing a Forecast
4.8 Fitting of the ARIMA Model to Saudi Arabia Rainfall Data

CHAPTER 5
EVALUATION OF FORECASTS BY REGRESSION, MEDIATION AND ARIMA MODELS
5.1 Cross Validation in Regression and Time Series Models
5.2 Evaluation of Forecasts for Rainfall Data

CHAPTER 6
CONCLUSIONS AND AREAS OF FUTURE RESEARCH
6.1 Conclusions
6.2 Areas of Future Research

REFERENCES

APPENDIX A
Saudi Arabia Climate Data

List of Tables

Chapter 2
Table 2.1: Trend of Complete Climate Data for Saudi Arabia

Chapter 3
Table 3.1: Actual and Predicted Fits of Rainfall for Gizan
Table 3.2: Accuracy Measures for Regression and Mediation Fits of Rainfall for Gizan

Chapter 4
Table 4.1: Specification of ARIMA Models
Table 4.2: ACF and PACF Values of Rainfall Data for Gizan

Chapter 5
Table 5.1: Rainfall Forecast for Gizan
Table 5.2: MSPE of Regression, Mediation and ARIMA Forecasts of Rainfall for Gizan
Table 5.3: Predicted Trend of Rainfall in Saudi Arabia

List of Figures

Chapter 1
Figure 1.1: Major Cities of Saudi Arabia
Figure 1.2: Time Series Plot of Total Number of Rainy Days in Gizan
Figure 1.3: Time Series Plot of Yearly Temperature of Gizan
Figure 1.4: Time Series Plot of Maximum Temperature in Gizan
Figure 1.5: Time Series Plot of Minimum Temperature in Gizan
Figure 1.6: Time Series Plot of Wind Speed in Gizan
Figure 1.7: Time Series Plot of Rainfall in Gizan
Figure 1.8: Individual Value Plot of Rainy Days vs Rainfall in Gizan

Chapter 2
Figure 2.1: Time Series Plot of Total Number of Rainy Days in Gizan with Complete Data
Figure 2.2: Time Series Plot of Yearly Temperature of Gizan with Complete Data
Figure 2.3: Time Series Plot of Maximum Temperature in Gizan with Complete Data
Figure 2.4: Time Series Plot of Minimum Temperature in Gizan with Complete Data
Figure 2.5: Time Series Plot of Wind Speed in Gizan with Complete Data
Figure 2.6: Time Series Plot of Rainfall in Gizan with Complete Data

Chapter 3
Figure 3.1: Normal Probability Plot of Rainfall vs Rainy Days in Gizan
Figure 3.2: Normal Probability Plot of Rainfall vs Different Climate Variables in Gizan
Figure 3.3: Time Series Plot of Original and Predicted Rainfall for Gizan
Figure 3.4: Regression vs Mediation Analysis
Figure 3.5: Time Series Plot of Actual and Predicted Fits of Rainfall for Gizan

Chapter 4
Figure 4.1: ACF and PACF of Rainfall Data for Gizan
Figure 4.2: ACF and PACF of Rainfall Data for Hail
Figure 4.3: ACF and PACF of Rainfall Data for Abha
Figure 4.4: ACF and PACF of Rainfall Data for Al Ahsa
Figure 4.5: ACF and PACF of Rainfall Data for Al Baha
Figure 4.6: ACF and PACF of Rainfall Data for Arar
Figure 4.7: ACF and PACF of Rainfall Data for Buriedah
Figure 4.8: ACF and PACF of Rainfall Data for Dahran
Figure 4.9: ACF and PACF of Rainfall Data for Jeddah
Figure 4.10: ACF and PACF of Rainfall Data for Khamis Mashit
Figure 4.11: ACF and PACF of Rainfall Data for Madinah
Figure 4.12: ACF and PACF of Rainfall Data for Mecca
Figure 4.13: ACF and PACF of Rainfall Data for Quriat
Figure 4.14: ACF and PACF of Rainfall Data for Rafha
Figure 4.15: ACF and PACF of Rainfall Data for Riyadh
Figure 4.16: ACF and PACF of Rainfall Data for Sakaka
Figure 4.17: ACF and PACF of Rainfall Data for Sharurah
Figure 4.18: ACF and PACF of Rainfall Data for Tabuk
Figure 4.19: ACF and PACF of Rainfall Data for Taif
Figure 4.20: ACF and PACF of Rainfall Data for Turaif
Figure 4.21: ACF and PACF of Rainfall Data for Unayzah
Figure 4.22: ACF and PACF of Rainfall Data for Wejh
Figure 4.23: ACF and PACF of Rainfall Data for Yanbu
Figure 4.24: ACF and PACF of Rainfall Data for Bishah
Figure 4.25: ACF and PACF of Rainfall Data for Najran

Chapter 5
Figure 5.1: Time Series Plot of Rainfall Forecast for Gizan

CHAPTER 1

INTRODUCTION

Prediction of rainfall remains a major challenge for climatologists. Rainfall is the most important
component of a climate system. Most of the burning issues of our time, such as global warming, floods,
drought, heat waves, soil erosion and many other climatic problems, are directly related to rainfall.
Every country should understand and predict its weather well in order to avoid environmental
problems in the future. Saudi Arabia receives little rain and snow compared with Western
countries. However, the country suffers considerable damage when the weather does turn rainy or
snowy. Because there are not enough studies of environmental issues in Saudi Arabia, the country
is often unprepared for such events. Since Saudi Arabia is a desert country, people tend to think
that weather damage need not be taken seriously, but the reality is different. Our government
spends a lot of money to repair the damage caused by environmental change. In 2011, Jeddah
Municipality held a session to discuss the causes of environmental issues. “Nowadays, the
environmental change is major problem in Saudi Arabia, and we need to forecast the damages
before it happened” (Eng. Alzahrani 2011). In this session, all speakers agreed that many more
studies of our future weather are needed in order to avoid such problems and damage.
In that year (2011), Prince Khaled Al-Feisal employed more than 9 companies to deal with
environmental issues in Jeddah alone (Okaz newspaper, 2011). Saudi Arabia has been suffering
from many kinds of environmental issues. As the population increases, the need to solve these
issues becomes very significant. We are interested in studying the weather of Saudi Arabia and
its environmental issues. In this study, we forecast the future rainfall of Saudi Arabia and try to
identify the factors that cause rainfall. We employ appropriate statistical models and methods to
predict rainfall accurately and to identify the variables responsible for rain.

1.1 Saudi Arabia Climate Data

In our study, we would like to consider a variety of climate data that might be useful to predict

the rainfall of Saudi Arabia. A political map of Saudi Arabia is presented in Figure 1.1.

Figure 1.1 Major Cities of Saudi Arabia

Saudi Arabia is a large country whose climate patterns differ from region to region. For this
reason, instead of considering data for the whole of Saudi Arabia, we consider data from 26 major

cities of this country which are: Gizan, Hail, Madinah, Makkah (Mecca), Najran, Rafha, Riyadh,

Sharurah, Tabuk, Taif, Turaif, Wejh, Yanbo, Abha, Al Baha, Sakaka, Guriat, Arar, Buraydah,

Alqasim (Unayzah), Dahran, Al Ahsa, Khamis, Mushait, Jeddah and Bishah. Initially we have

considered eleven climate variables of Saudi Arabia for the years 1986 to 2014. The data are taken
from the Saudi Arabia Presidency of Meteorology and Environment (http://ww2.pme.gov.sa/) and are
also presented in Appendix A. The variables we observe are listed below.

Data                                              Scale of measurement
T  - Average annual daily temperature             Celsius (°C)
TM - Annual average daily maximum temperature     Celsius (°C)
Tm - Annual average daily minimum temperature     Celsius (°C)
PP - Rain or snow precipitation total (annual)    mm
V  - Annual daily average wind speed              km/h
RA - Number of days with rain                     Days
SN - Number of days with snow                     Days
TS - Number of days with storm                    Days
FG - Number of foggy days                         Days
TN - Number of days with tornado                  Days
GR - Number of days with hail                     Days

First, we construct time series plots of six of the most important variables for the city of Gizan;
they are shown in Figures 1.2 to 1.7.

[Plot: Time Series Plot of Rainy Days; vertical axis: Rainy Days; horizontal axis: Year, 1986 to 2014]

Figure 1.2: Time Series Plot of Total Number of Rainy Days (RA) in Gizan

[Plot: Time Series Plot of Temp; vertical axis: Temp; horizontal axis: Year, 1986 to 2014]

Figure 1.3: Time Series Plot of Yearly Temperature (T) of Gizan

[Plot: Time Series Plot of Temp_Max; vertical axis: Temp_Max; horizontal axis: Year, 1986 to 2014]

Figure 1.4: Time Series Plot of Maximum Temperature (TM) in Gizan

[Plot: Time Series Plot of Temp_Min; vertical axis: Temp_Min; horizontal axis: Year, 1986 to 2014]

Figure 1.5: Time Series Plot of Minimum Temperature (Tm) in Gizan

[Plot: Time Series Plot of Wind Speed; vertical axis: Wind Speed; horizontal axis: Year, 1986 to 2014]

Figure 1.6: Time Series Plot of Wind Speed (V) in Gizan

[Plot: Time Series Plot of Rainfall; vertical axis: Rainfall; horizontal axis: Year, 1986 to 2014]

Figure 1.7: Time Series Plot of Rainfall (PP) in Gizan

The time series plots reveal several interesting features. For each variable, the value for the
year 2005 is missing. This is the case not only for Gizan; we observe the same situation in all
the other 25 cities. We also observe that the yearly average temperature and the minimum
temperature trend upward. The number of rainy days and the maximum temperature show a slight
decreasing trend. The average wind speed first decreases and then tends to increase. The total
amount of rainfall shows a slightly decreasing pattern, although it is surprising to see that in
many years the reported rainfall is zero even though there are quite a few rainy days in those
years. This certainly cannot be right. To investigate this issue a bit further, we carry out a
one-way ANOVA test for the difference in the number of rainy days between the years with no
reported rainfall and the years with reported rainfall. The MINITAB output, boxplot and
individual value plot are given below. Here the years with no reported rainfall are coded 0 and
the years with reported rainfall are coded 1.

[Plot: Individual Value Plot of Rainy Days vs Rainfall; vertical axis: Rainy Days; horizontal axis: Rainfall (0, 1)]

Figure 1.8: Individual Value Plot of Rainy Days (RA) vs Rainfall (PP) in Gizan

One-way ANOVA: Rainy Days versus Rainfall

Source  DF     SS    MS     F      P
Rain     1   36.9  36.9  1.16  0.291
Error   27  857.6  31.8
Total   28  894.6

S = 5.636   R-Sq = 4.13%   R-Sq(adj) = 0.58%

Level   N    Mean  StDev
0      10  13.100  4.433
1      19  15.474  6.150

Individual 95% CIs For Mean Based on Pooled StDev
[Character plot of the two confidence intervals; axis ticks at 10.0, 12.5, 15.0 and 17.5]

The above MINITAB output for the one-way analysis of variance (ANOVA) shows that the difference
in the number of rainy days between the years with no reported rainfall and the years with
reported rainfall is not statistically significant. The table contains the sum of squares (SS),
degrees of freedom (DF), mean squares (MS), the F statistic and its associated p-value. The
p-value of the F statistic is 0.291, well above the cut-off value of 0.05, which shows that the
difference is not significant. The 95% confidence interval for the no-rainfall group overlaps
heavily with the 95% confidence interval for the rainfall group, and hence the two groups are not
separated at the 5% level of significance. In practice, however, it is hard to believe that the
number of rainy days in years with zero total rainfall does not differ significantly from the
number of rainy days in years with high rainfall. We need to address this issue later on.
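As a quick check on the MINITAB table, the F statistic and the pooled standard deviation can be rebuilt from the group summaries alone (N, Mean and StDev of each level). The sketch below is only an illustration: the function name `one_way_anova` and the dictionary layout are our own, and only the numbers are copied from the output above. The p-value is not recomputed here because it requires the F distribution.

```python
import math

# Group summaries copied from the MINITAB output: level -> (N, mean, StDev).
groups = {0: (10, 13.100, 4.433), 1: (19, 15.474, 6.150)}

def one_way_anova(groups):
    """Rebuild a one-way ANOVA table from per-group N, mean and StDev."""
    n_total = sum(n for n, _, _ in groups.values())
    grand_mean = sum(n * m for n, m, _ in groups.values()) / n_total
    # Between-group and within-group sums of squares.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups.values())
    ss_within = sum((n - 1) * s ** 2 for n, _, s in groups.values())
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    ms_within = ss_within / df_within
    return {
        "ss_between": ss_between,
        "ss_within": ss_within,
        "df_between": df_between,
        "df_within": df_within,
        "F": (ss_between / df_between) / ms_within,
        "pooled_sd": math.sqrt(ms_within),
        "r_sq": ss_between / (ss_between + ss_within),
    }

table = one_way_anova(groups)
print({key: round(value, 3) for key, value in table.items()})
```

The rebuilt values agree with the reported SS, F, S and R-Sq up to rounding, which suggests that the flattened table was read correctly.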

1.2 Outline of the Study

Here is the outline of this thesis. In Chapter 2, we introduce the methodologies we use for the
estimation of missing values, including a robust and bootstrap expectation maximization (EM)
algorithm. We also study the trend of the variables in that chapter. In Chapter 3 we employ
regression and mediation methods for the prediction of rainfall in different parts of Saudi
Arabia. Fitting and forecasting of data using ARIMA models are discussed in Chapter 4. In order
to determine the most appropriate ARIMA model, we employ both graphical methods based on the
autocorrelation function (ACF) and the partial autocorrelation function (PACF) and numerical
tests such as the t-test and the Ljung-Box test based on the ACF and the PACF. In Chapter 5 we
report a cross validation designed to investigate which of the regression, mediation and ARIMA
models generates better forecasts of rainfall in Saudi Arabia. We also report the future trend of
rainfall in different regions of Saudi Arabia.

CHAPTER 2

ESTIMATION OF MISSING VALUES IN SAUDI ARABIA

CLIMATE DATA

We have observed in Section 1.1 that each climate variable of Saudi Arabia has a missing
observation. Missing data are a part of almost all research, and they have a negative influence
on the analysis, such as loss of information and, as a result, loss of efficiency, loss of
unbiasedness of the estimated parameters and loss of power. An excellent review of different
aspects of missing values is available in Little and Rubin (2002) and Alshammari (2015). In this
chapter we introduce a few missing value estimation techniques that are commonly used by
statisticians.

2.1 Imputation Methods

In this section we will discuss a couple of imputation techniques for estimating missing values.

2.1.1 Mean Imputation Technique

Among the different methods for handling missing values, imputation (Little and Rubin (2002)) is
one of the most widely used techniques for solving incomplete data problems. Therefore, this
study considers several imputation methods in order to determine the best method for replacing
missing data.

Let us consider n observations $x_1, x_2, \ldots, x_n$ together with m missing values denoted
by $x_1^*, x_2^*, \ldots, x_m^*$. Thus the total number of data points, including the missing
values, is n + m, given as

$$ x_1, x_2, \ldots, x_{n_1}, x_1^*, x_{n_1+1}, x_{n_1+2}, \ldots, x_{n_2}, x_2^*, x_{n_2+1}, x_{n_2+2}, \ldots, x_m^*, \ldots, x_n. \tag{2.1} $$

Therefore, the first missing value occurs after $n_1$ observations, the second missing value
occurs after $n_2$ total observations, and so on. Note that there might be more than one
consecutive missing observation.

Mean-before Technique

The mean-before technique is one of the most popular imputation techniques in handling missing
data. This technique consists of substituting each missing value with the mean of the data
observed since the last missing data point. Thus for the data in (2.1), $x_1^*$ will be replaced by

$$ \bar{x}_1 = \frac{1}{n_1} \sum_{i=1}^{n_1} x_i \tag{2.2} $$

and $x_2^*$ will be replaced by

$$ \bar{x}_2 = \frac{1}{(n_2 - n_1 - 1)} \sum_{i=n_1+1}^{n_2} x_i \tag{2.3} $$

and so on.

Mean-before-after Technique

The mean-before-after technique substitutes each missing value with the mean of one observed
datum before the missing value and one observed datum after the missing value. Thus for the
data in (2.1), $x_1^*$ will be replaced by

$$ \bar{x}_1 = \frac{x_{n_1} + x_{n_1+1}}{2} \tag{2.4} $$

and $x_2^*$ will be replaced by

$$ \bar{x}_2 = \frac{x_{n_2} + x_{n_2+1}}{2} \tag{2.5} $$

and so on.

2.1.2 Median Imputation Technique

Since the mean is highly sensitive to extreme observations and/or outliers, median imputation is
becoming more popular nowadays [Mamun (2014)]. For a data set as given in (2.1), the missing
value is estimated by the median instead of the mean. For example, for the median-before
technique, $x_1^*$ and $x_2^*$ are replaced by the sample medians of the observations that enter
(2.2) and (2.3), respectively, and so on.
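The three rules described in this section can be sketched in a few lines of Python. This is a minimal illustration rather than the code used in the thesis: the function name `impute`, the use of `None` to mark a missing value and the toy series are all our own assumptions, and the sketch assumes isolated gaps in the interior of the series (no consecutive missing values, none at either end).

```python
from statistics import mean, median

def impute(series, method="mean_before"):
    """Fill None entries of a list using one of the simple imputation rules."""
    filled = list(series)
    last_gap = -1  # index of the most recent missing value
    for i, value in enumerate(series):
        if value is not None:
            continue
        # Observations recorded since the previous missing value.
        before = [x for x in series[last_gap + 1:i] if x is not None]
        if method == "mean_before":
            filled[i] = mean(before)
        elif method == "median_before":
            filled[i] = median(before)
        elif method == "mean_before_after":
            # Nearest observed neighbours on each side of the gap.
            prev_obs = next(x for x in reversed(series[:i]) if x is not None)
            next_obs = next(x for x in series[i + 1:] if x is not None)
            filled[i] = (prev_obs + next_obs) / 2
        else:
            raise ValueError(f"unknown method: {method}")
        last_gap = i
    return filled

# Hypothetical toy series with two missing values and one large observation.
toy = [4.0, 6.0, 8.0, None, 10.0, 12.0, 38.0, None, 14.0]
for rule in ("mean_before", "median_before", "mean_before_after"):
    print(rule, impute(toy, rule))
```

In the toy series the large value 38.0 pulls the mean-before estimate of the second gap up to 20.0, while the median-before estimate stays at 12.0, which is the behaviour that motivates median imputation.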

2.2 Expectation Maximization Algorithm

Let y be an incomplete data vector (containing a few missing observations), e.g., as in (2.1),
whose density function is $f(y; \theta)$, where $\theta$ is a p-dimensional parameter. If y were
complete, the maximum likelihood estimate of $\theta$ would be based on the distribution of y. The
log-likelihood function of y, $\log L(y; \theta) = l(y; \theta) = \log f(y; \theta)$, where
$l = \log L$, is required to be maximized. As y is incomplete, we may denote it as
$(y_{obs}, y_{mis})$, where $y_{obs}$ is the observed data and $y_{mis}$ is the unobserved
missing data. Let us assume that the missing data are missing at random; then for some functions
$f_1$ and $f_2$:

$$ f(y; \theta) = f(y_{obs}, y_{mis}; \theta) = f_1(y_{obs}; \theta) \, f_2(y_{mis} \mid y_{obs}; \theta). \tag{2.6} $$

Considering the log-likelihood function, we see

$$ l_{obs}(\theta; y_{obs}) = l(\theta; y) - \log f_2(y_{mis} \mid y_{obs}; \theta). \tag{2.7} $$

The EM algorithm focuses on maximizing $l(\theta; y)$ in each iteration by replacing it by its
conditional expectation given the observed data $y_{obs}$. The EM algorithm has an E-step
(expectation step) followed by an M-step (maximization step) as follows:

E-step: Compute $Q(\theta; \theta^{(t)})$, where

$$ Q(\theta; \theta^{(t)}) = E_{\theta^{(t)}} [\, l(\theta; y) \mid y_{obs} \,] \tag{2.8} $$

for the t-th iteration, and E stands for expectation.

M-step: Find $\theta^{(t+1)}$ such that

$$ Q(\theta^{(t+1)}; \theta^{(t)}) \ge Q(\theta; \theta^{(t)}) \tag{2.9} $$

for all $\theta$.

The E-step and M-step are repeated alternately until the difference
$L(\theta^{(t+1)}) - L(\theta^{(t)})$ is less than $\delta$, where $\delta$ is a small quantity.

If convergence is attainable for the likelihood function of the complete data, that is
$L(\theta; y)$, then convergence of the EM algorithm is also attainable. The rate of convergence
depends on the number of missing observations. Dempster, Laird, and Rubin (1977) show that
convergence is linear, with rate proportional to the fraction of information about $\theta$ in
$l(\theta; y)$ that is observed.
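To make the two steps concrete, the following minimal sketch applies the EM iteration to the simplest possible case: a univariate normal sample with some values missing at random, where $\theta = (\mu, \sigma^2)$. This is an assumed toy setting, not the model fitted later in the thesis; the function name `em_normal`, the starting values and the data are hypothetical, and the stopping rule is applied to the observed-data log-likelihood rather than to the likelihood itself.

```python
import math

def em_normal(observed, n_missing, mu=0.0, sigma2=1.0, tol=1e-12, max_iter=1000):
    """EM estimates of a normal mean and variance when n_missing values are missing."""
    n_obs = len(observed)
    n = n_obs + n_missing
    s1 = sum(observed)                 # observed sum of y
    s2 = sum(y * y for y in observed)  # observed sum of y^2

    def loglik(m, v):
        # Observed-data log-likelihood, used only for the stopping rule.
        sq = sum((y - m) ** 2 for y in observed)
        return -0.5 * n_obs * math.log(2 * math.pi * v) - sq / (2 * v)

    previous = loglik(mu, sigma2)
    for iteration in range(1, max_iter + 1):
        # E-step: expected complete-data sufficient statistics given theta^(t).
        e1 = s1 + n_missing * mu
        e2 = s2 + n_missing * (mu * mu + sigma2)
        # M-step: maximize the expected complete-data log-likelihood.
        mu = e1 / n
        sigma2 = e2 / n - mu * mu
        current = loglik(mu, sigma2)
        if abs(current - previous) < tol:
            break
        previous = current
    return mu, sigma2, iteration

# Hypothetical data: five observed values and two missing ones.
mu_hat, sigma2_hat, n_iter = em_normal([2.0, 4.0, 4.0, 6.0, 9.0], n_missing=2)
print(round(mu_hat, 4), round(sigma2_hat, 4), n_iter)
```

In this toy case the iteration converges to the mean and (maximum likelihood) variance of the observed values. The error shrinks by a factor of about 2/7 per step, the fraction of missing data, which illustrates the linear convergence noted above.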

2.3 Estimation with Trend Models

The missing value estimation techniques discussed above are designed for independent
observations. In regression or time series analysis, however, we assume a model, and that model
should be taken into account when we estimate missing values. In time series, things are even
more challenging because the observations are dependent. Here we consider several commonly used
missing value techniques that are useful for time series.

We begin with simple models that can be used to forecast a time series on the basis of its
past behavior. Most of the series we encounter are not continuous in time; instead, they consist
of discrete observations made at regular intervals of time. We denote the values of a time series
by $\{y_t\}$, t = 1, 2, …, T. Our objective is to model the series $y_t$ and use that model to
forecast $y_t$ beyond the last observation $y_T$. We denote the forecast l periods ahead by
$\hat{y}_{T+l}$.

We can sometimes describe a time series $y_t$ by using a trend model defined as

$$ y_t = TR_t + \varepsilon_t \tag{2.10} $$

where $TR_t$ is the trend in time period t.

2.3.1. Linear Trend Model:

$$ TR_t = \beta_0 + \beta_1 t \tag{2.11} $$

for constants $\beta_0$ and $\beta_1$. We can predict $y_t$ by

$$ \hat{y}_t = \hat{\beta}_0 + \hat{\beta}_1 t. \tag{2.12} $$

2.3.2. Polynomial Trend Model of Order p

$$ TR_t = \beta_0 + \beta_1 t + \beta_2 t^2 + \cdots + \beta_p t^p \tag{2.13} $$

for constant coefficients. We can predict $y_t$ by

$$ \hat{y}_t = \hat{\beta}_0 + \hat{\beta}_1 t + \hat{\beta}_2 t^2 + \cdots + \hat{\beta}_p t^p. \tag{2.14} $$

2.3.3. Exponential Trend Model:

The exponential trend model, which is often referred to as a growth curve model, is defined as

$$ y_t = \beta_0 \, \beta_1^{t} \, \varepsilon_t. \tag{2.15} $$

If $\beta_0 > 0$ and $\beta_1 > 0$, applying a logarithmic transformation to the above model yields

$$ y_t^* = \beta_0^* + \beta_1^* t + \varepsilon_t^* \tag{2.16} $$

where $y_t^* = \ln y_t$, $\beta_0^* = \ln \beta_0$, $\beta_1^* = \ln \beta_1$ and
$\varepsilon_t^* = \ln \varepsilon_t$.
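As an illustration of how a trend model can be used to estimate a missing value, the sketch below fits the linear trend (2.11) by ordinary least squares to the observed periods and then evaluates (2.12) at the missing period. The function name `fit_linear_trend` and the short series are hypothetical and are used for illustration only; they are not the Saudi Arabia data.

```python
def fit_linear_trend(times, values):
    """Least-squares estimates (b0, b1) for the linear trend y_t = b0 + b1 * t."""
    n = len(times)
    t_bar = sum(times) / n
    y_bar = sum(values) / n
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in zip(times, values))
    sxx = sum((t - t_bar) ** 2 for t in times)
    b1 = sxy / sxx
    b0 = y_bar - b1 * t_bar
    return b0, b1

# Hypothetical series; period t = 5 is missing and is left out of the fit.
times = [1, 2, 3, 4, 6, 7, 8]
values = [10.2, 10.9, 12.1, 13.0, 15.1, 15.8, 17.2]

b0, b1 = fit_linear_trend(times, values)
estimate = b0 + b1 * 5  # fitted trend value at the missing period
print(round(b0, 3), round(b1, 3), round(estimate, 2))
```

The exponential model (2.15) can be handled with the same function by fitting the trend to $\ln y_t$, as in (2.16), and exponentiating the fitted value.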

2.4 Estimation with Smoothing Techniques

Several decomposition and smoothing techniques are available in the literature for estimating the

missing value of time series data.

2.4.1. Moving Average

Moving average is a technique of updating averages by dropping the earliest observation and
adding the latest observation of a series. Suppose we have T = nL observations, where L is
the number of seasons; then the first moving average is obtained as

$$ \bar{y}_1 = (y_1 + y_2 + \cdots + y_L) / L. \tag{2.17} $$

The second moving average is obtained as

$$ \bar{y}_2 = (y_2 + \cdots + y_L + y_{L+1}) / L = \bar{y}_1 + (y_{L+1} - y_1) / L. \tag{2.18} $$

In general, the m-th moving average is obtained as

$$ \bar{y}_m = \bar{y}_{m-1} + (y_{m+L-1} - y_{m-1}) / L. \tag{2.19} $$

2.4.2. Centered Moving Average

Instead of the moving average we often use the centered moving average (CMA), which is the
average of two successive moving average values.

Some other useful smoothing techniques that can be used for estimating the missing values in
time series are the exponentially weighted moving average (EWMA) and the Holt-Winters model.
We can use these methods to estimate the missing values by taking a moving average of 5 to 10
neighboring data points around the missing observation.
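The recursive update in (2.19) and the centered moving average can be sketched as follows. The function names and the short series are our own and serve only as an illustration; the code uses 0-based list indices, so window m in the code corresponds to the (m + 1)-th moving average in the text.

```python
def moving_averages(y, L):
    """All length-L moving averages, computed with the recursive update (2.19)."""
    if L < 1 or L > len(y):
        raise ValueError("L must be between 1 and len(y)")
    averages = [sum(y[:L]) / L]  # first moving average, as in (2.17)
    for m in range(1, len(y) - L + 1):  # m is the 0-based start of the window
        # Drop the earliest observation and add the latest one.
        averages.append(averages[-1] + (y[m + L - 1] - y[m - 1]) / L)
    return averages

def centered_moving_averages(y, L):
    """Average of each pair of successive moving averages."""
    ma = moving_averages(y, L)
    return [(a + b) / 2 for a, b in zip(ma, ma[1:])]

series = [2, 4, 6, 8, 10, 12]  # hypothetical values
print(moving_averages(series, 4))
print(centered_moving_averages(series, 4))
```

The recursion needs only one addition and one subtraction per step, which is why the moving average is described as updating the previous average rather than recomputing it.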

2.5 Robust Methods

Robust procedures are nearly as efficient as the classical procedures when the classical
assumptions hold strictly, but are considerably more efficient overall when there is a small
departure from them. The main application of robust techniques in a time series problem is to
devise estimators that are not strongly affected by outliers or by departures from the assumed
model. A large body of literature is now available [Rousseeuw and Leroy (1987), Maronna, Martin,
and Yohai (2006), Hadi, Imon and Werner (2009)] on robust techniques that are readily applicable
in linear regression or in time series.

2.5.1. Least Median of Squares

Rousseeuw (1984) proposed the Least Median of Squares (LMS) method, a fitting technique that is
less sensitive to outliers than OLS. In OLS, we estimate the parameters by minimizing the sum of
squared residuals $\sum_{t=1}^{n} u_t^2$, which is obviously the same as minimizing the mean of
squared residuals $\frac{1}{n} \sum_{t=1}^{n} u_t^2$. Sample means are sensitive to outliers, but
medians are not. Hence, to make $\frac{1}{n} \sum_{t=1}^{n} u_t^2$ less sensitive, we can replace
the mean by a median to obtain the median of squared residuals

$$ MSR(\hat{\beta}) = \text{Median} \{ \hat{u}_t^2 \}. \tag{2.20} $$

Then the LMS estimate of $\beta$ is the value that minimizes $MSR(\hat{\beta})$. Rousseeuw and
Leroy (1987) have shown that LMS estimates are very robust with respect to outliers.
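The criterion (2.20) has no closed-form minimizer, so in practice it is searched numerically. The sketch below is a simple approximation for a straight-line fit: it evaluates the median of squared residuals for every line passing through a pair of data points and keeps the best one. This pairwise search is our own simplification for illustration, not the exact algorithm of Rousseeuw (1984), and the function name `lms_line` and the data are hypothetical.

```python
from itertools import combinations
from statistics import median

def lms_line(x, y):
    """Approximate LMS fit of y = b0 + b1 * x by searching lines through point pairs."""
    best = None
    for i, j in combinations(range(len(x)), 2):
        if x[i] == x[j]:
            continue  # a vertical line cannot be written as y = b0 + b1 * x
        b1 = (y[j] - y[i]) / (x[j] - x[i])
        b0 = y[i] - b1 * x[i]
        # Median of squared residuals, as in (2.20).
        msr = median((yk - b0 - b1 * xk) ** 2 for xk, yk in zip(x, y))
        if best is None or msr < best[0]:
            best = (msr, b0, b1)
    return best  # (median squared residual, intercept, slope)

# Hypothetical data on the line y = 1 + 2x, with the last two values replaced by outliers.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [3, 5, 7, 9, 11, 13, 40, 45]
msr, b0, b1 = lms_line(x, y)
print(msr, b0, b1)
```

Because the median ignores the two largest squared residuals here, the fitted line follows the six clean points exactly, whereas an OLS fit would be pulled toward the outliers.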

2.5.2. Least Trimmed Squares

The least trimmed (sum of) squares (LTS) estimator was proposed by Rousseeuw (1984). Here we
arbitrarily trim a certain amount of extreme observations from both tails of the data. Let us
assume that we have trimmed $100\alpha\%$ of the observations and that h is the number of
observations remaining after trimming. In this method we try to estimate $\beta$ in such a way that

$$ LTS(\hat{\beta}) = \text{Minimize} \sum_{t=1}^{h} \hat{u}_{(t)}^2, \tag{2.21} $$

where $\hat{u}_{(t)}$ is the t-th ordered residual. For a trimming percentage of $\alpha$,
Rousseeuw and Leroy (1987) suggested choosing the number of observations h on which the model is
fitted as $h = [n(1 - \alpha)] + 1$. The advantage of using LTS over LMS is that in LMS we always
fit the regression line based on roughly 50% of the data, whereas in LTS we can control the level
of trimming. When we suspect that the data contain nearly 10% outliers, LTS with 10% trimming
will certainly produce a better result than LMS. We can increase the level of trimming if we
suspect there are more outliers in the data.

2.5.3. M – estimator

Huber (1973) generalized the estimation of parameters by considering a class of estimators that
chooses $\beta$ to

$$ \text{Minimize} \sum_{i=1}^{n} \rho(\varepsilon_i) = \text{Minimize} \sum_{i=1}^{n} \rho\left( y_i - x_i^T \beta \right) \tag{2.22} $$

where $\rho$ is a symmetric function less sensitive to outliers than squares. An estimator of
this type is called an M – estimator, where M stands for maximum likelihood. It is easy to see
from (2.22) that the function $\rho$ is related to the likelihood function for an appropriate
choice of the error distribution. For example, if the error distribution is normal, then
$\rho(z) = z^2 / 2$, –∞ < z < ∞, which also yields the OLS estimator. The M – estimator obtained
from (2.22) is not scale invariant. To obtain a scale invariant version of this estimator we solve

$$ \text{Minimize} \sum_{i=1}^{n} \rho\left( \frac{\varepsilon_i}{\sigma} \right) = \text{Minimize} \sum_{i=1}^{n} \rho\left( \frac{y_i - x_i^T \beta}{\sigma} \right). \tag{2.23} $$

In most practical applications, the value of $\sigma$ is unknown and it is usually estimated
before solving equation (2.23). A popular choice of $\sigma$ is

$$ \tilde{\sigma} = \text{MAD (normalized)} $$

where MAD stands for the median absolute deviation. To minimize (2.23), we equate the first
partial derivatives of $\rho$ with respect to $\beta_j$, for j = 0, 1, …, p, to zero, yielding a
necessary condition for a minimum. This gives a system of k = p + 1 equations

$$ \sum_{i=1}^{n} x_{ij} \, \psi\left( \frac{y_i - x_i^T \beta}{\tilde{\sigma}} \right) = 0, \quad j = 0, 1, \ldots, p \tag{2.24} $$

where $\psi = \rho'$. In general the $\psi$ function is nonlinear and (2.24) must be solved by
iterative methods.
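One common iterative method for (2.24) is iteratively reweighted least squares. The sketch below applies it to the simplest special case, the location model in which every $x_i$ equals 1, with Huber's $\psi$ function and the normalized MAD as the scale estimate. The function name `huber_location`, the tuning constant c = 1.345, the normalizing factor 1.4826 and the data are assumptions made for this illustration, not choices taken from the thesis.

```python
from statistics import mean, median

def huber_location(y, c=1.345, tol=1e-8, max_iter=100):
    """Huber M-estimate of location by iteratively reweighted averaging."""
    mu = median(y)
    # Normalized MAD as the preliminary scale estimate.
    scale = 1.4826 * median(abs(v - mu) for v in y)
    if scale == 0:
        return mu
    for _ in range(max_iter):
        weights = []
        for v in y:
            r = abs((v - mu) / scale)
            # Huber weight psi(r) / r: full weight inside [-c, c], reduced outside.
            weights.append(1.0 if r <= c else c / r)
        new_mu = sum(w * v for w, v in zip(weights, y)) / sum(weights)
        if abs(new_mu - mu) < tol:
            return new_mu
        mu = new_mu
    return mu

# Hypothetical sample with one gross outlier.
sample = [9.8, 10.1, 10.0, 9.9, 10.2, 10.0, 25.0]
print(round(mean(sample), 3), round(huber_location(sample), 3))
```

The sample mean is dragged above 12 by the single outlier, while the M-estimate stays close to 10 because the outlier receives a very small weight.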

2.5.4. S – estimator

Rousseeuw and Yohai (1984) suggested another class of robust estimators based on the
minimization of the dispersion of the residuals:

$$ s(\hat{\varepsilon}_1, \hat{\varepsilon}_2, \ldots, \hat{\varepsilon}_n). $$

The above dispersion s is defined as the solution of

$$ \frac{1}{n} \sum_{i=1}^{n} \rho\left( \hat{\varepsilon}_i / s \right) = K. \tag{2.25} $$

K is often put equal to $E_{\Phi}[\rho]$, where $\Phi$ is the standard normal. The function
$\rho$ must satisfy the following conditions: $\rho$ is symmetric and continuously differentiable
and $\rho(0) = 0$. The estimator thus obtained is called an S – estimator because it is derived
from a scale statistic in an implicit way. In fact, s given in the above estimating equation is
an M – estimator of scale.

2.5.5. MM – estimator

The MM – estimator was originally proposed by Yohai (1987). The objective was to produce a robust
point estimator that maintains good efficiency. The MM – estimator has three stages:

1. The initial estimate is an S – estimate, so it is fairly robust.
2. The second stage computes an M – estimate of the error standard deviation using the residuals
from the initial S – estimate.
3. The last step is an M – estimate of the parameters using a hard redescending weight function
that puts a very small (often zero) weight on sufficiently large residuals.

In an extensive performance evaluation of several robust regression estimators, Simpson and
Montgomery (1998) report that MM – estimators have high efficiency and work well in most outlier
scenarios.

2.6 Nonparametric Bootstrap

Nonparametric methods have recently become very popular with statisticians because they do not
require the standard assumptions to hold, which is useful when those assumptions are not met in
practice. Among the nonparametric techniques, the bootstrap proposed by Efron (1979) has become
extremely popular. In this procedure one can create a huge number of sub-samples from an observed
data set by simple random sampling with replacement. These sub-samples can later be used to
investigate the nature of the population without making any assumption about the population
itself.

There are several forms of the bootstrap and, additionally, several other resampling methods
that are related to it, such as jackknifing, cross-validation, randomization tests, and
permutation tests. Suppose that we draw a sample $S = \{x_1, x_2, \ldots, x_n\}$ from a
population $P = \{x_1, x_2, \ldots, x_N\}$; imagine further, at least for the time being, that N
is very much larger than n, and that S is either a simple random sample or an independent random
sample from P. Now suppose that we are interested in some statistic T = t(S) as an estimate of
the corresponding population parameter θ = t(P). Here θ could be a vector of parameters and T the
corresponding vector of estimates, but for simplicity assume that θ is a scalar. A traditional
approach to statistical inference is to make assumptions about the structure of the population
(e.g., an assumption of normality) and, along with the stipulation of random sampling, to use
these assumptions to derive the sampling distribution of T, on which classical inference is
based. In certain instances, the exact distribution of T may be intractable, and so we instead
derive its asymptotic distribution. This familiar approach has two potentially important
deficiencies:

1. If the assumptions about the population are wrong, then the corresponding sampling

distribution of the statistic may be seriously inaccurate. On the other hand, if asymptotic

results are relied upon these may not hold to the required level of accuracy in a relatively

small sample.

2. The approach requires sufficient mathematical process to derive the sampling distribution

of the statistic of interest. In some cases, such a derivation may be prohibitively difficult.

In contrast, the nonparametric bootstrap allows us to estimate the sampling distribution of a
statistic empirically, that is, from the data alone, without making any assumptions about the
form of the population and without deriving the sampling distribution explicitly. The essential
idea of the nonparametric bootstrap is as follows: we proceed to draw a sample of size n from
among the elements of S, sampling with replacement. Call the resulting bootstrap sample
$S_1^* = \{x_{11}^*, x_{12}^*, \ldots, x_{1n}^*\}$. It is necessary to sample with replacement,
because we would otherwise simply reproduce the sample S. In effect, we are treating the sample S
as an estimate of the population P; that is, each element $x_i$ of S is selected for the
bootstrap sample with probability 1/n, mimicking the original selection of the sample S from the
population P. We repeat this procedure a large number of times, B, selecting many bootstrap
samples; the b-th such bootstrap sample is denoted
$S_b^* = \{x_{b1}^*, x_{b2}^*, \ldots, x_{bn}^*\}$. The basic hypothesis is this: representative
and sufficient resamples from the original sample would contain information about the original
population, just as the original sample represents the population.

In the real world, an unknown distribution $F$ has given the observed data $S = \{x_1, x_2, \ldots, x_n\}$ by random sampling. We calculate a statistic of interest $T = t(S)$. In the bootstrap world, the empirical distribution $\hat{F}$ gives bootstrap samples $S_b^* = \{x_{b1}^*, x_{b2}^*, \ldots, x_{bn}^*\}$ by random sampling, from which we calculate bootstrap replications of the statistic of interest, $T_b^* = t(S_b^*)$. The big advantage of the bootstrap world is that we can calculate as many replications of $T_b^*$ as we want, or at least as many as we can afford. The distribution of $T_b^*$ around the original estimate $T$ is then analogous to the sampling distribution of the estimator $T$ around the population parameter $\theta$. For example, the average of the bootstrapped statistics,

$$\bar{T}^* = \hat{E}^*(T^*) = \frac{\sum_{b=1}^{B} T_b^*}{B},$$

estimates the expectation of the bootstrapped statistics; then $\widehat{\text{Bias}}^* = \bar{T}^* - T$ is an estimate of the bias of $T$. Similarly, the estimated bootstrap variance of $T$,

$$\hat{V}^*(T^*) = \frac{\sum_{b=1}^{B} (T_b^* - \bar{T}^*)^2}{B - 1},$$

estimates the sampling variance of $T$.

The random selection of bootstrap samples is not an essential aspect of the nonparametric bootstrap. At least in principle, we could enumerate all bootstrap samples of size $n$. Then we could calculate $E^*(T^*)$ and $V^*(T^*)$ exactly, rather than having to estimate them. The number of bootstrap samples, however, is astronomically large unless $n$ is tiny. There are, therefore, two sources of error in bootstrap inference: (a) the error induced by using a particular sample $S$ to represent the population; and (b) the sampling error produced by failing to enumerate all bootstrap samples. Making the number of bootstrap replications $B$ sufficiently large controls the latter source of error.
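The resampling scheme described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `bootstrap_bias_variance`, the fixed seed and the sample values are hypothetical and are not taken from the Saudi Arabia climate data.

```python
import random
import statistics


def bootstrap_bias_variance(sample, statistic, B=200, seed=0):
    """Estimate the bias and variance of statistic(sample) by resampling."""
    rng = random.Random(seed)          # fixed seed so the sketch is reproducible
    n = len(sample)
    t_original = statistic(sample)     # T = t(S)

    # T_b^* = t(S_b^*) for b = 1..B, each S_b^* drawn with replacement from S
    replications = []
    for _ in range(B):
        resample = [sample[rng.randrange(n)] for _ in range(n)]
        replications.append(statistic(resample))

    t_bar = sum(replications) / B                      # average of the T_b^*
    bias = t_bar - t_original                          # bootstrap bias estimate
    variance = sum((t - t_bar) ** 2 for t in replications) / (B - 1)
    return t_original, bias, variance


# Hypothetical yearly rainy-day counts, used only to exercise the function.
rainy_days = [12, 18, 9, 25, 14, 20, 7, 16, 22, 11]
t, bias, var = bootstrap_bias_variance(rainy_days, statistics.median, B=200)
print(f"T = {t}, bias = {bias:.3f}, variance = {var:.3f}")
```

Any function of the sample can be passed as `statistic`; the median is used here because its sampling distribution is awkward to derive analytically, which is the situation the bootstrap is meant for.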

How large should we take $B$, the number of bootstrap replications used to evaluate different estimates? The number of possible bootstrap samples is $n^n$. To estimate a standard error we usually take between 25 and 250 bootstrap replications, but for other estimates, such as a confidence interval or a regression estimate, $B$ must be much bigger. We may also increase $B$ if $T = t(S)$ is a very complicated function of the data, since this increases its variability. The number of replications also depends on the sample size: if $n \le 100$ we generally take $B \le 10000$.
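To see how quickly exhaustive enumeration becomes infeasible, consider a sample of size $n = 10$ (an illustrative value, not taken from the data). Counting ordered resamples gives $n^n$; if resamples that differ only in the order of their elements are treated as identical, the count drops to $\binom{2n-1}{n}$, a standard combinatorial result that is not stated in the text:

$$n^n = 10^{10}, \qquad \binom{2n-1}{n} = \binom{19}{10} = 92378.$$

For a series of $n = 29$ yearly observations, $n^n = 29^{29}$ is on the order of $10^{42}$, which is why random selection of $B$ resamples is used in practice.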

Here are two rules of thumb gathered from Efron and Tibshirani's experience:

1. Even a small number of bootstrap replications, say B = 25, is usually informative. B = 50 is often enough to give a good estimate of standard error.

2. Very seldom are more than B = 200 replications needed for estimating a standard error.

For estimating a standard error, the number B will ordinarily be in the range 25-200. Much bigger values of B are required for bootstrap confidence intervals, cross-validation, randomization tests and permutation tests. Some other suggestions about the number of replications needed in the bootstrap are also available in the literature [see Efron (1987), Hall (1992), Booth and Sarker (1998)].


2.7 Estimation of Missing Values for Saudi Arabia Climate Data

In order to find the best method for estimating the missing values in time series such as the

number of road accidents in Saudi Arabia, Alshammari (2015) considered the following

methods:

Mean Imputation

Median Imputation

Linear Trend Model

Quadratic Trend Model

Exponential Trend Model

Centered Moving Average

EM-OLS

EM-LTS

EM-LMS

EM-M

EM-MM

BOOT-OLS

BOOT-LTS

BOOT-LMS

BOOT-M

BOOT-MM

According to Alshammari (2015), the expectation maximization algorithm based on the robust MM estimator (EM-MM) performs best in estimating the missing values. We followed his suggestion and estimated the missing values of each variable for each city by the EM-MM method. Here we present time series plots of the estimated values for six important variables in Gizan. We have examined the corresponding plots for all 25 cities reported in Table 2.1, but they are not presented here for brevity.

[Figure: two panels titled "Time Series Plot of Rainy Days"; vertical axis Rainy Days, horizontal axis Year, 1986 to 2014.]

Figure 2.1: Time Series Plot of Total Number of Rainy Days in Gizan with Complete Data

[Figure: two panels titled "Time Series Plot of Temp"; vertical axis Temp, horizontal axis Year, 1986 to 2014.]

Figure 2.2: Time Series Plot of Yearly Temperature of Gizan with Complete Data

[Figure: two panels titled "Time Series Plot of Temp_Max"; vertical axis Temp_Max, horizontal axis Year, 1986 to 2014.]

Figure 2.3: Time Series Plot of Maximum Temperature in Gizan with Complete Data

[Figure: two panels titled "Time Series Plot of Temp_Min"; vertical axis Temp_Min, horizontal axis Year, 1986 to 2014.]

Figure 2.4: Time Series Plot of Minimum Temperature in Gizan with Complete Data

[Figure: two panels titled "Time Series Plot of Wind Speed"; vertical axis Wind Speed, horizontal axis Year, 1986 to 2014.]

Figure 2.5: Time Series Plot of Wind Speed in Gizan with Complete Data

[Figure: two panels titled "Time Series Plot of Rainfall"; vertical axis Rainfall, horizontal axis Year, 1986 to 2014.]

Figure 2.6: Time Series Plot of Rainfall in Gizan with Complete Data

The above plots show that the EM-MM method yields good estimates of the missing values, since they are quite consistent with the neighboring observations.

2.8 Trend of Climate Data for Saudi Arabia

As mentioned above, we estimated the missing values of the climate data for the year 2005 for all 25 major cities in Saudi Arabia, but for brevity we do not present all of them. The following table summarizes our findings for all 25 cities. It presents the trends, after the estimation of missing values, of the six most important climate variables for studying rainfall: rainy days, yearly average temperature, maximum temperature, minimum temperature, wind speed and total amount of rainfall.

Table 2.1: Trend of Complete Climate Data for Saudi Arabia

| City | Rainy Days | Temp (Y) | Temp (Max) | Temp (Min) | Wind Speed | Rainfall |
|---|---|---|---|---|---|---|
| Gizan | Decrease | Increase | Decrease | Increase | Decrease | Decrease |
| Hail | Decrease | Increase | Increase | Increase | Increase | Increase |
| Madinah | Decrease | Increase | Increase | Increase | Decrease | Increase |
| Makkah | Increase | Increase | Increase | Increase | Decrease | Decrease |
| Najran | Decrease | Increase | Increase | Increase | Decrease | Decrease |
| Rafha | Decrease | Increase | Increase | Increase | Decrease | Decrease |
| Riyadh | Decrease | Increase | Increase | Increase | Decrease | Decrease |
| Sharurah | Decrease | Increase | Increase | Increase | Increase | Decrease |
| Tabuk | Decrease | Increase | Increase | Increase | Increase | Decrease |
| Taif | Decrease | Increase | Increase | Increase | Increase | Increase |
| Turaif | Decrease | Increase | Increase | Increase | Increase | Decrease |
| Wejh | Decrease | Increase | Increase | Increase | Decrease | Increase |
| Yanbo | Decrease | Increase | Increase | Increase | Decrease | Decrease |
| Al Baha | Decrease | Increase | Increase | Increase | Decrease | Decrease |
| Sakaka | Decrease | Increase | Increase | Increase | Decrease | Decrease |
| Arar | Decrease | Increase | Increase | Increase | Increase | Increase |
| Buraydah | Decrease | Increase | Increase | Increase | Decrease | Decrease |
| Alqasim | Decrease | Increase | Increase | Increase | Decrease | Decrease |
| Dahran | Decrease | Increase | Increase | Increase | Increase | Decrease |
| Al Ahsa | Increase | Increase | Increase | Increase | Decrease | Increase |
| Khamis | Decrease | Increase | Increase | Increase | Increase | Decrease |
| Jeddah | Decrease | Increase | Increase | Increase | Decrease | Increase |

The rows for Abha, Guriat and Bishah could not be aligned with the columns. Their entries, in the order recorded, are: Abha: Decrease, Increase, Decrease, Decrease; Guriat: Decrease, Decrease, Decrease; Bishah: Decrease, Decrease, Decrease, Increase, Increase, Increase, Decrease, Decrease, Increase, Increase, Increase (this last run presumably includes the entries missing from the Abha and Guriat rows).

CHAPTER 3

MODELING AND FITTING OF DATA USING REGRESSION

AND MEDIATION METHODS

In this chapter we first discuss different classical, robust and nonparametric methods commonly used in regression and later apply them to the climate data of Saudi Arabia.

3.1 Classical Regression Analysis

Regression is probably the most popular and commonly used statistical method in all branches of

knowledge. It is a conceptually simple method for investigating functional relationships among

variables. The user of regression analysis attempts to discern the relationship between a

dependent (response) variable and one or more independent (explanatory/predictor/regressor)

variables. Regression can be used to predict the value of a response variable from knowledge of

the values of one or more explanatory variables.

To describe this situation formally, we define a simple linear regression model

$$Y_i = \alpha + \beta X_i + u_i \qquad (3.1)$$

where $Y$ is a random variable, $X$ is a fixed (nonstochastic) variable and $u$ is a random error term whose value is based on an underlying probability distribution (usually normal). For every value of $X$ there exists a probability distribution of $u$ and therefore a probability distribution of the $Y$'s. We can now fully specify the two-variable linear regression model given in (3.1) by listing its important assumptions.

1. The relationship between $Y$ and $X$ is linear.

2. The $X$'s are nonstochastic variables whose values are fixed.

3. Each error $u_i$ has zero expected value: $E(u_i) = 0$.

4. The error term has constant variance for all observations, i.e., $E(u_i^2) = \sigma^2$, $i = 1, 2, \ldots, n$.

5. The random variables $u_i$ are statistically independent. Thus, $E(u_i u_j) = 0$ for all $i \ne j$.

6. Each error term is normally distributed.

3.1.1 Tests of Regression Coefficients, Analysis of Variance and Goodness of Fit

We often like to establish that the explanatory variable $X$ has a significant effect on $Y$, that is, that the coefficient of $X$ (which is $\beta$) is significant. In this situation the null hypothesis is constructed in a way that makes its rejection possible. We begin with a null hypothesis, which usually states that a certain effect is not present, i.e., $\beta = 0$. We estimate $\beta$ by $\hat{\beta}$ and the standard error of $\hat{\beta}$, denoted by $s_{\hat{\beta}}$, from the data and compute the statistic

$$t = \frac{\hat{\beta}}{s_{\hat{\beta}}} \sim t_{n-2} \qquad (3.2)$$

which means that, under the null hypothesis, the statistic $t$ follows a $t$ distribution with $n - 2$ degrees of freedom.

Residuals can provide a useful measure of the fit between the estimated regression line and the data. A good regression equation is one which helps explain a large proportion of the variance of $Y$. Large residuals imply a poor fit, while small residuals imply a good fit. The problem with using the residuals as a measure of goodness of fit is that their value depends on the units of the dependent variable. For this we require a unit-free measure of the goodness of fit. The total variation of $Y$ (usually known as the total sum of squares, TSS) can be decomposed into two parts: the residual variation of $Y$ (error sum of squares, ESS) and the explained variation of $Y$ (regression sum of squares, RSS). To standardize, we divide both sides of the equation

$$\text{TSS} = \text{ESS} + \text{RSS}$$

by TSS to obtain

$$1 = \frac{\text{ESS}}{\text{TSS}} + \frac{\text{RSS}}{\text{TSS}}.$$

We define the R-squared ($R^2$) of the regression equation as

$$R^2 = \frac{\text{RSS}}{\text{TSS}} = 1 - \frac{\text{ESS}}{\text{TSS}}. \qquad (3.3)$$

Thus $R^2$ is the proportion of the total variation in $Y$ explained by the regression of $Y$ on $X$. It is easy to show that $R^2$ ranges in value between 0 and 1, but it is only a descriptive statistic. Roughly speaking, we associate a high value of $R^2$ (close to 1) with a good fit of the model by the regression line and a low value of $R^2$ (close to 0) with a poor fit. How large must $R^2$ be for the regression equation to be useful? That depends upon the area of application. If we could develop a regression equation to predict the stock market, we would be ecstatic if $R^2 = 0.50$. On the other hand, if we were predicting death in a road accident, we would want the prediction equation to have strong predictive ability, since the consequences of poor prediction could be quite serious.

It is often useful to summarize the decomposition of the variation in $Y$ in terms of an analysis of variance (ANOVA). In such a case the total, explained and unexplained variations in $Y$ are converted into variances by dividing by the appropriate degrees of freedom. This helps us to develop a formal procedure to test the goodness of fit of the regression line. Initially we set the null hypothesis that the fit is not good. In other words, our hypothesis is that the overall regression is not significant, in the sense that the explanatory variable is not able to explain the response variable in a satisfactory way.

ANOVA Table for a Two Variable Regression Model

| Components | Sum of Squares | Degrees of freedom | Mean SS | F statistic |
|---|---|---|---|---|
| Regression | RSS | 1 | RSS/1 = RMS | RMS/EMS ~ $F_{1,\,n-2}$ |
| Error | ESS | n – 2 | ESS/(n – 2) = EMS | |
| Total | TSS | n – 1 | | |

Here we compute the mean sum of squares for both regression (RMS) and error (EMS) by dividing RSS and ESS by their respective degrees of freedom, as shown in column 4 of the above ANOVA table. Finally we compute the ratio RMS/EMS, which follows an $F$ distribution with 1 and $n - 2$ degrees of freedom (this is also the square of a $t$ variable with $n - 2$ d.f.). If the calculated value of this ratio is greater than $F_{1,\,n-2,\,0.05}$, we reject the null hypothesis and conclude that the overall regression is significant at the 5% level of significance.
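The quantities of this subsection (the slope test in (3.2), $R^2$ in (3.3) and the ANOVA $F$ ratio) can be computed together for a two-variable model. The sketch below is a minimal illustration, not the MINITAB procedure used in the thesis; the function name `simple_ols` and the data values are hypothetical.

```python
import math


def simple_ols(x, y):
    """Fit y = a + b*x by ordinary least squares and return summary quantities."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))

    b = sxy / sxx                    # slope estimate
    a = y_bar - b * x_bar            # intercept estimate
    fitted = [a + b * xi for xi in x]

    tss = sum((yi - y_bar) ** 2 for yi in y)                  # total sum of squares
    ess = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))    # error sum of squares
    rss = tss - ess                                           # regression sum of squares

    ems = ess / (n - 2)              # error mean square
    se_b = math.sqrt(ems / sxx)      # standard error of the slope
    return {
        "intercept": a,
        "slope": b,
        "t": b / se_b,               # equation (3.2)
        "r_squared": rss / tss,      # equation (3.3)
        "F": (rss / 1) / ems,        # RMS / EMS from the ANOVA table
    }


# Hypothetical data, chosen only to exercise the function.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 2.9, 4.2, 4.8, 6.1, 6.8, 8.3, 8.9]
fit = simple_ols(x, y)
print(fit)
```

With a single explanatory variable the printed `F` equals the square of `t` up to rounding, which is the relationship between the $F_{1,\,n-2}$ and $t_{n-2}$ distributions noted above.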

3.1.2. Regression Diagnostics and Tests for Normality

Diagnostics are designed to find problems with the assumptions of any statistical procedure. In a diagnostic approach we estimate the parameters by the classical method (OLS) and then see whether there is any violation of the six standard assumptions mentioned at the beginning of this section and/or any irregularity in the results. Among them, the assumption of normality is the most important.

The normality assumption means that the errors are normally distributed. The simplest graphical display for checking normality in regression analysis is the normal probability plot. This method is based on the fact that if the ordered residuals are plotted against their cumulative probabilities on normal probability paper, the resulting points should lie approximately on a straight line. An excellent review of different analytical tests for normality is available in Imon (2003). A test based on the correlation of true observations and the expectation of normalized order statistics is known as the Shapiro–Wilk test. A test based on an empirical distribution function is known as the Anderson–Darling test. It is often very useful to test whether a given data set approximates a normal distribution. This can be evaluated informally by checking whether the mean and the median are nearly equal, whether the skewness is approximately zero, and whether the kurtosis is close to 3. Skewness and kurtosis measure the asymmetry and the peakedness of a population, respectively. The commonly used measures of skewness and kurtosis are

$$S = \frac{\mu_3}{\mu_2^{3/2}} \quad \text{and} \quad K = \frac{\mu_4}{\mu_2^{2}},$$

where $\mu_k = E[X - E(X)]^k$ is called the $k$-th central moment of the random variable $X$. When the data come from a normal population with expectation $\mu$ and variance $\sigma^2$, standard results show that $\mu_3 = 0$ and $\mu_4 = 3\sigma^4$, which yield $S = 0$ and $K = 3$. For samples, we can estimate $\mu_k$ by

$$m_k = \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^k,$$

where $\bar{x}$ is the sample mean for a sample of size $n$. Then the coefficients of skewness and kurtosis are estimated by

$$\hat{S} = \frac{m_3}{m_2^{3/2}} \quad \text{and} \quad \hat{K} = \frac{m_4}{m_2^{2}}.$$

A more formal test for normality is given by the Jarque–Bera statistic:

$$JB = [n/6]\,[\hat{S}^2 + (\hat{K} - 3)^2/4] \qquad (3.4)$$

Imon (2003) suggests a slight adjustment to the JB statistic to make it more suitable for regression problems. His proposed statistic, based on rescaled moments (RM) of ordinary least squares residuals, is defined as

$$RM = [n c^3/6]\,[\hat{S}^2 + c\,(\hat{K} - 3)^2/4] \qquad (3.5)$$

where $c = n/(n - p)$ and $p$ is the number of independent variables in the regression model. Both the JB and the RM statistics follow a chi-square distribution with 2 degrees of freedom. If the values of these statistics are greater than the critical value of the chi-square distribution, we reject the null hypothesis of normality.
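Equations (3.4) and (3.5) are simple enough to compute directly from a set of residuals. The following sketch assumes only the formulas above; the function name `normality_statistics` and the residual values are hypothetical. The p-values use the fact that a chi-square variable with 2 degrees of freedom has upper-tail probability $e^{-x/2}$.

```python
import math


def normality_statistics(residuals, p):
    """Return skewness, kurtosis, JB, RM and their chi-square(2) p-values."""
    n = len(residuals)
    mean = sum(residuals) / n
    # Sample central moments m_k = (1/n) * sum (x_i - mean)^k
    m2 = sum((e - mean) ** 2 for e in residuals) / n
    m3 = sum((e - mean) ** 3 for e in residuals) / n
    m4 = sum((e - mean) ** 4 for e in residuals) / n

    skew = m3 / m2 ** 1.5
    kurt = m4 / m2 ** 2

    jb = (n / 6) * (skew ** 2 + (kurt - 3) ** 2 / 4)               # equation (3.4)
    c = n / (n - p)
    rm = (n * c ** 3 / 6) * (skew ** 2 + c * (kurt - 3) ** 2 / 4)  # equation (3.5)

    # Chi-square with 2 degrees of freedom: P(X > x) = exp(-x / 2).
    return {
        "S": skew, "K": kurt, "JB": jb, "RM": rm,
        "p_JB": math.exp(-jb / 2), "p_RM": math.exp(-rm / 2),
    }


# Hypothetical residuals from a fit with one explanatory variable (p = 1).
stats = normality_statistics([-2.0, -1.0, 0.0, 1.0, 2.0], p=1)
print(stats)
```

Because $c > 1$ whenever $p > 0$, RM is never smaller than JB on the same residuals, so the rescaled version rejects normality at least as readily.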

Here we report a regression analysis for Gizan, in which we try to predict rainfall. At first we regress the total amount of rainfall on the number of rainy days. Common sense tells us that there must be a very strong linear relationship here, but because of too many strange values in the rainfall data the relationship does not turn out to be that strong. The MINITAB output of this analysis is given below. We observe from this output that although the number of rainy days has a significant impact on rainfall (the p-value is 0.016), the $R^2$ of this fit is only 19.7%.

Regression Analysis: Rainfall versus Rainy Days

The regression equation is
Rainfall = - 56.1 + 7.48 Rainy Days

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | -56.14 | 45.58 | -1.23 | 0.229 |
| Rainy Days | 7.480 | 2.909 | 2.57 | 0.016 |

S = 86.9938   R-Sq = 19.7%   R-Sq(adj) = 16.7%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 50053 | 50053 | 6.61 | 0.016 |
| Residual Error | 27 | 204334 | 7568 | | |
| Total | 28 | 254387 | | | |
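As a consistency check, the reported quantities can be tied back to equations (3.2) and (3.3) and to the ANOVA table. The arithmetic below uses only the numbers printed in the MINITAB output, with $n = 29$ taken from the total degrees of freedom. The adjusted $R^2$ formula $1 - (1 - R^2)(n - 1)/(n - 2)$ is the standard one and is not stated in the text, so that line is an assumption about how the adjusted value is obtained.

$$
\begin{aligned}
t &= \frac{\hat{\beta}}{s_{\hat{\beta}}} = \frac{7.480}{2.909} \approx 2.57, \\
F &= \frac{\text{RMS}}{\text{EMS}} = \frac{50053}{7568} \approx 6.61 \approx t^2, \\
R^2 &= \frac{\text{RSS}}{\text{TSS}} = \frac{50053}{254387} \approx 0.197, \\
R^2_{\text{adj}} &= 1 - (1 - 0.197)\,\frac{28}{27} \approx 0.167.
\end{aligned}
$$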

The normal probability plot as shown in Figure 3.1 clearly shows a nonnormal pattern

and the corresponding RM statistic has p-value 0.003.

[Figure: "Normal Probability Plot (response is Rainfall)"; vertical axis Percent, horizontal axis Residual.]

Figure 3.1: Normal Probability Plot of Rainfall vs Rainy Days in Gizan

When we include a few more explanatory variables such as temperature, maximum temperature,

minimum temperature and wind speed, the fit improves a bit. The R 2 value goes up to 54.6%

and the normal probability plot (see Figure 3.2) shows a better normality pattern. The p-value of

the RM statistic is 0.765.

Regression Analysis: Rainfall versus Rainy Days, Temp, ...

The regression equation is
Rainfall = 1933 + 6.57 Rainy Days + 118 Temp - 92.4 Temp_Max - 97.6 Temp_Min + 21.1 Wind Speed

| Predictor | Coef | SE Coef | T | P |
|---|---|---|---|---|
| Constant | 1933 | 1648 | 1.17 | 0.253 |
| Rainy Days | 6.575 | 2.565 | 2.56 | 0.017 |
| Temp | 118.43 | 84.63 | 1.40 | 0.175 |
| Temp_Max | -92.45 | 39.58 | -2.34 | 0.029 |
| Temp_Min | -97.60 | 55.44 | -1.76 | 0.092 |
| Wind Speed | 21.10 | 12.53 | 1.68 | 0.106 |

S = 70.8575   R-Sq = 54.6%   R-Sq(adj) = 44.7%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 5 | 138909 | 27782 | 5.53 | 0.002 |
| Residual Error | 23 | 115478 | 5021 | | |
| Total | 28 | 254387 | | | |

| Source | DF | Seq SS |
|---|---|---|
| Rainy Days | 1 | 50053 |
| Temp | 1 | 14986 |
| Temp_Max | 1 | 19635 |
| Temp_Min | 1 | 39996 |
| Wind Speed | 1 | 14239 |

[Figure: "Normal Probability Plot (response is Rainfall)"; vertical axis Percent, horizontal axis Residual.]

Figure 3.2: Normal Probability Plot of Rainfall (PP) vs Different Climate Variables in Gizan

But we are not entirely happy with this fit. Figure 3.3 gives the fitted values of rainfall together with the original values. We observe from this plot that for 8 years we predict a negative amount of rainfall, which cannot be true.

[Figure: "Scatterplot of Rainfall, Fitted Rainfall vs Year"; series Rainfall and Fitted Rainfall; vertical axis Y-Data, horizontal axis Year.]

Figure 3.3: Time Series Plot of Original and Predicted Rainfall (PP) for Gizan

We see a similar pattern in the rainfall data for other regions of Saudi Arabia; the detailed results are omitted for brevity.

3.2 Mediation

We have already observed some strange behavior of the rainfall data for different parts of Saudi Arabia. In many years the total amount of rainfall is zero although there are quite a few rainy days. This clearly indicates that something is wrong with the data: when there are 30 to 40 rainy days in a year, the total amount of rainfall cannot be zero. Probably the corresponding data are missing and were wrongly entered as zeroes in the data sheet. In such a situation a regression model can fail to predict rainfall. But we have seen in the previous section that the number of rainy days has a significant linear relationship with the total amount of rainfall. Since the data on the total number of rainy days are complete, we can use that information to predict or forecast rainfall by an indirect regression approach. Indirect regression is popularly known as mediation in the regression literature.

In statistics, a mediation model is one that seeks to identify and explicate the mechanism or process that underlies an observed relationship between an explanatory (independent) variable and a response (dependent) variable via the inclusion of a third explanatory variable, known as a mediator variable. The mediation method was first proposed by Baron and Kenny (1986) and then developed by many authors. An excellent review of the mediation technique is available in Montgomery et al. (2014). Rather than hypothesizing a direct causal relationship between the independent variable and the dependent variable, a mediational model hypothesizes that the independent variable influences the mediator variable, which in turn influences the dependent variable. Thus, the mediator variable serves to clarify the nature of the relationship between the independent and dependent variables. In other words, mediating relationships occur when a third variable plays an important role in governing the relationship between the other two variables.

Figure 3.4: Regression vs Mediation Analysis

Figure 3.4 gives a visual representation of the overall mediating relationship to be explained.

Mediation analyses are employed to understand a known relationship by exploring the

underlying mechanism or process by which one variable (X) influences another variable (Y)

through a mediator (M). For example, suppose a cause X affects a variable (Y) presumably

through some intermediate process (M). In other words X leads to M which leads to Y. In our

study we assume that the climate variables will lead to the number of rainy days and that will

lead to total amount of rainfall. Thus, the number of rainy days has become an intervening

variable which is called a mediator.

3.3 Accuracy Measures

When several methods are available for fitting or predicting a regression or time series, we need some accuracy measures to compare the goodness of fit. For a regular regression and/or time series, three measures of accuracy of the fitted model are very commonly used: MAPE, MAD, and MSD. For all three measures, the smaller the value, the better the fit of the model. We use these statistics to compare the fits of the different methods.

3.3.1. MAPE

Mean absolute percentage error (MAPE) measures the accuracy of fitted time series values. It expresses accuracy as a percentage.

$$\text{MAPE} = \frac{\sum_{t=1}^{T} \left| (y_t - \hat{y}_t)/y_t \right|}{T} \times 100 \qquad (3.6)$$

where $y_t$ equals the actual value, $\hat{y}_t$ equals the fitted value, and $T$ equals the number of observations. The ratio is defined only for $y_t \ne 0$.

3.3.2. MAD

Mean absolute deviation (MAD) measures the accuracy of fitted time series values. It expresses accuracy in the same units as the data, which helps conceptualize the amount of error.

$$\text{MAD} = \frac{\sum_{t=1}^{T} |y_t - \hat{y}_t|}{T} \qquad (3.7)$$

where $y_t$ equals the actual value, $\hat{y}_t$ equals the fitted value, and $T$ equals the number of observations.

3.3.3. MSD

Mean squared deviation (MSD) is always computed using the same denominator, $T$, regardless of the model, so we can compare MSD values across models. MSD is a more sensitive measure of an unusually large forecast error than MAD.

$$\text{MSD} = \frac{\sum_{t=1}^{T} (y_t - \hat{y}_t)^2}{T} \qquad (3.8)$$

where $y_t$ equals the actual value, $\hat{y}_t$ equals the fitted value, and $T$ equals the number of observations.

3.4 A Comparison of Regression and Mediation Fits for Gizan

To offer a comparison between regression and mediation methods in fitting the rainfall data, we

fit the data by both methods and the results of the fitted values together with the actual rainfall

values are presented in Table 3.1. It is worth mentioning that we employed the ordinary least

squares method to compute the regression fits. Here the response variable is total amount of

rainfall and the explanatory variables are temperature, maximum temperature, minimum

temperature, number of rainy days and wind speed. For the mediation values at first we predict

the mediator variable total number of rainy days by temperature, maximum and minimum

temperature and wind speed and then obtain the predicted rainfall as

Predicted rainfall = Predicted number of rainy days × average rainfall per day
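The two-step calculation can be sketched as follows. This is a deliberately simplified illustration, not the fit reported in this chapter: it uses a single climate predictor instead of four, the data values are hypothetical, and it assumes that "average rainfall per day" means total rainfall divided by total rainy days over the fitting period, which the text does not define explicitly. The names `fit_line` and `mediation_fit` are likewise made up for the example.

```python
def fit_line(x, y):
    """Least-squares intercept and slope for y = a + b*x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
         / sum((xi - x_bar) ** 2 for xi in x))
    return y_bar - b * x_bar, b


def mediation_fit(climate, rainy_days, rainfall):
    """Two-step fit: climate -> rainy days (mediator) -> rainfall."""
    # Step 1: predict the mediator from the climate variable.
    a, b = fit_line(climate, rainy_days)
    predicted_days = [a + b * c for c in climate]

    # Step 2: convert predicted rainy days into rainfall.
    # Assumption: average rainfall per day = total rainfall / total rainy days.
    rain_per_day = sum(rainfall) / sum(rainy_days)
    return [d * rain_per_day for d in predicted_days]


# Hypothetical values for illustration only (not the Gizan data).
wind_speed = [10.5, 11.0, 11.8, 12.4, 13.1, 13.6]
rainy_days = [8, 11, 14, 19, 22, 27]
rainfall = [0.0, 35.0, 60.0, 0.0, 140.0, 190.0]
print([round(v, 1) for v in mediation_fit(wind_speed, rainy_days, rainfall)])
```

Under this assumption the fitted rainfall is nonnegative whenever the predicted number of rainy days is nonnegative, and the fitted values sum to the observed total, because least-squares fitted values with an intercept reproduce the total of the mediator.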

Table 3.1: Actual and Predicted Fits of Rainfall for Gizan

| Year | Rainfall | Regression | Mediation |
|---|---|---|---|
| 1986 | 0.00 | 44.283 | 16.100 |
| 1987 | 0.00 | 8.429 | 17.120 |
| 1988 | 0.00 | -38.270 | 14.940 |
| 1989 | 28.96 | -7.876 | 15.930 |
| 1990 | 53.09 | 42.723 | 53.140 |
| 1991 | 0.00 | 48.151 | 48.151 |
| 1992 | 318.77 | 256.727 | 256.727 |
| 1993 | 310.89 | 219.078 | 309.800 |
| 1994 | 2.03 | 96.859 | 56.320 |
| 1995 | 113.80 | 184.732 | 184.732 |
| 1996 | 257.05 | 89.395 | 210.236 |
| 1997 | 48.01 | 87.237 | 87.237 |
| 1998 | 2.03 | 81.041 | 39.876 |
| 1999 | 0.00 | 10.041 | 10.041 |
| 2000 | 0.00 | 26.527 | 26.527 |
| 2001 | 0.00 | 27.865 | 27.865 |
| 2002 | 0.00 | -15.492 | 15.234 |
| 2003 | 0.00 | -40.160 | 16.132 |
| 2004 | 12.95 | -1.602 | 14.768 |
| 2005 | 46.70 | 44.897 | 44.897 |
| 2006 | 10.20 | 77.789 | 34.935 |
| 2007 | 9.80 | 85.927 | 55.673 |
| 2008 | 9.15 | 2.663 | 2.663 |
| 2009 | 9.15 | -9.468 | 12.254 |
| 2010 | 9.80 | 48.110 | 15.897 |
| 2011 | 10.20 | -0.415 | 16.944 |
| 2012 | 91.70 | 99.371 | 99.371 |
| 2013 | 206.78 | 39.181 | 134.143 |
| 2014 | 0.00 | 43.317 | 55.045 |

[Figure: "Scatterplot of Rainfall, Regression, Mediation vs Year"; series Rainfall, Regression and Mediation; vertical axis Y-Data, horizontal axis Year.]

Figure 3.5: Time Series Plot of Actual and Predicted Fits of Rainfall (PP) for Gizan

A graphical display of these data in terms of a time series plot is presented in Figure 3.5. This graph clearly shows that the mediation method fits these data better than the regression method.

Table 3.2: Accuracy Measures for Regression and Mediation Fits of Rainfall for Gizan

| Measures | Regression | Mediation |
|---|---|---|
| MAPE | 228.50 | 68.34 |
| MAD | 47.06 | 25.84 |
| MSD | 3930.88 | 1156.93 |

Finally we compute the accuracy measures MAPE, MAD and MSD for the fitted values obtained by the regression and mediation methods and present them in Table 3.2. This table clearly shows that all three accuracy measures for mediation are much smaller than the corresponding regression results. Thus we may conclude that there is empirical evidence that the mediation method fits the rainfall data of Gizan better than the regression method.
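The accuracy measures of Section 3.3 can be recomputed from the fitted values listed in Table 3.1. The sketch below does so under one explicit assumption: years with zero recorded rainfall are skipped in the MAPE, since the percentage error is undefined there. The text does not say how these years were treated, so the MAPE printed here need not match Table 3.2. The function name `accuracy_measures` is hypothetical.

```python
def accuracy_measures(actual, fitted):
    """Return (MAPE, MAD, MSD) following equations (3.6)-(3.8)."""
    T = len(actual)
    errors = [y - f for y, f in zip(actual, fitted)]
    mad = sum(abs(e) for e in errors) / T
    msd = sum(e * e for e in errors) / T
    # Assumption: observations with y = 0 are skipped in MAPE,
    # because |(y - f) / y| is undefined when y = 0.
    pct = [abs(e / y) for e, y in zip(errors, actual) if y != 0]
    mape = 100 * sum(pct) / len(pct) if pct else float("nan")
    return mape, mad, msd


# Values from Table 3.1 for Gizan, 1986-2014.
rainfall = [0.00, 0.00, 0.00, 28.96, 53.09, 0.00, 318.77, 310.89, 2.03,
            113.80, 257.05, 48.01, 2.03, 0.00, 0.00, 0.00, 0.00, 0.00,
            12.95, 46.70, 10.20, 9.80, 9.15, 9.15, 9.80, 10.20, 91.70,
            206.78, 0.00]
regression = [44.283, 8.429, -38.270, -7.876, 42.723, 48.151, 256.727,
              219.078, 96.859, 184.732, 89.395, 87.237, 81.041, 10.041,
              26.527, 27.865, -15.492, -40.160, -1.602, 44.897, 77.789,
              85.927, 2.663, -9.468, 48.110, -0.415, 99.371, 39.181, 43.317]
mediation = [16.100, 17.120, 14.940, 15.930, 53.140, 48.151, 256.727,
             309.800, 56.320, 184.732, 210.236, 87.237, 39.876, 10.041,
             26.527, 27.865, 15.234, 16.132, 14.768, 44.897, 34.935,
             55.673, 2.663, 12.254, 15.897, 16.944, 99.371, 134.143, 55.045]

for name, fit in (("Regression", regression), ("Mediation", mediation)):
    mape, mad, msd = accuracy_measures(rainfall, fit)
    print(f"{name}: MAPE = {mape:.2f}, MAD = {mad:.2f}, MSD = {msd:.2f}")
```

On these values the MAD figures agree with Table 3.2 to two decimals (47.06 and 25.84). The MSD and MAPE can differ somewhat from the tabulated values depending on rounding and on how zero-rainfall years are handled, but the ordering is the same: mediation is smaller on every measure.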


CHAPTER 4

FORECASTING WITH ARIMA MODELS

In this chapter we discuss different aspects of data analysis techniques useful in time series analysis. Here the prime topic of our discussion will be ARIMA models. We will talk about fitting a model and generating forecasts using ARIMA models. An excellent review of different aspects of stochastic time series modelling is available in Pindyck and Rubinfeld (1998), Bowerman et al. (2005) and Imon (2015). We assume that the time series has been generated by a stochastic process. In other words, we assume that each value $y_1, y_2, \ldots, y_T$ in the series is randomly drawn from a probability distribution. We could assume that the observed series $y_1, y_2, \ldots, y_T$ is drawn from a set of jointly distributed random variables. If we could specify the probability distribution function of our series, we could determine the probability of one or another future outcome. Unfortunately, the complete specification of the probability distribution function for a time series is usually impossible. However, it usually is possible to construct a simplified model of the time series which explains its randomness in a manner that is useful for forecasting purposes.

4.1 The Box-Jenkins Methodology

The Box-Jenkins methodology consists of a four-step iterative procedure.

Step 1: Tentative Identification: Historical data are used to tentatively identify an appropriate

Box-Jenkins model.

Step 2: Estimation: Historical data are used to estimate the parameters of the tentatively identified model.

Step 3: Diagnostic Checking: Various diagnostics are used to check the adequacy of the

tentatively identified model and, if need be, to suggest an improved model, which is then

regarded as a new tentatively identified model.

Step 4: Forecasting: Once a final model is obtained, it is used to forecast future time series

values.

4.2 Stationary and Nonstationary Time Series

It is important to know whether the stochastic process that generates the series can be assumed to

be invariant with respect to time. If the characteristic of the stochastic process changes over time

we call the process nonstationary. If the process is nonstationary, it will often be difficult to

represent the time series over past and future intervals of time by a simple algebraic model. By

contrast, if the process is stationary, one can model the process via an equation with fixed

coefficients that can be estimated from past data.

Properties of Stationary Process

We have said that any stochastic time series $y_1, y_2, \ldots, y_T$ can be thought of as having been generated by a set of jointly distributed random variables; i.e., the set of data points $y_1, y_2, \ldots, y_T$ represents a particular outcome (also known as a realization) of the joint probability distribution function $p(y_1, y_2, \ldots, y_T)$. Similarly, a future observation $y_{T+1}$ can be thought of as being generated by a conditional probability distribution function

$$p(y_{T+1} \mid y_1, y_2, \ldots, y_T) \qquad (4.1)$$

that is, a probability distribution for $y_{T+1}$ given the past observations $y_1, y_2, \ldots, y_T$. We define a stationary process, then, as one whose joint distribution and conditional distribution both are invariant with respect to displacement in time. In other words, if the series is stationary, then

$$p(y_t, y_{t+1}, \ldots, y_{t+k}) = p(y_{t+m}, y_{t+m+1}, \ldots, y_{t+m+k})$$

and

$$p(y_t) = p(y_{t+m}) \qquad (4.2)$$

for any $t$, $k$, and $m$.

If the series $y_t$ is stationary, the mean of the series, which is defined as

$$\mu_y = E(y_t) \qquad (4.3)$$

must also be stationary, so that $E(y_t) = E(y_{t+m})$ for any $t$ and $m$. Furthermore, the variance of the series

$$\sigma_y^2 = E[(y_t - \mu_y)^2] \qquad (4.4)$$

must be stationary, so that

$$E[(y_t - \mu_y)^2] = E[(y_{t+m} - \mu_y)^2]. \qquad (4.5)$$

Finally, for any lag $k$, the covariance of the series

$$\gamma_k = \text{Cov}(y_t, y_{t+k}) = E[(y_t - \mu_y)(y_{t+k} - \mu_y)] \qquad (4.6)$$

must be stationary, so that $\text{Cov}(y_t, y_{t+k}) = \text{Cov}(y_{t+m}, y_{t+m+k})$.

If a stochastic process is stationary, the probability distribution $p(y_t)$ is the same for all time $t$ and its shape can be inferred by looking at the histogram of the observations $y_1, y_2, \ldots, y_T$. An estimate of the mean $\mu_y$ can be obtained from the sample mean

$$\bar{y} = \sum_{t=1}^{T} y_t / T \qquad (4.7)$$

and an estimate of the variance $\sigma_y^2$ can be obtained from the sample variance

$$\hat{\sigma}_y^2 = \sum_{t=1}^{T} (y_t - \bar{y})^2 / T. \qquad (4.8)$$

Usually it is very difficult to get a complete description of a stochastic process. The autocorrelation function can be extremely useful because it provides a partial description of the process for modeling purposes. The autocorrelation function tells us how much correlation there is between neighboring data points in the series $y_t$. We define the autocorrelation with lag $k$ as

$$\rho_k = \frac{\text{Cov}(y_t, y_{t+k})}{\sqrt{V(y_t)\,V(y_{t+k})}} \qquad (4.9)$$

For a stationary time series the variance at time $t$ is the same as the variance at time $t + k$; thus from (4.6) the autocorrelation becomes

$$\rho_k = \frac{\gamma_k}{\gamma_0} = \frac{\gamma_k}{\sigma_y^2} \qquad (4.10)$$

where $\gamma_0 = \text{Cov}(y_t, y_t) = E[(y_t - \mu_y)(y_t - \mu_y)] = \sigma_y^2$, and thus $\rho_0 = 1$ for any stochastic process.

Suppose the stochastic process is simply

$$y_t = \varepsilon_t$$

where $\varepsilon_t$ is an independently distributed random variable with zero mean. Then it is easy to show that for this process, $\rho_0 = 1$ and $\rho_k = 0$ for k > 0. This particular process is known as white noise, and there is no model that can provide a forecast any better than $\hat{y}_{T+l} = 0$ for all l.

Thus, if the autocorrelation function is zero (or close to zero) for all k > 0, there is little or no

value in using a model to forecast the series.
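The white noise result follows directly from independence. A short derivation is sketched here, writing the process as $y_t = \varepsilon_t$ with $E(\varepsilon_t) = 0$ and additionally assuming a finite, constant variance $\sigma_\varepsilon^2$ (an assumption not stated explicitly above):

```latex
\begin{aligned}
\gamma_k &= E[(y_t - \mu_y)(y_{t+k} - \mu_y)]
          = E[\varepsilon_t \varepsilon_{t+k}]
          = E[\varepsilon_t]\,E[\varepsilon_{t+k}] = 0, \qquad k > 0, \\
\gamma_0 &= E[\varepsilon_t^2] = \sigma_\varepsilon^2, \\
\rho_k   &= \gamma_k / \gamma_0 = 0, \qquad k > 0, \\
E[y_{T+l} \mid y_1, \ldots, y_T] &= E[\varepsilon_{T+l}] = 0, \qquad l \ge 1.
\end{aligned}
```

The last line is why the zero forecast cannot be improved upon in the mean-squared-error sense: the past observations carry no information about future values.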

4.3 Test for Significance of a White Noise Autocorrelation Function

In practice, we use an estimate of the autocorrelation function, called the sample autocorrelation

(SAC) function

$$r_k = \frac{\sum_{t=1}^{T-k} (y_t - \bar{y})(y_{t+k} - \bar{y})}{\sum_{t=1}^{T} (y_t - \bar{y})^2} \tag{4.11}$$

It is easy to see from their definitions that both the theoretical and the estimated autocorrelation

functions are symmetrical; i.e., $\rho_k = \rho_{-k}$ and $r_k = r_{-k}$.
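The sample autocorrelation in (4.11) can be sketched in a few lines. The function name `sample_acf` and the toy series are hypothetical, chosen for illustration; the numerator sums over the T - k available pairs while the denominator uses all T observations.

```python
def sample_acf(y, max_lag):
    """Return [r_0, r_1, ..., r_max_lag] following (4.11)."""
    T = len(y)
    y_bar = sum(y) / T
    dev = [v - y_bar for v in y]            # deviations from the sample mean
    denom = sum(d * d for d in dev)         # sum over all T observations
    acf = []
    for k in range(max_lag + 1):
        # Numerator uses only the T - k pairs (y_t, y_{t+k}).
        num = sum(dev[t] * dev[t + k] for t in range(T - k))
        acf.append(num / denom)
    return acf


# Toy series (illustrative only): deviations are -2, -1, 0, 1, 2.
series = [1.0, 2.0, 3.0, 4.0, 5.0]
r = sample_acf(series, 2)   # approximately [1.0, 0.4, -0.1]
```

Because the numerator has fewer terms than the denominator, r_k is pulled toward zero at large lags, which is one reason only the first several lags of a short series are usually inspected.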

4.3.1. Bartlett’s test

Here the null hypothesis is $H_0: \rho_k = 0$ for k > 0. Bartlett shows that when the time series is

generated by a white noise process, the sample autocorrelation function is distributed

approximately as a normal with mean 0 and variance 1/T. Hence, the test statistic is

$$|z| = \sqrt{T}\,|r_k| \tag{4.12}$$

and we reject the null hypothesis at the 5% level of significance if |z| is greater than 1.96.
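A minimal sketch of Bartlett's test for a single lag is given below. The function name `bartlett_test` and the example values of the sample autocorrelation and T are hypothetical; the default critical value 1.96 is the two-sided 5% point of the standard normal distribution.

```python
import math


def bartlett_test(r_k, T, critical=1.96):
    """Return (|z|, reject) where |z| = sqrt(T) * |r_k|.

    Under white noise, r_k is approximately N(0, 1/T), so |z| is compared
    with a standard normal critical value.
    """
    z = math.sqrt(T) * abs(r_k)
    return z, z > critical


# Hypothetical values: T = 100 observations.
z_a, reject_a = bartlett_test(0.25, 100)   # |z| = 2.5, reject H0
z_b, reject_b = bartlett_test(0.10, 100)   # |z| = 1.0, do not reject H0
```

Equivalently, a sample autocorrelation is flagged when it falls outside the band of plus or minus 1.96 divided by the square root of T, which is the band usually drawn on a correlogram.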

4.3.2. The t-test based on SAC

The standard error of $r_k$ is given by

$$SE(r_k) = \begin{cases} 1/\sqrt{T} & \text{if } k = 1 \\ \sqrt{\left(1 + 2\sum_{i=1}^{k-1} r_i^2\right)/T} & \text{if } k > 1. \end{cases} \tag{4.13}$$

The t-statistic for testing the hypothesis $H_0: \rho_k = 0$ for k > 0 is defined as

$$T = r_k / SE(r_k) \tag{4.14}$$

and this test is significant when |T| > 2.
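The standard errors in (4.13) and the t-statistics in (4.14) can be sketched as follows. The function names and the example autocorrelations are hypothetical. To avoid the clash between the sample size T and the statistic in (4.14), the code calls the sample size `n_obs`.

```python
import math


def sac_standard_errors(r, n_obs):
    """Given r = [r_1, ..., r_K], return [SE(r_1), ..., SE(r_K)] per (4.13)."""
    se = []
    cum = 0.0  # running sum of r_i^2 for i = 1, ..., k - 1
    for k in range(1, len(r) + 1):
        # For k = 1 the sum is empty, so this reduces to 1 / sqrt(n_obs).
        se.append(math.sqrt((1.0 + 2.0 * cum) / n_obs))
        cum += r[k - 1] ** 2
    return se


def sac_t_statistics(r, n_obs):
    """t-statistics r_k / SE(r_k) as in (4.14)."""
    return [r_k / s for r_k, s in zip(r, sac_standard_errors(r, n_obs))]


# Hypothetical sample autocorrelations at lags 1 and 2, with 100 observations.
r = [0.5, 0.3]
se = sac_standard_errors(r, 100)
t_stats = sac_t_statistics(r, 100)
significant = [abs(t) > 2 for t in t_stats]
```

The standard error grows with the lag whenever earlier autocorrelations are nonzero, so a given value of the sample autocorrelation is less likely to be judged significant at a higher lag than at lag 1.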

4.3.3. Box and Pierce Test and Ljung and Box Test

To test the joint hypothesis that all the autocorrelation coefficients are zero we use a test statistic

introduced by Box and Pierce (1970). Here the null hypothesis is

$$H_0: \rho_1 = \rho_2 = \cdots = \rho_k = 0.$$

Box and Pierce show that the appropriate statistic for testing this null hypothesis is

$$Q = T \sum_{i=1}^{k} r_i^2, \tag{4.15}$$

which is approximately distributed as chi-square with k degrees of freedom.

A slight modification of the Box-Pierce test was suggested by Ljung and Box (1978), which is known as the Ljung-Box Q (LBQ) test, defined as

$$Q = T(T+2) \sum_{i=1}^{k} (T - i)^{-1} r_i^2. \tag{4.16}$$

Thus, if the calculated value of Q is greater than the chi-square critical value at, say, the 5% level, we reject at that level the hypothesis that the true autocorrelation coefficients are all zero.
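Both portmanteau statistics can be sketched directly from a list of sample autocorrelations. The function names and example values are hypothetical. The Ljung-Box version below uses the standard form in which the i-th squared autocorrelation is weighted by 1/(T - i). The chi-square critical value is passed in by the caller; 5.991 is the 5% point for 2 degrees of freedom.

```python
def box_pierce_q(r, T):
    """Box-Pierce statistic: Q = T * sum of r_i^2 for i = 1, ..., k."""
    return T * sum(r_i ** 2 for r_i in r)


def ljung_box_q(r, T):
    """Ljung-Box statistic: Q = T (T + 2) * sum of r_i^2 / (T - i)."""
    return T * (T + 2) * sum(
        r_i ** 2 / (T - i) for i, r_i in enumerate(r, start=1)
    )


# Hypothetical sample autocorrelations at lags 1 and 2, with T = 100.
r = [0.3, 0.2]
T = 100
chi2_crit_5pct_2df = 5.991  # 5% critical value, chi-square with 2 d.f.

q_bp = box_pierce_q(r, T)
q_lb = ljung_box_q(r, T)
reject_bp = q_bp > chi2_crit_5pct_2df
reject_lb = q_lb > chi2_crit_5pct_2df
```

The Ljung-Box statistic is always at least as large as the Box-Pierce statistic for the same autocorrelations, since each weight (T + 2)/(T - i) exceeds one; the difference matters mainly for short series.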

4.3.4. Stationarity and the Autocorrelation Function

How can we decide whether a series is stationary or determine the appropriate number of times a

homogeneous nonstationary series should be differenced to arrive at a stationary series? The correlogram (a plot of autocorrelation coefficients against the number of lag periods) can be a useful indicator. For a stationary series, the autocorrelation function drops off as k becomes large, but this usually is not the case for a nonstationary series. In order to employ the Box-Jenkins methodology, we must examine the behavior of the SAC. The SAC for a nonseasonal

time series can display a variety of behaviors. First, the SAC for a nonseasonal time series can

cut off at lag k. We say that a spike at lag k exists in the SAC if the SAC at lag k is statistically

significant. Second, we say that the SAC dies down if this function does not cut off but rather

decreases in a steady fashion. In general, it can be said that

1. If the SAC of the time series values either cuts off fairly quickly or dies down fairly

quickly, then the time series values should be considered stationary.

2. If the SAC of the time series values dies down extremely slowly, then the time series

values should be considered nonstationary.
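The two rules above can be illustrated on simulated data. This is only a sketch under stated assumptions: the series are artificial (Gaussian white noise and the random walk built from it), the seed and length are arbitrary, and `sample_acf` is a hypothetical helper implementing (4.11).

```python
import random


def sample_acf(y, max_lag):
    """Return [r_0, ..., r_max_lag] following (4.11)."""
    T = len(y)
    y_bar = sum(y) / T
    dev = [v - y_bar for v in y]
    denom = sum(d * d for d in dev)
    return [
        sum(dev[t] * dev[t + k] for t in range(T - k)) / denom
        for k in range(max_lag + 1)
    ]


random.seed(0)  # arbitrary seed, for reproducibility only
T = 2000

# Stationary example: Gaussian white noise.
noise = [random.gauss(0.0, 1.0) for _ in range(T)]

# Nonstationary example: random walk, the cumulative sum of the noise.
walk = []
level = 0.0
for e in noise:
    level += e
    walk.append(level)

# First difference of the random walk, which recovers a stationary series.
diff = [walk[t] - walk[t - 1] for t in range(1, T)]

acf_noise = sample_acf(noise, 20)  # near zero for every lag k > 0
acf_walk = sample_acf(walk, 20)    # starts near 1 and dies down very slowly
acf_diff = sample_acf(diff, 20)    # near zero again after differencing
```

In this sketch the SAC of the random walk stays close to one over the first lags, which by rule 2 signals nonstationarity, while one round of differencing gives an SAC that cuts off immediately, consistent with rule 1.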

4.4 ARIMA Models

In this section we introduce some commonly used stochastic time series models which are

popularly known as integrated autoregressive moving average (ARIMA) models.

4.4.1. White Noise