Document 10983496

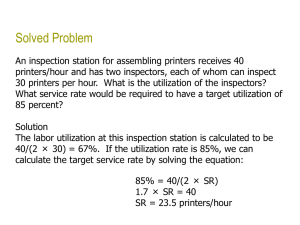
