A Novel Inference Algorithm On Graphical Model

by

Yewen Pu

Submitted to the Department of Electrical Engineering and Computer Science

in partial fulfillment of the requirements for the degree of


Masters of Science in Computer Science and Engineering


at the


MASSACHUSETTS INSTITUTE OF TECHNOLOGY


February 2015

© Massachusetts Institute of Technology 2015. All rights reserved.

Signature redacted

Author

Department of Electrical Engineering and Computer Science

January 30, 2015

Signature redacted

Certified by

Armando Solar-Lezama

Associate Professor

Thesis Supervisor

Signature redacted

Accepted by

Professor Leslie A. Kolodziejski

Chair, Department Committee on Graduate Theses

A Novel Inference Algorithm On Graphical Model

by

Yewen Pu

Submitted to the Department of Electrical Engineering and Computer Science

on Jan 30, 2015, in partial fulfillment of the

requirements for the degree of

Masters of Science in Computer Science and Engineering

Abstract

We present a framework for approximate inference that, given a factor graph and a subset of its variables, produces an approximate marginal distribution over these variables with bounds. The factors of the factor graph are abstracted as piecewise polynomial functions with lower and upper bounds, and a variant of the variable elimination algorithm solves the inference problem over this abstraction. The bounds on the resulting distribution quantify its error with respect to the true distribution. We also give a set of heuristics for improving the bounds by further refining the binary space partition trees.

Thesis Supervisor: Armando Solar-Lezama

Title: Associate Professor


Acknowledgments

A list:

Thanks Armando, my advisor, for guidance and mental therapies and kind words.

Thanks Zenna, my labmate, for asking "but what does it mean?"

Thanks Rohit, my labmate, for help with algorithms and for speaking Spanish.

Thanks Thomas Gregoire, Alex Townsend, Will Cuello, for math.

Thanks Meng, who, in hours of desperation, developed the skill to cook.


Contents

1 Introduction

1.1 Introduction Overview

1.2 Bayesian Inference

1.3 Probabilistic Programming

1.4 Related Works

1.4.1 Sampling

1.4.2 Variational Inference

1.4.3 Variable Elimination

1.5 Our Approach

2 The Algorithm

2.1 Algorithm Overview

2.2 Factor Graph

2.2.1 Factors

2.3 Factor Abstraction

2.3.1 Distribution Abstraction

2.3.2 Patch

2.3.3 Abstract Factor

2.4 Operations on Factors

2.4.1 Variable Elimination

2.4.2 Patch for the original factors

2.4.3 Patch for multiplication

2.4.4 Patch for integration

2.5 Refinement Heuristic

2.5.1 Measurement of Error

2.5.2 The Splitting Heuristic

2.5.3 Optimization of the Heuristic

2.6 Polynomial Bounds

2.6.1 Polynomial Approximation

2.6.2 Approximate Bounding

2.6.3 Exact Bounding

2.6.4 Bounding Polynomials Exactly

2.6.5 Bounding Potential Functions Exactly

3 Results

3.1 Simple Constraint

3.2 Backward Inference

A Potential Function and Distance Functions

List of Figures

1-1 A code snippet showing how one might express our example problem in a probabilistic programming language setting

2-1 Visualizing the factor graph of the bus problem

2-2 An example of a partition set Pf and the finest partition part*

2-3 The patch set Pf organized as a BSP to form the abstract factor Gf

2-4 The 1-dimensional abstract factors G1 and G2 are being multiplied. The upper bound for the domain d is computed by multiplying together the polynomials from the smallest coverings of d from each of the abstract factors

2-5 The 2-dimensional abstract factor G1 is integrated. A domain d has its intersected patches in G1 drawn; for brevity, these patches do not necessarily come from a BSP

2-6 The domain d, a rectangle, has its distance bounded by a and b, derived from the bounding sphere

3-1 Visualizing the resulting factor of propagating a simple constraint

3-2 Visualizing the relationship between y and x after making an observation

List of Tables


Chapter 1

Introduction

1.1

Introduction Overview

In this master's thesis, we explore a new method of performing Bayesian inference on a factor graph. It is the first algorithm of its kind able to perform inference on a continuously valued factor graph with sound bounds. At each stage of the algorithm's execution, an under-approximation and an over-approximation of the true distribution are computed, guaranteeing that the true distribution lies between these bounds.

1.2

Bayesian Inference

Bayesian inference is a set of techniques that allow one to model a phenomenon by updating the hypothesis as one acquires more observations [4]. It has found applications in various disciplines of science such as biology [6], geology [2], and physics [11].

Modeling a phenomenon in a Bayesian setting gives a systematic framework for robustly adjusting the hypothesis in light of both prior beliefs and additional observations.

Formally, Bayesian inference addresses the following question: given some prior beliefs about a hypothesis $x$, expressed as a prior probability $p(x)$, a likelihood function $p(y \mid x)$ modeling how likely one is to make an observation $y$ depending on the hypothesis $x$, and an actual observation $y$, we wish to compute the posterior probability $p(x \mid y)$. The posterior probability is interpreted as our updated beliefs about the hypothesis $x$, taking the observations into account. It can be obtained by applying Bayes' rule:

$$p(x \mid y) = \frac{p(y \mid x)\, p(x)}{p(y)} \tag{1.1}$$

We'll use the following example to guide our discussion: suppose we have a bag of 3 coins, with two fair coins and one unfair coin that has a 9/10 chance of showing a head. We grab a coin from the bag at random and flip it. If we observe the flip to be a tail, what is the probability that we have chosen a fair coin?

From the problem statement, we know the prior distribution is as follows:

$$p(\text{fair}) = \frac{2}{3}, \qquad p(\text{unfair}) = \frac{1}{3} \tag{1.2}$$

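And the likelihood as follows:

$$\begin{aligned} p(\text{tail} \mid \text{fair}) &= \tfrac{1}{2}, & p(\text{head} \mid \text{fair}) &= \tfrac{1}{2},\\ p(\text{tail} \mid \text{unfair}) &= \tfrac{1}{10}, & p(\text{head} \mid \text{unfair}) &= \tfrac{9}{10} \end{aligned} \tag{1.3}$$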

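We first compute $p(\text{tail})$, then apply Bayes' rule to obtain the solution:

$$\begin{aligned} p(\text{tail}) &= p(\text{tail} \mid \text{fair})\,p(\text{fair}) + p(\text{tail} \mid \text{unfair})\,p(\text{unfair}) = \tfrac{1}{2}\cdot\tfrac{2}{3} + \tfrac{1}{10}\cdot\tfrac{1}{3} = \tfrac{11}{30}\\ p(\text{fair} \mid \text{tail}) &= \frac{p(\text{tail} \mid \text{fair})\,p(\text{fair})}{p(\text{tail})} = \frac{1/3}{11/30} = \frac{10}{11} \end{aligned} \tag{1.4}$$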

Note that in computing the posterior probability, the quantity $p(\text{tail})$ acts as a normalization factor, which can be computed at the end. In practice, one often adopts the proportional version of Bayes' rule:

$$p(x \mid y) \propto p(y \mid x)\, p(x) \tag{1.5}$$

and leaves the normalization until the very end of the computation.
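The arithmetic in (1.4) can be checked mechanically. The short Python sketch below is illustrative only (the dictionary and function names are ours, not from any library or from the thesis); it multiplies prior and likelihood and normalizes only at the end, as the proportional form (1.5) suggests, using exact fractions so the result is exactly 10/11.

```python
from fractions import Fraction

# Prior over which coin was drawn: two fair coins and one unfair coin.
prior = {"fair": Fraction(2, 3), "unfair": Fraction(1, 3)}

# Likelihood of each flip outcome given the coin.
# The unfair coin shows a head with probability 9/10.
likelihood = {
    ("tail", "fair"): Fraction(1, 2),
    ("head", "fair"): Fraction(1, 2),
    ("tail", "unfair"): Fraction(1, 10),
    ("head", "unfair"): Fraction(9, 10),
}

def posterior(observation):
    """Proportional Bayes' rule (1.5): weight each hypothesis, normalize last."""
    unnormalized = {
        coin: likelihood[(observation, coin)] * p for coin, p in prior.items()
    }
    evidence = sum(unnormalized.values())  # this is p(observation)
    return {coin: w / evidence for coin, w in unnormalized.items()}, evidence

post, p_tail = posterior("tail")
print(p_tail)        # 11/30
print(post["fair"])  # 10/11
```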

1.3

Probabilistic Programming

Although one can always attempt to solve inference problems by hand, for larger and more complex instances it can be helpful to express the problems in a probabilistic programming language. A probabilistic programming language is a set of programming language constructs that allows the user to formally model probabilistic (and deterministic) relationships between objects of interest, and to make queries about certain aspects of the model [5]. A probabilistic programming language usually comes with an underlying algorithm, which solves for the query posed by the user.

Our example problem above may be expressed in a probabilistic programming

language as follows:

fair = Ber(2/3)

outcome = (if

(fair)

Ber (1/2)

e ls e

Ber (9/10)

end)

observe(outcome = false)

query-distribution(fair)

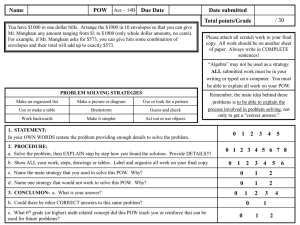

Figure 1-1: A code snippet on how one might express our example problem in a

probablistic programming language setting

Here, the function Ber models the Bernoulli distribution with the given probability of returning true, which we interpret as the coin landing on its head. The variable fair thus indicates whether we picked a fair coin, which happens with probability 2/3. The outcome of our experiment, outcome, depends on whether we have picked a fair coin. If we have chosen a fair coin, we get a head with probability 1/2, denoted by another Bernoulli distribution Ber(1/2), and if we have chosen the unfair coin, we get a head with probability 9/10. The observe function records that the outcome of our experiment is a tail, and by querying the probability distribution of fair, we can answer the question $\Pr(\text{fair} \mid \text{tail})$.

Once the problem is modeled, the probabilistic programming framework needs to solve for the query posed by the user. There are two main approaches to solving the query: the generative, sampling-based approach and the variational inference approach. We discuss these approaches briefly to place our techniques in context.

1.4

Related Works

1.4.1

Sampling

Conceptually, a sampling-based inference algorithm works by executing the program and collecting traces of the execution. For instance, by executing the program in our example, we might get the trace (fair = true, outcome = true). The traces are then filtered via rejection sampling, discarding the traces that contradict our observation. For instance, the trace (fair = false, outcome = true) will be discarded since it does not satisfy the observation that the outcome is a tail. The remaining traces are then counted to approximate the queried probability. In this setting, the more samples one draws, the closer the estimate is to the true probability. When the program becomes large, however, one must adopt a more efficient sampling algorithm than rejection sampling, such as MCMC, which moves from one valid sample state to another using a proposal distribution to avoid unnecessary rejections [1]. An MCMC algorithm provides a sample distribution that is guaranteed to converge to the desired distribution if the algorithm is executed for a sufficiently large number of iterations. A key challenge of the sampling approach is measuring quality: it is difficult to bound the closeness between the sample distribution and the true distribution at any given iteration of the algorithm, since convergence is only guaranteed in the limit.
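As a concrete illustration of rejection sampling, here is a minimal Python sketch of the coin program from Figure 1-1; the function names are hypothetical. Each call to run_program produces one trace, and traces showing a head are discarded to honor observe(outcome = false).

```python
import random

def run_program(rng):
    """One execution of the coin program, returning the trace (fair, outcome)."""
    fair = rng.random() < 2 / 3              # fair = Ber(2/3)
    p_head = 1 / 2 if fair else 9 / 10       # Ber(1/2) or Ber(9/10)
    outcome = rng.random() < p_head          # True means "head"
    return fair, outcome

def rejection_sample(num_samples, seed=0):
    """Estimate Pr(fair | tail) by discarding traces inconsistent with the observation."""
    rng = random.Random(seed)
    accepted = 0
    fair_count = 0
    for _ in range(num_samples):
        fair, outcome = run_program(rng)
        if outcome:          # observe(outcome = false): reject traces showing a head
            continue
        accepted += 1
        fair_count += fair   # bool counts as 0 or 1
    return fair_count / accepted, accepted

estimate, kept = rejection_sample(200_000)
print(estimate, kept)
```

With a fixed seed the estimate lands near 10/11 ≈ 0.909, but the output alone carries no bound on its error, which is exactly the limitation described above.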

1.4.2

Variational Inference

In contrast to the sampling approach, which approximates the queried distribution with samples, the variational inference approach first simplifies the distribution to a simpler form, then computes a locally optimal, symbolic solution for this simpler distribution [3]. In the variational setting, the form of the distributions is restricted, for instance to the exponential family with conjugate priors, and the posterior distribution is assumed to be factorizable. These assumptions allow for fast symbolic computation of the posterior distribution. In our example, we might approximate the Ber distribution with a beta distribution, and approximate the if expression with a mixture of Gaussians. The drawback of the variational method is thus that, while it computes the posterior quickly, it gives no guarantee on how close the computed distribution is to the true distribution.

1.4.3

Variable Elimination

In the case where the variables are discrete, one can perform the variable elimination algorithm, successively multiplying and summing out factors until only the variables of the query remain [12]. This algorithm can be extended to handle a large number of variables by approximating the multiplication step, integrating part of the variables away before the full product space is explored [7].

1.5

Our Approach

The starting point for our approach is the general variable elimination algorithm on factor graphs [12]. This algorithm works over discrete domains, summing away the variables that are not present in the queried distribution. We work with the continuous analog of this algorithm, replacing summation with integration. The main challenge of the continuous algorithm is that it requires computing integrals and function products, which may not have closed-form solutions. Instead of computing the exact integrals and products, our algorithm computes these operations on abstractions representing over-approximations and under-approximations of the true distributions. This allows the algorithm to compute sound upper and lower bounds on the probabilities of arbitrary events. The algorithm achieves precision by adaptively refining these abstractions only in the regions where additional precision is needed.

In contrast with sampling-based approaches such as MCMC, our algorithm provides strong guarantees on the probabilities of particular events, giving a sound lower and upper bound at each stage of its refinement toward better precision. In contrast with variational inference methods, our approach is non-parametric and provides these sound bounds without making assumptions about the form of the underlying distribution, a guarantee the variational method cannot offer.

In summary, we present the first algorithm capable of computing sound over- and under-approximations of distributions over multiple variables on a factor graph. We show that by adaptively refining these abstractions, the algorithm can focus resources on the regions of the distribution that need it most. As a consequence of providing sound bounds, our algorithm is computationally slow; however, it enables us to ask questions previously unanswerable by the sampling or variational approaches. We also demonstrate that our approach provides a flexible framework for extension by including user-defined distributions and constraints. We evaluate the algorithm on a number of micro-benchmarks designed to illustrate the different features of the algorithm, as well as two simple case studies: a model of bus arrival and a model of the Monty Hall problem.

Chapter 2

The Algorithm

2.1

Algorithm Overview

We now give a detailed description of our algorithm. We first formally describe what a factor graph is, and what kinds of factors are supported in our framework. We then describe the abstraction on the factors, assuming we can provide lower and upper bounds for the potential functions. Next, we describe the variable elimination algorithm on this abstraction, followed by our heuristics for refining the factors for higher precision. Lastly, we demonstrate how to provide sound lower and upper bounds for the factors.

2.2

Factor Graph

A factor graph is a representation of a probability distribution. Formally, a factor graph is a tuple $(X, F)$, where $X$ is the set of variables and $F$ is the set of factors over these variables. A variable $x \in X$ can take on values from a domain $\mathrm{dom}(x) \subseteq \mathbb{R}$; the domain $\mathrm{dom}(\{x_0, \ldots, x_k\})$ of a set of variables is the cartesian product of the variables' domains. A factor $f \in F$ has a set of contributing variables, denoted $var(f)$. A factor also has an associated potential function over its variables, which maps an assignment of these variables to a real number. Intuitively, the potential function relates a set of variables by giving a higher weight to events that are more likely to happen among these variables. For instance, one might use a factor to model the relationship between the occurrence of rain and the observation that the ground is wet, by returning a large value when both events happen and a small value when one event happens and the other does not. When the context makes it clear, we also denote the potential function by $f$. A factor graph is usually represented as a bipartite graph whose nodes are the variables and the factors, with an edge between a variable and each factor it contributes to.

A factor graph defines a joint probability distribution $p$ over $X$ as a product of its potential functions:

$$p(x_1, \ldots, x_n) = \frac{1}{Z} \prod_{f_j \in F} f_j(x_{j_1}, \ldots, x_{j_k}) \tag{2.1}$$

where $Z$ is the normalization constant and $x_{j_1}, \ldots, x_{j_k} \in var(f_j)$. A marginalized distribution over a subset $A = \{x_1, \ldots, x_r\} \subseteq X$ of the variables is given by integrating away the rest of the variables:

$$p(x_1, \ldots, x_r) = \int p(x_1, \ldots, x_n)\, dz \tag{2.2}$$

where $z$ ranges over the remaining variables $X \setminus A$.
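Definitions (2.1) and (2.2) can be made concrete on a toy two-variable graph. The Python sketch below is a brute-force grid approximation, not the algorithm of this chapter: it gives no guarantee on its error, which is precisely the gap the abstraction introduced later addresses. The variable names, domains, and potentials are invented for illustration.

```python
import math

# Toy factor graph (illustrative only): two variables on [0, 1] and three factors.
# Each factor is (scope, potential); the potential takes the scope's values in order.
variables = {"x1": (0.0, 1.0), "x2": (0.0, 1.0)}
factors = [
    (("x1",), lambda x1: 1.0),                                      # flat prior on x1
    (("x1", "x2"), lambda x1, x2: math.exp(-50 * (x2 - x1) ** 2)),  # x2 stays near x1
    (("x2",), lambda x2: 1.0 if x2 > 0.5 else 0.1),                 # soft evidence on x2
]

def unnormalized_joint(assignment):
    """Product of all potentials: Z times p(x1, ..., xn) in (2.1)."""
    result = 1.0
    for scope, potential in factors:
        result *= potential(*(assignment[v] for v in scope))
    return result

def marginal_x1(num_cells=200):
    """Midpoint-grid version of (2.2): integrate x2 away, then divide by Z."""
    (a1, b1), (a2, b2) = variables["x1"], variables["x2"]
    h1, h2 = (b1 - a1) / num_cells, (b2 - a2) / num_cells
    xs = [a1 + (i + 0.5) * h1 for i in range(num_cells)]
    ys = [a2 + (j + 0.5) * h2 for j in range(num_cells)]
    density = [h2 * sum(unnormalized_joint({"x1": x, "x2": y}) for y in ys) for x in xs]
    z = h1 * sum(density)                     # the normalization constant Z
    return xs, [d / z for d in density]

xs, p = marginal_x1()
```

Because the evidence factor favors x2 > 0.5 and x2 is tied to x1, the resulting marginal puts noticeably more mass near x1 = 0.9 than near x1 = 0.1.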

In this thesis, we focus on the following question: given a factor graph $(X, F)$ and a subset of the variables $A \subseteq X$, produce an approximation of the marginal distribution over the subset with sound bounds. Note that we do not mention conditional probability, because one can express conditional probability in a factor graph setting by adding new factors to the graph. The following example illustrates how an inference problem might be expressed this way.

Example: Waiting For Bus

Consider the problem of predicting the arrival time of a bus. Imagine a bus that comes once every hour within an unspecified 20-minute interval. Given an observation of the bus at the 10th minute of the hour, what is the distribution of the arrival time of the bus over the next hour? We can model this problem with a factor graph as follows:

    i_start = Var([0, 60])
    i_end = Var([0, 60])
    Factor(Plus(i_start, 20, i_end))
    Factor(Uniform(i_start, i_end, 10))
    next_bus = Var([0, 60])
    Factor(Uniform(i_start, i_end, next_bus))

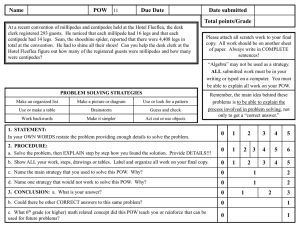

Figure 2-1: Visualizing the factor graph of the bus problem

The factor graph generated by this program is shown in Figure 2-1. In our example, the variables are instantiated with the Var keyword and an interval domain, and the factors are instantiated with the Factor keyword and the potential function to be associated with the factor. The Plus factor constrains the start time to precede the end time by 20 minutes; this effectively gives assignments of i_start and i_end a higher weight if they differ by 20. The observation of the first bus, which came at minute 10, is modeled by adding an additional Uniform factor with 10 as the output. Also note that our framework only handles continuous distributions; discrete distributions can be approximated by their continuous analogs.

To predict the arrival time of the next bus, we ask for the distribution of next_bus. Note that in this particular example we ask for the marginal distribution of a single variable, but in general our framework can solve for the joint distribution of multiple variables.

2.2.1

Factors

We now formally describe the set of factors that are supported in our algorithm. Recall that a factor in a factor graph is simply a function mapping a set of (real-valued) assignments to a non-negative value. There are two kinds of factors that we support:

* factors that express distributions
* factors that express constraints

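Our framework supports several distribution potentials; we list a few here.

$$\mathrm{Uniform}(a, b, x) = \begin{cases} \dfrac{1}{b - a} & \text{if } (a < x < b) \wedge (b - a > \epsilon) \\ 0 & \text{otherwise} \end{cases}$$

$$\mathrm{Gauss}(\mu, \sigma, x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{(x - \mu)^2}{2\sigma^2}}$$

Note that these distributions are no different from their usual definitions, and we can add additional distributions easily.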
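The two distribution potentials above translate directly into code. A minimal Python sketch follows; the default value of ε is our assumption, since the text leaves it unspecified. The ε guard presumably keeps Uniform bounded when a and b are themselves variables (as in the bus example) that may come arbitrarily close.

```python
import math

def uniform(a, b, x, eps=1e-6):
    """Uniform(a, b, x): 1/(b - a) inside (a, b) when b - a > eps, else 0.

    eps = 1e-6 is an assumed default; the text does not fix a value.
    """
    if a < x < b and (b - a) > eps:
        return 1.0 / (b - a)
    return 0.0

def gauss(mu, sigma, x):
    """Gauss(mu, sigma, x): the usual normal density."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

# In the bus example, a and b are themselves variables (i_start, i_end).
print(uniform(0, 20, 10))   # 0.05
```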

Factors whose potential functions describe constraints require more effort to define. Factors such as equalities or additions do not naturally define a potential function; rather, they impose a constraint on the values attainable by the set of variables. One can take a boolean approach to defining their potential functions. For instance, let plus(y, x_1, x_2) denote the constraint that y is the sum of x_1 and x_2; one might define the potential function for plus as follows:

$$\mathrm{Plus}(y, x_1, x_2) = \begin{cases} 1 & \text{if } y = x_1 + x_2 \\ 0 & \text{otherwise} \end{cases} \tag{2.3}$$

However, a boolean potential such as the one defined above is nonzero only on a set of measure 0 in the domain over which it is defined, which is harmful since we employ a numerical approach to bounding the potential functions. Therefore, we adopt a relaxed notion of constraint, based on a distance function.

A distance function $d_c$ for a constraint $c$ measures the Euclidean distance between an arbitrary point $x \in \mathrm{dom}(f)$ and the set of points that satisfy the constraint:

$$d_c(x) = \min_y \{\, \mathrm{norm}(x - y) \mid y \text{ satisfies } c \,\} \tag{2.4}$$

Here, $\mathrm{norm}(x - y)$ is the Euclidean distance between the point $x$ and the point $y$.

The distance function for an arbitrary constraint can be found with the method of Lagrange multipliers, where the objective function is the distance between a point $y$ and our input point $x$, and the constraint function restricts $y$ to satisfy the constraint. In many cases, the satisfying set $\{y \mid y \text{ satisfies } c\}$ of a constraint $c$ can be decomposed into finitely many simpler shapes, and the distance function is then the minimum of the distances from the point $x$ to each of these shapes. We list several distance functions below; the full list is available in the appendix. Note that when the point $x$ lies in the satisfying set, the distance is 0.

$$d_{plus}(y, x_1, x_2) = \sqrt{\frac{(y - x_1 - x_2)^2}{3}} \tag{2.5}$$

$$d_{equal}(y, x) = \sqrt{\frac{(y - x)^2}{2}} \tag{2.6}$$

With the distance function defined, we define the potential function of a constraint as follows:

$$f_c(x) = \mathrm{Gauss}(\mu = 0, \sigma, d_c(x)) \tag{2.7}$$

By mapping the distance $d_c$ of a constraint through a Gaussian, we guarantee that the potential function is at its highest when the constraint is satisfied. When the constraint is unsatisfied, instead of setting the potential to 0, we measure "how far" the point is from satisfying it and gradually decay the potential according to that distance.
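The closed forms (2.5) and (2.6) are point-to-plane and point-to-line distances, and (2.7) wraps them in a zero-mean Gaussian. The Python sketch below implements them and checks d_plus by projecting a point onto the plane y = x_1 + x_2 along its normal (1, -1, -1). The value σ = 0.1 is an illustrative assumption; the text treats σ as a parameter.

```python
import math

def d_plus(y, x1, x2):
    """(2.5): distance from (y, x1, x2) to the plane y = x1 + x2."""
    return math.sqrt((y - x1 - x2) ** 2 / 3)

def d_equal(y, x):
    """(2.6): distance from (y, x) to the line y = x."""
    return math.sqrt((y - x) ** 2 / 2)

def constraint_potential(distance, sigma=0.1):
    """(2.7): zero-mean Gaussian of the distance; sigma = 0.1 is an assumed value."""
    return math.exp(-distance ** 2 / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

# Check d_plus: project p onto the plane along its normal n = (1, -1, -1).
p = (1.0, 0.0, 0.0)
n = (1.0, -1.0, -1.0)
t = (p[0] - p[1] - p[2]) / 3.0                  # (n . p) / |n|^2
q = tuple(pi - t * ni for pi, ni in zip(p, n))  # closest point on the plane
print(q, math.dist(p, q), d_plus(*p))
```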

We would like to remark that the choice of Euclidean distance is not incidental, as it enables us to compute sound bounds for the potential functions, which we discuss in a later section. If a user wishes to extend the set of constraint potentials without requiring sound bounds, settling instead for approximate bounds, one may supply distance functions measured in other norms, as long as the distance is 0 when the constraint is satisfied.

2.3

Factor Abstraction

2.3.1

Distribution Abstraction

As stated above, our goal is to compute a marginal distribution, call it $g$, over a subset $A \subseteq X$. In general, this problem has no closed-form solution, so the common approach is either to find an approximate distribution $g^*$ that is a member of a parameterized family of functions (such as the exponential family) or to derive a set of samples through a Monte Carlo simulation. A problem with both approaches is that, while they may work well in practice, they cannot provide hard bounds on the probability of a specific event.

To compute a set of hard bounds, our method derives an abstraction of the desired distribution $g$. The abstraction $\hat{g}$ stands for a set of (unnormalized) distributions that is guaranteed to contain the exact distribution, and is bounded by $g_{min}$ and $g_{max}$, where:

$$\hat{g} = \{\, h \mid \forall x \in \mathrm{dom}(g),\ g_{min}(x) \le h(x) \le g_{max}(x) \,\}$$

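Given this abstraction, we can bound the probability of an event $E$ by first defining two functions:

$$g_{E,lower}(x) = \begin{cases} g_{min}(x) & \text{if } x \in E \\ g_{max}(x) & \text{otherwise} \end{cases} \qquad g_{E,upper}(x) = \begin{cases} g_{max}(x) & \text{if } x \in E \\ g_{min}(x) & \text{otherwise} \end{cases}$$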

One can think of these functions as the most conservative distributions in $\hat{g}$ for bounding the probability of $E$. For instance, the function $g_{E,lower}$ is used to compute the lower bound: it assumes the smallest density values over $E$ and the largest density values outside of $E$. The bound is given as:

$$\frac{\int_{x \in E} g_{E,lower}(x)\,dx}{\int_{x} g_{E,lower}(x)\,dx} \le \Pr(E) \le \frac{\int_{x \in E} g_{E,upper}(x)\,dx}{\int_{x} g_{E,upper}(x)\,dx}$$

A nice property to note is that, given $\hat{g}$ and two events $E_1$ and $E_2$ such that $E_1 \subseteq E_2$, $\mathrm{lowerbound}(\Pr(E_1)) \le \mathrm{lowerbound}(\Pr(E_2))$ and $\mathrm{upperbound}(\Pr(E_1)) \le \mathrm{upperbound}(\Pr(E_2))$.
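The bound on Pr(E) can be evaluated numerically for a one-dimensional abstraction. The Python sketch below uses a plain midpoint rule, which is our simplification: the thesis integrates piecewise polynomials in closed form, and a numerical rule is not itself sound. Here g_min and g_max bracket a uniform density on [0, 1] to within ±10%, and E is an interval; all names are hypothetical.

```python
def integrate(fn, a, b, n=1000):
    """Midpoint-rule approximation of the integral of fn over [a, b]."""
    h = (b - a) / n
    return h * sum(fn(a + (i + 0.5) * h) for i in range(n))

def event_bounds(g_min, g_max, in_event, a, b):
    """Bounds on Pr(E) valid for every h with g_min <= h <= g_max on [a, b]."""
    def g_lower(x):  # smallest density on E, largest outside E
        return g_min(x) if in_event(x) else g_max(x)

    def g_upper(x):  # largest density on E, smallest outside E
        return g_max(x) if in_event(x) else g_min(x)

    def ratio(g):
        inside = integrate(lambda x: g(x) if in_event(x) else 0.0, a, b)
        return inside / integrate(g, a, b)

    return ratio(g_lower), ratio(g_upper)

# A uniform density on [0, 1] known only to within +/-10%.
def g_min(x):
    return 0.9

def g_max(x):
    return 1.1

lo, hi = event_bounds(g_min, g_max, lambda x: x <= 0.5, 0.0, 1.0)
lo_small, hi_small = event_bounds(g_min, g_max, lambda x: x <= 0.25, 0.0, 1.0)
```

For E = [0, 0.5] the bounds come out to 0.45 and 0.55, bracketing the true value 0.5, and shrinking E to [0, 0.25] lowers both bounds, as the monotonicity property predicts.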

It remains to find a computationally tractable way of expressing $g_{min}$ and $g_{max}$; in our framework, they are given as piecewise polynomials. We would like to remark, however, that any class of functions with closed-form multiplication and integration would serve just as well.

We now formally define how the abstraction is implemented in our algorithm, starting with the basic construct of a Patch and moving up to an abstraction over a factor called the Abstract Factor.

2.3.2

Patch

A Patch of a factor $f$ is used to define the piecewise polynomial. It is a triple $(d, p_l, p_u)$, where $d$ is the domain of the patch, and $p_l$ and $p_u$ are lower and upper bound polynomials, respectively, such that for any $x \in d$, $p_l(x) \le f(x) \le p_u(x)$.

A domain $d$ of a patch is a cartesian product of intervals $d = I_1 \times \cdots \times I_n$, where $I_i \subseteq \mathrm{dom}(x_i)\ \forall x_i \in X$. For a particular interval $I_j$, if $x_j \notin var(f)$, then we insist that $I_j = \mathrm{dom}(x_j)$. By defining the domains over all the variables instead of only the variables that contribute to the factor $f$, we can more easily express intersections of domains between abstractions of different factors.

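Intersections of domains are defined by the usual cartesian intersection:

$$d_1 \cap d_2 = (I^1_1 \cap I^2_1) \times \cdots \times (I^1_n \cap I^2_n)$$

We say $patch_i$ is a subset of $patch_j$ if

$$patch_i \subseteq patch_j \iff \mathrm{dom}(patch_i) \cap \mathrm{dom}(patch_j) = \mathrm{dom}(patch_i)$$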

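A set of patches can form a partition for the factor $f$:

$$\mathrm{partition}(\{patch_1, \ldots, patch_n\}) \iff \Big(\bigcup_i \mathrm{dom}(patch_i) = \mathrm{dom}(f)\Big) \wedge \big(\forall i \ne j,\ \mathrm{dom}(patch_i) \cap \mathrm{dom}(patch_j) = \emptyset\big)$$

$P_f$ is the Patch Set of a factor $f$; it is a set of patches that has the following properties:

* there exists a patch in $P_f$ that covers the entire domain of $f$:

$$\exists\, patch \in P_f : \mathrm{dom}(f) = \mathrm{dom}(patch) \tag{2.8}$$

* any patch in $P_f$ belongs to a partition of $f$ made of patches in $P_f$:

$$\forall\, patch_i \in P_f\ \exists\, X \subseteq P_f \text{ s.t. } patch_i \in X \wedge \mathrm{partition}(X) \tag{2.9}$$

A finest partition $part^*$ is a partition of factor $f$ in which no patch contains a smaller patch in $P_f$:

$$part^* = \{\, patch_i \in P_f \mid \forall\, patch_j \in P_f,\ patch_j \ne patch_i \Rightarrow patch_j \not\subseteq patch_i \,\} \tag{2.10}$$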

We now give an example illustrating a few of the definitions above, identifying each patch with its domain. Let $f$ be a factor that covers the domain [0, 16] × [0, 16], and let $P_f$, the patch set of $f$, be {[0, 16] × [0, 16], [0, 16] × [0, 8], [0, 16] × [8, 16], [0, 8] × [0, 8], [8, 16] × [0, 8]}. Then the finest partition is $part^*$ = {[0, 16] × [8, 16], [0, 8] × [0, 8], [8, 16] × [0, 8]}.


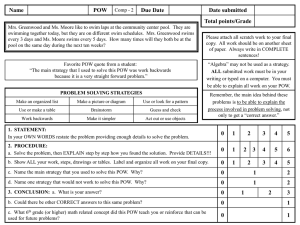

Figure 2-2: An example of a partition set Pf and the finest partition part*
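The domain operations and the finest partition (2.10) are easy to express once each patch is reduced to its domain. The Python sketch below omits the bounding polynomials and treats every interval as closed; the Patch class and the function names are hypothetical. Running it on the example patch set recovers the three patches of part* listed above.

```python
from dataclasses import dataclass

Interval = tuple[float, float]          # closed interval [lo, hi]

@dataclass(frozen=True)
class Patch:
    """Only the domain of a patch; the bounding polynomials are omitted here."""
    domain: tuple[Interval, ...]        # one interval per variable

    def intersect(self, other):
        """Cartesian intersection, interval by interval; None if it is empty."""
        parts = []
        for (a1, b1), (a2, b2) in zip(self.domain, other.domain):
            lo, hi = max(a1, a2), min(b1, b2)
            if lo > hi:
                return None
            parts.append((lo, hi))
        return tuple(parts)

    def subset_of(self, other):
        """self is a subset of other iff dom(self) & dom(other) == dom(self)."""
        return self.intersect(other) == self.domain

def finest_partition(patch_set):
    """(2.10): keep each patch that contains no other patch of the set."""
    return [p for p in patch_set
            if not any(q != p and q.subset_of(p) for q in patch_set)]

P_f = [
    Patch(((0, 16), (0, 16))),
    Patch(((0, 16), (0, 8))),
    Patch(((0, 16), (8, 16))),
    Patch(((0, 8), (0, 8))),
    Patch(((8, 16), (0, 8))),
]
part_star = finest_partition(P_f)
for patch in part_star:
    print(patch.domain)
```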

We can now give the definitions of $g_{min}$ and $g_{max}$ precisely as follows:

$$g_{min}(x) = patch.p_l(x) \text{ s.t. } x \in \mathrm{dom}(patch) \wedge patch \in part^* \tag{2.11}$$

$$g_{max}(x) = patch.p_u(x) \text{ s.t. } x \in \mathrm{dom}(patch) \wedge patch \in part^* \tag{2.12}$$

2.3.3

Abstract Factor

An Abstract Factor $G_f$ consists of a patch set $P_f$ of a factor $f$, organized as a binary space partition (BSP). The patches in the patch set $P_f$ make up the BSP as follows:

    BSP = Leaf(patch)
        | Branch(svar, patch, BSP, BSP)

Here, svar denotes the variable that splits the domain of the branch.

An example of an abstract factor is given below, using the $P_f$ defined in the previous section:

Figure 2-3: The patch set Pf organized as a BSP to form the abstract factor Gf

Organizing the patch set into a BSP makes several subroutines of our algorithm possible, such as the computation of integrals. It also enables efficient lookup of patches. However, we will still refer to the patch set $P_f$ directly whenever the explanation is more concise.
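To show how a BSP supports lookups of g_min and g_max as in (2.11) and (2.12), here is a Python sketch built on the example tree of Figure 2-3. It is an illustration under assumptions: the class names mirror the BSP grammar, each branch tests only the interval of its split variable svar, the bounding polynomials are replaced by constants for brevity, and the leaves are taken to be exactly the finest partition part*, as they are in the example.

```python
from dataclasses import dataclass
from typing import Callable, Union

@dataclass
class Patch:
    domain: tuple        # ((lo, hi), ...): one closed interval per variable
    p_l: Callable        # lower-bound polynomial (a plain callable here)
    p_u: Callable        # upper-bound polynomial

@dataclass
class Leaf:
    patch: Patch

@dataclass
class Branch:
    svar: int            # index of the variable whose interval this branch splits
    patch: Patch         # the coarser patch covering both children
    left: "BSP"
    right: "BSP"

BSP = Union[Leaf, Branch]

def finest_patch(bsp, x):
    """Walk down the tree, testing only coordinate svar at each branch."""
    while isinstance(bsp, Branch):
        lo, hi = bsp.left.patch.domain[bsp.svar]
        bsp = bsp.left if lo <= x[bsp.svar] <= hi else bsp.right
    return bsp.patch

def g_min(bsp, x):
    return finest_patch(bsp, x).p_l(x)   # (2.11)

def g_max(bsp, x):
    return finest_patch(bsp, x).p_u(x)   # (2.12)

def const(c):
    return lambda x: c

# The example tree: split [0,16]x[0,16] on y, then split the lower half on x.
root = Branch(
    svar=1,
    patch=Patch(((0, 16), (0, 16)), const(0.0), const(1.0)),
    left=Branch(
        svar=0,
        patch=Patch(((0, 16), (0, 8)), const(0.1), const(0.8)),
        left=Leaf(Patch(((0, 8), (0, 8)), const(0.2), const(0.3))),
        right=Leaf(Patch(((8, 16), (0, 8)), const(0.4), const(0.6))),
    ),
    right=Leaf(Patch(((0, 16), (8, 16)), const(0.5), const(0.7))),
)
```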

2.4

Operations on Factors

2.4.1

Variable Elimination

One way of solving for the marginal distribution is the variable elimination (VE) algorithm [12]. Given a factor graph $(X, F)$ and a subset of the variables $A \subseteq X$, the VE algorithm successively picks variables $x' \in X \setminus A$ and marginalizes them away until only the variables in $A$ remain. The algorithm is given below in its recursive formulation:

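$$
\begin{aligned}
&\mathrm{VE}(X, F, A): && (2.13)\\
&\quad \textbf{if } X \setminus A = \emptyset: && (2.14)\\
&\qquad \prod_{f \in F} f && (2.15)\\
&\quad \textbf{else}: && (2.16)\\
&\qquad \textbf{let } x' \in X \setminus A && (2.17)\\
&\qquad F_{x'} = \{\, f \in F \mid x' \in var(f) \,\} && (2.18)\\
&\qquad f_{x'} = \prod_{f \in F_{x'}} f && (2.19)\\
&\qquad f_{\setminus x'} = \int f_{x'}\, dx' && (2.20)\\
&\qquad X' = X \setminus \{x'\} && (2.21)\\
&\qquad F' = (F \setminus F_{x'}) \cup \{ f_{\setminus x'} \} && (2.22)\\
&\qquad \mathrm{VE}(X', F', A) && (2.23)
\end{aligned}
$$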
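The recursion (2.13) to (2.23) is easiest to see in its original discrete form, where the integral in (2.20) becomes a sum. The Python sketch below is that discrete analog, not the abstract-factor version developed in this chapter; factors are (scope, table) pairs, and all names are hypothetical. Applied to the coin example of Chapter 1, with the observed tail encoded as an evidence factor, it reproduces Pr(fair | tail) = 10/11.

```python
from fractions import Fraction
from itertools import product

IDENTITY = ((), {(): 1})   # the empty product: no variables, weight 1

def multiply(f, g, domains):
    """Pointwise product of two discrete factors over the union of their scopes."""
    scope = tuple(dict.fromkeys(f[0] + g[0]))       # union, order preserved
    table = {}
    for values in product(*(domains[v] for v in scope)):
        assign = dict(zip(scope, values))
        table[values] = (f[1][tuple(assign[v] for v in f[0])]
                         * g[1][tuple(assign[v] for v in g[0])])
    return scope, table

def sum_out(f, var):
    """Discrete counterpart of (2.20): sum the factor over every value of var."""
    scope, table = f
    i = scope.index(var)
    out = {}
    for values, w in table.items():
        key = values[:i] + values[i + 1:]
        out[key] = out.get(key, 0) + w
    return scope[:i] + scope[i + 1:], out

def variable_elimination(variables, factors, query, domains):
    remaining = [x for x in variables if x not in query]
    if not remaining:                                 # (2.14)-(2.15)
        result = IDENTITY
        for f in factors:
            result = multiply(result, f, domains)
        return result
    x = remaining[0]                                  # (2.17)
    touching = [f for f in factors if x in f[0]]      # (2.18)
    rest = [f for f in factors if x not in f[0]]
    new_vars = [v for v in variables if v != x]       # (2.21)
    if not touching:
        # Summing out an unused x multiplies every entry by |dom(x)|,
        # a constant that normalization removes, so just drop x.
        return variable_elimination(new_vars, rest, query, domains)
    f_x = IDENTITY
    for f in touching:                                # (2.19)
        f_x = multiply(f_x, f, domains)
    # (2.20), (2.22), (2.23)
    return variable_elimination(new_vars, rest + [sum_out(f_x, x)], query, domains)

# The coin example: prior, likelihood, and an evidence factor for "tail".
domains = {"fair": (True, False), "outcome": ("head", "tail")}
prior = (("fair",), {(True,): Fraction(2, 3), (False,): Fraction(1, 3)})
likelihood = (("fair", "outcome"), {
    (True, "head"): Fraction(1, 2), (True, "tail"): Fraction(1, 2),
    (False, "head"): Fraction(9, 10), (False, "tail"): Fraction(1, 10),
})
evidence = (("outcome",), {("head",): 0, ("tail",): 1})

scope, table = variable_elimination(
    ["fair", "outcome"], [prior, likelihood, evidence], {"fair"}, domains)
z = sum(table.values())
posterior = {key[0]: w / z for key, w in table.items()}
```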

However, for a set of arbitrary potential functions, the integration operator may not have a closed-form solution. As stated before, one of the main contributions of our approach is to use abstractions to represent the factors, and we now show how one can perform the VE algorithm over the abstract factors. To do so, we must be able to:

* convert each of the original factors $f \in F$ to an abstract factor $G_f$
* compute the pairwise multiplication of abstract factors $G_{f_1} \times G_{f_2}$
* compute the integration of an abstract factor, $\int_y G_f\, dy$

Once the above objectives are met, the VE algorithm iteratively reduces the set of original abstract factors, creating intermediate abstract factors along the way, until only a single abstract factor, $G_{result}$, remains. This abstract factor is the output of the VE algorithm.

Essentially, computing an abstract factor $G_f$ amounts to computing the patches in its patch set $P_f$. Once the set of patches is obtained, the patches can be organized, with some bookkeeping, into the BSP that makes up the abstract factor. Therefore, we focus on the computation of a single patch for the remainder of this section.

2.4.2

Patch for the original factors

The computation of patches for the original factors will be thoroughly examined in a later section. For the time being, we assume that, given a domain $d$, we can always find an appropriate lower bound polynomial $p_l$ and upper bound polynomial $p_u$ that correctly bound the original potential function over $d$.

2.4.3

Patch for multiplication

To compute a patch for a multiplication, the algorithm is given as input two abstract factors $G_{f_1}$ and $G_{f_2}$, along with a domain $d$, which it uses to compute the appropriate lower and upper bounds $p_l$ and $p_u$ over $d$.

Let us define the smallest cover $c^*$ of a domain $d$ in a patch set $P_f$ as follows:

$$c^* = \operatorname{argmin}_{patch} \{\, \mathrm{volume}(patch) \mid patch \in P_f,\ d \subseteq \mathrm{domain}(patch) \,\} \tag{2.24}$$

Here, $volume(patch)$ is the volume of the patch's domain, obtained by multiplying the lengths of all its intervals. The intuition behind the smallest cover is that we want the most precise patches in $G_{f_1}$ and $G_{f_2}$ from which to derive our bounds, and a small patch has higher precision than a large one. The requirement $d \subseteq domain(c^*)$ is important because multiplying piecewise polynomial bounds together does not yield a single polynomial bound over $d$. In our implementation, the smallest cover is found by searching the BSP of each factor, which gives a faster lookup.
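Suppose every BSP node stores the patch for its own domain. Then the smallest cover of $d$ can be found by descending from the root while a child still contains $d$, stopping at the deepest such node. This is a minimal sketch under two assumptions: domains are hypothetical `(low, high)`-interval tuples, and a patch is represented here only by its domain.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Domain = Tuple[Tuple[float, float], ...]


@dataclass
class Node:
    domain: Domain                  # domain of the patch stored at this node
    left: Optional["Node"] = None   # children halve `domain`; None for a leaf
    right: Optional["Node"] = None


def contains(outer: Domain, inner: Domain) -> bool:
    """True if box `inner` lies inside box `outer`."""
    return all(o_lo <= i_lo and i_hi <= o_hi
               for (o_lo, o_hi), (i_lo, i_hi) in zip(outer, inner))


def volume(dom: Domain) -> float:
    """Product of the interval lengths."""
    v = 1.0
    for lo, hi in dom:
        v *= hi - lo
    return v


def smallest_cover(root: Node, d: Domain) -> Optional[Node]:
    """Deepest node whose domain contains d, i.e. the containing patch of least volume."""
    if not contains(root.domain, d):
        return None
    node = root
    while node.left is not None:
        if contains(node.left.domain, d):
            node = node.left
        elif contains(node.right.domain, d):
            node = node.right
        else:
            break  # d straddles the split: no smaller patch contains it
    return node
```

Because children are nested inside their parent, the containing patches form a single root-to-node chain. The deepest one on that chain is the one of least volume.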

Let $c^{*1}$ be the smallest cover of $d$ in the patch set $P_{f_1}$ of $G_{f_1}$, and let $c^{*2}$ be the smallest cover of $d$ in the patch set $P_{f_2}$ of $G_{f_2}$. Then, we define the lower and upper bounds for the domain $d$ as follows:

$$p_l = c^{*1}.p_l \times c^{*2}.p_l, \qquad p_u = c^{*1}.p_u \times c^{*2}.p_u$$

Since the product of two polynomials is again a polynomial, $p_l$ and $p_u$ have the required form. They are valid lower and upper bounds for the potential function over $d$ because multiplication is monotone on non-negative values: if $0 \le a_l \le a \le a_u$ and $0 \le b_l \le b \le b_u$, then $a_l b_l \le a b \le a_u b_u$. Note that the bound only holds when the polynomials are non-negative over $d$, which we rely on because a distribution function can never be negative. Figure 2-4 illustrates the computation of the upper bound $p_u$ in multiplication.
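The multiplication rule can be checked numerically for one-dimensional patches. The sketch uses NumPy's `Polynomial` class as a stand-in, since the thesis does not specify its polynomial representation, and the example functions are illustrative. Multiplying the two lower bounds and the two upper bounds gives polynomial bounds on the product, provided the lower bounds are non-negative on $d$.

```python
import numpy as np
from numpy.polynomial import Polynomial as P


def multiply_patch_bounds(c1_l, c1_u, c2_l, c2_u):
    """Bounds for f1 * f2 given c1_l <= f1 <= c1_u and c2_l <= f2 <= c2_u.

    Valid only where all four polynomials are non-negative on the domain."""
    return c1_l * c2_l, c1_u * c2_u


# f1(x) = 1 + x and f2(x) = 2 - x on d = [0, 1], each bracketed by constant shifts
f1, f2 = P([1.0, 1.0]), P([2.0, -1.0])
p_l, p_u = multiply_patch_bounds(f1 - 0.5, f1 + 0.5, f2 - 0.5, f2 + 0.5)

xs = np.linspace(0.0, 1.0, 101)
prod = f1(xs) * f2(xs)
assert np.all(p_l(xs) <= prod) and np.all(prod <= p_u(xs))

# with negative lower bounds the rule breaks: (-1) * (-1) = 1 > 0.1 * 0.1
bad_l, _ = multiply_patch_bounds(P([-1.0]), P([1.0]), P([-1.0]), P([1.0]))
assert bad_l(0.0) > 0.1 * 0.1
```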

2.4.4

Patch for integration

To compute a patch for integration, the algorithm is given as input an abstract factor $G_f$ along with a variable $y$ that we would like to integrate over. For convenience, we use $y_a(d)$ and $y_b(d)$ to denote the lower and upper ends of the interval corresponding to the variable $y$ in a domain $d$.

[Figure 2-4 panels: G1, G2, and G1 × G2, with the domain d marked]

Figure 2-4: The 1-dimensional abstract factors G1 and G2 are being multiplied. The upper bound for the domain d is computed by multiplying together the polynomials from the smallest covers of d in each of the abstract factors.

Unlike multiplication, there may be many patches in $G_f$ that intersect the domain $d$. Integration reduces the dimension of the factor by 1, so $d$ places no constraint on $y$ and may intersect many patches stacked along the $y$ direction. As in multiplication, however, we would like to find the smallest cover of $d$ within $G_f$, which is now a set of patches. The algorithm for finding the smallest cover uses the BSP data structure and is given below:

smallestCover(BSP, d, y)
    if BSP == Leaf(patch)
        return {patch}
    else if BSP.split_var == y
        return union(smallestCover(BSP.left, d, y),
                     smallestCover(BSP.right, d, y))
    else if contains(BSP.left.patch, d)
        return smallestCover(BSP.left, d, y)
    else if contains(BSP.right.patch, d)
        return smallestCover(BSP.right, d, y)
    else
        return {BSP.patch}
    end
end
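A runnable Python transcription of smallestCover rests on three assumptions of this sketch. Variables are indexed by position. Every BSP node stores its own domain, which stands in for its patch. The domain $d$ is given in $G_f$'s coordinates with a placeholder interval for $y$, which the hypothetical helper `contains_except` ignores.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Domain = Tuple[Tuple[float, float], ...]  # one (low, high) per variable of G_f


@dataclass
class Node:
    domain: Domain                  # domain of the patch stored at this node
    split_var: Optional[int] = None  # None marks a leaf
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def contains_except(outer: Domain, inner: Domain, y: int) -> bool:
    """Containment in every dimension except the integrated variable y."""
    return all(o[0] <= i[0] and i[1] <= o[1]
               for k, (o, i) in enumerate(zip(outer, inner)) if k != y)


def smallest_cover(node: Node, d: Domain, y: int) -> List[Node]:
    if node.split_var is None:            # leaf: its patch is part of the cover
        return [node]
    if node.split_var == y:               # both halves intersect d along y
        return smallest_cover(node.left, d, y) + smallest_cover(node.right, d, y)
    if contains_except(node.left.domain, d, y):
        return smallest_cover(node.left, d, y)
    if contains_except(node.right.domain, d, y):
        return smallest_cover(node.right, d, y)
    return [node]                         # d straddles the split: keep this patch
```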

Notice that when the split variable differs from the variable we are integrating over, we can use it to filter which side of the BSP to explore further. This is a useful property of the BSP. The domain $d$ ranges over the same variables as $G_f$ except $y$, and the BSP partitions the space only by halving a domain. So when the split variable is not $y$, $d$ is contained in at most one of the two branches; it is contained in neither when it straddles the split. This property lets us find the smallest cover of $d$ in $G_f$: if one branch contains $d$, go down that branch; if neither branch contains $d$, return the current patch without subdividing it. Figure 2-5 illustrates a smallestCover for $d$ computed in the integration step.

[Figure 2-5 panels: G1 and inte(G1, y), with the domain d marked]

Figure 2-5: The 2-dimensional abstract factor G1 is integrated. The patches of G1 that intersect a domain d are drawn; for brevity, these patches do not necessarily come from a BSP.

Let $C^*$ be the smallest cover of $d$ in $G_f$, consisting of patches of $G_f$. We define the lower and upper bounds for $d$ as follows:

$$p_l = \sum_{patch \in C^*} \int_{y_a(patch.d)}^{y_b(patch.d)} patch.p_l \, dy, \qquad p_u = \sum_{patch \in C^*} \int_{y_a(patch.d)}^{y_b(patch.d)} patch.p_u \, dy$$

Inequalities are preserved under integration, and the patches in $C^*$ split the range of $y$ into consecutive intervals. Hence the new bounds $p_l$ and $p_u$ are again valid lower and upper bounds for the true potential function over the domain $d$.
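For two-variable patches, the integration formula can be evaluated with NumPy's 2-D power-series coefficient arrays, where `c[i, j]` multiplies `x**i * y**j`. This representation, and the helper names, are assumptions of the sketch. Each patch's bound is integrated over that patch's own y-interval, and the resulting polynomials in x are summed.

```python
from functools import reduce

import numpy as np
from numpy.polynomial import polynomial as npoly


def integrate_out_y(coeffs, y_a, y_b):
    """x-coefficients of  integral_{y_a}^{y_b} p(x, y) dy,  where p = sum c[i, j] x**i y**j."""
    antideriv = npoly.polyint(np.asarray(coeffs, dtype=float), axis=1)  # integrate along y
    # polyval evaluates along the first axis, so transpose to put y first
    return npoly.polyval(y_b, antideriv.T) - npoly.polyval(y_a, antideriv.T)


def integrate_cover(cover):
    """cover: one (p_l coeffs, p_u coeffs, y_a, y_b) tuple per patch in C*.

    Returns the x-coefficients of the new bounds p_l and p_u."""
    p_l = reduce(npoly.polyadd, [integrate_out_y(pl, ya, yb) for pl, _, ya, yb in cover])
    p_u = reduce(npoly.polyadd, [integrate_out_y(pu, ya, yb) for _, pu, ya, yb in cover])
    return p_l, p_u
```

`polyadd` is used instead of `+` so that patches whose bounds have different degrees in x can still be summed.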

2.5

Refinement Heuristic

Up to this point, we have shown that one can compute the lower and upper polynomial bounds of a patch over a domain $d$, and that the resulting patches can then be organized into the BSP of an abstract factor $G$. However, the task of choosing a good set of patches remains. A uniform coverage of patches is inefficient: in regions where the lower bound is already close to the upper bound, there is no reason to partition the patches further for better precision. To partition the domain into patches intelligently, we need a heuristic function that decides, at each iteration of our refinement step, which patch should be split into smaller patches. We begin by defining a measure of the error of a factor and explain why some simple heuristics are inadequate. We then define a measure of the imprecision of a patch and describe a heuristic that selects the patch based on a ranking of the imprecisions of all patches. We finish this section by noting several useful properties of this heuristic and the optimization opportunities they enable.

2.5.1

Measurement of Error

To obtain a heuristic, we must first define an appropriate cost function. We define the error of an abstract factor $G$ as follows:

$$Err(G) = \sum_{patch \in part^*} volume(patch) \qquad (2.25)$$

Here, $part^*$ refers to the finest partition of an abstract factor $G$, and the volume of a patch is computed by integrating the difference function $p_u - p_l$ over the entire domain of the patch. Note that this differs from the geometric volume used to define the smallest cover in Section 2.4.3.
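For one-dimensional patches, Equation 2.25 takes only a few lines with NumPy's `Polynomial` class. The sketch assumes each patch is given as a hypothetical `(p_l, p_u, lo, hi)` tuple; multivariate patches would integrate the gap over a box instead.

```python
from numpy.polynomial import Polynomial as P


def patch_volume(p_l, p_u, lo, hi):
    """Integral of the gap p_u - p_l over the patch's interval [lo, hi]."""
    gap = (p_u - p_l).integ()  # antiderivative of the gap
    return gap(hi) - gap(lo)


def err(finest_partition):
    """Err(G) for 1-D patches given as (p_l, p_u, lo, hi) tuples (Equation 2.25)."""
    return sum(patch_volume(pl, pu, lo, hi) for pl, pu, lo, hi in finest_partition)


# two patches on [0, 2]: a constant gap of 1 on [0, 1], a gap of 2x on [1, 2]
G = [(P([0.0]), P([1.0]), 0.0, 1.0), (P([0.0]), P([0.0, 2.0]), 1.0, 2.0)]
total = err(G)  # expected value: 1 + (4 - 1) = 4
```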

Naively, one would simply split a patch if it has a large volume, i.e. its lower bound is much smaller than its upper bound. However, this approach does not work for two reasons:

* The volumes of patches grow multiplicatively: a small difference in the bounds of one patch can cause a much larger volume in other patches downstream.
* Some patches with large errors do not need to be split, because they are multiplied by a patch that is close to 0, effectively cancelling out the errors.

Instead, one should directly measure the effect of a patch on the resulting factor of the VE algorithm, $G_{result}$. One approach is to tentatively split a patch, measure the difference in error between $G_{result}^{unsplit}$ and $G_{result}^{split}$, and select the patch that leads to the greatest decrease in error. However, this approach does not work, because the effect of splitting a single patch is diluted by the imprecision of other patches. The improvement cannot be measured accurately until the other patches have also been refined and made precise. As a result, this heuristic favors patches that give an immediate improvement in the quality of $G_{result}$. It is prone to getting stuck in a local optimum, splitting a single patch into smaller and smaller patches while ignoring the other patches altogether.

2.5.2

The Splitting Heuristic

Instead of measuring the effect of a patch by the error, our heuristic measures the effect of a patch by its imprecision. Intuitively, the imprecision of a patch is measured by pretending that all the other patches are precise and that this patch is the only one with imprecise lower and upper bounds. Formally, let $G'_{patch^*}$ be the set of abstract factors in which every patch has its lower bound $p_l$ set equal to its upper bound $p_u$, except for the patch in question, $patch^*$. Then, the imprecision is defined as:

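$$Imprecision(patch^*) = Err(G'_{patch^*, result}) \qquad (2.26)$$

where $G'_{patch^*, result}$ denotes the result of running the VE algorithm on $G'_{patch^*}$. Our heuristic computes the imprecision of every patch and chooses the one with the largest imprecision to split:

$$patch_{split} = \operatorname*{argmax}_{patch} Imprecision(patch) \qquad (2.27)$$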
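A toy instance makes the definition concrete. It assumes every patch bound is a constant and that $G_{result}$ is simply the product of all patches, i.e. one chain of multiplications. The callables `run_ve` and `err` are hypothetical stand-ins for the VE algorithm and Equation 2.25.

```python
from math import prod
from typing import Callable, List, Sequence, Tuple

Patch = Tuple[object, object]  # (p_l, p_u); the bound representation is left open


def imprecision(patches: Sequence[Patch], k: int,
                run_ve: Callable[[List[Patch]], object],
                err: Callable[[object], float]) -> float:
    """Err of G'_result, where every patch except patches[k] has p_l set to p_u."""
    collapsed = [p if i == k else (p[1], p[1]) for i, p in enumerate(patches)]
    return err(run_ve(collapsed))


def choose_split(patches, run_ve, err) -> int:
    """Index of the patch with the largest imprecision (Equation 2.27)."""
    return max(range(len(patches)),
               key=lambda k: imprecision(patches, k, run_ve, err))


# Toy model: constant bounds, and G_result is the product of all patches.
def toy_ve(ps):
    return prod(l for l, _ in ps), prod(u for _, u in ps)


def toy_err(result):
    lo, hi = result
    return hi - lo
```

With patches `(0.5, 1.0)` and `(0.0, 0.01)`, the first patch has by far the larger gap, yet its imprecision is only 0.005 against 0.01 for the second. Its contribution is scaled down by the second patch's small upper bound, so the heuristic splits the second patch.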

By measuring imprecision instead of error, the effects of a patch's bounds are not diluted by the imprecision of other patches. As a result, as a patch is split and becomes smaller, its imprecision decreases relative to the other patches, allowing other patches to be split as well. This avoids the problem of getting stuck in a local optimum.

2.5.3

Optimization of the Heuristic

As one can imagine, computing the imprecision of all of the patches is expensive. In the worst case, this computation runs in $O(n^2)$ time, where $n$ is the total number of patches. The reason is that computing the imprecision of each patch induces its own modified abstract factors $G'_{patch}$, effectively creating a completely new set of patches. However, imprecision has a useful property: the imprecision of any patch can only decrease as the other patches become more refined. Let $G$ denote a set of abstract factors and $G'$ the same set of abstract factors after some refinement and splitting has occurred; then:

$$Err(G'_{patch, result}) \le Err(G_{patch, result}) \quad \forall\, patch \qquad (2.28)$$

Because our heuristic only splits the patch with the largest imprecision, there is no need to recompute the imprecision of a patch that already has a small imprecision: only patches with larger imprecisions can be chosen for splitting. Using this insight, we can organize all the patches and their imprecisions into a priority queue and recompute the imprecision only of the patch at the front of the queue:

get_split() =
    patch, imprecision = dequeue!(imprecision_queue)
    recomputed_imprecision = compute_imprecision(patch)
    while (recomputed_imprecision != imprecision)
        enqueue!(imprecision_queue, patch, recomputed_imprecision)
        patch, imprecision = dequeue!(imprecision_queue)
        recomputed_imprecision = compute_imprecision(patch)
    end
    patch
end

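This procedure takes the patch with the largest stored imprecision. If the recomputed imprecision equals the stored one, it returns the patch. Otherwise, it puts the patch back into the queue with its recomputed imprecision and tries again. The returned patch has the largest current imprecision. By Equation 2.28, every stored value is an upper bound on that patch's current imprecision. A freshly recomputed value that still matches the front of the queue therefore cannot be exceeded by any other patch.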
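The get_split procedure can be written with Python's `heapq` module, which provides a min-heap. Imprecisions are negated to obtain the max-priority queue the procedure needs, and a counter breaks ties so that patches themselves are never compared. `compute_imprecision` is a hypothetical callable implementing Equation 2.26.

```python
import heapq
import itertools
from typing import Callable, Hashable, Iterable, List, Tuple


class ImprecisionQueue:
    """Max-priority queue of patches keyed by (possibly stale) imprecision."""

    def __init__(self, items: Iterable[Tuple[Hashable, float]]):
        self._tie = itertools.count()  # tie-breaker so patches are never compared
        self._heap: List[Tuple[float, int, Hashable]] = []
        for patch, imp in items:
            self.enqueue(patch, imp)

    def enqueue(self, patch: Hashable, imp: float) -> None:
        heapq.heappush(self._heap, (-imp, next(self._tie), patch))  # negate: max-heap

    def dequeue(self) -> Tuple[Hashable, float]:
        neg_imp, _, patch = heapq.heappop(self._heap)
        return patch, -neg_imp


def get_split(queue: ImprecisionQueue,
              compute_imprecision: Callable[[Hashable], float]) -> Hashable:
    """Return the patch whose freshly recomputed imprecision is still the largest."""
    patch, imp = queue.dequeue()
    fresh = compute_imprecision(patch)
    while fresh != imp:                # stored value was stale (it can only shrink)
        queue.enqueue(patch, fresh)
        patch, imp = queue.dequeue()
        fresh = compute_imprecision(patch)
    return patch
```

The returned patch is removed from the queue. After splitting it, the caller would enqueue the two child patches with their freshly computed imprecisions.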

A further optimization follows from noticing that the bounds of a patch affect only a few of the other patches. The imprecision of a patch can therefore be computed more locally, using only the patches it affects.

2.6

Polynomial Bounds

Recall from the variable elimination section that the soundness of the algorithm only assumes that one can derive a sound lower bound $p_l$ and a sound upper bound $p_u$ for the patches of the original factors, as the bounds are preserved by the integration and multiplication operations. We would like to emphasize that our technique works with any valid lower and upper bounds for the patches, as long as the bounds come from a family of functions that can be symbolically multiplied and integrated. In our algorithm, this family is the polynomials, as they can easily be multiplied, integrated, and bounded. The key question of this section is: given an arbitrary non-negative potential function $f$ over a domain $d$, can the algorithm provide lower and upper bounds such that $p_l \le f \le p_u$? We explain how to obtain the bounds in two parts. In part one, we briefly explain how to obtain a good polynomial approximation to the function $f$. In part two, we explain how to shift the polynomial by a constant so that the shifted polynomials become valid bounds for $f$.

2.6.1

Polynomial Approximation

In the approximation step, an arbitrary multivariate function $f(x_1, \ldots, x_k)$ must be approximated by a polynomial $p$ over a domain $d$. As $f$ is a valid potential function, it must have non-zero measure and be everywhere non-negative and continuous. The function $f$ is not necessarily differentiable, as our distance functions, which define the potentials for the constraints, use the min function internally.

To approximate the function $f$, we use techniques developed in the chebfun package for MATLAB [8], which we describe at a high level. This algorithm works by iteratively projecting the function $f$ onto an outer product of Chebyshev polynomials. Let $p_i$ denote a Chebyshev polynomial in the single variable $x_i$; then the product

$$pp = \prod_{i=1}^{k} p_i$$

is a polynomial in the variables $x_1, \ldots, x_k$, expressed as an outer product. The algorithm constructs $pp_1$, which attempts to approximate our original function $f$, and computes the residue $r_1 = f - pp_1$. This residue function is again approximated by $pp_2$, and a new residue $r_2 = r_1 - pp_2$ is computed. This process is repeated until the residue function is sufficiently small. The result is a sum of outer products of polynomials, which is the polynomial $p = pp_1 + \cdots + pp_m$ that approximates the function $f$.

In our work, we limit the degree of the Chebyshev polynomials to 2. Higher degrees approximate the function $f$ better but become numerically unstable when multiplied and integrated.
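Below is a minimal two-variable sketch of this residual loop. It is a simplified stand-in, not chebfun's algorithm [8], and every name in it is hypothetical. The residual is sampled on a grid. Each rank-one term $pp_k$ is taken from the row and column through the largest residual entry, a pivoting idea in the spirit of chebfun2. Each factor is fit by a degree-2 Chebyshev least-squares fit with NumPy's `Chebyshev.fit`.

```python
import numpy as np
from numpy.polynomial import Chebyshev


def outer_product_approx(f, dom_x, dom_y, deg=2, max_terms=4, tol=1e-10, n=33):
    """Approximate f(x, y) on dom_x x dom_y by sum_k px_k(x) * py_k(y).

    Each px_k, py_k is a Chebyshev series of degree <= deg fit by least squares."""
    xs = np.linspace(dom_x[0], dom_x[1], n)
    ys = np.linspace(dom_y[0], dom_y[1], n)
    residual = f(xs[:, None], ys[None, :])      # r_0 = f sampled on the grid
    terms = []
    for _ in range(max_terms):
        i, j = np.unravel_index(np.argmax(np.abs(residual)), residual.shape)
        pivot = residual[i, j]
        if abs(pivot) < tol:                     # residual small enough: stop
            break
        px = Chebyshev.fit(xs, residual[:, j] / pivot, deg, domain=list(dom_x))
        py = Chebyshev.fit(ys, residual[i, :], deg, domain=list(dom_y))
        terms.append((px, py))
        residual = residual - np.outer(px(xs), py(ys))  # r_k = r_{k-1} - pp_k
    return terms


def evaluate(terms, x, y):
    """Value of p = pp_1 + ... + pp_m at (x, y)."""
    return sum(px(x) * py(y) for px, py in terms)
```

For $f(x, y) = xy + 1$ on $[0, 1]^2$, which is a sum of two outer products of linear factors, the loop stops after two terms and reproduces $f$ up to rounding.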

2.6.2

Approximate Bounding

Although the algorithm in [8] gives very good polynomial approximations, it does not provide a bound on how close the polynomial actually is to the function it approximates. As a result, we must retrofit the approximating polynomial $p$ with two constants $c_1$ and $c_2$ such that $p - c_1 \le f \le p + c_2$.

The simplest bounding method is to use random sampling in conjunction with gradient descent on the difference function $f - p$. The maximum of the difference function becomes $c_2$, as it is the amount by which $p$ must be shifted up to always be greater than $f$. Conversely, the minimum of the difference function becomes $-c_1$, as $c_1$ is the amount by which $p$ must be shifted down to always be smaller than $f$. This is the default method of obtaining bounds, as it gives very tight bounds and is cheap to compute. The obvious drawback of this method is that it is not sound: sampling with gradient descent is prone to getting stuck in local optima, which leads to an incorrect bound. However, in practice it rarely appears to behave abnormally.
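A minimal sketch of the sampling method follows, assuming a box domain given by lower and upper corners. `f` and `p` are hypothetical callables taking a coordinate vector. The gradient is approximated by central differences, and each gradient step is clipped back into the box. Like the method it illustrates, it is not sound.

```python
import numpy as np


def approximate_shifts(f, p, lo, hi, n_samples=500, n_steps=50, lr=0.05, seed=0):
    """Estimate (c1, c2) with p - c1 <= f <= p + c2 on the box [lo, hi].

    NOT sound: random sampling plus projected gradient steps on g = f - p
    can miss the true extrema and report shifts that are too small."""
    rng = np.random.default_rng(seed)
    lo, hi = np.asarray(lo, dtype=float), np.asarray(hi, dtype=float)

    def g(x):
        return f(x) - p(x)                      # the difference function f - p

    def climb(x, sign, h=1e-6):
        # projected gradient ascent on sign * g with a central-difference gradient
        for _ in range(n_steps):
            grad = np.array([(g(x + h * e) - g(x - h * e)) / (2 * h)
                             for e in np.eye(x.size)])
            x = np.clip(x + sign * lr * grad, lo, hi)
        return x

    pts = rng.uniform(lo, hi, size=(n_samples, lo.size))
    vals = np.array([g(x) for x in pts])
    x_max = climb(pts[np.argmax(vals)], +1.0)   # refine the best sample upward
    x_min = climb(pts[np.argmin(vals)], -1.0)   # refine the worst sample downward
    c2 = max(vals.max(), g(x_max))              # max(f - p): shift p up by c2
    c1 = -min(vals.min(), g(x_min))             # -min(f - p): shift p down by c1
    return c1, c2
```

For $f(x) = x^2$ and $p(x) = x$ on $[0, 1]$, the difference $x^2 - x$ ranges over $[-0.25, 0]$, so the sketch should return $c_1 \approx 0.25$ and $c_2 \approx 0$.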

2.6.3

Exact Bounding

If one insists on sound bounds, however, the algorithm can provide them at the expense of bound quality. Consider a function $f$ and its approximating polynomial $p$ over a domain $d$, and consider bounds on the values of $f$ and $p$ individually: $a \le \min(f) \le \max(f) \le b$ and $c \le \min(p) \le \max(p) \le e$. Then, letting $c_2 = b - c$ and $c_1 = e - a$, $p - c_1$ and $p + c_2$ form valid lower and upper bounds for $f$ over $d$. At every point, $p - c_1 \le e - c_1 = a \le f$ and $p + c_2 \ge c + c_2 = b \ge f$. It thus remains to provide sound bounds for the polynomial $p$ and the potential function $f$ individually over the domain $d$, which we describe now.
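The shift computation itself is simple arithmetic. In this sketch, `exact_shifts` is a hypothetical helper, and $e$ denotes the upper bound on $p$.

```python
def exact_shifts(f_range, p_range):
    """Sound shifts from a <= f <= b and c <= p <= e on the domain d.

    Returns (c1, c2) such that p - c1 <= f <= p + c2 everywhere on d."""
    a, b = f_range
    c, e = p_range
    c1 = e - a   # p - c1 <= e - c1 = a <= f
    c2 = b - c   # p + c2 >= c + c2 = b >= f
    return c1, c2
```

For example, with $f \in [0, 1]$ and $p \in [0.2, 0.9]$, the shifts are $c_1 = 0.9$ and $c_2 = 0.8$. The band between $p - c_1$ and $p + c_2$ then has width 1.7, wider than the whole range of $f$, which is the loss of bound quality mentioned above.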

2.6.4

Bounding Polynomials Exactly

Given a polynomial p over a domain d, the maximum and minimum of the polynomial

can be bounded in two ways, either via interval arithmetic or by root-finding. Interval

arithmetic [9] is a form of arithmetic where the operators work on intervals instead of

single values, with the guarantee that for any points chosen from the input intervals,

the usual arithmetic operation on those points results in a point that is contained

in the result interval. For instance, we can add two intervals: [1, 3] + [3, 5] = [4, 8],

and multiply two intervals [-1, 2] * [-1,2] = [-2,4].

As a polynomial is a series

of additions and multiplications, one can bound the maximum and minimum of a

polynomial over a domain d using interval arithmetic.
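The two interval operations above, together with Horner's rule for evaluating a univariate polynomial, are enough to enclose a polynomial's range. This is a minimal Python sketch; the `Interval` class and `poly_range` are illustrative names, not the thesis's code or the API of [9].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    lo: float
    hi: float

    def __add__(self, other: "Interval") -> "Interval":
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __mul__(self, other: "Interval") -> "Interval":
        # the extremes of a product are attained at endpoint combinations
        prods = [self.lo * other.lo, self.lo * other.hi,
                 self.hi * other.lo, self.hi * other.hi]
        return Interval(min(prods), max(prods))


def const(v: float) -> Interval:
    return Interval(v, v)


def poly_range(coeffs, x: Interval) -> Interval:
    """Enclose {p(t) : t in x} for p(t) = sum coeffs[k] * t**k, via Horner's rule."""
    acc = const(coeffs[-1])
    for c in reversed(coeffs[:-1]):
        acc = acc * x + const(c)
    return acc
```

Evaluating $t^2 - t$ on $[0, 1]$ this way gives the enclosure $[-1, 0]$. It contains the true range $[-0.25, 0]$ but is four times wider, a typical effect of interval arithmetic treating each occurrence of $t$ independently.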

A more precise way of bounding the polynomial is root-finding. The maximum and minimum of the polynomial can only occur at critical points, where all the partial derivatives of the polynomial equal 0, or on the boundary of the domain. Using a root-finding library [10], one can easily identify the critical points in the interior of the domain. Substituting the boundary values of the domain yields simpler polynomials in fewer variables, whose critical points can be found in the same way. This gives all the candidate extrema of the polynomial on the domain $d$, and enumerating over them yields the maximum and the minimum.
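For a univariate polynomial the procedure reduces to a few lines with NumPy's `Polynomial` class. The candidates are the real roots of the derivative that lie inside the interval, plus the two endpoints. The multivariate case needs a polynomial-system solver such as PHCpack [10] for the interior critical points and is not shown. The name `poly_min_max` is illustrative.

```python
import numpy as np
from numpy.polynomial import Polynomial


def poly_min_max(p: Polynomial, lo: float, hi: float, tol: float = 1e-12):
    """Exact min and max of a univariate polynomial p on [lo, hi].

    Candidates: real roots of p' inside the interval, plus both endpoints."""
    crit = p.deriv().roots()
    crit = crit[np.abs(crit.imag) <= tol].real          # keep real critical points
    crit = crit[(crit >= lo) & (crit <= hi)]            # keep interior ones
    values = p(np.concatenate([crit, [lo, hi]]))
    return values.min(), values.max()
```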

2.6.5

Bounding Potential Functions Exactly

As with polynomials, one can bound the potential functions with interval arithmetic, but this gives a very loose bound. However, we are able to find the exact bound for the uniform potential function over the domain $d$ analytically; the code for computing this bound is given in Appendix A. For the Gaussian function, we are still working on a sound bound for its potentials.

For the potential function that corresponds to a constraint, however, we are able to obtain very tight bounds symbolically. Recall that the potential function of a constraint factor is obtained by first computing the distance function $d_c$ of the constraint $c$ and then applying a Gaussian function on top of it. Therefore, if we can bound the distance function over a domain $d$, we obtain a bound for the potential function, as the Gaussian function is monotonically decreasing for non-negative inputs. The question we must answer is therefore: given a distance function $d_c$ and a domain $d$, what are the smallest and largest distances attainable over all the points in the domain?

The fact that the distance function measures Euclidean distance plays a key role. We can draw a bounding sphere around the domain $d$, with center $o$ and radius $r$. By the triangle inequality, the Euclidean distance to a fixed set changes by at most $\|x - o\|$ when moving from $o$ to $x$. Every point of $d$ lies within distance $r$ of $o$, so its distance value lies within $r$ of $d_c(o)$:

$$o = center(d), \qquad r = \frac{diameter(d)}{2}$$

$$d_{furthest} = d_c(o) + r, \qquad d_{closest} = \max(0,\; d_c(o) - r)$$
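Below is a minimal Python sketch of the bounding-sphere rule for a box-shaped domain. It assumes `dist` is a true, non-squared Euclidean distance to the constraint's solution set, so it changes by at most the distance moved. The `potential_range` helper further assumes a Gaussian of the form $\exp(-t^2 / 2\sigma^2)$ with a hypothetical width `sigma`; the thesis does not fix these details here.

```python
import math
from typing import Callable, Sequence, Tuple

Box = Sequence[Tuple[float, float]]


def distance_range(dist: Callable[[Sequence[float]], float], box: Box):
    """(d_closest, d_furthest) for dist over the box, via its bounding sphere."""
    o = [(lo + hi) / 2.0 for lo, hi in box]                       # center(d)
    r = math.sqrt(sum((hi - lo) ** 2 for lo, hi in box)) / 2.0    # diameter(d) / 2
    d_o = dist(o)
    return max(0.0, d_o - r), d_o + r


def potential_range(dist, box, sigma=1.0):
    """The assumed Gaussian is decreasing in the distance, so the ends swap."""
    closest, furthest = distance_range(dist, box)

    def gauss(t):
        return math.exp(-t * t / (2.0 * sigma ** 2))

    return gauss(furthest), gauss(closest)
```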

Figure 2-6 illustrates the bound on the distance function of a constraint factor.

[Figure 2-6 panels: the set {y | y satisfies c} and the rectangular domain d, with the distances a and b marked]

Figure 2-6: The domain d, a rectangle, has its distance bounded by a and b, derived from the bounding sphere.

Chapter 3

Results

We will now list several preliminary results of our algorithm.

3.1

Simple Constraint

Consider the propagation of a simple set of constraints. Say we have the constraints

x = y and x = 5, and we wish to draw a conclusion on the value of y. This can

be done by combining the two factors together and integrating away the variable x.

Figure 3-1 shows the result after 100 refinement steps.


Figure 3-1: Visualizing the result factor of propagating a simple constraint

As we can see, the distribution correctly clusters around the value $y = 5$, and we have the guarantee that the true distribution lies between the lower bound and the upper bound.

3.2

Backward Inference

Consider the set of constraints $z = Unif(y, x)$, $y + 20 = x$, $z = 10$. Given these constraints, what can one say about the joint distribution of $y$ and $x$? This experiment explores the ability to infer the hidden relationship between $y$ and $x$ after making an observation on the output $z$.


Figure 3-2: Visualizing the relationship between y and x after making an observation

This is a preliminary result demonstrating the ability to infer relationships between variables. In this case, the distribution roughly satisfies the constraint $y + 20 = x$; however, the effect of the observation $z = 10$ is not very pronounced in this experiment. This may be due to an insufficient number of refinements, as our algorithm still has trouble scaling to many refinements.

Appendix A

Potential Function and Distance Functions

Here is the Python code defining the bound object for the uniform distribution:

# the variable order for this is [low, high, x]
# the value for this potential is 1 / (high - low) if low < x < high
# and 0 otherwise
class UniformPotential(Potential):
    def __init__(self, delta):
        self.delta = delta

    def find_minmax(self, constraints):
        L1, L2 = constraints[0]
        H1, H2 = constraints[1]
        X1, X2 = constraints[2]
        delta = self.delta

        # let's first find the minimum
        # for finding the minimum, there are 2 steps to it. For the 8 vertices of the
        # cube, if any point is UNSAT, then the minimum is 0; otherwise, it is the
        # smallest achievable value of 1 / (H2 - L1). The value of x does _not_
        # matter here
        def pt_sat_constraints(pt):
            l, h, x = pt
            sat1 = l <= x
            sat2 = x <= h
            sat3 = h >= l + delta
            return sat1 and sat2 and sat3

        pts_satisfy = [pt_sat_constraints(pt) for pt in self.get_points(constraints)]
        # if it has False, min is 0; otherwise, min is the smallest value
        minimum = 0.0 if False in pts_satisfy else 1.0 / (H2 - L1)

        # let's now find the maximum, some helper functions first
        # the idea is that the max can only happen on the SAT side, so we don't need
        # to worry about the UNSAT regions; if nothing is SAT, the max will just be 0

        # intersection with the first constraint H >= X1
        # return a box if there is an intersection, or None if the intersection is empty
        def get_first_intersection(l1, l2, h1, h2):
            if h1 >= X1:
                return l1, l2, h1, h2
            if h2 < X1:
                return None, None, None, None
            else:
                return l1, l2, X1, h2

        # intersection with the second constraint L <= X2
        # return a box if there is an intersection, or None if the intersection is empty
        def get_second_intersection(l1, l2, h1, h2):
            if l1 is None:
                return None, None, None, None
            if l2 <= X2:
                return l1, l2, h1, h2
            if l1 > X2:
                return None, None, None, None
            else:
                return l1, X2, h1, h2

        # given a point (ll, hh) in 2D, check if it satisfies the 3rd constraint
        # H >= L + delta
        def pt_sat_3rd(pt):
            ll, hh = pt
            return hh >= ll + delta

        # run the box through the first 2 constraints; if it is UNSAT,
        # we know the max / min are both 0, otherwise we do some math
        after_intersection = get_second_intersection(
            *get_first_intersection(L1, L2, H1, H2))

        maximum = None
        # if the intersection is empty, our box is UNSAT:
        # both upper / lower are 0
        if after_intersection[0] is None:
            maximum = 0.0
        else:
            l1, l2, h1, h2 = after_intersection
            pt_satisfiability = [pt_sat_3rd(pt)
                                 for pt in self.get_points([(l1, l2), (h1, h2)])]
            # if everything is SAT, the max is at the point closest to the line
            if False not in pt_satisfiability:
                maximum = 1.0 / (h1 - l2)
            # if none of the points satisfy, we're entirely out of the game, so 0
            if True not in pt_satisfiability:
                maximum = 0.0
            # if some are SAT and some are UNSAT, then the line pierces the box:
            # the minimum is outside, i.e. 0, and the max is 1.0 / delta
            if False in pt_satisfiability and True in pt_satisfiability:
                maximum = 1.0 / delta

        return minimum, maximum

Here is the Python code defining the distance functions for the various constraint factors:

# equality:
# y = x
@to_11_norm
def equal_dist(lst):
    x1 = lst[0]
    x2 = lst[1]
    return 0.5 * pow((x1 - x2), 2)

# constant:
# x = const
def const_dist(const):
    def _const_dist(lst):
        x = lst[0]
        return equal_dist([x, const])
    return _const_dist

# uniform:
# x = uniform(a, b)
@to_11_norm
def uniform_dist(a, b):
    def _uniform(lst):
        x = lst[0]
        if a < x and x < b:
            return 0.0
        else:
            return 5.0
    return _uniform

# assert:
# asserting x is true
def assert_dist(lst):
    x = lst[0]
    return equal_dist([x, 1.0])

# and:
# y = x1 and x2
@to_11_norm
def and_dist(lst):
    y = lst[0]
    x1 = lst[1]
    x2 = lst[2]
    return min(pow(y, 2) + pow(x1, 2) + pow(x2, 2),
               pow(y, 2) + pow(x1-1, 2) + pow(x2, 2),
               pow(y, 2) + pow(x1, 2) + pow(x2-1, 2),
               pow(y-1, 2) + pow(x1-1, 2) + pow(x2-1, 2))

# or:
# y = x1 or x2
@to_11_norm
def or_dist(lst):
    y = lst[0]
    x1 = lst[1]
    x2 = lst[2]
    return min(pow(y, 2) + pow(x1, 2) + pow(x2, 2),
               pow(y-1, 2) + pow(x1-1, 2) + pow(x2, 2),
               pow(y-1, 2) + pow(x1, 2) + pow(x2-1, 2),
               pow(y-1, 2) + pow(x1-1, 2) + pow(x2-1, 2))

# xor:
# y = x1 xor x2
@to_11_norm
def xor_dist(lst):
    y = lst[0]
    x1 = lst[1]
    x2 = lst[2]
    return min(pow(y, 2) + pow(x1, 2) + pow(x2, 2),
               pow(y-1, 2) + pow(x1-1, 2) + pow(x2, 2),
               pow(y-1, 2) + pow(x1, 2) + pow(x2-1, 2),
               pow(y, 2) + pow(x1-1, 2) + pow(x2-1, 2))

# plus:
# y = x1 + x2
@to_11_norm
def plus_dist(lst):
    y = lst[0]
    x1 = lst[1]
    x2 = lst[2]
    return 3*pow(1.0/3 * (x1+x2-y), 2)

# TODO: make it not approximate
# times:
# y = x1 * x2
@to_11_norm
def times_dist(lst):
    y = lst[0]
    x1 = lst[1]
    x2 = lst[2]
    return abs(y-(x1*x2))

# mod:
# y = x1 % x2
def mod_dist(lst):
    y = lst[0]
    x1 = lst[1]
    x2 = lst[2]

    def mod_sat(pt):
        y, x1, x2 = pt
        if x2 == 0:
            return False
        return y == x1 % x2

    yr = round(y)
    x1r = round(x1)
    x2r = round(x2)
    max_dist = float("inf")
    d = 0
    cand_dist = float("inf")
    # search integer shells of growing radius d around the rounded point
    while d < max_dist:
        for i in range(-d, d+1):
            for j in range(-d, d+1):
                pt1 = (yr-d, x1r+i, x2r+j)
                pt2 = (yr+d, x1r+i, x2r+j)
                pt3 = (yr+i, x1r-d, x2r+j)
                pt4 = (yr+i, x1r+d, x2r+j)
                pt5 = (yr+i, x1r+j, x2r-d)
                pt6 = (yr+i, x1r+j, x2r+d)
                candidates = [pt for pt in (pt1, pt2, pt3, pt4, pt5, pt6) if mod_sat(pt)]
                dists = [euclid_dist(pt, (y, x1, x2)) for pt in candidates]
                if not dists:
                    continue
                cand_dist = min(cand_dist, min(dists))
                # euclid_dist actually returns the squared dist, we need to sqrt it
                max_dist = int(pow(cand_dist, 0.5)+1)
        d = d+1
    return cand_dist

# indirect less than:
# y = x1 < x2
@to_11_norm
def lt_dist(lst):
    y = lst[0]
    x1 = lst[1]
    x2 = lst[2]

    def lt_case1(y, x1, x2):
        if (x1 > x2 or x1 == x2):
            return pow(y, 2)
        else:
            return pow(y, 2)+2*pow((x1-x2)/2, 2)

    def lt_case2(y, x1, x2):
        if (x1 < x2):
            return pow(y-1, 2)
        else:
            return pow(y-1, 2)+2*pow((x1-x2)/2, 2)

    return min(lt_case1(y, x1, x2), lt_case2(y, x1, x2))

# direct less than:
# x1 < x2
@to_11_norm
def less_dist(lst):
    y = 1.0
    x1 = lst[0]
    x2 = lst[1]

    def lt_case1(y, x1, x2):
        if (x1 > x2 or x1 == x2):
            return pow(y, 2)
        else:
            return pow(y, 2)+2*pow((x1-x2)/2, 2)

    def lt_case2(y, x1, x2):
        if (x1 < x2):
            return pow(y-1, 2)
        else:
            return pow(y-1, 2)+2*pow((x1-x2)/2, 2)

    return min(lt_case1(y, x1, x2), lt_case2(y, x1, x2))

# indirect equal:
# y = (x1 == x2)
@to_11_norm
def eq_eq_dist(lst):
    y = lst[0]
    x1 = lst[1]
    x2 = lst[2]
    dist_uneq = 0
    # if the two numbers are sufficiently different, take projection
    if (abs(x1 - x2) > 1):
        dist_uneq = pow(y, 2)
    # otherwise, take the two lines:
    # x2 = x1+1, x2 = x1-1 and
    # take the min dist to those two lines
    else:
        dist_uneq1 = pow(y, 2) + \
            pow(0.5*(x2-x1-1), 2) + \
            pow(0.5*(x1-x2+1), 2)
        dist_uneq2 = pow(y, 2) + \
            pow(0.5*(x2-x1+1), 2) + \
            pow(0.5*(x1-x2-1), 2)
        dist_uneq = min(dist_uneq1, dist_uneq2)
    dist_eq = pow(0.5*(x2-x1), 2) + \
        pow(0.5*(x1-x2), 2) + \
        pow(y-1, 2)
    return min(dist_eq, dist_uneq)

# generalize from indirect equal:
# y = |x1 - x2| < bound
@to_11_norm
def same_dist(bound):
    def _eq_eq_dist(lst):
        y = lst[0]
        x1 = lst[1]
        x2 = lst[2]
        true_y_dist = pow(1 - y, 2)
        false_y_dist = pow(y, 2)
        dist_lateral1 = pow(0.5*(x2-x1-bound), 2) + \
            pow(0.5*(x1-x2+bound), 2)
        dist_lateral2 = pow(0.5*(x2-x1+bound), 2) + \
            pow(0.5*(x1-x2-bound), 2)
        # if sufficiently close, distance to true is just projection
        if (abs(x1 - x2) < bound):
            dist_eq = true_y_dist
            dist_uneq = false_y_dist + min(dist_lateral1, dist_lateral2)
            return min(dist_eq, dist_uneq)
        else:
            dist_uneq = false_y_dist
            dist_eq = true_y_dist + min(dist_lateral1, dist_lateral2)
            return min(dist_eq, dist_uneq)
    return _eq_eq_dist

# not:
# y = not x
@to_11_norm
def not_dist(lst):
    y = lst[0]
    x = lst[1]
    return min(pow(y-1, 2) + pow(x, 2),
               pow(y, 2) + pow(x-1, 2))

# neg:
# y = -x
@to_11_norm
def neg_dist(lst):
    y = lst[0]
    x = lst[1]
    return 2*pow((y+x)/2, 2)

# arracc:
# y = idx x0 x1 x2 ... xn-1
def arracc_dist(lst):
    y = lst[0]
    idx = lst[1]
    xs = lst[2]
    size = len(xs)

    def d_region1():
        if (idx < -1):
            return pow(y, 2)
        else:
            return pow(idx+1, 2) + pow(y, 2)

    def d_region_p():
        ret = float("inf")
        for p in range(size):
            ret = min(ret,
                      pow(idx-p, 2) + 2.0 * pow((y-xs[p])/2, 2))
        return ret

    def d_region2():
        if (idx > size):
            return pow(y, 2)
        else:
            return pow(idx-size, 2) + pow(y, 2)

    return min(d_region1(), d_region2(), d_region_p())

# arrass
# y = idx real_idx val_no val_yes
def arrass_dist(lst):
    y = lst[0]
    idx = lst[1]
    real_idx = lst[2]
    val_no = lst[3]
    val_yes = lst[4]

    def d_eq():
        return pow(idx-real_idx, 2) + pow((y-val_yes)/2, 2) * 2

    def d_un_eq1():
        if (idx < real_idx - 1):
            return 2 * pow((y-val_no)/2, 2)
        else:
            return 2 * pow((y-val_no)/2, 2) + pow(idx-(real_idx-1), 2)

    def d_un_eq2():
        if (idx > real_idx + 1):
            return 2 * pow((y-val_no)/2, 2)
        else:
            return 2 * pow((y-val_no)/2, 2) + pow(idx-(real_idx+1), 2)

    return min(d_eq(), d_un_eq1(), d_un_eq2())

# if then else: if b then v1 else v2
@to_11_norm
def ite_dist(lst):
    y = lst[0]
    b = lst[1]
    v1 = lst[2]
    v2 = lst[3]

    def d_true_and_v1():
        return pow(b-1.0, 2) + pow((y-v1)/2, 2) * 2

    def d_false_and_v2():
        return pow(b-0.0, 2) + pow((y-v2)/2, 2) * 2

    return min(d_true_and_v1(), d_false_and_v2())

Bibliography

[1] Christophe Andrieu, Nando de Freitas, Arnaud Doucet, and Michael I. Jordan. An introduction to MCMC for machine learning. Machine Learning, 50(1-2):5-43, 2003.

[2] R. C. Aster, B. Borchers, and C. H. Thurber. Parameter Estimation and Inverse Problems. Elsevier Science, 2011.

[3] Matthew J. Beal. Variational Algorithms for Approximate Bayesian Inference. PhD thesis, Gatsby Computational Neuroscience Unit, University College London, 2003.

[4] Christopher M. Bishop. Pattern Recognition and Machine Learning (Information Science and Statistics). Springer-Verlag New York, Inc., Secaucus, NJ, USA, 2006.

[5] Noah D. Goodman and Andreas Stuhlmüller. The design and implementation of probabilistic programming languages. Accessed: 2014-08-27.

[6] John P. Huelsenbeck, Fredrik Ronquist, Rasmus Nielsen, and Jonathan P. Bollback. Bayesian inference of phylogeny and its impact on evolutionary biology. Science, 294(5550):2310-2314, December 2001.

[7] Emma Rollon and Rina Dechter. New mini-bucket partitioning heuristics for bounding the probability of evidence. In Maria Fox and David Poole, editors, AAAI. AAAI Press, 2010.

[8] Alex Townsend and Lloyd N. Trefethen. An extension of chebfun to two dimensions. SIAM J. Scientific Computing, 35(6), 2013.

[9] Warwick Tucker. Validated Numerics: A Short Introduction to Rigorous Computations. Princeton University Press, 2011.

[10] Jan Verschelde. Algorithm 795: PHCpack: A general-purpose solver for polynomial systems by homotopy continuation. ACM Trans. Math. Softw., 25(2):251-276, June 1999.

[11] Udo von Toussaint. Bayesian inference in physics. Rev. Mod. Phys., 83:943-999, September 2011.

[12] N. L. Zhang and D. Poole. A simple approach to Bayesian network computation. In Proceedings of the 10th Canadian Conference on Artificial Intelligence, pages 16-22, Banff, Alberta, Canada, 1994.