
Hindawi Publishing Corporation

Mathematical Problems in Engineering

Volume 2011, Article ID 463087, 22 pages

doi:10.1155/2011/463087

Research Article

Global Convergence of a Nonlinear Conjugate Gradient Method

Liu Jin-kui, Zou Li-min, and Song Xiao-qian

School of Mathematics and Statistics, Chongqing Three Gorges University, Chongqing 404000, China

Correspondence should be addressed to Liu Jin-kui, liujinkui2006@126.com

Received 5 March 2011; Revised 21 May 2011; Accepted 4 June 2011

Academic Editor: Piermarco Cannarsa

Copyright © 2011 Liu Jin-kui et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

A modified PRP nonlinear conjugate gradient method to solve unconstrained optimization

problems is proposed. The important property of the proposed method is that the sufficient descent

property is guaranteed independent of any line search. By the use of the Wolfe line search, the

global convergence of the proposed method is established for nonconvex minimization. Numerical

results show that the proposed method is effective and promising in comparison with the VPRP,

CG-DESCENT, and DL methods.

1. Introduction

The nonlinear conjugate gradient method is one of the most efficient methods in solving

unconstrained optimization problems. It comprises a class of unconstrained optimization

algorithms which is characterized by low memory requirements and simplicity.

Consider the unconstrained optimization problem

$$\min_{x \in \mathbb{R}^n} f(x), \tag{1.1}$$

where $f : \mathbb{R}^n \to \mathbb{R}$ is continuously differentiable, and its gradient $g$ is available.

The iterates of the conjugate gradient method for solving (1.1) are given by

$$x_{k+1} = x_k + \alpha_k d_k, \tag{1.2}$$


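where the stepsize $\alpha_k$ is positive and computed by a certain line search, and the search direction $d_k$ is defined by

$$d_k = \begin{cases} -g_k, & \text{for } k = 1, \\ -g_k + \beta_k d_{k-1}, & \text{for } k \ge 2, \end{cases} \tag{1.3}$$

where $g_k = \nabla f(x_k)$, and $\beta_k$ is a scalar. Some well-known conjugate gradient methods include the Polak-Ribière-Polyak (PRP) method [1, 2], the Hestenes-Stiefel (HS) method [3], the Hager-Zhang (HZ) method [4], and the Dai-Liao (DL) method [5]. The parameters $\beta_k$ of these methods are specified as follows:

$$\begin{aligned}
\beta_k^{PRP} &= \frac{g_k^T (g_k - g_{k-1})}{\|g_{k-1}\|^2}, \\
\beta_k^{HS} &= \frac{g_k^T (g_k - g_{k-1})}{d_{k-1}^T (g_k - g_{k-1})}, \\
\beta_k^{HZ} &= \left( y_{k-1} - 2 d_{k-1} \frac{\|y_{k-1}\|^2}{d_{k-1}^T y_{k-1}} \right)^T \frac{g_k}{d_{k-1}^T y_{k-1}}, \\
\beta_k^{DL} &= \frac{g_k^T y_{k-1}}{d_{k-1}^T y_{k-1}} - t \frac{g_k^T s_{k-1}}{d_{k-1}^T y_{k-1}}, \quad t \ge 0,
\end{aligned} \tag{1.4}$$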

where $\|\cdot\|$ is the Euclidean norm, $y_{k-1} = g_k - g_{k-1}$, and $s_{k-1} = x_k - x_{k-1}$. If $f$ is a strictly convex quadratic function, the above methods are equivalent when an exact line search is used. If $f$ is nonconvex, their behavior may differ considerably.
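The four parameters in (1.4) can be written down directly. The following is a minimal sketch, assuming NumPy vectors for $g_k$, $g_{k-1}$, $d_{k-1}$, and $s_{k-1}$; the function names and the default `t=0.1` are illustrative choices, not part of the original methods' reference implementations.

```python
import numpy as np

def beta_prp(g, g_prev):
    """PRP parameter: g_k^T (g_k - g_{k-1}) / ||g_{k-1}||^2."""
    return g @ (g - g_prev) / (g_prev @ g_prev)

def beta_hs(g, g_prev, d_prev):
    """HS parameter: g_k^T y_{k-1} / (d_{k-1}^T y_{k-1})."""
    y = g - g_prev
    return (g @ y) / (d_prev @ y)

def beta_hz(g, g_prev, d_prev):
    """HZ parameter: (y - 2 d ||y||^2 / (d^T y))^T g / (d^T y)."""
    y = g - g_prev
    dy = d_prev @ y
    return ((y - 2.0 * d_prev * (y @ y) / dy) @ g) / dy

def beta_dl(g, g_prev, d_prev, s_prev, t=0.1):
    """DL parameter: g^T y / (d^T y) - t * g^T s / (d^T y), with t >= 0."""
    y = g - g_prev
    dy = d_prev @ y
    return (g @ y) / dy - t * (g @ s_prev) / dy
```

On a strictly convex quadratic with an exact first step, $g_2^T d_1 = 0$, so all four functions return the same value, which illustrates the equivalence mentioned above.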

In the past few years, the PRP method has been regarded as the most efficient conjugate gradient method in practical computation. One remarkable property of the PRP method is that it essentially performs a restart if a bad direction occurs (see [6]). Powell [7] constructed an example which showed that the PRP method can cycle infinitely without approaching any stationary point even if an exact line search is used. This counterexample also indicates that the PRP method has the drawback that it may not be globally convergent when the objective function is nonconvex. Powell [8] suggested that the parameter $\beta_k$ should not be allowed to be negative in the PRP method and defined $\beta_k$ as

$$\beta_k = \max\{0, \beta_k^{PRP}\}. \tag{1.5}$$

Gilbert and Nocedal [9] considered Powell's suggestion and proved the global convergence of the modified PRP method for nonconvex functions under an appropriate line search. In addition, there are many studies on the convergence properties of the PRP method (see [10–12]).

In recent years, much effort has been devoted to creating new methods which not only possess global convergence properties for general functions but also are superior to the original methods from the computational point of view. For example, Yu et al. [13] proposed a new nonlinear conjugate gradient method in which the parameter $\beta_k$ is defined on the basis of $\beta_k^{PRP}$ as

$$\beta_k^{VPRP} = \begin{cases} \dfrac{\|g_k\|^2 - |g_k^T g_{k-1}|}{\nu |g_k^T d_{k-1}| + \|g_{k-1}\|^2}, & \text{if } \|g_k\|^2 > |g_k^T g_{k-1}|, \\ 0, & \text{otherwise}, \end{cases} \tag{1.6}$$

where $\nu > 1$ (in this paper, we call this method the VPRP method). They proved the global convergence of the VPRP method with the Wolfe line search. Hager and Zhang [4] discussed the global convergence of the HZ method for strongly convex functions under the Wolfe line search and the Goldstein line search. In order to prove the global convergence for general functions, Hager and Zhang modified the parameter $\beta_k^{HZ}$ as

$$\beta_k^{MHZ} = \max\{\beta_k^{HZ}, \eta_k\}, \tag{1.7}$$

where

$$\eta_k = \frac{-1}{\|d_k\| \min\{\eta, \|g_k\|\}}, \quad \eta > 0. \tag{1.8}$$

The method corresponding to (1.7) is the well-known CG-DESCENT method.

Dai and Liao [5] proposed a new conjugacy condition, that is,

$$d_k^T y_{k-1} = -t g_k^T s_{k-1}, \quad t \ge 0. \tag{1.9}$$

Under the new conjugacy condition, they proved global convergence of the DL conjugate gradient method for uniformly convex functions. Following Powell's suggestion, Dai and Liao gave the modified parameter

$$\beta_k = \max\left\{ \frac{g_k^T y_{k-1}}{d_{k-1}^T y_{k-1}}, 0 \right\} - t \frac{g_k^T s_{k-1}}{d_{k-1}^T y_{k-1}}, \quad t \ge 0. \tag{1.10}$$

The method corresponding to (1.10) is the well-known DL method. Under the strong Wolfe line search, they studied the global convergence of the DL method for general functions. Zhang et al. [14] proposed a modified DL conjugate gradient method and proved its global convergence. Moreover, some researchers have been studying a new type of method called the spectral conjugate gradient method (see [15–17]).

This paper is organized as follows. In the next section, we propose a modified PRP method and prove its sufficient descent property. In Section 3, the global convergence of the method with the Wolfe line search is established. In Section 4, numerical results are reported. Conclusions are given in the last section.


2. Modified PRP Method

In this section, we propose a modified PRP conjugate gradient method in which the parameter $\beta_k$ is defined on the basis of $\beta_k^{PRP}$ as follows:

$$\beta_k^{MPRP} = \begin{cases} \dfrac{\|g_k\|^2 - g_k^T g_{k-1}}{\max\{0, g_k^T d_{k-1}\} + \|g_{k-1}\|^2}, & \text{if } \|g_k\|^2 \ge g_k^T g_{k-1} \ge m \|g_k\|^2, \\ 0, & \text{otherwise}, \end{cases} \tag{2.1}$$

in which $m \in (0, 1)$. We introduce the modified PRP method as follows.

2.1. Modified PRP (MPRP) Method

Step 1. Set $x_1 \in \mathbb{R}^n$, $\varepsilon \ge 0$, and $d_1 = -g_1$; if $\|g_1\| \le \varepsilon$, then stop.

Step 2. Compute $\alpha_k$ by some inexact line search.

Step 3. Let $x_{k+1} = x_k + \alpha_k d_k$, $g_{k+1} = g(x_{k+1})$; if $\|g_{k+1}\| \le \varepsilon$, then stop.

Step 4. Compute $\beta_{k+1}$ by (2.1), and generate $d_{k+1}$ by (1.3).

Step 5. Set $k = k + 1$, and go to Step 2.
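Steps 1 to 5 can be sketched in a few lines. The code below is a minimal illustration, not the authors' Matlab implementation: Step 2 only asks for "some inexact line search", so a simple Armijo backtracking rule is used here as a stand-in (an assumption; it is not the Wolfe search analysed later), and the names `beta_mprp`, `mprp_minimize`, and the default `m=0.5` are hypothetical.

```python
import numpy as np

def beta_mprp(g, g_prev, d_prev, m=0.5):
    """Parameter (2.1); m in (0, 1) is a user-chosen constant (0.5 is an assumption)."""
    gg = g @ g
    gg_prev = g @ g_prev
    if gg >= gg_prev >= m * gg:
        return (gg - gg_prev) / (max(0.0, g @ d_prev) + g_prev @ g_prev)
    return 0.0

def mprp_minimize(f, grad, x1, eps=1e-6, m=0.5, max_iter=10000):
    """Sketch of the MPRP iteration with an Armijo backtracking line search."""
    x = np.asarray(x1, dtype=float)
    g = grad(x)
    d = -g                                    # Step 1: d_1 = -g_1
    for k in range(1, max_iter + 1):
        if np.linalg.norm(g) <= eps:          # stopping test of Steps 1 and 3
            return x, k - 1
        # Step 2 (stand-in): halve alpha until the Armijo condition holds.
        alpha, fx, slope = 1.0, f(x), g @ d
        while f(x + alpha * d) > fx + 1e-4 * alpha * slope and alpha > 1e-16:
            alpha *= 0.5
        x = x + alpha * d                     # Step 3: new iterate and gradient
        g_new = grad(x)
        d = -g_new + beta_mprp(g_new, g, d, m) * d   # Step 4: (2.1) and (1.3)
        g = g_new                             # Step 5: k = k + 1
    return x, max_iter
```

For example, `mprp_minimize(f, grad, np.zeros(2))` with a small convex quadratic returns the approximate minimizer together with the number of iterations used.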

In the convergence analyses and implementations of conjugate gradient methods, one often requires the inexact line search to satisfy the Wolfe conditions or the strong Wolfe conditions. The Wolfe line search is to find $\alpha_k$ such that

$$f(x_k + \alpha_k d_k) \le f(x_k) + \delta \alpha_k g_k^T d_k, \tag{2.2}$$

$$g(x_k + \alpha_k d_k)^T d_k \ge \sigma g_k^T d_k, \tag{2.3}$$

where $0 < \delta < \sigma < 1$. The strong Wolfe line search consists of (2.2) and the following strengthened version of (2.3):

$$|g(x_k + \alpha_k d_k)^T d_k| \le -\sigma g_k^T d_k. \tag{2.4}$$
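The Wolfe and strong Wolfe tests are easy to state as a predicate on a trial stepsize. The following sketch assumes callables `f` and `grad` and NumPy vectors; the function name `satisfies_wolfe` is hypothetical, and the defaults `delta=0.01`, `sigma=0.1` are the values reported in Section 4.

```python
import numpy as np

def satisfies_wolfe(f, grad, x, d, alpha, delta=0.01, sigma=0.1, strong=False):
    """Check (2.2) together with (2.3), or with (2.4) when strong=True."""
    slope0 = grad(x) @ d                      # g_k^T d_k, negative for a descent direction
    x_new = x + alpha * d
    sufficient_decrease = f(x_new) <= f(x) + delta * alpha * slope0   # (2.2)
    slope1 = grad(x_new) @ d                  # g(x_k + alpha d_k)^T d_k
    if strong:
        curvature = abs(slope1) <= -sigma * slope0                    # (2.4)
    else:
        curvature = slope1 >= sigma * slope0                          # (2.3)
    return bool(sufficient_decrease and curvature)
```

A step that overshoots the one-dimensional minimizer can pass (2.3) while failing (2.4), which is the practical difference between the two searches.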

Moreover, in most references, the sufficient descent condition

$$g_k^T d_k \le -c \|g_k\|^2, \quad c > 0, \tag{2.5}$$

is assumed, since it plays a vital role in guaranteeing the global convergence properties of conjugate gradient methods. In this paper, however, $d_k$ satisfies (2.5) without any line search.

Theorem 2.1. Consider any method (1.2)-(1.3), where $\beta_k = \beta_k^{MPRP}$. If $g_k \ne 0$ for all $k \ge 1$, then

$$g_k^T d_k < -\|g_k\|^2, \quad \forall k \ge 1. \tag{2.6}$$


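Proof. Multiplying (1.3) by $g_k^T$, we get

$$g_k^T d_k = -\|g_k\|^2 + \beta_k^{MPRP} g_k^T d_{k-1}. \tag{2.7}$$

If $\beta_k^{MPRP} = 0$, from (2.7), we know that the conclusion (2.6) holds. If $\beta_k^{MPRP} \ne 0$, the proof is divided into the following two cases.

Firstly, if $g_k^T d_{k-1} \le 0$, then from (2.1) and (2.7), one has

$$\begin{aligned}
g_k^T d_k &= -\|g_k\|^2 + \frac{\|g_k\|^2 - g_k^T g_{k-1}}{\max\{0, g_k^T d_{k-1}\} + \|g_{k-1}\|^2} \cdot g_k^T d_{k-1} \\
&= -\|g_k\|^2 + \frac{\|g_k\|^2 - g_k^T g_{k-1}}{\|g_{k-1}\|^2} \cdot g_k^T d_{k-1} \\
&= -\|g_k\|^2 \cdot \frac{\left(g_k^T g_{k-1} / \|g_k\|^2\right) \cdot g_k^T d_{k-1} - g_k^T d_{k-1} + \|g_{k-1}\|^2}{\|g_{k-1}\|^2} \\
&= -\|g_k\|^2 \cdot \frac{\|g_{k-1}\|^2 - g_k^T d_{k-1} \left(1 - g_k^T g_{k-1} / \|g_k\|^2\right)}{\|g_{k-1}\|^2} \\
&\le -\|g_k\|^2 \cdot \frac{\|g_{k-1}\|^2}{\|g_{k-1}\|^2} = -\|g_k\|^2 < 0.
\end{aligned} \tag{2.8}$$

Secondly, if $g_k^T d_{k-1} > 0$, then from (2.7), we also have

$$g_k^T d_k < -\|g_k\|^2 + \frac{\|g_k\|^2 - g_k^T g_{k-1}}{g_k^T d_{k-1}} \cdot g_k^T d_{k-1} = -g_k^T g_{k-1} \le -m \|g_k\|^2. \tag{2.9}$$

From the above, the conclusion (2.6) holds under any line search.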
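Because the proof uses no property of $d_{k-1}$ or of the stepsize, the descent bound can be checked numerically on arbitrary vectors. The two cases give the bounds in (2.8) and (2.9); the sketch below checks the weaker of the two, $g_k^T d_k \le -m\|g_k\|^2$, on random data. The helper names, the sampling scheme, and `m=0.5` are assumptions made for illustration.

```python
import numpy as np

def mprp_direction(g, g_prev, d_prev, m=0.5):
    """d_k = -g_k + beta_k^{MPRP} d_{k-1}, with beta from (2.1)."""
    gg, gg_prev = g @ g, g @ g_prev
    beta = 0.0
    if gg >= gg_prev >= m * gg:
        beta = (gg - gg_prev) / (max(0.0, g @ d_prev) + g_prev @ g_prev)
    return -g + beta * d_prev

def worst_descent_ratio(trials=2000, n=5, m=0.5, seed=0):
    """Largest observed value of g_k^T d_k / ||g_k||^2 over random triples."""
    rng = np.random.default_rng(seed)
    worst = -np.inf
    for _ in range(trials):
        g_prev = rng.normal(size=n)
        g = g_prev + 0.5 * rng.normal(size=n)   # correlated, so (2.1) is often active
        d_prev = rng.normal(size=n)             # arbitrary previous direction
        d = mprp_direction(g, g_prev, d_prev, m)
        worst = max(worst, (g @ d) / (g @ g))
    return worst
```

If the bound holds, `worst_descent_ratio()` never exceeds `-m`, whichever previous direction is supplied.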

3. Global Convergence of the Modified PRP Method

In order to prove the global convergence of the modified PRP method, we assume that the objective function $f(x)$ satisfies the following assumption.

Assumption H

(i) The level set $\Omega = \{x \in \mathbb{R}^n \mid f(x) \le f(x_1)\}$ is bounded, that is, there exists a positive constant $\xi > 0$ such that $\|x\| \le \xi$ for all $x \in \Omega$.

(ii) In a neighborhood $V$ of $\Omega$, $f$ is continuously differentiable and its gradient $g$ is Lipschitz continuous, namely, there exists a constant $L > 0$ such that

$$\|g(x) - g(y)\| \le L \|x - y\|, \quad \forall x, y \in V. \tag{3.1}$$


Under these assumptions on $f$, there exists a constant $\gamma > 0$ such that

$$\|g(x)\| \le \gamma, \quad \forall x \in \Omega. \tag{3.2}$$

The conclusion of the following lemma, often called the Zoutendijk condition, is used to prove the global convergence properties of nonlinear conjugate gradient methods. It was originally given by Zoutendijk [18].

Lemma 3.1. Suppose that Assumption H holds. Consider any iteration of the form (1.2)-(1.3), where $d_k$ satisfies $g_k^T d_k < 0$ for $k \in \mathbb{N}$ and $\alpha_k$ satisfies the Wolfe line search. Then

$$\sum_{k \ge 1} \frac{(g_k^T d_k)^2}{\|d_k\|^2} < \infty. \tag{3.3}$$

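Lemma 3.2. Suppose that Assumption H holds. Consider the method (1.2)-(1.3), where $\beta_k = \beta_k^{MPRP}$, and $\alpha_k$ satisfies the Wolfe line search and (2.6). If there exists a constant $r > 0$ such that

$$\|g_k\| \ge r, \quad \forall k \ge 1, \tag{3.4}$$

then one has

$$\sum_{k \ge 2} \|u_k - u_{k-1}\|^2 < \infty, \tag{3.5}$$

where $u_k = d_k / \|d_k\|$.

Proof. From (2.1) and (3.4), we get

$$g_k^T g_{k-1} \ne 0. \tag{3.6}$$

By (2.6) and (3.6), we know that $d_k \ne 0$ for each $k$.

Define the quantities

$$r_k = \frac{-g_k}{\|d_k\|}, \qquad \delta_k = \beta_k^{MPRP} \frac{\|d_{k-1}\|}{\|d_k\|}. \tag{3.7}$$

By (1.3), one has

$$u_k = \frac{d_k}{\|d_k\|} = \frac{-g_k + \beta_k^{MPRP} d_{k-1}}{\|d_k\|} = r_k + \delta_k u_{k-1}. \tag{3.8}$$

Since $u_k$ is a unit vector, we get

$$\|r_k\| = \|u_k - \delta_k u_{k-1}\| = \|\delta_k u_k - u_{k-1}\|. \tag{3.9}$$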

Figure 1: Performance profiles of the conjugate gradient methods with respect to CPU time. (Horizontal axis: X, from 0 to 10; vertical axis: Y, from 0 to 1; curves: MPRP method, VPRP method, CG-DESCENT method, DL+ method.)

Figure 2: Performance profiles with respect to the number of iterations. (Horizontal axis: X, from 0 to 10; vertical axis: Y, from 0 to 1; curves: MPRP method, VPRP method, CG-DESCENT method, DL+ method.)

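From $\delta_k \ge 0$ and the above equation, one has

$$\begin{aligned}
\|u_k - u_{k-1}\| &\le (1 + \delta_k) \|u_k - u_{k-1}\| = \|(1 + \delta_k) u_k - (1 + \delta_k) u_{k-1}\| \\
&\le \|u_k - \delta_k u_{k-1}\| + \|\delta_k u_k - u_{k-1}\| = 2 \|r_k\|.
\end{aligned} \tag{3.10}$$

By (2.1), (3.4), and (3.6), one has

$$1 \ge \frac{g_k^T g_{k-1}}{\|g_k\|^2} > m. \tag{3.11}$$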

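From (3.3), (2.6), (3.4), and (3.11), one has

$$m^2 \sum_{k \ge 1,\, d_k \ne 0} \|r_k\|^2 \le \sum_{k \ge 1,\, d_k \ne 0} \left( \|r_k\| \cdot \frac{g_k^T g_{k-1}}{\|g_k\|^2} \right)^2 = \sum_{k \ge 1,\, d_k \ne 0} \frac{(g_k^T g_{k-1})^2}{\|d_k\|^2} \le \sum_{k \ge 1,\, d_k \ne 0} \frac{(g_k^T d_k)^2}{\|d_k\|^2} < \infty, \tag{3.12}$$

so

$$\sum_{k \ge 1,\, d_k \ne 0} \|r_k\|^2 < \infty. \tag{3.13}$$

By (3.10) and the above inequality, one has

$$\sum_{k \ge 2} \|u_k - u_{k-1}\|^2 < \infty. \tag{3.14}$$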

Lemma 3.3. Suppose that Assumption H holds. If (3.4) holds, then $\beta_k^{MPRP}$ has property (∗), that is,

(1) there exists a constant $b > 1$ such that $|\beta_k^{MPRP}| \le b$,

(2) there exists a constant $\lambda > 0$ such that $\|x_k - x_{k-1}\| \le \lambda \Rightarrow |\beta_k^{MPRP}| \le 1/(2b)$.

Proof. From Assumption H(ii), we know that (3.2) holds. By (2.1), (3.2), and (3.4), one has

$$|\beta_k^{MPRP}| \le \frac{\|g_k\|^2 + \|g_{k-1}\| \cdot \|g_k\|}{\|g_{k-1}\|^2} \le \frac{2\gamma^2}{r^2} = b. \tag{3.15}$$

Figure 3: Performance profiles with respect to the number of function evaluations. (Horizontal axis: X, from 0 to 10; vertical axis: Y, from 0 to 1; curves: MPRP method, VPRP method, CG-DESCENT method, DL+ method.)

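Define $\lambda = r^2 / (2 L \gamma b)$. If $\|x_k - x_{k-1}\| \le \lambda$, then from (2.1), (3.1), (3.2), and (3.4), one has

$$|\beta_k^{MPRP}| \le \frac{\|g_k\|^2 - g_k^T g_{k-1}}{\|g_{k-1}\|^2} = \frac{g_k^T (g_k - g_{k-1})}{\|g_{k-1}\|^2} \le \frac{\|g_k\| \cdot \|g_k - g_{k-1}\|}{\|g_{k-1}\|^2} \le \frac{\gamma L \lambda}{r^2} = \frac{1}{2b}. \tag{3.16}$$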
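As a quick sanity check of the constants used in (3.15) and (3.16), take the purely illustrative values $\gamma = 2$, $r = 1$, and $L = 1$ (these numbers are assumptions chosen for arithmetic convenience and do not come from the experiments):

$$b = \frac{2\gamma^2}{r^2} = 8, \qquad \lambda = \frac{r^2}{2 L \gamma b} = \frac{1}{32}, \qquad \frac{\gamma L \lambda}{r^2} = \frac{2}{32} = \frac{1}{16} = \frac{1}{2b}.$$

With these values, the bound for small steps is exactly $1/(2b)$, as property (∗) requires, and $b > 1$ because $\gamma \ge r$.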

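Lemma 3.4 (see [19]). Suppose that Assumption H holds. Let $\{x_k\}$ and $\{d_k\}$ be generated by (1.2)-(1.3), in which $\alpha_k$ satisfies the Wolfe line search and (2.6). If $\beta_k \ge 0$ has property (∗) and (3.4) holds, then there exists $\lambda > 0$ such that, for any $\Delta \in \mathbb{Z}^+$ and $k_0 \in \mathbb{Z}^+$, there is an index $k \ge k_0$ for which

$$|R_{k,\Delta}^{\lambda}| > \frac{\Delta}{2}, \tag{3.17}$$

where $R_{k,\Delta}^{\lambda} = \{i \in \mathbb{Z}^+ : k \le i \le k + \Delta - 1,\ \|x_i - x_{i-1}\| \ge \lambda\}$, and $|R_{k,\Delta}^{\lambda}|$ denotes the number of elements of $R_{k,\Delta}^{\lambda}$.

Theorem 3.5. Suppose that Assumption H holds. Let $\{x_k\}$ and $\{d_k\}$ be generated by (1.2)-(1.3), in which $\alpha_k$ satisfies the Wolfe line search and (2.6), and $\beta_k = \beta_k^{MPRP}$. Then one has

$$\liminf_{k \to \infty} \|g_k\| = 0. \tag{3.18}$$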

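Table 1: The numerical results of the modified PRP method.

| Problem | Dim | NI | NF | NG | CPU |
|---|---|---|---|---|---|
| ROSE | 2 | 24 | 109 | 90 | 0.3651 |
| FROTH | 2 | 11 | 80 | 61 | 0.0594 |
| BADSCP | 2 | 26 | 227 | 210 | 0.2000 |
| BADSCB | 2 | 11 | 89 | 79 | 0.1085 |
| BEALE | 2 | 21 | 75 | 59 | 0.1449 |
| HELIX | 3 | 25 | 76 | 61 | 0.1754 |
| BARD | 3 | 20 | 73 | 61 | 0.1380 |
| GAUSS | 3 | 3 | 8 | 6 | 0.0164 |
| MEYER | 3 | 1 | 1 | 1 | 0.0063 |
| GULF | 3 | 1 | 2 | 2 | 0.0173 |
| BOX | 3 | 1 | 1 | 1 | 0.0574 |
| SING | 4 | 67 | 263 | 228 | 0.5000 |
| WOOD | 4 | 33 | 150 | 117 | 0.2421 |
| KOWOSB | 4 | 57 | 222 | 195 | 0.4000 |
| BD | 4 | 26 | 127 | 96 | 0.1995 |
| OSB1 | 5 | 1 | 1 | 1 | 0.0157 |
| BIGGS | 6 | 121 | 449 | 396 | 1.0000 |
| OSB2 | 11 | 341 | 900 | 811 | 1.3000 |
| JENSAM | 6 | 12 | 49 | 32 | 0.0900 |
| JENSAM | 7 | 13 | 56 | 35 | 0.1872 |
| JENSAM | 8 | 11 | 53 | 30 | 0.1678 |
| JENSAM | 9 | 12 | 65 | 38 | 0.1160 |
| JENSAM | 10 | 26 | 133 | 94 | 0.2604 |
| JENSAM | 11 | NaN | NaN | NaN | NaN |
| VARDIM | 3 | 4 | 40 | 26 | 0.0135 |
| VARDIM | 5 | 6 | 57 | 38 | 0.0296 |
| VARDIM | 6 | 5 | 65 | 43 | 0.0270 |
| VARDIM | 8 | 7 | 72 | 47 | 0.0327 |
| VARDIM | 9 | 7 | 78 | 50 | 0.0647 |
| VARDIM | 10 | 7 | 81 | 52 | 0.0646 |
| VARDIM | 12 | 7 | 90 | 58 | 0.0647 |
| VARDIM | 15 | 8 | 92 | 60 | 0.0948 |
| WATSON | 5 | 59 | 200 | 167 | 0.2000 |
| WATSON | 6 | 387 | 1281 | 1134 | 1.4000 |
| WATSON | 7 | 1768 | 5834 | 5191 | 6.0000 |
| WATSON | 8 | 3934 | 13373 | 11920 | 14.0000 |
| WATSON | 10 | 4319 | 15102 | 13451 | 17.0000 |
| WATSON | 12 | 1892 | 6762 | 6007 | 9.0000 |
| WATSON | 15 | 1527 | 5552 | 4933 | 7.0000 |
| WATSON | 20 | 3001 | 11308 | 10107 | 19.0000 |
| PEN2 | 5 | 111 | 439 | 393 | 0.4000 |
| PEN2 | 10 | 185 | 845 | 752 | 1.5000 |
| PEN2 | 15 | 154 | 774 | 679 | 0.5000 |
| PEN2 | 20 | 178 | 989 | 889 | 0.6000 |
| PEN2 | 30 | 123 | 610 | 534 | 0.4000 |
| PEN2 | 40 | 147 | 700 | 617 | 0.5000 |
| PEN2 | 50 | 152 | 744 | 651 | 1.2000 |
| PEN2 | 60 | 163 | 813 | 720 | 0.7000 |
| PEN1 | 5 | 30 | 151 | 125 | 0.2742 |
| PEN1 | 10 | 88 | 415 | 357 | 0.9000 |
| PEN1 | 20 | 32 | 155 | 124 | 0.3349 |
| PEN1 | 30 | 73 | 350 | 290 | 0.7000 |
| PEN1 | 50 | 72 | 346 | 285 | 0.3000 |
| PEN1 | 100 | 29 | 189 | 147 | 0.2458 |
| PEN1 | 200 | 28 | 198 | 152 | 0.4759 |
| PEN1 | 300 | 27 | 201 | 150 | 1.0464 |
| TRIG | 10 | 41 | 92 | 82 | 0.3817 |
| TRIG | 20 | 56 | 136 | 127 | 0.5634 |
| TRIG | 50 | 49 | 106 | 103 | 0.1949 |
| TRIG | 100 | 61 | 137 | 127 | 0.3857 |
| TRIG | 200 | 56 | 116 | 114 | 2.0205 |
| TRIG | 300 | 52 | 106 | 101 | 11.6394 |
| TRIG | 400 | 57 | 116 | 114 | 44.9734 |
| TRIG | 500 | 53 | 109 | 108 | 89.8125 |
| ROSEX | 100 | 26 | 123 | 103 | 0.2323 |
| ROSEX | 200 | 26 | 123 | 103 | 0.2583 |
| ROSEX | 300 | 26 | 123 | 103 | 0.3078 |
| ROSEX | 400 | 26 | 123 | 103 | 0.4697 |
| ROSEX | 500 | 26 | 123 | 103 | 0.6781 |
| ROSEX | 1000 | 26 | 123 | 103 | 2.4474 |
| ROSEX | 1500 | 26 | 123 | 103 | 5.3979 |
| ROSEX | 2000 | 26 | 123 | 103 | 9.9364 |
| SINGX | 100 | 78 | 320 | 283 | 0.8000 |
| SINGX | 200 | 79 | 335 | 293 | 0.8000 |
| SINGX | 300 | 73 | 308 | 269 | 0.8000 |
| SINGX | 400 | 89 | 367 | 324 | 1.6000 |
| SINGX | 500 | 91 | 374 | 330 | 2.2000 |
| SINGX | 1000 | 93 | 385 | 342 | 8.0000 |
| SINGX | 1500 | 82 | 347 | 306 | 15.8000 |
| SINGX | 2000 | 80 | 341 | 299 | 28.4000 |
| BV | 200 | 1813 | 4326 | 4063 | 9.0000 |
| BV | 300 | 636 | 1501 | 1418 | 5.4000 |
| BV | 400 | 226 | 516 | 487 | 2.7000 |
| BV | 500 | 188 | 420 | 398 | 3.2000 |
| BV | 600 | 86 | 190 | 184 | 1.9000 |
| BV | 1000 | 21 | 40 | 37 | 0.9963 |
| BV | 1500 | 11 | 20 | 19 | 1.0900 |
| BV | 2000 | 2 | 6 | 5 | 0.5456 |
| IE | 200 | 6 | 13 | 7 | 0.3063 |
| IE | 300 | 6 | 13 | 7 | 0.6698 |
| IE | 400 | 6 | 13 | 7 | 1.1916 |
| IE | 500 | 6 | 13 | 7 | 1.8511 |
| IE | 600 | 6 | 13 | 7 | 2.6615 |
| IE | 1000 | 6 | 13 | 7 | 7.3635 |
| IE | 1500 | 6 | 13 | 7 | 16.6397 |
| IE | 2000 | 6 | 13 | 7 | 29.4927 |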

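Table 1: Continued.

| Problem | Dim | NI | NF | NG | CPU |
|---|---|---|---|---|---|
| TRID | 200 | 35 | 81 | 74 | 0.3327 |
| TRID | 300 | 36 | 83 | 75 | 0.3587 |
| TRID | 400 | 37 | 83 | 75 | 0.3731 |
| TRID | 500 | 35 | 78 | 73 | 0.4935 |
| TRID | 600 | 36 | 80 | 76 | 0.6862 |
| TRID | 1000 | 35 | 79 | 75 | 1.7180 |
| TRID | 1500 | 36 | 84 | 79 | 4.0501 |
| TRID | 2000 | 37 | 85 | 79 | 7.5866 |

Table 2: The numerical results of the VPRP method.

| Problem | Dim | NI | NF | NG | CPU |
|---|---|---|---|---|---|
| ROSE | 2 | 24 | 111 | 85 | 0.1585 |
| FROTH | 2 | 12 | 78 | 59 | 0.0698 |
| BADSCP | 2 | 99 | 394 | 336 | 0.4000 |
| BADSCB | 2 | 13 | 30 | 19 | 0.0448 |
| BEALE | 2 | 12 | 48 | 35 | 0.0402 |
| HELIX | 3 | 74 | 203 | 175 | 0.5000 |
| BARD | 3 | 30 | 100 | 80 | 0.2027 |
| GAUSS | 3 | 3 | 7 | 4 | 0.0085 |
| MEYER | 3 | 1 | 1 | 1 | 0.0058 |
| GULF | 3 | 1 | 2 | 2 | 0.0052 |
| BOX | 3 | 1 | 1 | 1 | 0.0561 |
| SING | 4 | 101 | 341 | 289 | 0.7000 |
| WOOD | 4 | 174 | 482 | 417 | 0.6000 |
| KOWOSB | 4 | 71 | 234 | 203 | 0.3000 |
| BD | 4 | 42 | 161 | 125 | 0.2451 |
| OSB1 | 5 | 1 | 1 | 1 | 0.0063 |
| BIGGS | 6 | 113 | 375 | 330 | 0.8000 |
| OSB2 | 11 | 264 | 667 | 603 | 1.5000 |
| JENSAM | 6 | 9 | 33 | 17 | 0.0696 |
| JENSAM | 7 | 11 | 39 | 17 | 0.1137 |
| JENSAM | 8 | 10 | 42 | 19 | 0.0883 |
| JENSAM | 9 | 17 | 90 | 57 | 0.1850 |
| JENSAM | 10 | 17 | 124 | 84 | 0.1435 |
| JENSAM | 11 | 6 | 76 | 46 | 0.1111 |
| VARDIM | 3 | 4 | 40 | 26 | 0.0290 |
| VARDIM | 5 | 6 | 57 | 38 | 0.0203 |
| VARDIM | 6 | 5 | 65 | 43 | 0.0273 |
| VARDIM | 8 | 7 | 72 | 47 | 0.036 |
| VARDIM | 9 | 7 | 78 | 50 | 0.0715 |
| VARDIM | 10 | 7 | 81 | 52 | 0.0458 |
| VARDIM | 12 | 7 | 90 | 58 | 0.0672 |
| VARDIM | 15 | 8 | 92 | 60 | 0.0622 |
| WATSON | 5 | 193 | 535 | 473 | 1.4000 |
| WATSON | 6 | 342 | 1002 | 890 | 2.2000 |
| WATSON | 7 | 1451 | 4157 | 3678 | 5.0000 |
| WATSON | 8 | 6720 | 19530 | 17300 | 20.0000 |
| WATSON | 10 | NaN | NaN | NaN | NaN |
| WATSON | 12 | 3507 | 10432 | 9234 | 13.0000 |
| WATSON | 15 | 5271 | 15817 | 14006 | 24.0000 |
| WATSON | 20 | NaN | NaN | NaN | NaN |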

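Table 2: Continued.

| Problem | Dim | NI | NF | NG | CPU |
|---|---|---|---|---|---|
| PEN2 | 5 | 129 | 499 | 434 | 1.0000 |
| PEN2 | 10 | 90 | 379 | 328 | 0.3000 |
| PEN2 | 15 | 434 | 1467 | 1298 | 2.2000 |
| PEN2 | 20 | 941 | 2959 | 2612 | 3.0000 |
| PEN2 | 30 | 771 | 2531 | 2193 | 3.0000 |
| PEN2 | 40 | 248 | 978 | 860 | 1.6000 |
| PEN2 | 50 | 952 | 2788 | 2511 | 3.0000 |
| PEN2 | 60 | 927 | 2822 | 2435 | 4.0000 |
| PEN1 | 5 | 32 | 151 | 124 | 0.3752 |
| PEN1 | 10 | 90 | 383 | 324 | 0.8000 |
| PEN1 | 20 | 28 | 160 | 121 | 0.1119 |
| PEN1 | 30 | 76 | 334 | 279 | 0.3000 |
| PEN1 | 50 | 64 | 344 | 280 | 0.5000 |
| PEN1 | 100 | 23 | 160 | 122 | 0.2212 |
| PEN1 | 200 | 21 | 174 | 128 | 0.4081 |
| PEN1 | 300 | 28 | 192 | 143 | 1.0207 |
| TRIG | 10 | 36 | 82 | 71 | 0.4048 |
| TRIG | 20 | 56 | 125 | 114 | 0.6413 |
| TRIG | 50 | 45 | 93 | 85 | 0.3426 |
| TRIG | 100 | 58 | 120 | 113 | 0.4573 |
| TRIG | 200 | 64 | 135 | 128 | 2.0248 |
| TRIG | 300 | 52 | 102 | 99 | 12.4258 |
| TRIG | 400 | 60 | 132 | 125 | 52.5691 |
| TRIG | 500 | 59 | 127 | 116 | 101.6207 |
| ROSEX | 100 | 24 | 111 | 85 | 0.2798 |
| ROSEX | 200 | 24 | 111 | 85 | 0.2808 |
| ROSEX | 300 | 24 | 111 | 85 | 0.3136 |
| ROSEX | 400 | 24 | 111 | 85 | 0.4271 |
| ROSEX | 500 | 24 | 111 | 85 | 0.6181 |
| ROSEX | 1000 | 24 | 111 | 85 | 2.1821 |
| ROSEX | 1500 | 24 | 111 | 85 | 4.7644 |
| ROSEX | 2000 | 24 | 111 | 85 | 8.8878 |
| SINGX | 100 | 169 | 562 | 483 | 0.6000 |
| SINGX | 200 | 199 | 676 | 576 | 1.4000 |
| SINGX | 300 | 365 | 1181 | 1031 | 3.7000 |
| SINGX | 400 | 627 | 2025 | 1756 | 9.0000 |
| SINGX | 500 | 129 | 431 | 367 | 2.5000 |
| SINGX | 1000 | 229 | 788 | 675 | 16.9000 |
| SINGX | 1500 | 100 | 329 | 280 | 16.0000 |
| SINGX | 2000 | 128 | 428 | 363 | 36.0000 |
| BV | 200 | NaN | NaN | NaN | NaN |
| BV | 300 | 7278 | 12990 | 12989 | 55.0000 |
| BV | 400 | 3837 | 6707 | 6706 | 42.0000 |
| BV | 500 | 1842 | 3236 | 3235 | 26.0000 |
| BV | 600 | 898 | 1562 | 1561 | 17.6000 |
| BV | 1000 | 133 | 232 | 231 | 6.2000 |
| BV | 1500 | 19 | 36 | 35 | 2.0484 |
| BV | 2000 | 2 | 6 | 5 | 0.5156 |
| IE | 200 | 7 | 15 | 8 | 0.3596 |
| IE | 300 | 7 | 15 | 8 | 0.7986 |
| IE | 400 | 7 | 15 | 8 | 1.4202 |
| IE | 500 | 7 | 15 | 8 | 2.2147 |
| IE | 600 | 7 | 15 | 8 | 3.1811 |
| IE | 1000 | 7 | 15 | 8 | 8.8316 |
| IE | 1500 | 7 | 15 | 8 | 19.7838 |
| IE | 2000 | 7 | 15 | 8 | 34.7553 |
| TRID | 200 | 33 | 75 | 71 | 0.3758 |
| TRID | 300 | 35 | 79 | 75 | 0.3654 |
| TRID | 400 | 35 | 78 | 74 | 0.3912 |
| TRID | 500 | 35 | 78 | 74 | 0.5188 |
| TRID | 600 | 37 | 82 | 78 | 0.7388 |
| TRID | 1000 | 34 | 76 | 72 | 1.6832 |
| TRID | 1500 | 37 | 86 | 81 | 4.1950 |
| TRID | 2000 | 37 | 86 | 80 | 7.5255 |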

Figure 4: Performance profiles with respect to the number of gradient evaluations. (Horizontal axis: X, from 0 to 10; vertical axis: Y, from 0 to 1; curves: MPRP method, VPRP method, CG-DESCENT method, DL+ method.)

Proof. To obtain this result, we proceed by contradiction. Suppose that (3.18) does not hold, which means that there exists $r > 0$ such that

$$\|g_k\| \ge r, \quad \text{for } k \ge 1, \tag{3.19}$$

so we know that Lemmas 3.2 and 3.4 hold.

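Table 3: The numerical results of the CG-DESCENT method.

| Problem | Dim | NI | NF | NG | CPU |
|---|---|---|---|---|---|
| ROSE | 2 | 36 | 132 | 107 | 0.2371 |
| FROTH | 2 | 12 | 64 | 48 | 0.0462 |
| BADSCP | 2 | 40 | 213 | 189 | 0.2000 |
| BADSCB | 2 | 16 | 101 | 88 | 0.1212 |
| BEALE | 2 | 11 | 45 | 33 | 0.0405 |
| HELIX | 3 | 66 | 179 | 152 | 0.4397 |
| BARD | 3 | 47 | 143 | 122 | 0.2292 |
| GAUSS | 3 | 3 | 10 | 8 | 0.0093 |
| MEYER | 3 | 1 | 1 | 1 | 0.0085 |
| GULF | 3 | 1 | 2 | 2 | 0.0068 |
| BOX | 3 | 1 | 1 | 1 | 0.0600 |
| SING | 4 | 62 | 198 | 164 | 0.3000 |
| WOOD | 4 | 103 | 298 | 247 | 0.5000 |
| KOWOSB | 4 | 77 | 222 | 192 | 0.4000 |
| BD | 4 | 53 | 204 | 162 | 0.3000 |
| OSB1 | 5 | 1 | 1 | 1 | 0.0084 |
| BIGGS | 6 | 128 | 395 | 341 | 0.7000 |
| OSB2 | 11 | 379 | 915 | 827 | 1.2000 |
| JENSAM | 6 | NaN | NaN | NaN | NaN |
| JENSAM | 7 | 12 | 51 | 32 | 0.1114 |
| JENSAM | 8 | 11 | 50 | 26 | 0.0844 |
| JENSAM | 9 | NaN | NaN | NaN | NaN |
| JENSAM | 10 | 5 | 59 | 34 | 0.0421 |
| JENSAM | 11 | 21 | 148 | 105 | 0.1749 |
| VARDIM | 3 | 4 | 40 | 26 | 0.0246 |
| VARDIM | 5 | 6 | 57 | 38 | 0.0174 |
| VARDIM | 6 | 5 | 65 | 43 | 0.0266 |
| VARDIM | 8 | 7 | 72 | 47 | 0.0226 |
| VARDIM | 9 | 7 | 78 | 50 | 0.0323 |
| VARDIM | 10 | 7 | 81 | 52 | 0.0495 |
| VARDIM | 12 | 7 | 90 | 58 | 0.0610 |
| VARDIM | 15 | 8 | 92 | 60 | 0.0583 |
| WATSON | 5 | 135 | 391 | 336 | 0.5000 |
| WATSON | 6 | 421 | 1186 | 1043 | 1.4000 |
| WATSON | 7 | 1822 | 5278 | 4655 | 6.0000 |
| WATSON | 8 | 2607 | 7589 | 6716 | 9.0000 |
| WATSON | 10 | NaN | NaN | NaN | NaN |
| WATSON | 12 | 3370 | 10111 | 8930 | 12.0000 |
| WATSON | 15 | 5749 | 17442 | 15368 | 27.0000 |
| WATSON | 20 | 5902 | 18524 | 16282 | 36.0000 |
| PEN2 | 5 | 128 | 485 | 421 | 1.1000 |
| PEN2 | 10 | 147 | 571 | 486 | 0.4000 |
| PEN2 | 15 | 663 | 2262 | 1996 | 2.0000 |
| PEN2 | 20 | 734 | 2452 | 2181 | 3.0000 |
| PEN2 | 30 | 810 | 2438 | 2264 | 3.0000 |
| PEN2 | 40 | 1488 | 4502 | 3960 | 5.0000 |
| PEN2 | 50 | 744 | 2342 | 2056 | 2.0000 |
| PEN2 | 60 | 755 | 2457 | 2188 | 3.0000 |
| PEN1 | 5 | 39 | 174 | 141 | 0.3298 |
| PEN1 | 10 | 94 | 404 | 345 | 0.8000 |
| PEN1 | 20 | 35 | 162 | 127 | 0.1220 |
| PEN1 | 30 | 76 | 347 | 286 | 0.3000 |
| PEN1 | 50 | 81 | 375 | 313 | 0.4000 |
| PEN1 | 100 | 31 | 184 | 141 | 0.2434 |
| PEN1 | 200 | 25 | 171 | 126 | 0.4106 |
| PEN1 | 300 | 26 | 186 | 139 | 1.0160 |
| TRIG | 10 | 33 | 74 | 65 | 0.2781 |
| TRIG | 20 | 60 | 145 | 132 | 0.5098 |
| TRIG | 50 | 43 | 95 | 90 | 0.3620 |
| TRIG | 100 | 53 | 116 | 108 | 0.4474 |
| TRIG | 200 | 59 | 123 | 118 | 1.7555 |
| TRIG | 300 | 51 | 109 | 105 | 12.6411 |
| TRIG | 400 | 58 | 121 | 114 | 45.0506 |
| TRIG | 500 | 51 | 110 | 105 | 89.7073 |
| ROSEX | 100 | 36 | 124 | 99 | 0.1230 |
| ROSEX | 200 | 34 | 125 | 102 | 0.1539 |
| ROSEX | 300 | 35 | 133 | 107 | 0.3939 |
| ROSEX | 400 | 31 | 121 | 100 | 0.5789 |
| ROSEX | 500 | 31 | 129 | 106 | 0.8736 |
| ROSEX | 1000 | 37 | 142 | 116 | 3.5602 |
| ROSEX | 1500 | 34 | 132 | 106 | 7.4845 |
| ROSEX | 2000 | 32 | 128 | 103 | 12.9238 |
| SINGX | 100 | 77 | 227 | 187 | 0.7000 |
| SINGX | 200 | 54 | 172 | 141 | 0.2376 |
| SINGX | 300 | 99 | 301 | 248 | 1.0000 |
| SINGX | 400 | 79 | 245 | 207 | 1.3000 |
| SINGX | 500 | 69 | 215 | 180 | 1.6000 |
| SINGX | 1000 | 101 | 322 | 271 | 8.6000 |
| SINGX | 1500 | 66 | 210 | 174 | 12.4000 |
| SINGX | 2000 | 69 | 210 | 173 | 23.1000 |
| BV | 200 | NaN | NaN | NaN | NaN |
| BV | 300 | NaN | NaN | NaN | NaN |
| BV | 400 | 4509 | 8044 | 8043 | 63.0000 |
| BV | 500 | 1635 | 2926 | 2925 | 34.0000 |
| BV | 600 | 925 | 1605 | 1604 | 24.5000 |
| BV | 1000 | 247 | 418 | 417 | 16.9000 |
| BV | 1500 | 18 | 38 | 37 | 3.0564 |
| BV | 2000 | 2 | 6 | 5 | 0.6883 |
| IE | 200 | 7 | 15 | 8 | 0.3546 |
| IE | 300 | 7 | 15 | 8 | 0.7927 |
| IE | 400 | 7 | 15 | 8 | 1.4039 |
| IE | 500 | 7 | 15 | 8 | 2.2022 |
| IE | 600 | 7 | 15 | 8 | 3.1297 |
| IE | 1000 | 7 | 15 | 8 | 8.6892 |
| IE | 1500 | 7 | 15 | 8 | 19.5607 |
| IE | 2000 | 7 | 15 | 8 | 34.7423 |
| TRID | 200 | 31 | 70 | 58 | 0.2691 |
| TRID | 300 | 33 | 73 | 65 | 0.2857 |
| TRID | 400 | 32 | 72 | 61 | 0.4264 |
| TRID | 500 | 34 | 76 | 71 | 0.6853 |
| TRID | 600 | 35 | 78 | 74 | 0.9484 |
| TRID | 1000 | 36 | 81 | 77 | 2.6056 |
| TRID | 1500 | 36 | 84 | 79 | 5.8397 |
| TRID | 2000 | 35 | 80 | 73 | 9.9491 |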

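We also define $u_k = d_k / \|d_k\|$; then for all $l, k \in \mathbb{Z}^+$ with $l \ge k$, one has

$$x_l - x_{k-1} = \sum_{i=k}^{l} \|x_i - x_{i-1}\| \cdot u_{i-1} = \sum_{i=k}^{l} \|s_{i-1}\| \cdot u_{k-1} + \sum_{i=k}^{l} \|s_{i-1}\| (u_{i-1} - u_{k-1}), \tag{3.20}$$

where $s_{i-1} = x_i - x_{i-1}$, that is,

$$\sum_{i=k}^{l} \|s_{i-1}\| \cdot u_{k-1} = x_l - x_{k-1} - \sum_{i=k}^{l} \|s_{i-1}\| (u_{i-1} - u_{k-1}). \tag{3.21}$$

From Assumption H, we know that there exists a constant $\xi > 0$ such that

$$\|x\| \le \xi, \quad \text{for } x \in V. \tag{3.22}$$

From (3.21) and the above inequality, one has

$$\sum_{i=k}^{l} \|s_{i-1}\| \le 2\xi + \sum_{i=k}^{l} \|s_{i-1}\| \cdot \|u_{i-1} - u_{k-1}\|. \tag{3.23}$$

Let $\Delta$ be a positive integer with $\Delta \in [8\xi/\lambda, 8\xi/\lambda + 1)$, where $\lambda$ has been defined in Lemma 3.4. From Lemma 3.2, we know that there exists $k_0$ such that

$$\sum_{i \ge k_0} \|u_{i+1} - u_i\|^2 \le \frac{1}{4\Delta}. \tag{3.24}$$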

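Table 4: The numerical results of the DL method.

| Problem | Dim | NI | NF | NG | CPU |
|---|---|---|---|---|---|
| ROSE | 2 | 25 | 110 | 87 | 0.1192 |
| FROTH | 2 | 9 | 65 | 50 | 0.0283 |
| BADSCP | 2 | 19 | 146 | 133 | 0.1003 |
| BADSCB | 2 | 11 | 56 | 47 | 0.0434 |
| BEALE | 2 | 11 | 50 | 39 | 0.0355 |
| HELIX | 3 | 50 | 144 | 122 | 0.2616 |
| BARD | 3 | 20 | 76 | 64 | 0.0824 |
| GAUSS | 3 | 2 | 5 | 3 | 0.0079 |
| MEYER | 3 | 1 | 1 | 1 | 0.0087 |
| GULF | 3 | 1 | 2 | 2 | 0.0062 |
| BOX | 3 | 1 | 1 | 1 | 0.0601 |
| SING | 4 | 91 | 295 | 250 | 0.5000 |
| WOOD | 4 | 148 | 402 | 349 | 0.8000 |
| KOWOSB | 4 | 76 | 236 | 204 | 0.4000 |
| BD | 4 | 67 | 208 | 177 | 0.3000 |
| OSB1 | 5 | 1 | 1 | 1 | 0.0083 |
| BIGGS | 6 | 59 | 211 | 189 | 0.3000 |
| OSB2 | 11 | 279 | 699 | 614 | 1.4000 |
| JENSAM | 6 | 9 | 35 | 19 | 0.0570 |
| JENSAM | 7 | 9 | 35 | 17 | 0.1276 |
| JENSAM | 8 | NaN | NaN | NaN | NaN |
| JENSAM | 9 | 15 | 91 | 57 | 0.1148 |
| JENSAM | 10 | 17 | 136 | 96 | 0.2084 |
| JENSAM | 11 | 5 | 80 | 50 | 0.0882 |
| VARDIM | 3 | 4 | 40 | 26 | 0.0153 |
| VARDIM | 5 | 6 | 57 | 38 | 0.0192 |
| VARDIM | 6 | 5 | 65 | 43 | 0.0184 |
| VARDIM | 8 | 7 | 72 | 47 | 0.0219 |
| VARDIM | 9 | 7 | 78 | 50 | 0.0438 |
| VARDIM | 10 | 7 | 81 | 52 | 0.0242 |
| VARDIM | 12 | 7 | 90 | 58 | 0.0442 |
| VARDIM | 15 | 8 | 92 | 60 | 0.0535 |
| WATSON | 5 | 62 | 208 | 173 | 0.2000 |
| WATSON | 6 | 346 | 1055 | 927 | 1.8000 |
| WATSON | 7 | 1247 | 3443 | 3085 | 4.0000 |
| WATSON | 8 | 2034 | 6104 | 5408 | 7.0000 |
| WATSON | 10 | 5973 | 17601 | 15699 | 21.0000 |
| WATSON | 12 | 3200 | 9291 | 8259 | 12.0000 |
| WATSON | 15 | 1865 | 5402 | 4790 | 8.0000 |
| WATSON | 20 | 6420 | 18320 | 16360 | 30.0000 |
| PEN2 | 5 | 83 | 329 | 283 | 0.7000 |
| PEN2 | 10 | 128 | 519 | 449 | 1.0000 |
| PEN2 | 15 | 277 | 1040 | 922 | 0.9000 |
| PEN2 | 20 | 290 | 1113 | 981 | 0.9000 |
| PEN2 | 30 | 767 | 2231 | 2040 | 3.0000 |
| PEN2 | 40 | 675 | 1929 | 1706 | 3.0000 |
| PEN2 | 50 | 617 | 2223 | 1962 | 2.0000 |
| PEN2 | 60 | NaN | NaN | NaN | NaN |
| PEN1 | 5 | 33 | 165 | 139 | 0.2615 |
| PEN1 | 10 | 78 | 353 | 298 | 0.7000 |
| PEN1 | 20 | 24 | 119 | 94 | 0.0842 |
| PEN1 | 30 | 73 | 367 | 305 | 0.3000 |
| PEN1 | 50 | 66 | 356 | 293 | 0.6000 |
| PEN1 | 100 | 22 | 154 | 117 | 0.1957 |
| PEN1 | 200 | 27 | 178 | 136 | 0.4346 |
| PEN1 | 300 | 29 | 195 | 147 | 1.0408 |
| TRIG | 10 | 34 | 82 | 71 | 0.2910 |
| TRIG | 20 | 59 | 140 | 129 | 0.5161 |
| TRIG | 50 | 46 | 94 | 88 | 0.3865 |
| TRIG | 100 | 55 | 120 | 111 | 0.4625 |
| TRIG | 200 | 56 | 116 | 111 | 1.6476 |
| TRIG | 300 | 52 | 110 | 105 | 12.3062 |
| TRIG | 400 | 56 | 115 | 113 | 44.8831 |
| TRIG | 500 | 54 | 121 | 116 | 97.7043 |
| ROSEX | 100 | 25 | 110 | 87 | 0.0859 |
| ROSEX | 200 | 25 | 110 | 87 | 0.2019 |
| ROSEX | 300 | 25 | 110 | 87 | 0.2697 |
| ROSEX | 400 | 25 | 110 | 87 | 0.4169 |
| ROSEX | 500 | 25 | 110 | 87 | 0.5990 |
| ROSEX | 1000 | 25 | 110 | 87 | 2.2166 |
| ROSEX | 1500 | 25 | 110 | 87 | 4.8426 |
| ROSEX | 2000 | 25 | 110 | 87 | 9.1263 |
| SINGX | 100 | 113 | 384 | 329 | 1.0000 |
| SINGX | 200 | 117 | 397 | 341 | 0.5000 |
| SINGX | 300 | 215 | 735 | 634 | 2.1000 |
| SINGX | 400 | 113 | 384 | 329 | 1.6000 |
| SINGX | 500 | 110 | 367 | 313 | 2.2000 |
| SINGX | 1000 | 137 | 452 | 388 | 9.2000 |
| SINGX | 1500 | 152 | 494 | 420 | 22.1000 |
| SINGX | 2000 | 110 | 367 | 313 | 30.2000 |
| BV | 200 | NaN | NaN | NaN | NaN |
| BV | 300 | NaN | NaN | NaN | NaN |
| BV | 400 | 5624 | 8458 | 8457 | 49.0000 |
| BV | 500 | 3314 | 4854 | 4853 | 38.0000 |
| BV | 600 | 1618 | 2291 | 2290 | 25.0000 |
| BV | 1000 | 258 | 312 | 311 | 8.7000 |
| BV | 1500 | 18 | 30 | 29 | 1.7251 |
| BV | 2000 | 2 | 6 | 5 | 0.5129 |
| IE | 200 | 6 | 13 | 7 | 0.3075 |
| IE | 300 | 6 | 13 | 7 | 0.6731 |
| IE | 400 | 6 | 13 | 7 | 1.1939 |
| IE | 500 | 6 | 13 | 7 | 1.8608 |
| IE | 600 | 6 | 13 | 7 | 2.6785 |
| IE | 1000 | 6 | 13 | 7 | 7.3685 |
| IE | 1500 | 6 | 13 | 7 | 16.5794 |
| IE | 2000 | 6 | 13 | 7 | 29.5854 |
| TRID | 200 | 33 | 75 | 71 | 0.2606 |
| TRID | 300 | 35 | 79 | 75 | 0.2866 |
| TRID | 400 | 36 | 80 | 76 | 0.3653 |
| TRID | 500 | 35 | 78 | 73 | 0.4857 |
| TRID | 600 | 37 | 82 | 78 | 0.6950 |
| TRID | 1000 | 34 | 76 | 72 | 1.6550 |
| TRID | 1500 | 37 | 86 | 81 | 4.1380 |
| TRID | 2000 | 37 | 86 | 80 | 7.4702 |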

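From the Cauchy-Schwarz inequality and (3.24), for all $i \in [k, k + \Delta - 1]$, one has

$$\|u_{i-1} - u_{k-1}\| \le \sum_{j=k}^{i-1} \|u_j - u_{j-1}\| \le (i - k)^{1/2} \left( \sum_{j=k}^{i-1} \|u_j - u_{j-1}\|^2 \right)^{1/2} \le \Delta^{1/2} \cdot \left( \frac{1}{4\Delta} \right)^{1/2} = \frac{1}{2}. \tag{3.25}$$

By Lemma 3.4, we know that there exists $k \ge k_0$ such that

$$|R_{k,\Delta}^{\lambda}| > \frac{\Delta}{2}. \tag{3.26}$$

It follows from (3.23), (3.25), and (3.26) that

$$\frac{\lambda \Delta}{4} < \frac{\lambda}{2} |R_{k,\Delta}^{\lambda}| < \frac{1}{2} \sum_{i=k}^{k+\Delta-1} \|s_{i-1}\| \le 2\xi. \tag{3.27}$$

From (3.27), one has $\Delta < 8\xi/\lambda$, which contradicts the definition of $\Delta$. Hence,

$$\liminf_{k \to \infty} \|g_k\| = 0, \tag{3.28}$$

which completes the proof.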

4. Numerical Results

In this section, we compare the modified PRP conjugate gradient method, denoted the MPRP method, with the VPRP method, the CG-DESCENT method, and the DL method under the strong Wolfe line search on the test problems of [20] with the given initial points and dimensions. The parameters are chosen as follows: $\delta = 0.01$, $\sigma = 0.1$, $\nu = 1.25$, $\eta = 0.01$, and $t = 0.1$. The program is stopped if $\|g_k\| \le 10^{-6}$ is satisfied, or if the number of iterations exceeds ten thousand. All codes were written in Matlab 7.0 and run on a PC with a 2.0 GHz CPU, 512 MB of memory, and the Windows XP operating system.

The numerical results of our tests with respect to the MPRP method, VPRP method, CG-DESCENT method, and DL method are reported in Tables 1, 2, 3, and 4, respectively. In the tables, the column "Problem" gives the problem's name in [20], and "CPU," "NI," "NF," and "NG" denote the CPU time in seconds and the numbers of iterations, function evaluations, and gradient evaluations, respectively. "Dim" denotes the dimension of the tested problem. If the iteration limit was exceeded, the run was stopped, and this is indicated by NaN.

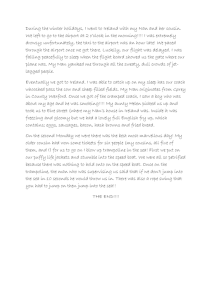

In this paper, we adopt the performance profiles of Dolan and Moré [21] to compare the MPRP method with the VPRP method, the CG-DESCENT method, and the DL method in terms of CPU time and the numbers of iterations, function evaluations, and gradient evaluations, respectively (see Figures 1, 2, 3, and 4). In the figures,

$$X = \tau \longrightarrow \frac{1}{n_p} \operatorname{size}\{p \in P : \log_2(r_{p,s}) \le \tau\}, \qquad Y = P\{r_{p,s} \le \tau : 1 \le s \le n_s\}. \tag{4.1}$$

Figures 1–4 show the performance of the four methods relative to CPU time, the number of iterations, the number of function evaluations, and the number of gradient evaluations, respectively. For example, the performance profile with respect to CPU time means that, for each method, we plot the fraction $P$ of problems for which the method is within a factor $\tau$ of the best time. The left side of the figure gives the percentage of the test problems for which a method is the fastest; the right side gives the percentage of the test problems that are successfully solved by each of the methods. The top curve corresponds to the method that solved the most problems in a time that was within a factor $\tau$ of the best time.
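The profile described above can be computed directly from a table of costs. The following is a minimal sketch, assuming a cost array with one row per problem and one column per method, `np.nan` marking a failed run (as NaN does in the tables), and the $\log_2$ scaling that appears in (4.1); the function name `performance_profile` is hypothetical.

```python
import numpy as np

def performance_profile(costs, taus):
    """Fraction of problems each solver handles within 2**tau of the best cost.

    costs: array of shape (n_problems, n_solvers); np.nan marks a failure.
    taus:  iterable of thresholds on log2 of the performance ratio.
    Returns an array of shape (len(taus), n_solvers).
    """
    costs = np.asarray(costs, dtype=float)
    best = np.nanmin(costs, axis=1, keepdims=True)      # best cost per problem
    ratios = costs / best                                # performance ratios r_{p,s}
    ratios = np.where(np.isnan(ratios), np.inf, ratios)  # failures never count as solved
    log_ratios = np.log2(ratios)
    return np.array([(log_ratios <= tau).mean(axis=0) for tau in taus])
```

The row for `tau = 0` gives the fraction of problems on which each method is the best, and the rows for large `tau` approach the fraction of problems each method solves at all.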

Figure 1 shows that the MPRP method outperforms the VPRP method, the CG-DESCENT method, and the DL method for the given test problems in terms of CPU time. Figures 2–4 show that the MPRP method also has the best performance with respect to the number of iterations and the numbers of function and gradient evaluations, since it corresponds to the top curve. So, the MPRP method is computationally efficient.

5. Conclusions

We have proposed a modified PRP method on the basis of the PRP method, which generates sufficient descent directions with an inexact line search. Moreover, we proved that the proposed method converges globally for general nonconvex functions. The performance profiles showed that the proposed method is also very efficient.

Acknowledgments

The authors wish to express their heartfelt thanks to the referees and Professor Piermarco Cannarsa for their detailed and helpful suggestions for revising the paper. This work was supported by the Nature Science Foundation of Chongqing Education Committee (KJ091104) and Chongqing Three Gorges University (09ZZ-060).


References

1 E. Polak and G. Ribire, “Note sur la xonvergence de directions conjugees,” Rev Francaise Informat

Recherche Operatinelle 3e Annee, vol. 16, pp. 35–43, 1969.

2 B. T. Polak, “The conjugate gradient method in extreme problems,” USSR Computational Mathematics

and Mathematical Physics, vol. 9, pp. 94–112, 1969.

3 M. Al-Baali, “Descent property and global convergence of the Fletcher-Reeves method with inexact

line search,” IMA Journal of Numerical Analysis, vol. 5, no. 1, pp. 121–124, 1985.

4 W. W. Hager and H. Zhang, “A new conjugate gradient method with guaranteed descent and an

efficient line search,” SIAM Journal on Optimization, vol. 16, no. 1, pp. 170–192, 2005.

5 Y. H. Dai and L. Z. Liao, “New conjugacy conditions and related nonlinear conjugate gradient

methods,” Applied Mathematics and Optimization, vol. 43, no. 1, pp. 87–101, 2001.

6 W. W. Hager and H. Zhang, “A survey of nonlinear conjugate gradient methods,” Pacific Journal of

Optimization, vol. 2, no. 1, pp. 35–58, 2006.

7 M. J. D. Powell, “Nonconvex minimization calculations and the conjugate gradient method,” in

Numerical Analysis (Dundee, 1983), vol. 1066 of Lecture Notes in Mathematics, pp. 122–141, Springer,

Berlin, Germany, 1984.

8 M. J. D. Powell, “Convergence properties of algorithms for nonlinear optimization,” SIAM Review,

vol. 28, no. 4, pp. 487–500, 1986.

9 J. C. Gilbert and J. Nocedal, “Global convergence properties of conjugate gradient methods for

optimization,” SIAM Journal on Optimization, vol. 2, no. 1, pp. 21–42, 1992.

10 L. Zhang, W. Zhou, and D. Li, “A descent modified Polak-Ribière-Polyak conjugate gradient method

and its global convergence,” IMA Journal of Numerical Analysis, vol. 26, no. 4, pp. 629–640, 2006.

11 Z. Wei, G. Y. Li, and L. Qi, “Global convergence of the Polak-Ribière-Polyak conjugate gradient

method with an Armijo-type inexact line search for nonconvex unconstrained optimization

problems,” Mathematics of Computation, vol. 77, no. 264, pp. 2173–2193, 2008.

12 L. Grippo and S. Lucidi, “A globally convergent version of the Polak-Ribière conjugate gradient

method,” Mathematical Programming, vol. 78, no. 3, pp. 375–391, 1997.

13 G. Yu, Y. Zhao, and Z. Wei, “A descent nonlinear conjugate gradient method for large-scale

unconstrained optimization,” Applied Mathematics and Computation, vol. 187, no. 2, pp. 636–643, 2007.

14 J. Zhang, Y. Xiao, and Z. Wei, “Nonlinear conjugate gradient methods with sufficient descent

condition for large-scale unconstrained optimization,” Mathematical Problems in Engineering, vol. 2009,

Article ID 243290, 16 pages, 2009.

15 Y. Xiao, Q. Wang, and D. Wang, “Notes on the Dai-Yuan-Yuan modified spectral gradient method,”

Journal of Computational and Applied Mathematics, vol. 234, no. 10, pp. 2986–2992, 2010.

16 Z. Wan, Z. Yang, and Y. Wang, “New spectral PRP conjugate gradient method for unconstrained

optimization,” Applied Mathematics Letters, vol. 24, no. 1, pp. 16–22, 2011.

17 L. Zhang, W. Zhou, and D. Li, “Global convergence of a modified Fletcher-Reeves conjugate gradient

method with Armijo-type line search,” Numerische Mathematik, vol. 104, no. 4, pp. 561–572, 2006.

18 G. Zoutendijk, “Nonlinear programming, computational methods,” in Integer and Nonlinear

Programming, J. Abadie, Ed., pp. 37–86, North-Holland, Amsterdam, Netherlands, 1970.

19 Y. H. Dai and Y. Yuan, Nonlinear Conjugate Gradient Method, vol. 10, Shanghai Scientific & Technical,

Shanghai, China, 2000.

20 J. J. Moré, B. S. Garbow, and K. E. Hillstrom, “Testing unconstrained optimization software,”

Association for Computing Machinery Transactions on Mathematical Software, vol. 7, no. 1, pp. 17–41, 1981.

21 E. D. Dolan and J. J. Moré, “Benchmarking optimization software with performance profiles,”

Mathematical Programming, vol. 91, no. 2, pp. 201–213, 2002.
