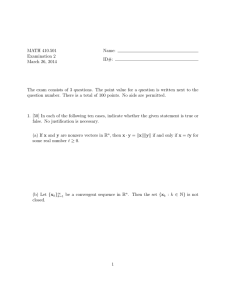

A Hybrid Extragradient Method for Bilevel Pseudomonotone Variational Inequalities with Multiple Solutions

Lu-Chuan Ceng¹, Yeong-Cheng Liou² and Ching-Feng Wen³

¹ Department of Mathematics, Shanghai Normal University, Shanghai 200234, China. This research was partially supported by the Innovation Program of Shanghai Municipal Education Commission (15ZZ068), Ph.D. Program Foundation of Ministry of Education of China (20123127110002), and Program for Outstanding Academic Leaders in Shanghai City (15XD1503100). Email: zenglc@hotmail.com

² Department of Information Management, Cheng Shiu University, Kaohsiung 833, Taiwan; and Research Center of Nonlinear Analysis and Optimization and Center for Fundamental Science, Kaohsiung Medical University, Kaohsiung 807, Taiwan (simplex liou@hotmail.com). This research was supported in part by MOST 103-2923-E-037-001-MY3, MOST 104-2221-E-230-004 and Kaohsiung Medical University ‘Aim for the Top Universities Grant, grant No. KMU-TP103F00’. Email: simplexliou@hotmail.com

³ Corresponding author. Center for Fundamental Science, and Center for Nonlinear Analysis and Optimization, Kaohsiung Medical University, Kaohsiung 80708, Taiwan. Email: cfwen@kmu.edu.tw

Abstract. In this paper, we introduce and analyze a hybrid extragradient algorithm for

solving bilevel pseudomonotone variational inequalities with multiple solutions in a real Hilbert

space. The proposed algorithm is based on Korpelevich’s extragradient method, Mann’s iteration method, hybrid steepest-descent method and viscosity approximation method (including

Halpern’s iteration method). Under mild conditions, the strong convergence of the iteration

sequences generated by the algorithm is derived.

Keywords: Bilevel variational inequality; Hybrid extragradient algorithm; Pseudomonotonicity; Lipschitz continuity; Global convergence.

AMS subject classifications. 49J30; 47H09; 47J20; 49M05


1. Introduction

Let $H$ be a real Hilbert space with inner product $\langle\cdot,\cdot\rangle$ and norm $\|\cdot\|$, let $C$ be a nonempty closed convex subset of $H$, and let $P_C$ be the metric projection of $H$ onto $C$. If $\{x_n\}$ is a sequence in $H$, then we denote by $x_n \to x$ (respectively, $x_n \rightharpoonup x$) the strong (respectively, weak) convergence of the sequence $\{x_n\}$ to $x$. Let $S : C \to H$ be a nonlinear mapping on $C$. We denote by $\mathrm{Fix}(S)$ the set of fixed points of $S$ and by $\mathbf{R}$ the set of all real numbers. A mapping $S : C \to H$ is called $L$-Lipschitz continuous if there exists a constant $L \ge 0$ such that

$$\|Sx - Sy\| \le L\|x - y\|, \quad \forall x, y \in C.$$

In particular, if $L = 1$ then $S$ is called a nonexpansive mapping; if $L \in [0, 1)$ then $S$ is called a contraction.

Let $A : C \to H$ be a nonlinear mapping on $C$. The classical variational inequality problem (VIP) is to find $x \in C$ such that

$$\langle Ax, y - x\rangle \ge 0, \quad \forall y \in C. \tag{1.1}$$

The solution set of VIP (1.1) is denoted by $\mathrm{VI}(C, A)$.

The VIP (1.1) was first discussed by Lions [13]. There are many applications of VIP (1.1) in various fields; see, e.g., [6,7,9,30]. In 1976, Korpelevich [2] proposed an iterative algorithm for solving the VIP (1.1) in Euclidean space $\mathbf{R}^n$:

$$\begin{cases} y_k = P_C(x_k - \tau A x_k),\\ x_{k+1} = P_C(x_k - \tau A y_k), \end{cases} \quad \forall k \ge 0,$$

with $\tau > 0$ a given number, which is known as the extragradient method. The literature on the VIP is vast, and Korpelevich's extragradient method has received great attention from many authors, who have improved it in various ways; see, e.g., [10-12,14-16,18,24-25,33-35,37-38,40] and references therein.
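As a rough illustration of the two-projection structure of the extragradient method, the following Python sketch runs the iteration on a toy problem. The box $C = [-1,1]^2$, the rotation operator `A`, the step size `tau = 0.5` and the helper names are assumptions chosen for this example only; they do not come from the paper. The operator is monotone and 1-Lipschitz, and the unique solution of the corresponding VIP is the origin.

```python
import math

def project_box(x, lo=-1.0, hi=1.0):
    """Metric projection P_C onto the box C = [lo, hi]^n (componentwise clipping)."""
    return [min(max(xi, lo), hi) for xi in x]

def A(x):
    """Illustrative monotone, 1-Lipschitz operator on R^2 (a rotation); not from the paper."""
    return [x[1], -x[0]]

def extragradient(x0, tau, steps):
    """Iterate y_k = P_C(x_k - tau*A x_k), x_{k+1} = P_C(x_k - tau*A y_k)."""
    x = list(x0)
    for _ in range(steps):
        y = project_box([xi - tau * ai for xi, ai in zip(x, A(x))])
        x = project_box([xi - tau * ai for xi, ai in zip(x, A(y))])
    return x

x_final = extragradient([1.0, 0.5], tau=0.5, steps=200)
residual = math.hypot(*x_final)  # distance to the solution x* = 0 of this toy VIP
print(x_final, residual)
```

For this particular operator the second projection step is what produces the contraction: away from the boundary, one extragradient step multiplies the norm by $\sqrt{1 - \tau^2 + \tau^4} < 1$, whereas a single projected step $x - \tau Ax$ would multiply it by $\sqrt{1 + \tau^2} > 1$.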

Let $A : C \to H$ and $B : H \to H$ be two mappings. Consider the following bilevel variational inequality problem (BVIP):

Problem AKM. Find $x^* \in \mathrm{VI}(C, B)$ such that

$$\langle Ax^*, x - x^*\rangle \ge 0, \quad \forall x \in \mathrm{VI}(C, B), \tag{1.2}$$

where $\mathrm{VI}(C, B)$ denotes the set of solutions of the VIP: Find $y^* \in C$ such that

$$\langle By^*, y - y^*\rangle \ge 0, \quad \forall y \in C. \tag{1.3}$$

In particular, whenever $H = \mathbf{R}^n$, the BVIP was recently studied by Anh, Kim and Muu [40].

Bilevel variational inequalities form a special class of quasivariational inequalities (see [1,3,4,39]) and of equilibrium problems with equilibrium constraints considered in [17,29]. Nevertheless, they cover some classes of mathematical programs with equilibrium constraints (see [29]), bilevel minimization problems (see [36]), variational inequalities (see [20,22,26,28]) and complementarity problems.

In what follows, suppose that $A$ and $B$ satisfy the following conditions:

(C1) $B$ is pseudomonotone on $H$ and $A$ is $\beta$-strongly monotone on $C$;

(C2) $A$ is $L_1$-Lipschitz continuous on $C$;

(C3) $B$ is $L_2$-Lipschitz continuous on $H$;

(C4) $\mathrm{VI}(C, B) \ne \emptyset$.

It is worth noting that under conditions (C1)-(C4), Problem AKM has a unique solution because $A$ is $\beta$-strongly monotone and $L_1$-Lipschitz continuous on $C$. In 2012, Anh, Kim and Muu [40] introduced the following extragradient iterative algorithm for solving the above bilevel variational inequality.

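Algorithm AKM (see [40, Algorithm 2.1]). Initialization. Choose $u \in \mathbf{R}^n$, $x_0 \in C$, $k = 0$, $0 < \lambda \le \frac{2\beta}{L_1^2}$, positive sequences $\{\delta_k\}$, $\{\lambda_k\}$, $\{\alpha_k\}$, $\{\beta_k\}$, $\{\gamma_k\}$ and $\{\bar\epsilon_k\}$ such that

$$\lim_{k\to\infty}\delta_k = 0, \quad \sum_{k=0}^{\infty}\bar\epsilon_k < \infty, \quad \alpha_k + \beta_k + \gamma_k = 1 \ \ \forall k \ge 0, \quad \sum_{k=0}^{\infty}\alpha_k = \infty,$$

$$\lim_{k\to\infty}\alpha_k = 0, \quad \lim_{k\to\infty}\beta_k = \xi \in (0, \tfrac{1}{2}], \quad \lim_{k\to\infty}\lambda_k = 0, \quad \lambda_k \le \frac{1}{L_2} \ \ \forall k \ge 0.$$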

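Step 1. Compute

$$\begin{cases} y_k := P_C(x_k - \lambda_k B x_k),\\ z_k := P_C(x_k - \lambda_k B y_k). \end{cases}$$

Step 2. Inner loop $j = 0, 1, \ldots$. Compute

$$\begin{cases} x_{k,0} := z_k - \lambda A z_k,\\ y_{k,j} := P_C(x_{k,j} - \delta_j B x_{k,j}),\\ x_{k,j+1} := \alpha_j x_{k,0} + \beta_j x_{k,j} + \gamma_j P_C(x_{k,j} - \delta_j B y_{k,j}). \end{cases}$$

If $\|x_{k,j+1} - P_{\mathrm{VI}(C,B)} x_{k,0}\| \le \bar\epsilon_k$ then set $h_k := x_{k,j+1}$ and go to Step 3. Otherwise, increase $j$ by 1 and repeat the inner loop Step 2.

Step 3. Set $x_{k+1} := \alpha_k u + \beta_k x_k + \gamma_k h_k$. Then increase $k$ by 1 and go to Step 1.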

Theorem AKM (see [40, Theorem 3.1]). Suppose that the assumptions (C1)-(C4) hold. Then the two sequences $\{x_k\}$ and $\{z_k\}$ in Algorithm AKM converge to the same point $x^*$, which is a solution of the BVIP.

It is well known that an important approach to the BVIP is the Tikhonov regularization method. The main idea of this method for monotone variational inequalities is to add a strongly monotone operator depending on a parameter to the cost operator, which yields a parameterized strongly monotone variational inequality with a unique solution. By letting the parameter tend to a suitable limit, the sequence of solutions of the regularized problems tends to the solution of the original problem. This result allows the Tikhonov regularization method to be used to solve bilevel monotone variational inequalities. Recently, in [5,21,27] the Tikhonov method with generalized regularization operators and bifunctions was extended to pseudomonotone variational inequalities and equilibrium problems, respectively. However, in this case the regularized subproblems may fail to be strongly monotone, or even pseudomonotone, since the sum of a strongly monotone operator and a pseudomonotone operator is, in general, not pseudomonotone. In our opinion, the existing methods that require some monotonicity properties cannot be applied to solve the regularized subvariational inequalities. Therefore the above Theorem AKM shows that Algorithm AKM (i.e., an extragradient-type algorithm) is an efficient approach for directly solving bilevel pseudomonotone variational inequalities.

Motivated and inspired by the above facts, we introduce and analyze a hybrid extragradient algorithm for solving bilevel pseudomonotone variational inequalities with multiple solutions in a real Hilbert space. The proposed algorithm is based on Korpelevich's extragradient method (see [2]), Mann's iteration method, the hybrid steepest-descent method (see [19,31]) and the viscosity approximation method (see [15,41]) (including Halpern's iteration method). Under some mild conditions, the strong convergence of the iteration sequences generated by the proposed algorithm is derived. Our results improve and extend the corresponding results announced by some others, e.g., Anh, Kim and Muu [40, Theorem 3.1].

2. Preliminaries

Throughout this paper, we assume that $H$ is a real Hilbert space whose inner product and norm are denoted by $\langle\cdot,\cdot\rangle$ and $\|\cdot\|$, respectively. Let $C$ be a nonempty closed convex subset of $H$. We write $x_n \rightharpoonup x$ to indicate that the sequence $\{x_n\}$ converges weakly to $x$ and $x_n \to x$ to indicate that the sequence $\{x_n\}$ converges strongly to $x$. Moreover, we use $\omega_w(x_n)$ to denote the weak $\omega$-limit set of the sequence $\{x_n\}$, i.e.,

$$\omega_w(x_n) := \{x \in H : x_{n_i} \rightharpoonup x \text{ for some subsequence } \{x_{n_i}\} \text{ of } \{x_n\}\}.$$

Recall that a mapping $A : C \to H$ is called

(i) monotone if

$$\langle Ax - Ay, x - y\rangle \ge 0, \quad \forall x, y \in C;$$

(ii) $\eta$-strongly monotone if there exists a constant $\eta > 0$ such that

$$\langle Ax - Ay, x - y\rangle \ge \eta\|x - y\|^2, \quad \forall x, y \in C;$$

(iii) $\alpha$-inverse-strongly monotone if there exists a constant $\alpha > 0$ such that

$$\langle Ax - Ay, x - y\rangle \ge \alpha\|Ax - Ay\|^2, \quad \forall x, y \in C.$$

It is obvious that if $A$ is $\alpha$-inverse-strongly monotone, then $A$ is monotone and $\frac{1}{\alpha}$-Lipschitz continuous.

The metric (or nearest point) projection from $H$ onto $C$ is the mapping $P_C : H \to C$ which assigns to each point $x \in H$ the unique point $P_C x \in C$ satisfying the property

$$\|x - P_C x\| = \inf_{y \in C}\|x - y\| =: d(x, C).$$

Some important properties of projections are gathered in the following proposition.

Proposition 2.1. For given $x \in H$ and $z \in C$:

(i) $z = P_C x \Leftrightarrow \langle x - z, y - z\rangle \le 0$, $\forall y \in C$;

(ii) $z = P_C x \Leftrightarrow \|x - z\|^2 \le \|x - y\|^2 - \|y - z\|^2$, $\forall y \in C$;

(iii) $\langle P_C x - P_C y, x - y\rangle \ge \|P_C x - P_C y\|^2$, $\forall y \in H$.

Consequently, $P_C$ is nonexpansive and monotone.

If $A$ is an $\alpha$-inverse-strongly monotone mapping of $C$ into $H$, then it is obvious that $A$ is $\frac{1}{\alpha}$-Lipschitz continuous. We also have that, for all $u, v \in C$ and $\lambda > 0$,

$$\|(I - \lambda A)u - (I - \lambda A)v\|^2 \le \|u - v\|^2 + \lambda(\lambda - 2\alpha)\|Au - Av\|^2. \tag{2.1}$$

So, if $\lambda \le 2\alpha$, then $I - \lambda A$ is a nonexpansive mapping from $C$ to $H$.
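The bound on $\|(I - \lambda A)u - (I - \lambda A)v\|^2$ stated above can be checked numerically on a simple case. The sketch below assumes a hypothetical diagonal linear operator `A(x) = D*x` with nonnegative entries, which is inverse-strongly monotone with constant `ALPHA = 1/max(D)`; the operator, the sampling ranges and the helper names are illustrative choices, not part of the paper.

```python
import random

D = [0.5, 2.0, 1.0]       # hypothetical example: A(x) = D*x componentwise
ALPHA = 1.0 / max(D)      # A is ALPHA-inverse-strongly monotone

def A(x):
    return [d * xi for d, xi in zip(D, x)]

def sq_norm(x):
    return sum(xi * xi for xi in x)

def gap(u, v, lam):
    """Right-hand side minus left-hand side of the bound; nonnegative when it holds."""
    Au, Av = A(u), A(v)
    lhs = sq_norm([(ui - lam * a) - (vi - lam * b)
                   for ui, vi, a, b in zip(u, v, Au, Av)])
    rhs = (sq_norm([ui - vi for ui, vi in zip(u, v)])
           + lam * (lam - 2.0 * ALPHA) * sq_norm([a - b for a, b in zip(Au, Av)]))
    return rhs - lhs

random.seed(0)
gaps = []
for _ in range(1000):
    u = [random.uniform(-5.0, 5.0) for _ in D]
    v = [random.uniform(-5.0, 5.0) for _ in D]
    lam = random.uniform(0.0, 3.0)
    gaps.append(gap(u, v, lam))
print(min(gaps))  # expected to be nonnegative up to rounding error
```

Note that the bound itself holds for every $\lambda > 0$; the restriction $\lambda \le 2\alpha$ is only needed to make the extra term nonpositive, which gives nonexpansiveness.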

Definition 2.1. A mapping $T : H \to H$ is said to be:

(a) nonexpansive if

$$\|Tx - Ty\| \le \|x - y\|, \quad \forall x, y \in H;$$

(b) firmly nonexpansive if $2T - I$ is nonexpansive, or equivalently, if $T$ is 1-inverse strongly monotone (1-ism),

$$\langle x - y, Tx - Ty\rangle \ge \|Tx - Ty\|^2, \quad \forall x, y \in H;$$

alternatively, $T$ is firmly nonexpansive if and only if $T$ can be expressed as

$$T = \frac{1}{2}(I + S),$$

where $S : H \to H$ is nonexpansive; projections are firmly nonexpansive.

It can be easily seen that if $T$ is nonexpansive, then $I - T$ is monotone. It is also easy to see that a projection $P_C$ is 1-ism. Inverse strongly monotone (also referred to as co-coercive) operators have been applied widely in solving practical problems in various fields.

We need some facts and tools in a real Hilbert space H which are listed as lemmas below.

Lemma 2.1. Let $X$ be a real inner product space. Then the following inequality holds:

$$\|x + y\|^2 \le \|x\|^2 + 2\langle y, x + y\rangle, \quad \forall x, y \in X.$$

It is not hard to prove the following lemma which will be used in the sequel. Here we omit

its proof.

Lemma 2.2. Let $F : H \to H$ be a $\kappa$-Lipschitzian and $\eta$-strongly monotone operator with positive constants $\kappa, \eta > 0$ and $V : H \to H$ be an $l$-Lipschitzian mapping. If $\mu\eta - \gamma l > 0$ for $\mu, \gamma \ge 0$, then $\mu F - \gamma V$ is $(\mu\eta - \gamma l)$-strongly monotone, that is,

$$\langle(\mu F - \gamma V)x - (\mu F - \gamma V)y, x - y\rangle \ge (\mu\eta - \gamma l)\|x - y\|^2, \quad \forall x, y \in H.$$
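For readers who want the omitted argument spelled out, one possible short derivation (a sketch, not necessarily the authors' own proof) uses only the strong monotonicity of $F$, the Cauchy-Schwarz inequality, the Lipschitz continuity of $V$ and $\mu, \gamma \ge 0$:

```latex
\begin{aligned}
\langle(\mu F - \gamma V)x - (\mu F - \gamma V)y,\, x - y\rangle
  &= \mu\langle Fx - Fy,\, x - y\rangle - \gamma\langle Vx - Vy,\, x - y\rangle \\
  &\ge \mu\eta\|x - y\|^2 - \gamma\|Vx - Vy\|\,\|x - y\| \\
  &\ge \mu\eta\|x - y\|^2 - \gamma l\|x - y\|^2 \\
  &= (\mu\eta - \gamma l)\|x - y\|^2 .
\end{aligned}
```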

Lemma 2.3. Let $H$ be a real Hilbert space. Then the following hold:

(a) $\|x - y\|^2 = \|x\|^2 - \|y\|^2 - 2\langle x - y, y\rangle$ for all $x, y \in H$;

(b) $\|\lambda x + \mu y\|^2 = \lambda\|x\|^2 + \mu\|y\|^2 - \lambda\mu\|x - y\|^2$ for all $x, y \in H$ and $\lambda, \mu \in [0, 1]$ with $\lambda + \mu = 1$;

(c) If $\{x_k\}$ is a sequence in $H$ such that $x_k \rightharpoonup x$, it follows that

$$\limsup_{k\to\infty}\|x_k - y\|^2 = \limsup_{k\to\infty}\|x_k - x\|^2 + \|x - y\|^2, \quad \forall y \in H.$$

Let $C$ be a nonempty closed convex subset of a real Hilbert space $H$. We introduce some notations. Let $\lambda$ be a number in $(0, 1]$ and let $\mu > 0$. Associating with a nonexpansive mapping $S : C \to H$, we define the mapping $S^\lambda : C \to H$ by

$$S^\lambda x := Sx - \lambda\mu F(Sx), \quad \forall x \in C,$$

where $F : H \to H$ is an operator such that, for some positive constants $\kappa, \eta > 0$, $F$ is $\kappa$-Lipschitzian and $\eta$-strongly monotone on $H$; that is, $F$ satisfies the conditions:

$$\|Fx - Fy\| \le \kappa\|x - y\| \quad \text{and} \quad \langle Fx - Fy, x - y\rangle \ge \eta\|x - y\|^2$$

for all $x, y \in H$.

Lemma 2.4 (see [19, Lemma 3.1]). $S^\lambda$ is a contraction provided $0 < \mu < \frac{2\eta}{\kappa^2}$; that is,

$$\|S^\lambda x - S^\lambda y\| \le (1 - \lambda\tau)\|x - y\|, \quad \forall x, y \in C,$$

where $\tau = 1 - \sqrt{1 - \mu(2\eta - \mu\kappa^2)} \in (0, 1]$.
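The contraction constant in Lemma 2.4 can be illustrated with a small numerical check. The sketch below assumes $S$ is the identity (which is nonexpansive) and takes a hypothetical diagonal operator `F(x) = D*x`, so that `ETA = min(D)` and `KAPPA = max(D)`; these choices, the value `MU = 0.2` and the sample points are illustrative assumptions only.

```python
import math

D = [1.0, 2.0, 3.0]              # hypothetical example: F(x) = D*x componentwise
ETA, KAPPA = min(D), max(D)      # F is ETA-strongly monotone and KAPPA-Lipschitzian
MU = 0.2                         # satisfies 0 < MU < 2*ETA/KAPPA**2 = 2/9
TAU = 1.0 - math.sqrt(1.0 - MU * (2.0 * ETA - MU * KAPPA ** 2))

def S_lam(x, lam):
    """S^lam x = Sx - lam*MU*F(Sx), with S taken to be the identity mapping."""
    return [xi - lam * MU * d * xi for xi, d in zip(x, D)]

def norm(x):
    return math.sqrt(sum(xi * xi for xi in x))

def worst_slack(pairs, lams):
    """Smallest value of (1 - lam*TAU)*||x - y|| - ||S^lam x - S^lam y|| over the samples."""
    slack = float("inf")
    for x, y in pairs:
        for lam in lams:
            sx, sy = S_lam(x, lam), S_lam(y, lam)
            lhs = norm([a - b for a, b in zip(sx, sy)])
            rhs = (1.0 - lam * TAU) * norm([a - b for a, b in zip(x, y)])
            slack = min(slack, rhs - lhs)
    return slack

pairs = [([1.0, 0.0, 0.0], [0.0, 0.0, 0.0]),
         ([1.0, -2.0, 3.0], [0.5, 4.0, -1.0]),
         ([0.0, 0.0, 5.0], [0.0, 1.0, 0.0])]
slack = worst_slack(pairs, [0.1, 0.5, 1.0])
print(TAU, slack)  # a nonnegative slack is consistent with the lemma
```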

Lemma 2.5 (see [7, Demiclosedness principle]). Let $C$ be a nonempty closed convex subset of a real Hilbert space $H$. Let $S$ be a nonexpansive self-mapping on $C$ with $\mathrm{Fix}(S) \ne \emptyset$. Then $I - S$ is demiclosed. That is, whenever $\{x_n\}$ is a sequence in $C$ weakly converging to some $x \in C$ and the sequence $\{(I - S)x_n\}$ strongly converges to some $y$, it follows that $(I - S)x = y$. Here $I$ is the identity operator of $H$.

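Lemma 2.6 (see [19, Lemma 2.1]). Let $\{a_n\}$ be a sequence of nonnegative numbers satisfying the condition

$$a_{n+1} \le (1 - \alpha_n)a_n + \alpha_n\beta_n, \quad \forall n \ge 0,$$

where $\{\alpha_n\}$ and $\{\beta_n\}$ are sequences of real numbers such that

(i) $\{\alpha_n\} \subset [0, 1]$ and $\sum_{n=0}^{\infty}\alpha_n = \infty$, or equivalently,

$$\prod_{n=0}^{\infty}(1 - \alpha_n) := \lim_{n\to\infty}\prod_{k=0}^{n}(1 - \alpha_k) = 0;$$

(ii) $\limsup_{n\to\infty}\beta_n \le 0$, or $\sum_{n=0}^{\infty}|\alpha_n\beta_n| < \infty$.

Then, $\lim_{n\to\infty}a_n = 0$.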
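To see the behaviour described by Lemma 2.6 on a concrete case, the sketch below runs the recursion with equality for the illustrative choices $\alpha_n = 1/(n+2)$ and $\beta_n = 1/(n+1)$, which satisfy (i) and the first alternative of (ii). These sequences and the function name are assumptions made for the example; in this case one can verify by hand that $a_n = (a_0 + H_n)/(n+1)$, where $H_n$ is the $n$-th harmonic number, so the decay is slow but the limit is zero.

```python
def run_recursion(a0, steps):
    """Iterate a_{n+1} = (1 - alpha_n) * a_n + alpha_n * beta_n with
    alpha_n = 1/(n+2) and beta_n = 1/(n+1) (illustrative choices)."""
    a = a0
    for n in range(steps):
        alpha = 1.0 / (n + 2)
        beta = 1.0 / (n + 1)
        a = (1.0 - alpha) * a + alpha * beta
    return a

a_100 = run_recursion(1.0, 100)
a_10000 = run_recursion(1.0, 10000)
print(a_100, a_10000)
```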

3. Iterative Algorithm and Convergence Criteria

Let $C$ be a nonempty closed convex subset of a real Hilbert space $H$. Throughout this section, we always assume the following:

$F : H \to H$ is a $\kappa$-Lipschitzian and $\eta$-strongly monotone operator with positive constants $\kappa, \eta > 0$, and $V : H \to H$ is an $l$-Lipschitzian mapping;

$0 < \mu < \frac{2\eta}{\kappa^2}$ and $0 \le \gamma l < \tau$ with $\tau = 1 - \sqrt{1 - \mu(2\eta - \mu\kappa^2)}$;

$A : C \to H$ and $B : H \to H$ are two mappings such that the hypotheses (H1)-(H4) hold:

(H1) $B$ is pseudomonotone on $H$;

(H2) $A$ is $\beta$-inverse-strongly monotone on $C$;

(H3) $B$ is $L$-Lipschitz continuous on $H$;

(H4) $\mathrm{VI}(C, B) \ne \emptyset$.

Next, we introduce and consider the following BVIP, which may have multiple solutions.

Problem 3.1. Find $x^* \in \mathrm{VI}(C, B)$ such that

$$\langle Ax^*, x - x^*\rangle \ge 0, \quad \forall x \in \mathrm{VI}(C, B), \tag{3.1}$$

where $\mathrm{VI}(C, B)$ denotes the set of solutions of the VIP: Find $y^* \in C$ such that

$$\langle By^*, y - y^*\rangle \ge 0, \quad \forall y \in C. \tag{3.2}$$

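Algorithm 3.1. Initialization. Choose $u \in H$, $x_0 \in H$, $k = 0$, $0 < \lambda \le 2\beta$, positive sequences $\{\delta_k\}$, $\{\lambda_k\}$, $\{\alpha_k\}$, $\{\beta_k\}$, $\{\gamma_k\}$ and $\{\bar\epsilon_k\}$ such that

$$\lim_{k\to\infty}\delta_k = 0, \quad \sum_{k=0}^{\infty}\bar\epsilon_k < \infty, \quad \alpha_k + \beta_k + \gamma_k = 1 \ \ \forall k \ge 0, \quad \sum_{k=0}^{\infty}\alpha_k = \infty,$$

$$\lim_{k\to\infty}\alpha_k = 0, \quad \lim_{k\to\infty}\beta_k = \xi \in (0, \tfrac{1}{2}], \quad \lim_{k\to\infty}\lambda_k = 0, \quad \lambda_k \le \frac{1}{L} \ \ \forall k \ge 0.$$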

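Step 1. Compute

$$\begin{cases} v_k = \alpha_k\gamma V x_k + \gamma_k x_k + ((1 - \gamma_k)I - \alpha_k\mu F)x_k,\\ y_k := P_C(v_k - \lambda_k B v_k),\\ z_k := P_C(v_k - \lambda_k B y_k). \end{cases}$$

Step 2. Inner loop $j = 0, 1, \ldots$. Compute

$$\begin{cases} x_{k,0} := z_k - \lambda A z_k,\\ y_{k,j} := P_C(x_{k,j} - \delta_j B x_{k,j}),\\ x_{k,j+1} := \alpha_j x_{k,0} + \beta_j x_{k,j} + \gamma_j P_C(x_{k,j} - \delta_j B y_{k,j}). \end{cases}$$

If $\|x_{k,j+1} - P_{\mathrm{VI}(C,B)} x_{k,0}\| \le \bar\epsilon_k$ then set $h_k := x_{k,j+1}$ and go to Step 3. Otherwise, increase $j$ by 1 and repeat the inner loop Step 2.

Step 3. Set $x_{k+1} := \alpha_k u + \beta_k x_k + \gamma_k h_k$. Then increase $k$ by 1 and go to Step 1.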
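It may help to note that the first line of Step 1 collapses to a perturbation of $x_k$ of size proportional to $\alpha_k$. The following one-line computation is only a rearrangement of the formula in Step 1:

```latex
\begin{aligned}
v_k &= \alpha_k\gamma V x_k + \gamma_k x_k + (1 - \gamma_k)x_k - \alpha_k\mu F x_k \\
    &= x_k + \alpha_k(\gamma V - \mu F)x_k .
\end{aligned}
```

In particular, whenever $\{x_k\}$ is bounded and $\alpha_k \to 0$, the difference $v_k - x_k$ tends to zero.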

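Let $C$ be a nonempty closed convex subset of $H$, $B : C \to H$ be monotone and $L$-Lipschitz continuous on $C$, and $S : C \to C$ be a nonexpansive mapping such that $\mathrm{VI}(C, B) \cap \mathrm{Fix}(S) \ne \emptyset$. Let the sequences $\{x_k\}$ and $\{y_k\}$ be generated by

$$\begin{cases} x_0 \in C \text{ chosen arbitrarily},\\ y_k = P_C(x_k - \delta_k B x_k),\\ x_{k+1} = \alpha_k x_0 + \beta_k x_k + \gamma_k S P_C(x_k - \delta_k B y_k) \quad \forall k \ge 0, \end{cases}$$

where $\{\alpha_k\}$, $\{\beta_k\}$, $\{\gamma_k\}$ and $\{\delta_k\}$ satisfy the following conditions:

$$\delta_k > 0 \ \ \forall k \ge 0, \quad \lim_{k\to\infty}\delta_k = 0,$$

$$\alpha_k + \beta_k + \gamma_k = 1 \ \ \forall k \ge 0,$$

$$\sum_{k=0}^{\infty}\alpha_k = \infty, \quad \lim_{k\to\infty}\alpha_k = 0,$$

$$0 < \liminf_{k\to\infty}\beta_k \le \limsup_{k\to\infty}\beta_k < 1.$$

Under these conditions, Yao, Liou and Yao [23] proved that the sequences $\{x_k\}$ and $\{y_k\}$ converge to the same point $P_{\mathrm{VI}(C,B)\cap\mathrm{Fix}(S)}x_0$.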
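The iteration above, with $S$ taken to be the identity, is the scheme used in the inner loop of Algorithm 3.1. A minimal Python sketch of it follows; the box $C = [-1,1]^2$, the rotation operator `B` (monotone, 1-Lipschitz, with $\mathrm{VI}(C,B) = \{0\}$), and the parameter sequences are assumptions made for illustration and are not taken from the paper. The sketch does not attempt to verify the convergence claim; it only shows the structure of one run and prints the final distance to the origin.

```python
import math

def project_box(x, lo=-1.0, hi=1.0):
    """Metric projection onto the box C = [lo, hi]^n."""
    return [min(max(xi, lo), hi) for xi in x]

def B(x):
    """Illustrative monotone, 1-Lipschitz operator on R^2; not from the paper."""
    return [x[1], -x[0]]

def anchored_extragradient(x0, steps):
    """x_{k+1} = alpha_k*x0 + beta_k*x_k + gamma_k*P_C(x_k - delta_k*B y_k), S = identity."""
    x = list(x0)
    for k in range(steps):
        alpha = 1.0 / (k + 2)               # alpha_k -> 0, sum alpha_k = infinity
        beta = 0.5 * (1.0 - alpha)          # beta_k -> 1/2
        gamma = 1.0 - alpha - beta          # alpha_k + beta_k + gamma_k = 1
        delta = 0.5 / math.sqrt(k + 1)      # delta_k > 0, delta_k -> 0, delta_k <= 1/L
        y = project_box([xi - delta * bi for xi, bi in zip(x, B(x))])
        t = project_box([xi - delta * bi for xi, bi in zip(x, B(y))])
        x = [alpha * a + beta * b + gamma * c for a, b, c in zip(x0, x, t)]
    return x

x_start = [1.0, 0.5]
x_end = anchored_extragradient(x_start, 2000)
print(x_end, math.hypot(*x_end))
```

Because each new iterate is a convex combination of points of $C$, the iterates stay in $C$; and since the extragradient step with $\delta_k L \le 1$ does not increase the distance to a solution, the distance to the origin never exceeds its initial value in this example.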

Applying these iteration sequences with S being the identity mapping, we have the following lemma.

Lemma 3.1. Suppose that the hypotheses (H1)-(H4) hold. Then the sequence $\{x_{k,j}\}$ generated by Algorithm 3.1 converges strongly to the point $P_{\mathrm{VI}(C,B)}(z_k - \lambda A z_k)$ as $j \to \infty$. Consequently, we have

$$\|h_k - P_{\mathrm{VI}(C,B)}(z_k - \lambda A z_k)\| \le \bar\epsilon_k \quad \forall k \ge 0.$$

In the sequel we always suppose that the inner loop in Algorithm 3.1 terminates after a finite number of steps. This assumption, by Lemma 3.1, is satisfied when $B$ is monotone on $C$.

Lemma 3.2. Let sequences $\{v_k\}$, $\{y_k\}$ and $\{z_k\}$ be generated by Algorithm 3.1, $B$ be $L$-Lipschitzian and pseudomonotone on $H$, and $p \in \mathrm{VI}(C, B)$. Then, we have

$$\|z_k - p\|^2 \le \|v_k - p\|^2 - (1 - \lambda_k L)\|v_k - y_k\|^2 - (1 - \lambda_k L)\|y_k - z_k\|^2. \tag{3.3}$$

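Proof. Let $p \in \mathrm{VI}(C, B)$. That means

$$\langle Bp, x - p\rangle \ge 0, \quad \forall x \in C.$$

Then, for each $\lambda_k > 0$, $p$ satisfies the fixed point equation

$$p = P_C(p - \lambda_k Bp).$$

Since $B$ is pseudomonotone on $H$ and $p \in \mathrm{VI}(C, B)$, we have

$$\langle Bp, y_k - p\rangle \ge 0 \ \Rightarrow\ \langle By_k, y_k - p\rangle \ge 0.$$

Then, applying Proposition 2.1 (ii) with $v_k - \lambda_k By_k$ and $p$, we obtain

$$\begin{aligned}
\|z_k - p\|^2 &\le \|v_k - \lambda_k By_k - p\|^2 - \|v_k - \lambda_k By_k - z_k\|^2 \\
&= \|v_k - p\|^2 - 2\lambda_k\langle By_k, v_k - p\rangle + \lambda_k^2\|By_k\|^2 - \|v_k - z_k\|^2 \\
&\quad - \lambda_k^2\|By_k\|^2 + 2\lambda_k\langle By_k, v_k - z_k\rangle \\
&= \|v_k - p\|^2 - \|v_k - z_k\|^2 + 2\lambda_k\langle By_k, p - z_k\rangle \\
&= \|v_k - p\|^2 - \|v_k - z_k\|^2 + 2\lambda_k\langle By_k, p - y_k\rangle + 2\lambda_k\langle By_k, y_k - z_k\rangle \\
&\le \|v_k - p\|^2 - \|v_k - z_k\|^2 + 2\lambda_k\langle By_k, y_k - z_k\rangle.
\end{aligned} \tag{3.4}$$

Applying Proposition 2.1 (i) with $v_k - \lambda_k Bv_k$ and $z_k$, we also have

$$\langle v_k - \lambda_k Bv_k - y_k, z_k - y_k\rangle \le 0.$$

Combining this inequality with (3.4) and observing that $B$ is $L$-Lipschitz continuous on $H$, we obtain

$$\begin{aligned}
\|z_k - p\|^2 &\le \|v_k - p\|^2 - \|(v_k - y_k) + (y_k - z_k)\|^2 + 2\lambda_k\langle By_k, y_k - z_k\rangle \\
&= \|v_k - p\|^2 - \|v_k - y_k\|^2 - \|y_k - z_k\|^2 - 2\langle v_k - y_k, y_k - z_k\rangle + 2\lambda_k\langle By_k, y_k - z_k\rangle \\
&= \|v_k - p\|^2 - \|v_k - y_k\|^2 - \|y_k - z_k\|^2 - 2\langle v_k - \lambda_k By_k - y_k, y_k - z_k\rangle \\
&= \|v_k - p\|^2 - \|v_k - y_k\|^2 - \|y_k - z_k\|^2 - 2\langle v_k - \lambda_k Bv_k - y_k, y_k - z_k\rangle \\
&\quad + 2\lambda_k\langle Bv_k - By_k, z_k - y_k\rangle \\
&\le \|v_k - p\|^2 - \|v_k - y_k\|^2 - \|y_k - z_k\|^2 + 2\lambda_k\langle Bv_k - By_k, z_k - y_k\rangle \\
&\le \|v_k - p\|^2 - \|v_k - y_k\|^2 - \|y_k - z_k\|^2 + 2\lambda_k\|Bv_k - By_k\|\|z_k - y_k\| \\
&\le \|v_k - p\|^2 - \|v_k - y_k\|^2 - \|y_k - z_k\|^2 + 2\lambda_k L\|v_k - y_k\|\|z_k - y_k\| \\
&\le \|v_k - p\|^2 - \|v_k - y_k\|^2 - \|y_k - z_k\|^2 + \lambda_k L(\|v_k - y_k\|^2 + \|z_k - y_k\|^2) \\
&= \|v_k - p\|^2 - (1 - \lambda_k L)\|v_k - y_k\|^2 - (1 - \lambda_k L)\|y_k - z_k\|^2.
\end{aligned} \tag{3.5}$$

This completes the proof. □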

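Lemma 3.3. Suppose that the hypotheses (H1)-(H4) hold and that $\mathrm{VI}(\mathrm{VI}(C, B), A) \ne \emptyset$. Then the sequence $\{x_k\}$ generated by Algorithm 3.1 is bounded.

Proof. Since $\lim_{k\to\infty}\alpha_k = 0$, $\lim_{k\to\infty}\beta_k = \xi \in (0, \tfrac{1}{2}]$ and $\alpha_k + \beta_k + \gamma_k = 1$, we get

$$\lim_{k\to\infty}(1 - \gamma_k) = \lim_{k\to\infty}(\alpha_k + \beta_k) = \xi.$$

Hence, we may assume, without loss of generality, that $0 < \frac{\alpha_k}{1 - \gamma_k} \le 1$ for all $k \ge 0$. Take an arbitrary $p \in \mathrm{VI}(\mathrm{VI}(C, B), A)$. Then we have

$$\langle Ap, x - p\rangle \ge 0, \quad \forall x \in \mathrm{VI}(C, B),$$

which implies

$$p = P_{\mathrm{VI}(C,B)}(p - \lambda Ap).$$

Then, it follows from (2.1), Proposition 2.1 (iii), the $\beta$-inverse strong monotonicity of $A$, and $0 < \lambda \le 2\beta$ that

$$\begin{aligned}
\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k) - p\|^2 &= \|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k) - P_{\mathrm{VI}(C,B)}(p - \lambda Ap)\|^2 \\
&\le \|z_k - \lambda Az_k - p + \lambda Ap\|^2 \\
&\le \|z_k - p\|^2 + \lambda(\lambda - 2\beta)\|Az_k - Ap\|^2 \\
&\le \|z_k - p\|^2.
\end{aligned} \tag{3.6}$$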

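Furthermore, from Algorithm 3.1 and Lemma 2.4, we have

$$\begin{aligned}
\|v_k - p\| &= \|\alpha_k\gamma Vx_k + \gamma_k x_k + ((1 - \gamma_k)I - \alpha_k\mu F)x_k - p\| \\
&= \|\alpha_k(\gamma Vx_k - \mu Fp) + \gamma_k(x_k - p) + ((1 - \gamma_k)I - \alpha_k\mu F)x_k - ((1 - \gamma_k)I - \alpha_k\mu F)p\| \\
&\le \alpha_k\gamma\|Vx_k - Vp\| + \alpha_k\|(\gamma V - \mu F)p\| + \gamma_k\|x_k - p\| \\
&\quad + \|((1 - \gamma_k)I - \alpha_k\mu F)x_k - ((1 - \gamma_k)I - \alpha_k\mu F)p\| \\
&\le \alpha_k\gamma l\|x_k - p\| + \alpha_k\|(\gamma V - \mu F)p\| + \gamma_k\|x_k - p\| \\
&\quad + (1 - \gamma_k)\Big\|\Big(I - \frac{\alpha_k\mu}{1 - \gamma_k}F\Big)x_k - \Big(I - \frac{\alpha_k\mu}{1 - \gamma_k}F\Big)p\Big\| \\
&\le \alpha_k\gamma l\|x_k - p\| + \alpha_k\|(\gamma V - \mu F)p\| + \gamma_k\|x_k - p\| + (1 - \gamma_k)\Big(1 - \frac{\alpha_k}{1 - \gamma_k}\tau\Big)\|x_k - p\| \\
&= \alpha_k\gamma l\|x_k - p\| + \alpha_k\|(\gamma V - \mu F)p\| + \gamma_k\|x_k - p\| + (1 - \gamma_k - \alpha_k\tau)\|x_k - p\| \\
&= (1 - \alpha_k(\tau - \gamma l))\|x_k - p\| + \alpha_k\|(\gamma V - \mu F)p\| \\
&= (1 - \alpha_k(\tau - \gamma l))\|x_k - p\| + \alpha_k(\tau - \gamma l)\frac{\|(\gamma V - \mu F)p\|}{\tau - \gamma l} \\
&\le \max\Big\{\|x_k - p\|, \frac{\|(\gamma V - \mu F)p\|}{\tau - \gamma l}\Big\}.
\end{aligned} \tag{3.7}$$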

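Utilizing (3.5)-(3.7) and the assumptions $0 < \lambda \le 2\beta$, $\sum_{k=0}^{\infty}\bar\epsilon_k < \infty$, we obtain that

$$\begin{aligned}
\|x_{k+1} - p\| &= \|\alpha_k u + \beta_k x_k + \gamma_k h_k - p\| \\
&\le \alpha_k\|u - p\| + \beta_k\|x_k - p\| + \gamma_k\|h_k - p\| \\
&\le \alpha_k\|u - p\| + \beta_k\|x_k - p\| + \gamma_k\|h_k - P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k)\| + \gamma_k\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k) - p\| \\
&\le \alpha_k\|u - p\| + \beta_k\|x_k - p\| + \gamma_k\bar\epsilon_k + \gamma_k\|z_k - p\| \\
&\le \alpha_k\|u - p\| + \beta_k\|x_k - p\| + \gamma_k\bar\epsilon_k + \gamma_k\|v_k - p\| \\
&\le \alpha_k\|u - p\| + \beta_k\|x_k - p\| + \gamma_k\bar\epsilon_k + \gamma_k\max\Big\{\|x_k - p\|, \frac{\|(\gamma V - \mu F)p\|}{\tau - \gamma l}\Big\} \\
&\le \alpha_k\|u - p\| + (\beta_k + \gamma_k)\max\Big\{\|x_k - p\|, \frac{\|(\gamma V - \mu F)p\|}{\tau - \gamma l}\Big\} + \bar\epsilon_k \\
&= \alpha_k\|u - p\| + (1 - \alpha_k)\max\Big\{\|x_k - p\|, \frac{\|(\gamma V - \mu F)p\|}{\tau - \gamma l}\Big\} + \bar\epsilon_k \\
&\le \max\Big\{\|x_k - p\|, \|u - p\|, \frac{\|(\gamma V - \mu F)p\|}{\tau - \gamma l}\Big\} + \bar\epsilon_k \\
&\le \max\Big\{\|x_0 - p\|, \|u - p\|, \frac{\|(\gamma V - \mu F)p\|}{\tau - \gamma l}\Big\} + \sum_{j=0}^{k}\bar\epsilon_j \\
&\le \max\Big\{\|x_0 - p\|, \|u - p\|, \frac{\|(\gamma V - \mu F)p\|}{\tau - \gamma l}\Big\} + \sum_{k=0}^{\infty}\bar\epsilon_k < \infty,
\end{aligned}$$

which shows that the sequence $\{x_k\}$ is bounded, and so are the sequences $\{v_k\}$, $\{y_k\}$ and $\{z_k\}$. □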

Lemma 3.4 (see [32]). Let $\{x_k\}$ and $\{y_k\}$ be two bounded sequences in a real Banach space $X$. Let $\{\beta_k\}$ be a sequence in $[0, 1]$. Suppose that

$$0 < \liminf_{k\to\infty}\beta_k \le \limsup_{k\to\infty}\beta_k < 1,$$

$$x_{k+1} = (1 - \beta_k)y_k + \beta_k x_k,$$

$$\limsup_{k\to\infty}(\|y_{k+1} - y_k\| - \|x_{k+1} - x_k\|) \le 0.$$

Then, $\lim_{k\to\infty}\|y_k - x_k\| = 0$.

Lemma 3.5. Suppose that the hypotheses (H1)-(H4) hold and that the sequences $\{v_k\}$, $\{y_k\}$ and $\{z_k\}$ are generated by Algorithm 3.1. Then, we have

$$\|z_{k+1} - z_k\| \le (1 + \lambda_{k+1}L)\|v_{k+1} - v_k\| + \lambda_k\|By_k\| + \lambda_{k+1}(\|Bv_{k+1}\| + \|By_{k+1}\| + \|Bv_k\|).$$

Moreover,

$$\lim_{k\to\infty}\|z_{k+1} - z_k\| = \lim_{k\to\infty}\|v_{k+1} - v_k\| = 0.$$

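Proof. Since $B$ is $L$-Lipschitzian on $H$, for each $x, y \in H$, we have

$$\begin{aligned}
\|(I - \lambda_k B)x - (I - \lambda_k B)y\| &= \|x - y - \lambda_k(Bx - By)\| \\
&\le \|x - y\| + \lambda_k\|Bx - By\| \\
&\le (1 + \lambda_k L)\|x - y\|.
\end{aligned} \tag{3.8}$$

Combining this inequality with Proposition 2.1 (iii), we have

$$\begin{aligned}
\|z_{k+1} - z_k\| &= \|P_C(v_{k+1} - \lambda_{k+1}By_{k+1}) - P_C(v_k - \lambda_k By_k)\| \\
&\le \|(v_{k+1} - \lambda_{k+1}By_{k+1}) - v_k + \lambda_k By_k\| \\
&= \|(v_{k+1} - \lambda_{k+1}Bv_{k+1}) - (v_k - \lambda_{k+1}Bv_k) + \lambda_{k+1}(Bv_{k+1} - By_{k+1} - Bv_k) + \lambda_k By_k\| \\
&\le (1 + \lambda_{k+1}L)\|v_{k+1} - v_k\| + \lambda_k\|By_k\| + \lambda_{k+1}(\|Bv_{k+1}\| + \|By_{k+1}\| + \|Bv_k\|).
\end{aligned} \tag{3.9}$$

This is the desired result (3.8).

Now we denote $x_{k+1} = (1 - \beta_k)w_k + \beta_k x_k$. Then, we have

$$\begin{aligned}
w_{k+1} - w_k &= \frac{\alpha_{k+1}u + \gamma_{k+1}h_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k u + \gamma_k h_k}{1 - \beta_k} \\
&= \Big(\frac{\alpha_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k}{1 - \beta_k}\Big)u + \Big(\frac{\gamma_{k+1}}{1 - \beta_{k+1}} - \frac{\gamma_k}{1 - \beta_k}\Big)h_k + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(h_{k+1} - h_k).
\end{aligned} \tag{3.10}$$

Note that, for $0 < \lambda \le 2\beta$, we have from (2.1) that

$$\begin{aligned}
\|P_{\mathrm{VI}(C,B)}(z_{k+1} - \lambda Az_{k+1}) - P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k)\|^2 &\le \|(z_{k+1} - \lambda Az_{k+1}) - (z_k - \lambda Az_k)\|^2 \\
&\le \|z_{k+1} - z_k\|^2 + \lambda(\lambda - 2\beta)\|Az_{k+1} - Az_k\|^2 \\
&\le \|z_{k+1} - z_k\|^2.
\end{aligned}$$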

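Then, combining (3.11) with $\lambda \le 2\beta$ and (3.10) we get

$$\begin{aligned}
&\|w_{k+1} - w_k\| - \|x_{k+1} - x_k\| \\
&\le \Big|\frac{\alpha_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k}{1 - \beta_k}\Big|\|u\| + \Big|\frac{\gamma_{k+1}}{1 - \beta_{k+1}} - \frac{\gamma_k}{1 - \beta_k}\Big|\|h_k\| + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}\|h_{k+1} - h_k\| - \|x_{k+1} - x_k\| \\
&\le \Big|\frac{\alpha_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k}{1 - \beta_k}\Big|\|u\| + \Big|\frac{\gamma_{k+1}}{1 - \beta_{k+1}} - \frac{\gamma_k}{1 - \beta_k}\Big|(\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k)\| + \bar\epsilon_k) \\
&\quad + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}\|P_{\mathrm{VI}(C,B)}(z_{k+1} - \lambda Az_{k+1}) - P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k)\| \\
&\quad + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(\|P_{\mathrm{VI}(C,B)}(z_{k+1} - \lambda Az_{k+1}) - h_{k+1}\| + \|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k) - h_k\|) - \|x_{k+1} - x_k\| \\
&\le \Big|\frac{\alpha_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k}{1 - \beta_k}\Big|\|u\| + \Big|\frac{\gamma_{k+1}}{1 - \beta_{k+1}} - \frac{\gamma_k}{1 - \beta_k}\Big|(\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k)\| + \bar\epsilon_k) \\
&\quad + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}\|z_{k+1} - z_k\| + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(\bar\epsilon_{k+1} + \bar\epsilon_k) - \|x_{k+1} - x_k\|.
\end{aligned}$$

Hence,

$$\begin{aligned}
&\|w_{k+1} - w_k\| - \|x_{k+1} - x_k\| \\
&\le \Big|\frac{\alpha_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k}{1 - \beta_k}\Big|\|u\| + \Big|\frac{\gamma_{k+1}}{1 - \beta_{k+1}} - \frac{\gamma_k}{1 - \beta_k}\Big|(\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k)\| + \bar\epsilon_k) \\
&\quad + \frac{\gamma_{k+1}(1 + \lambda_{k+1}L)}{1 - \beta_{k+1}}\|v_{k+1} - v_k\| - \|x_{k+1} - x_k\| + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(\bar\epsilon_{k+1} + \bar\epsilon_k) \\
&\quad + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(\lambda_{k+1}(\|Bv_{k+1}\| + \|By_{k+1}\| + \|Bv_k\|) + \lambda_k\|By_k\|).
\end{aligned} \tag{3.11}$$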

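On the other hand, we define $\tilde w_k = \frac{v_k - \gamma_k x_k}{1 - \gamma_k}$, which implies that $v_k = (1 - \gamma_k)\tilde w_k + \gamma_k x_k$. Simple calculations show that

$$\begin{aligned}
\tilde w_{k+1} - \tilde w_k &= \frac{v_{k+1} - \gamma_{k+1}x_{k+1}}{1 - \gamma_{k+1}} - \frac{v_k - \gamma_k x_k}{1 - \gamma_k} \\
&= \frac{\alpha_{k+1}\gamma Vx_{k+1} + ((1 - \gamma_{k+1})I - \alpha_{k+1}\mu F)x_{k+1}}{1 - \gamma_{k+1}} - \frac{\alpha_k\gamma Vx_k + ((1 - \gamma_k)I - \alpha_k\mu F)x_k}{1 - \gamma_k} \\
&= \frac{\alpha_{k+1}}{1 - \gamma_{k+1}}\gamma Vx_{k+1} - \frac{\alpha_k}{1 - \gamma_k}\gamma Vx_k + x_{k+1} - x_k + \frac{\alpha_k}{1 - \gamma_k}\mu Fx_k - \frac{\alpha_{k+1}}{1 - \gamma_{k+1}}\mu Fx_{k+1} \\
&= \frac{\alpha_{k+1}}{1 - \gamma_{k+1}}(\gamma Vx_{k+1} - \mu Fx_{k+1}) + \frac{\alpha_k}{1 - \gamma_k}(\mu Fx_k - \gamma Vx_k) + x_{k+1} - x_k.
\end{aligned}$$

So, it follows that

$$\begin{aligned}
\|\tilde w_{k+1} - \tilde w_k\| &\le \frac{\alpha_{k+1}}{1 - \gamma_{k+1}}(\|\gamma Vx_{k+1}\| + \|\mu Fx_{k+1}\|) + \frac{\alpha_k}{1 - \gamma_k}(\|\mu Fx_k\| + \|\gamma Vx_k\|) + \|x_{k+1} - x_k\| \\
&\le \|x_{k+1} - x_k\| + \Big(\frac{\alpha_{k+1}}{1 - \gamma_{k+1}} + \frac{\alpha_k}{1 - \gamma_k}\Big)M_0,
\end{aligned} \tag{3.12}$$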

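where $\sup_{k\ge0}\{\|\mu Fx_k\| + \|\gamma Vx_k\|\} \le M_0$ for some $M_0 > 0$. In the meantime, from $v_k = (1 - \gamma_k)\tilde w_k + \gamma_k x_k$, together with (3.12), we get

$$\begin{aligned}
\|v_{k+1} - v_k\| &= \|\gamma_{k+1}x_{k+1} + (1 - \gamma_{k+1})\tilde w_{k+1} - (\gamma_k x_k + (1 - \gamma_k)\tilde w_k)\| \\
&= \|(1 - \gamma_{k+1})(\tilde w_{k+1} - \tilde w_k) - (\gamma_{k+1} - \gamma_k)\tilde w_k + \gamma_{k+1}(x_{k+1} - x_k) + (\gamma_{k+1} - \gamma_k)x_k\| \\
&\le (1 - \gamma_{k+1})\|\tilde w_{k+1} - \tilde w_k\| + \gamma_{k+1}\|x_{k+1} - x_k\| + |\gamma_{k+1} - \gamma_k|\|x_k - \tilde w_k\| \\
&\le (1 - \gamma_{k+1})\Big[\|x_{k+1} - x_k\| + \Big(\frac{\alpha_{k+1}}{1 - \gamma_{k+1}} + \frac{\alpha_k}{1 - \gamma_k}\Big)M_0\Big] + \gamma_{k+1}\|x_{k+1} - x_k\| + |\gamma_{k+1} - \gamma_k|\|x_k - \tilde w_k\| \\
&\le \|x_{k+1} - x_k\| + \Big(\frac{\alpha_{k+1}}{1 - \gamma_{k+1}} + \frac{\alpha_k}{1 - \gamma_k}\Big)M_0 + |\gamma_{k+1} - \gamma_k|\|x_k - \tilde w_k\|.
\end{aligned} \tag{3.13}$$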

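Combining (3.11) and (3.13) we have

$$\begin{aligned}
&\|w_{k+1} - w_k\| - \|x_{k+1} - x_k\| \\
&\le \Big|\frac{\alpha_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k}{1 - \beta_k}\Big|\|u\| + \Big|\frac{\gamma_{k+1}}{1 - \beta_{k+1}} - \frac{\gamma_k}{1 - \beta_k}\Big|(\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k)\| + \bar\epsilon_k) \\
&\quad + \frac{\gamma_{k+1}(1 + \lambda_{k+1}L)}{1 - \beta_{k+1}}\Big[\|x_{k+1} - x_k\| + \Big(\frac{\alpha_{k+1}}{1 - \gamma_{k+1}} + \frac{\alpha_k}{1 - \gamma_k}\Big)M_0 + |\gamma_{k+1} - \gamma_k|\|x_k - \tilde w_k\|\Big] \\
&\quad - \|x_{k+1} - x_k\| + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(\bar\epsilon_{k+1} + \bar\epsilon_k) \\
&\quad + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(\lambda_{k+1}(\|Bv_{k+1}\| + \|By_{k+1}\| + \|Bv_k\|) + \lambda_k\|By_k\|) \\
&= \Big|\frac{\alpha_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k}{1 - \beta_k}\Big|\|u\| + \Big|\frac{\gamma_{k+1}}{1 - \beta_{k+1}} - \frac{\gamma_k}{1 - \beta_k}\Big|(\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k)\| + \bar\epsilon_k) \\
&\quad + \frac{\gamma_{k+1}(1 + \lambda_{k+1}L)}{1 - \beta_{k+1}}\Big[\Big(\frac{\alpha_{k+1}}{1 - \gamma_{k+1}} + \frac{\alpha_k}{1 - \gamma_k}\Big)M_0 + |\gamma_{k+1} - \gamma_k|\|x_k - \tilde w_k\|\Big] \\
&\quad + \Big(\frac{\gamma_{k+1}(1 + \lambda_{k+1}L)}{1 - \beta_{k+1}} - 1\Big)\|x_{k+1} - x_k\| + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(\bar\epsilon_{k+1} + \bar\epsilon_k) \\
&\quad + \frac{\gamma_{k+1}}{1 - \beta_{k+1}}(\lambda_{k+1}(\|Bv_{k+1}\| + \|By_{k+1}\| + \|Bv_k\|) + \lambda_k\|By_k\|).
\end{aligned} \tag{3.14}$$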

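From the assumptions $\alpha_k + \beta_k + \gamma_k = 1$, $\lim_{k\to\infty}\beta_k = \xi \in (0, \tfrac{1}{2}]$, $\lim_{k\to\infty}\alpha_k = 0$ and $\lim_{k\to\infty}\lambda_k = 0$, it follows that $\lim_{k\to\infty}|\gamma_{k+1} - \gamma_k| = 0$,

$$\lim_{k\to\infty}\Big|\frac{\alpha_{k+1}}{1 - \beta_{k+1}} - \frac{\alpha_k}{1 - \beta_k}\Big| = \lim_{k\to\infty}\Big|\frac{\gamma_{k+1}}{1 - \beta_{k+1}} - \frac{\gamma_k}{1 - \beta_k}\Big| = 0 \quad \text{and} \quad \lim_{k\to\infty}\frac{\gamma_{k+1}(1 + \lambda_{k+1}L)}{1 - \beta_{k+1}} = 1.$$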

Combining these equalities with (3.14), we obtain from Lemma 3.3 and limk→∞ ¯k = 0 that

lim sup(kwk+1 − wk k − kxk+1 − xk k) ≤ 0.

k→∞

Now applying Lemma 3.4, we have

lim kwk − xk k = 0.

k→∞

Hence by xk+1 = (1 − βk )wk + βk xk , we deduce that

lim kxk+1 − xk k = lim (1 − βk )kwk − xk k = 0,

k→∞

k→∞

(3.15)

which together with limk→∞ λk = 0, (3.8) and (3.13), implies that

lim kvk+1 − vk k = 0 and

k→∞

lim kzk+1 − zk k = 0.

k→∞

(3.16)

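Lemma 3.6. Suppose that the hypotheses (H1)-(H4) hold and that $\mathrm{VI}(\mathrm{VI}(C, B), A) \ne \emptyset$. Then for any $p \in \mathrm{VI}(\mathrm{VI}(C, B), A)$ we have

$$\begin{aligned}
\|x_{k+1} - p\|^2 &\le \alpha_k\|u - p\|^2 + \beta_k\|x_k - p\|^2 + \gamma_k\|v_k - p\|^2 + 2\gamma_k\bar\epsilon_k\|z_k - p\| \\
&\quad + \gamma_k\bar\epsilon_k^2 - \gamma_k(1 - \lambda_k L)(\|v_k - y_k\|^2 + \|y_k - z_k\|^2).
\end{aligned} \tag{3.17}$$

Moreover,

$$\lim_{k\to\infty}\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k) - z_k\| = 0, \quad \lim_{k\to\infty}\|P_{\mathrm{VI}(C,B)}(y_k - \lambda Ay_k) - y_k\| = 0.$$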

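Proof. By Lemma 3.1, we know that

$$\lim_{j\to\infty}x_{k,j} = P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k),$$

which together with $0 < \lambda \le 2\beta$, inequality (3.3), $\lim_{k\to\infty}\beta_k = \xi \in (0, \tfrac{1}{2}]$ and $p \in \mathrm{VI}(\mathrm{VI}(C, B), A)$, implies that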

kxk+1 − pk2 = kαk u + βk xk + γk hk − pk2

≤ αk ku − pk2 + βk kxk − pk2 + γk khk − pk2

≤ αk ku − pk2 + βk kxk − pk2 + γk (kPVI(C,B) (zk − λAzk ) − pk + ¯k )2

= αk ku − pk2 + βk kxk − pk2

+ γk (kPVI(C,B) (zk − λAzk ) − PVI(C,B) (p − λAp)k + ¯k )2

≤ αk ku − pk2 + βk kxk − pk2 + γk (k(zk − λAzk ) − (p − λAp)k + ¯k )2

≤ αk ku − pk2 + βk kxk − pk2 + γk (kzk − pk + ¯k )2

= αk ku − pk2 + βk kxk − pk2 + γk kzk − pk2 + 2γk ¯k kzk − pk + γk ¯2k

≤ αk ku − pk2 + βk kxk − pk2 + 2γk ¯k kzk − pk + γk ¯2k

+ γk (kvk − pk2 − (1 − λk L)kvk − yk k2 − (1 − λk L)kyk − zk k2 )

= αk ku − pk2 + βk kxk − pk2 + γk kvk − pk2 + 2γk ¯k kzk − pk + γk ¯2k

− γk (1 − λk L)(kvk − yk k2 + kyk − zk k2 ).


(3.18)

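On the other hand, from Algorithm 3.1 we have

$$\begin{aligned}
\|v_k - p\|^2 &= \|\alpha_k\gamma Vx_k + \gamma_k x_k + ((1 - \gamma_k)I - \alpha_k\mu F)x_k - p\|^2 \\
&= \|\alpha_k(\gamma Vx_k - \mu Fp) + \gamma_k(x_k - p) + ((1 - \gamma_k)I - \alpha_k\mu F)x_k - ((1 - \gamma_k)I - \alpha_k\mu F)p\|^2 \\
&= \|\alpha_k\gamma(Vx_k - Vp) + \alpha_k(\gamma Vp - \mu Fp) + \gamma_k(x_k - p) \\
&\qquad + ((1 - \gamma_k)I - \alpha_k\mu F)x_k - ((1 - \gamma_k)I - \alpha_k\mu F)p\|^2 \\
&\le \|\alpha_k\gamma(Vx_k - Vp) + \gamma_k(x_k - p) + ((1 - \gamma_k)I - \alpha_k\mu F)x_k - ((1 - \gamma_k)I - \alpha_k\mu F)p\|^2 \\
&\quad + 2\alpha_k\langle(\gamma V - \mu F)p, v_k - p\rangle \\
&\le [\alpha_k\gamma\|Vx_k - Vp\| + \gamma_k\|x_k - p\| + \|((1 - \gamma_k)I - \alpha_k\mu F)x_k - ((1 - \gamma_k)I - \alpha_k\mu F)p\|]^2 \\
&\quad + 2\alpha_k\langle(\gamma V - \mu F)p, v_k - p\rangle \\
&\le [\alpha_k\gamma l\|x_k - p\| + \gamma_k\|x_k - p\| + (1 - \gamma_k - \alpha_k\tau)\|x_k - p\|]^2 + 2\alpha_k\langle(\gamma V - \mu F)p, v_k - p\rangle \\
&= \Big[\alpha_k\tau\frac{\gamma l}{\tau}\|x_k - p\| + \gamma_k\|x_k - p\| + (1 - \gamma_k - \alpha_k\tau)\|x_k - p\|\Big]^2 + 2\alpha_k\langle(\gamma V - \mu F)p, v_k - p\rangle \\
&\le \alpha_k\tau\frac{(\gamma l)^2}{\tau^2}\|x_k - p\|^2 + \gamma_k\|x_k - p\|^2 + (1 - \gamma_k - \alpha_k\tau)\|x_k - p\|^2 + 2\alpha_k\langle(\gamma V - \mu F)p, v_k - p\rangle \\
&= \Big(1 - \alpha_k\frac{\tau^2 - (\gamma l)^2}{\tau}\Big)\|x_k - p\|^2 + 2\alpha_k\langle(\gamma V - \mu F)p, v_k - p\rangle \\
&\le \|x_k - p\|^2 + 2\alpha_k\langle(\gamma V - \mu F)p, v_k - p\rangle.
\end{aligned} \tag{3.19}$$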

Combining (3.18) and (3.19), we get

kxk+1 − pk2

≤ αk ku − pk2 + βk kxk − pk2 + γk kvk − pk2 + 2γk ¯k kzk − pk

+ γk ¯2k − γk (1 − λk L)(kvk − yk k2 + kyk − zk k2 )

≤ αk ku − pk2 + βk kxk − pk2 + γk [kxk − pk2 + 2αk h(γV − µF )p, vk − pi]

+ 2γk ¯k kzk − pk + γk ¯2k − γk (1 − λk L)(kvk − yk k2 + kyk − zk k2 )

≤ αk ku − pk2 + kxk − pk2 + 2αk k(γV − µF )pkkvk − pk + 2γk ¯k kzk − pk

+ γk ¯2k − γk (1 − λk L)(kvk − yk k2 + kyk − zk k2 ),

which immediately yields

γk (1 − λk L)(kvk − yk k2 + kyk − zk k2 )

≤ αk ku − pk2 + kxk − pk2 − kxk+1 − pk2 + 2αk k(γV − µF )pkkvk − pk

+ 2γk ¯k kzk − pk + γk ¯2k

≤ αk ku − pk2 + kxk − xk+1 k(kxk − pk + kxk+1 − pk) + 2αk k(γV − µF )pkkvk − pk

+ 2γk ¯k kzk − pk + γk ¯2k .

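Since $\alpha_k + \beta_k + \gamma_k = 1$, $\alpha_k \to 0$, $\beta_k \to \xi \in (0, \tfrac{1}{2}]$, $\bar\epsilon_k \to 0$, $\lambda_k \to 0$ and $\|x_k - x_{k+1}\| \to 0$, from the boundedness of $\{x_k\}$, $\{v_k\}$ and $\{z_k\}$ we obtain

$$\lim_{k\to\infty}\|v_k - y_k\| = 0 \quad \text{and} \quad \lim_{k\to\infty}\|y_k - z_k\| = 0.$$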

In addition, it is clear from αk → 0 that as k → ∞,

kvk − xk k = kαk γV xk + γk xk + ((1 − γk )I − αk µF )xk − xk k

= kαk (γV xk − µF xk )k → 0.


(3.20)

That is,

lim kvk − xk k = 0.

(3.21)

k→∞

Taking into consideration that kvk − zk k ≤ kvk − yk k + kyk − zk k and kzk − xk k ≤ kzk − vk k +

kvk − xk k, we deduce from (3.20) and (3.21) that

lim kvk − zk k = 0 and

k→∞

lim kzk − xk k = 0.

(3.22)

k→∞

It is clear from (3.20) and (3.22) that

lim kxk − yk k = 0.

(3.23)

k→∞

Since A is β-inverse-strongly monotone, it is known from (2.1) that I − λA is a nonexpansive

mapping for 0 < λ ≤ 2β. Again by Proposition 2.1 (iii) and Lemma 3.1 we have

kPVI(C,B) (yk − λAyk ) − xk+1 k

≤ kPVI(C,B) (yk − λAyk ) − PVI(C,B) (zk − λAzk )k

+ kPVI(C,B) (zk − λAzk ) − xk+1 k

≤ k(I − λA)yk − (I − λA)zk k + kPVI(C,B) (zk − λAzk ) − xk+1 k

≤ kyk − zk k + αk kPVI(C,B) (zk − λAzk ) − uk

+ βk kPVI(C,B) (zk − λAzk ) − xk k + γk ¯k

≤ kyk − zk k + αk kPVI(C,B) (zk − λAzk ) − uk + ¯k

+ βk kPVI(C,B) (zk − λAzk ) − PVI(C,B) (yk − λAyk )k

+ βk kPVI(C,B) (yk − λAyk ) − yk k + βk kyk − xk k

≤ kyk − zk k + αk kPVI(C,B) (zk − λAzk ) − uk

+ ¯k + βk k(I − λA)zk − (I − λA)yk k

+ βk kPVI(C,B) (yk − λAyk ) − yk k + βk kyk − xk k

≤ kyk − zk k + αk kPVI(C,B) (zk − λAzk ) − uk + ¯k

+ βk kzk − yk k + βk kPVI(C,B) (yk − λAyk ) − yk k + βk kyk − xk k.

Consequently, from (3.24), we have

kPVI(C,B) (yk − λAyk ) − yk k

≤ kPVI(C,B) (yk − λAyk ) − xk+1 k + kxk+1 − xk k + kxk − yk k

≤ kyk − zk k + αk kPVI(C,B) (zk − λAzk ) − uk + ¯k

+ βk kzk − yk k + βk kPVI(C,B) (yk − λAyk ) − yk k + βk kyk − xk k

+ kxk+1 − xk k + kxk − yk k

= (1 + βk )kyk − zk k + αk kPVI(C,B) (zk − λAzk ) − uk + ¯k

+ βk kPVI(C,B) (yk − λAyk ) − yk k + (1 + βk )kyk − xk k + kxk+1 − xk k,

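which immediately yields

$$\begin{aligned}
\|P_{\mathrm{VI}(C,B)}(y_k - \lambda Ay_k) - y_k\| &\le \frac{1 + \beta_k}{1 - \beta_k}\|y_k - z_k\| + \frac{\alpha_k}{1 - \beta_k}\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k) - u\| + \frac{\bar\epsilon_k}{1 - \beta_k} \\
&\quad + \frac{1 + \beta_k}{1 - \beta_k}\|y_k - x_k\| + \frac{1}{1 - \beta_k}\|x_{k+1} - x_k\|.
\end{aligned} \tag{3.24}$$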

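Since $\alpha_k + \beta_k + \gamma_k = 1$, $\alpha_k \to 0$, $\beta_k \to \xi \in (0, \tfrac{1}{2}]$, $\bar\epsilon_k \to 0$, $\|y_k - z_k\| \to 0$, $\|y_k - x_k\| \to 0$ and $\|x_{k+1} - x_k\| \to 0$ (due to (3.15), (3.20) and (3.23)), we conclude that

$$\lim_{k\to\infty}\|P_{\mathrm{VI}(C,B)}(y_k - \lambda Ay_k) - y_k\| = 0. \tag{3.25}$$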

From (2.1) and Proposition 2.1 (iii), it follows that

kPVI(C,B) (zk − λAzk ) − zk k

≤ kPVI(C,B) (zk − λAzk ) − PVI(C,B) (yk − λAyk )k

+ kPVI(C,B) (yk − λAyk ) − yk k + kyk − zk k

≤ k(I − λA)zk − (I − λA)yk k + kPVI(C,B) (yk − λAyk ) − yk k + kyk − zk k

≤ kzk − yk k + kPVI(C,B) (yk − λAyk ) − yk k + kyk − zk k

≤ kPVI(C,B) (yk − λAyk ) − yk k + 2kyk − zk k.

Utilizing the last inequality we obtain from (3.20) and (3.25) that

lim kPVI(C,B) (zk − λAzk ) − zk k = 0.

k→∞

(3.26)

This completes the proof. □

Theorem 3.1. Suppose that the hypotheses (H1)-(H4) hold and that $\mathrm{VI}(\mathrm{VI}(C, B), A) \ne \emptyset$. Then, provided $\|x_{k+1} - x_k\| + \bar\epsilon_k = o(\alpha_k)$, the two sequences $\{x_k\}$ and $\{z_k\}$ in Algorithm 3.1 converge strongly to the same point $x^* \in \mathrm{VI}(\mathrm{VI}(C, B), A)$, which is the unique solution to the VIP

$$\langle(I + \bar\xi\mu F - \bar\xi\gamma V)x^* - u, p - x^*\rangle \ge 0, \quad \forall p \in \mathrm{VI}(\mathrm{VI}(C, B), A), \tag{3.27}$$

where $\bar\xi = 1 - \xi \in [\tfrac{1}{2}, 1)$.

Proof. Note that Lemma 3.3 shows the boundedness of $\{x_k\}$. Since $H$ is reflexive, $\{x_k\}$ has at least one weakly convergent subsequence. First, let us assert that $\omega_w(x_k) \subset \mathrm{VI}(\mathrm{VI}(C,B),A)$. As a matter of fact, take an arbitrary $w \in \omega_w(x_k)$. Then there exists a subsequence $\{x_{k_i}\}$ of $\{x_k\}$ such that $x_{k_i} \rightharpoonup w$. From (3.23), we know that $y_{k_i} \rightharpoonup w$. It is easy to see that the mapping $P_{\mathrm{VI}(C,B)}(I - \lambda A) : C \to \mathrm{VI}(C,B) \subset C$ is nonexpansive, because $P_{\mathrm{VI}(C,B)}$ is nonexpansive and $I - \lambda A$ is nonexpansive for a $\beta$-inverse-strongly monotone mapping $A$ with $0 < \lambda \le 2\beta$. So, utilizing Lemma 2.5 and (3.25), we obtain

$$
w = P_{\mathrm{VI}(C,B)}(w - \lambda Aw),
$$

which leads to $w \in \mathrm{VI}(\mathrm{VI}(C,B),A)$. Thus, the assertion is valid.
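The nonexpansivity of $I - \lambda A$ for $0 < \lambda \le 2\beta$ can be checked numerically on a toy operator. The sketch below is only an illustration under stated assumptions: it takes the hypothetical diagonal operator $Ax = (d_i x_i)$ with $d_i \ge 0$ on $\mathbb{R}^3$, which is $\beta$-inverse-strongly monotone with $\beta = 1/\max_i d_i$. The names `forward_step` and `worst_ratio` are introduced here and do not come from the paper.

```python
import random

def norm(v):
    # Euclidean norm of a list of floats
    return sum(t * t for t in v) ** 0.5

def forward_step(d, lam, x):
    # (I - lam*A)x for the assumed diagonal operator A x = (d_i * x_i)
    return [xi - lam * di * xi for di, xi in zip(d, x)]

def worst_ratio(d, lam, trials=2000, seed=0):
    # Largest observed ||(I - lam*A)x - (I - lam*A)y|| / ||x - y|| over random pairs
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x = [rng.uniform(-1.0, 1.0) for _ in d]
        y = [rng.uniform(-1.0, 1.0) for _ in d]
        den = norm([a - b for a, b in zip(x, y)])
        if den == 0.0:
            continue
        tx, ty = forward_step(d, lam, x), forward_step(d, lam, y)
        num = norm([a - b for a, b in zip(tx, ty)])
        worst = max(worst, num / den)
    return worst

d = [0.5, 1.0, 2.0]          # assumed spectrum of the toy operator
beta = 1.0 / max(d)          # inverse-strong-monotonicity constant of this toy A
print(worst_ratio(d, 2.0 * beta))   # lam = 2*beta: ratio should not exceed 1
print(worst_ratio(d, 4.0 * beta))   # lam = 4*beta: ratio can exceed 1
```

For this toy operator the ratio stays at or below 1 when $\lambda = 2\beta$ and exceeds 1 once $\lambda$ is doubled, which is consistent with the restriction $\lambda \le 2\beta$ used in the proof.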

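Also, note that $0 \le \gamma l < \tau$ and

$$
\begin{aligned}
\mu\eta \ge \tau &\iff \mu\eta \ge 1 - \sqrt{1 - \mu(2\eta - \mu\kappa^2)} \\
&\iff \sqrt{1 - \mu(2\eta - \mu\kappa^2)} \ge 1 - \mu\eta \\
&\iff 1 - 2\mu\eta + \mu^2\kappa^2 \ge 1 - 2\mu\eta + \mu^2\eta^2 \\
&\iff \kappa^2 \ge \eta^2 \\
&\iff \kappa \ge \eta.
\end{aligned}
$$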
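The chain of equivalences above can be sanity-checked numerically. The following sketch reads $\tau = 1 - \sqrt{1 - \mu(2\eta - \mu\kappa^2)}$ off the first equivalence and assumes the usual parameter range $0 < \mu < 2\eta/\kappa^2$, which is not restated in this part of the proof. The helper names `tau` and `check_grid` are hypothetical.

```python
import math

def tau(mu, eta, kappa):
    # tau = 1 - sqrt(1 - mu*(2*eta - mu*kappa^2)), assumed for 0 < mu < 2*eta/kappa^2
    inside = 1.0 - mu * (2.0 * eta - mu * kappa ** 2)
    # Clamp tiny negative values caused by floating-point rounding
    return 1.0 - math.sqrt(max(inside, 0.0))

def check_grid(steps=20):
    # Verify mu*eta >= tau on a grid of parameters with kappa >= eta
    ok = True
    for i in range(1, steps + 1):
        eta = i / steps                              # eta in (0, 1]
        for j in range(0, steps + 1):
            kappa = eta * (1.0 + j / steps)          # kappa in [eta, 2*eta]
            for m in range(1, steps):
                mu = (m / steps) * 2.0 * eta / kappa ** 2   # 0 < mu < 2*eta/kappa^2
                if mu * eta < tau(mu, eta, kappa) - 1e-12:
                    ok = False
    return ok

print(check_grid())
```

On this grid the inequality $\mu\eta \ge \tau$ holds at every sampled point with $\kappa \ge \eta$, in line with the last equivalence.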


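It is clear that

$$
\langle (\mu F - \gamma V)x - (\mu F - \gamma V)y,\; x - y \rangle \ge (\mu\eta - \gamma l)\|x - y\|^2, \quad \forall x, y \in H.
$$

Hence, it follows from $0 \le \gamma l < \tau \le \mu\eta$ that $\mu F - \gamma V$ is $(\mu\eta - \gamma l)$-strongly monotone. In the meantime, it is clear that $\mu F - \gamma V$ is Lipschitzian with constant $\mu\kappa + \gamma l > 0$. We define the mapping $\Gamma : H \to H$ as below

$$
\Gamma x = (\mu F - \gamma V)x + \frac{1}{\bar\xi}(x - u), \quad \forall x \in H,
$$

where $u \in H$ and $\bar\xi = 1 - \xi \in [\tfrac{1}{2}, 1)$. Then it is easy to see that $\Gamma$ is $(\mu\eta - \gamma l + \frac{1}{\bar\xi})$-strongly monotone and Lipschitzian with constant $\mu\kappa + \gamma l + \frac{1}{\bar\xi} > 0$. Thus, there exists a unique solution $x^* \in \mathrm{VI}(\mathrm{VI}(C,B),A)$ to the VIP

$$
\Big\langle (\mu F - \gamma V)x^* + \frac{1}{\bar\xi}(x^* - u),\; p - x^* \Big\rangle \ge 0, \quad \forall p \in \mathrm{VI}(\mathrm{VI}(C,B),A). \tag{3.28}
$$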

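Next, let us show that $x_k \rightharpoonup x^*$. Indeed, take an arbitrary $p \in \mathrm{VI}(\mathrm{VI}(C,B),A)$. In terms of Algorithm 3.1 and Lemma 2.1, we conclude from (3.3), (3.5) and the $\beta$-inverse-strong monotonicity of $A$ with $\lambda \le 2\beta$, that

$$
\begin{aligned}
\|x_{k+1} - p\|^2 &= \|\alpha_k u + \beta_k x_k + \gamma_k h_k - p\|^2 \\
&\le \|\beta_k(x_k - p) + \gamma_k(h_k - p)\|^2 + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&\le \beta_k\|x_k - p\|^2 + \gamma_k\|h_k - p\|^2 + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&\le \beta_k\|x_k - p\|^2 + \gamma_k\big(\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k) - p\| + \bar\epsilon_k\big)^2 + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&= \beta_k\|x_k - p\|^2 + \gamma_k\big(\|P_{\mathrm{VI}(C,B)}(z_k - \lambda Az_k) - P_{\mathrm{VI}(C,B)}(p - \lambda Ap)\| + \bar\epsilon_k\big)^2 \\
&\quad + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&\le \beta_k\|x_k - p\|^2 + \gamma_k\big(\|(I - \lambda A)z_k - (I - \lambda A)p\| + \bar\epsilon_k\big)^2 + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&\le \beta_k\|x_k - p\|^2 + \gamma_k\big(\|z_k - p\| + \bar\epsilon_k\big)^2 + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&= \beta_k\|x_k - p\|^2 + \gamma_k\|z_k - p\|^2 + \gamma_k\bar\epsilon_k(2\|z_k - p\| + \bar\epsilon_k) + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&\le \beta_k\|x_k - p\|^2 + \gamma_k\|v_k - p\|^2 + \gamma_k\bar\epsilon_k(2\|z_k - p\| + \bar\epsilon_k) + 2\alpha_k\langle u - p, x_{k+1} - p\rangle.
\end{aligned}
\tag{3.29}
$$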

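Combining (3.19) and (3.29), we get

$$
\begin{aligned}
\|x_{k+1} - p\|^2
&\le \beta_k\|x_k - p\|^2 + \gamma_k\|v_k - p\|^2 + \gamma_k\bar\epsilon_k(2\|z_k - p\| + \bar\epsilon_k) + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&\le \beta_k\|x_k - p\|^2 + \gamma_k\Big[\Big(1 - \alpha_k\frac{\tau^2 - (\gamma l)^2}{\tau}\Big)\|x_k - p\|^2 + 2\alpha_k\langle(\gamma V - \mu F)p, v_k - p\rangle\Big] \\
&\quad + \gamma_k\bar\epsilon_k(2\|z_k - p\| + \bar\epsilon_k) + 2\alpha_k\langle u - p, x_{k+1} - p\rangle \\
&= \Big(\beta_k + \gamma_k - \alpha_k\gamma_k\frac{\tau^2 - (\gamma l)^2}{\tau}\Big)\|x_k - p\|^2 + 2\alpha_k\gamma_k\langle(\gamma V - \mu F)p, v_k - x_{k+1}\rangle \\
&\quad + \gamma_k\bar\epsilon_k(2\|z_k - p\| + \bar\epsilon_k) + 2\alpha_k\gamma_k\Big\langle(\gamma V - \mu F)p + \frac{1}{\gamma_k}(u - p), x_{k+1} - p\Big\rangle \\
&\le \Big(1 - \alpha_k\gamma_k\frac{\tau^2 - (\gamma l)^2}{\tau}\Big)\|x_k - p\|^2 + 2\alpha_k\|(\gamma V - \mu F)p\|(\|v_k - x_k\| + \|x_k - x_{k+1}\|) \\
&\quad + \gamma_k\bar\epsilon_k(2\|z_k - p\| + \bar\epsilon_k) + 2\alpha_k\gamma_k\Big\langle(\gamma V - \mu F)p + \frac{1}{\gamma_k}(u - p), x_{k+1} - p\Big\rangle \\
&\le \|x_k - p\|^2 + 2\alpha_k\|(\gamma V - \mu F)p\|(\|v_k - x_k\| + \|x_k - x_{k+1}\|) \\
&\quad + \gamma_k\bar\epsilon_k(2\|z_k - p\| + \bar\epsilon_k) + 2\alpha_k\gamma_k\Big\langle(\gamma V - \mu F)p + \frac{1}{\gamma_k}(u - p), x_{k+1} - p\Big\rangle,
\end{aligned}
\tag{3.30}
$$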

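which immediately yields

$$
\begin{aligned}
&\Big\langle(\mu F - \gamma V)p + \frac{1}{\gamma_k}(p - u), x_{k+1} - p\Big\rangle \\
&\quad\le \frac{1}{2\alpha_k\gamma_k}\big(\|x_k - p\|^2 - \|x_{k+1} - p\|^2\big) + \frac{1}{\gamma_k}\|(\gamma V - \mu F)p\|(\|v_k - x_k\| + \|x_k - x_{k+1}\|) \\
&\qquad + \frac{\bar\epsilon_k}{2\alpha_k}(2\|z_k - p\| + \bar\epsilon_k) \\
&\quad\le \frac{\|x_k - x_{k+1}\|}{2\alpha_k\gamma_k}\big(\|x_k - p\| + \|x_{k+1} - p\|\big) + \frac{1}{\gamma_k}\|(\gamma V - \mu F)p\|(\|v_k - x_k\| + \|x_k - x_{k+1}\|) \\
&\qquad + \frac{\bar\epsilon_k}{2\alpha_k}(2\|z_k - p\| + \bar\epsilon_k).
\end{aligned}
\tag{3.31}
$$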

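Since for any $w \in \omega_w(x_k)$ there exists a subsequence $\{x_{k_i}\}$ of $\{x_k\}$ such that $x_{k_i} \rightharpoonup w$, we deduce from (3.21), (3.31), $\frac{1}{\gamma_k} \to \frac{1}{\bar\xi}$ and $\|x_{k+1} - x_k\| + \bar\epsilon_k = o(\alpha_k)$ that

$$
\begin{aligned}
&\Big\langle(\mu F - \gamma V)p + \frac{1}{\bar\xi}(p - u), w - p\Big\rangle \\
&\quad= \lim_{i\to\infty}\Big\langle(\mu F - \gamma V)p + \frac{1}{\gamma_{k_i}}(p - u), x_{k_i} - p\Big\rangle \\
&\quad\le \limsup_{k\to\infty}\Big\langle(\mu F - \gamma V)p + \frac{1}{\gamma_k}(p - u), x_k - p\Big\rangle \\
&\quad= \limsup_{k\to\infty}\Big\langle(\mu F - \gamma V)p + \frac{1}{\gamma_k}(p - u), x_{k+1} - p\Big\rangle \\
&\quad\le \limsup_{k\to\infty}\frac{\|x_k - x_{k+1}\|}{2\alpha_k\gamma_k}\big(\|x_k - p\| + \|x_{k+1} - p\|\big) \\
&\qquad + \limsup_{k\to\infty}\frac{1}{\gamma_k}\|(\gamma V - \mu F)p\|(\|v_k - x_k\| + \|x_k - x_{k+1}\|) + \limsup_{k\to\infty}\frac{\bar\epsilon_k}{2\alpha_k}(2\|z_k - p\| + \bar\epsilon_k) \\
&\quad= 0.
\end{aligned}
$$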

So, it follows that

$$
\Big\langle(\mu F - \gamma V)p + \frac{1}{\bar\xi}(p - u),\; p - w\Big\rangle \ge 0, \quad \forall p \in \mathrm{VI}(\mathrm{VI}(C,B),A).
$$

Since $w \in \omega_w(x_k) \subset \mathrm{VI}(\mathrm{VI}(C,B),A)$, by Minty's lemma [7] we have

$$
\Big\langle(\mu F - \gamma V)w + \frac{1}{\bar\xi}(w - u),\; p - w\Big\rangle \ge 0, \quad \forall p \in \mathrm{VI}(\mathrm{VI}(C,B),A);
$$

that is, $w$ is a solution of VIP (3.28). Utilizing the uniqueness of solutions of VIP (3.28), we get $w = x^*$, which implies that $\omega_w(x_k) = \{x^*\}$. Therefore, $\{x_k\}$ converges weakly to the unique solution $x^* \in \mathrm{VI}(\mathrm{VI}(C,B),A)$ of VIP (3.28).

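Finally, let us show that $\|x_k - x^*\| \to 0$ as $k \to \infty$. Indeed, utilizing (3.30) with $p = x^*$, we have

$$
\begin{aligned}
\|x_{k+1} - x^*\|^2
&\le \Big(1 - \alpha_k\gamma_k\frac{\tau^2 - (\gamma l)^2}{\tau}\Big)\|x_k - x^*\|^2 + 2\alpha_k\|(\gamma V - \mu F)x^*\|(\|v_k - x_k\| + \|x_k - x_{k+1}\|) \\
&\quad + \gamma_k\bar\epsilon_k(2\|z_k - x^*\| + \bar\epsilon_k) + 2\alpha_k\gamma_k\Big\langle(\gamma V - \mu F)x^* + \frac{1}{\gamma_k}(u - x^*), x_{k+1} - x^*\Big\rangle \\
&= \Big(1 - \alpha_k\gamma_k\frac{\tau^2 - (\gamma l)^2}{\tau}\Big)\|x_k - x^*\|^2 + \alpha_k\gamma_k\frac{\tau^2 - (\gamma l)^2}{\tau} \\
&\quad \times \frac{\tau}{\tau^2 - (\gamma l)^2}\Big[\frac{2}{\gamma_k}\|(\gamma V - \mu F)x^*\|(\|v_k - x_k\| + \|x_k - x_{k+1}\|) \\
&\qquad + \frac{\bar\epsilon_k}{\alpha_k}(2\|z_k - x^*\| + \bar\epsilon_k) + 2\Big\langle(\gamma V - \mu F)x^* + \frac{1}{\gamma_k}(u - x^*), x_{k+1} - x^*\Big\rangle\Big].
\end{aligned}
\tag{3.32}
$$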

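Since $\alpha_k \to 0$, $\alpha_k + \beta_k + \gamma_k = 1$, $\sum_{k=0}^{\infty}\alpha_k = \infty$, $\beta_k \to \xi \in (0, \tfrac{1}{2}]$, $\bar\epsilon_k = o(\alpha_k)$ and $x_k \rightharpoonup x^*$, from (3.15) and (3.21) we conclude that $\sum_{k=0}^{\infty}\alpha_k\gamma_k\frac{\tau^2 - (\gamma l)^2}{\tau} = \infty$ and

$$
\begin{aligned}
\limsup_{k\to\infty}\Big\{\frac{\tau}{\tau^2 - (\gamma l)^2}\Big[&\frac{2}{\gamma_k}\|(\gamma V - \mu F)x^*\|(\|v_k - x_k\| + \|x_k - x_{k+1}\|) + \frac{\bar\epsilon_k}{\alpha_k}(2\|z_k - x^*\| + \bar\epsilon_k) \\
&+ 2\Big\langle(\gamma V - \mu F)x^* + \frac{1}{\gamma_k}(u - x^*), x_{k+1} - x^*\Big\rangle\Big]\Big\} \le 0.
\end{aligned}
$$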

Therefore, applying Lemma 2.6 to (3.32), we obtain that $\|x_k - x^*\| \to 0$ as $k \to \infty$. Utilizing (3.23) we also obtain that $\|z_k - x^*\| \to 0$ as $k \to \infty$. This completes the proof. $\Box$
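The last step of the proof rests on a recursion of the form $a_{k+1} \le (1 - s_k)a_k + s_k b_k$, here with $a_k = \|x_k - x^*\|^2$ and $s_k = \alpha_k\gamma_k\frac{\tau^2 - (\gamma l)^2}{\tau}$. The sketch below assumes that Lemma 2.6 is the usual criterion ($s_k \in [0,1]$, $\sum_k s_k = \infty$ and $\limsup_k b_k \le 0$ imply $a_k \to 0$), since its statement does not appear in this part of the paper. The sequences chosen and the helper `iterate` are hypothetical and only illustrate why the divergence of $\sum_k s_k$ matters.

```python
def iterate(s, b, a0=1.0, n=100000):
    # Run a_{k+1} = (1 - s(k)) * a_k + s(k) * b(k), the equality case of the recursion
    a = a0
    for k in range(n):
        a = (1.0 - s(k)) * a + s(k) * b(k)
    return a

# Non-summable weights s_k = 1/(k+2) with b_k = 1/(k+1) -> 0: a_k decays towards 0.
a_divergent_sum = iterate(lambda k: 1.0 / (k + 2), lambda k: 1.0 / (k + 1))

# Summable weights s_k = 1/(k+2)^2 with b_k = 0: a_k stalls at a positive value.
a_summable = iterate(lambda k: 1.0 / (k + 2) ** 2, lambda k: 0.0)

print(a_divergent_sum, a_summable)
```

With non-summable weights the iterate becomes small, whereas with summable weights it stays near $1/2$ (the infinite product $\prod_{m \ge 2}(1 - 1/m^2)$), so the condition $\sum_k \alpha_k\gamma_k\frac{\tau^2 - (\gamma l)^2}{\tau} = \infty$ cannot be dropped.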

Remark 3.1. Theorem 3.1 extends, improves, supplements and develops Anh, Kim and Muu [40, Theorem 3.1] in the following aspects.

(i) The problem of finding a solution $x^*$ of Problem 3.1 (BVIP) in Theorem 3.1 is very different from the problem of finding a solution $x^*$ of Problem AKM, because our BVIP generalizes Anh, Kim and Muu's BVIP in [40, Theorem 3.1] from the space $\mathbb{R}^n$ to a general Hilbert space, and extends their BVIP with a unique solution to the setting of the BVIP with multiple solutions.

(ii) Algorithm 2.1 in [40] is extended to develop Algorithm 3.1 by virtue of Mann's iteration method, the hybrid steepest-descent method and the viscosity approximation method. Algorithm 3.1 is more advantageous and more flexible than Algorithm 2.1 in [40] because it solves the BVIP with multiple solutions, i.e., Problem 3.1.

(iii) The proof of our Theorem 3.1 is very different from the proof of Anh, Kim and Muu's Theorem 3.1 [40], because it makes use of the nonexpansivity of the mapping $I - \lambda A$ for an inverse-strongly monotone mapping $A$ (see inequality (2.1)), the contraction coefficient estimate for the composite mapping $S^\lambda$ (see Lemma 2.4), the demiclosedness principle for nonexpansive mappings (see Lemma 2.5), the convergence criteria for nonnegative real sequences (see Lemma 2.6) and Suzuki's lemma (see Lemma 3.4).

References

[1] A.B. Bakushinskii, A.V. Goncharskii, Ill-Posed Problems: Theory and Applications, Kluwer, Dordrecht, 1994.

[2] G.M. Korpelevich, The extragradient method for finding saddle points and other problems, Matecon. 12 (1976) 747-756.

[3] F. Facchinei, J.S. Pang, Finite-Dimensional Variational Inequalities and Complementarity Problems, Springer, New York, 2003.

[4] M.H. Xu, M. Li, C.C. Yang, Neural networks for a class of bi-level variational inequalities, J. Glob. Optim. 44 (2009) 535-552.

[5] P.G. Hung, L.D. Muu, The Tikhonov regularization method extended to pseudomonotone equilibrium problems, Nonlinear Anal. 74 (2011) 6121-6129.

[6] R. Glowinski, Numerical Methods for Nonlinear Variational Problems, Springer-Verlag, New York, 1984.


[7] W. Takahashi, Nonlinear Functional Analysis, Yokohama Publishers, Yokohama, Japan, 2000.

[8] L.C. Ceng, Q.H. Ansari, J.C. Yao, Some iterative methods for finding fixed points and for solving constrained convex minimization problems, Nonlinear Anal. 74 (2011) 5286-5302.

[9] J.T. Oden, Qualitative Methods in Nonlinear Mechanics, Prentice-Hall, Englewood Cliffs, NJ, 1986.

[10] N. Nadezhkina, W. Takahashi, Weak convergence theorem by an extragradient method for nonexpansive mappings and monotone mappings, J. Optim. Theory Appl. 128 (2006) 191-201.

[11] L.C. Zeng, J.C. Yao, Strong convergence theorem by an extragradient method for fixed point problems and variational inequality problems, Taiwanese J. Math. 10 (5) (2006) 1293-1303.

[12] L.C. Ceng, J.C. Yao, Strong convergence theorems for variational inequalities and fixed point problems of asymptotically strict pseudocontractive mappings in the intermediate sense, Acta Appl. Math. 115 (2011) 167-191.

[13] J.L. Lions, Quelques Méthodes de Résolution des Problèmes aux Limites Non Linéaires, Dunod, Paris, 1969.

[14] L.C. Ceng, Q.H. Ansari, J.C. Yao, Relaxed extragradient iterative methods for variational inequalities, Appl. Math. Comput. 218 (2011) 1112-1123.

[15] Y. Yao, Y.C. Liou, S.M. Kang, Approach to common elements of variational inequality problems and fixed point problems via a relaxed extragradient method, Comput. Math. Appl. 59 (2010) 3472-3480.

[16] S. Huang, Hybrid extragradient methods for asymptotically strict pseudo-contractions in the intermediate sense and variational inequality problems, Optimization 60 (2011) 739-754.

[17] I.V. Konnov, Regularization methods for nonmonotone equilibrium problems, J. Nonlinear Convex Anal. 10 (2009) 93-101.

[18] L.C. Ceng, A. Petrusel, Relaxed extragradient-like method for general system of generalized mixed equilibria and fixed point problem, Taiwanese J. Math. 16 (2) (2012) 445-478.

[19] H.K. Xu, T.H. Kim, Convergence of hybrid steepest-descent methods for variational inequalities, J. Optim. Theory Appl. 119 (1) (2003) 185-201.

[20] F. Giannessi, A. Maugeri, P.M. Pardalos, Equilibrium Problems: Nonsmooth Optimization and Variational Inequality Models, Kluwer Academic Publishers, Dordrecht, 2004.

[21] I.V. Konnov, Regularization methods for nonmonotone equilibrium problems, J. Nonlinear Convex Anal. 10 (2009) 93-101.

[22] I.V. Konnov, S. Kum, Descent methods for mixed variational inequalities in a Hilbert space, Nonlinear Anal. 47 (2001) 561-572.

[23] Y. Yao, Y.C. Liou, J.C. Yao, An extragradient method for fixed point problems and variational inequality problems, J. Inequal. Appl. 2007, Art. ID 38752, 12 pp.


[24] L.C. Ceng, C.Y. Wang, J.C. Yao, Strong convergence theorems by a relaxed extragradient method for a general system of variational inequalities, Math. Methods Oper. Res. 67 (3) (2008) 375-390.

[25] L.C. Ceng, Q.H. Ansari, S. Schaible, Hybrid extragradient-like methods for generalized mixed equilibrium problems, system of generalized equilibrium problems and optimization problems, J. Glob. Optim. 53 (2012) 69-96.

[26] L.C. Zeng, J.C. Yao, Modified combined relaxation method for general monotone equilibrium problems in Hilbert spaces, J. Optim. Theory Appl. 131 (3) (2006) 469-483.

[27] I.V. Konnov, D.A. Dyabilkin, Nonmonotone equilibrium problems: coercivity conditions and weak regularization, J. Glob. Optim. 49 (2011) 575-587.

[28] Y. Yao, M.A. Noor, Y.C. Liou, A new hybrid iterative algorithm for variational inequalities, Appl. Math. Comput. 216 (3) (2010) 822-829.

[29] Z.Q. Luo, J.S. Pang, D. Ralph, Mathematical Programs with Equilibrium Constraints, Cambridge University Press, Cambridge, 1996.

[30] E. Zeidler, Nonlinear Functional Analysis and Its Applications, Springer-Verlag, New York, 1985.

[31] I. Yamada, The hybrid steepest-descent method for the variational inequality problems over the intersection of the fixed-point sets of nonexpansive mappings, in: Inherently Parallel Algorithms in Feasibility and Optimization and Their Applications, D. Butnariu, Y. Censor, and S. Reich, eds., North-Holland, Amsterdam, 2001, pp. 473-504.

[32] T. Suzuki, Strong convergence of Krasnoselskii and Mann's type sequences for one-parameter nonexpansive semigroups without Bochner integrals, J. Math. Anal. Appl. 305 (2005) 227-239.

[33] L.C. Ceng, Q.H. Ansari, M.M. Wong, J.C. Yao, Mann type hybrid extragradient method for variational inequalities, variational inclusions and fixed point problems, Fixed Point Theory 13 (2) (2012) 403-422.

[34] L.C. Ceng, Q.H. Ansari, J.C. Yao, An extragradient method for solving split feasibility and fixed point problems, Comput. Math. Appl. 64 (4) (2012) 633-642.

[35] L.C. Ceng, Q.H. Ansari, J.C. Yao, Relaxed extragradient methods for finding minimum-norm solutions of the split feasibility problem, Nonlinear Anal. 75 (4) (2012) 2116-2125.

[36] M. Solodov, An explicit descent method for bilevel convex optimization, J. Convex Anal. 14 (2007) 227-237.

[37] L.C. Ceng, N. Hadjisavvas, N.C. Wong, Strong convergence theorem by a hybrid extragradient-like approximation method for variational inequalities and fixed point problems, J. Glob. Optim. 46 (2010) 635-646.

[38] L.C. Ceng, J.C. Yao, A relaxed extragradient-like method for a generalized mixed equilibrium problem, a general system of generalized equilibria and a fixed point problem, Nonlinear Anal. 72 (2010) 1922-1937.


[39] P.N. Anh, L.D. Muu, N.V. Hien, J.J. Strodiot, Using the Banach contraction principle to implement the proximal point method for multivalued monotone variational inequalities, J. Optim. Theory Appl. 124 (2005) 285-306.

[40] P.N. Anh, J.K. Kim, L.D. Muu, An extragradient algorithm for solving bilevel pseudomonotone variational inequalities, J. Glob. Optim. 52 (2012) 627-639.

[41] S. Takahashi, W. Takahashi, Viscosity approximation methods for equilibrium problems and fixed point problems in Hilbert spaces, J. Math. Anal. Appl. 331 (2007) 506-515.
