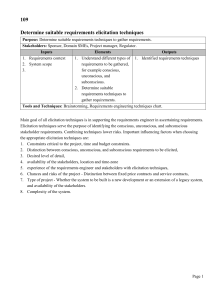

Document 10909117
