Document 10901342

advertisement

Hindawi Publishing Corporation

Journal of Applied Mathematics

Volume 2012, Article ID 189753, 13 pages

doi:10.1155/2012/189753

Research Article

Approximation Analysis of Gradient Descent

Algorithm for Bipartite Ranking

Hong Chen,1 Fangchao He,2, 3 and Zhibin Pan1

1

College of Science, Huazhong Agricultural University, Wuhan 430070, China

School of Science, Hubei University of Technology, Wuhan 430068, China

3

Faculty of Mathematics and Computer Science, Hubei University,

Wuhan 430062, China

2

Correspondence should be addressed to Hong Chen, chenhongml@163.com

Received 9 March 2012; Revised 12 May 2012; Accepted 26 May 2012

Academic Editor: Yuesheng Xu

Copyright q 2012 Hong Chen et al. This is an open access article distributed under the Creative

Commons Attribution License, which permits unrestricted use, distribution, and reproduction in

any medium, provided the original work is properly cited.

We introduce a gradient descent algorithm for bipartite ranking with general convex losses. The

implementation of this algorithm is simple, and its generalization performance is investigated.

Explicit learning rates are presented in terms of the suitable choices of the regularization parameter

and the step size. The result fills the theoretical gap in learning rates for ranking problem with

general convex losses.

1. Introduction

In this paper we consider a gradient descent algorithm for bipartite ranking generated from

Tikhonov regularization scheme with general convex losses and reproducing kernel Hilbert

spaces RKHS.

Let X be a compact metric space and Y {−1, 1}. In bipartite ranking problem, the

− n

−

learner is given positive samples S {xi }m

i1 and negative samples S {xi }i1 , which are

randomly independent drawn from ρ and ρ− , respectively. Given training set S : S , S− ,

the goal of bipartite ranking is to learn a real-valued ranking function f : X → R that ranks

future positive samples higher than negative ones.

The expected loss incurred by a ranking function f on a pair of instances x , x− is

I{fx −fx− ≤0} , where I{t} is 1 if t is true and 0 otherwise. However, due to the nonconvexity of

I, the empirical minimization method based on I is NP-hard. Thus, we consider replacing I

by a convex upper loss function φfx − fx− . Typical choices of φ include the hinge loss,

the least square loss, and the logistic loss.

2

Journal of Applied Mathematics

The expected convex risk is

E f X

φ fx − f x− dρ x dρ− x− .

1.1

The corresponding empirical risk is

n

m 1 φ f xi − f xj− .

ES f mn i1 j1

1.2

Let ϑ {f ∈ F : f arg minf∈F Ef} be the target function set, where F is

the measurable function space. We can observe that the target function is not unique. In

particular, for the least square loss, the regression function is one element in this set.

The ranking algorithm we investigate in this paper is based on a Tikhonov

regularization scheme associated with a Mercer kernel. We usually call a symmetric and

positive semidefinite continuous function K : X × X → R a Mercer kernel. The RKHS

HK associated with the kernel K is defined see 1 to be the closure of the linear span

of the set of functions {Kx : Kx, · : x ∈ X} with the inner product · K given

by Kx , Kx K Kx, x . The reproducing property takes the form fx f, Kx K , for

all x ∈ X, f ∈ HK . The reproducing property with the Schwartz

inequality yields that

|fx| ≤ Kx, xfHK . Then, f∞ ≤ κfHK , where κ : supx∈X Kx, x.

The regularized ranking algorithm is implemented by an offline regularization scheme

2 in HK

fz,λ arg min ES f λ f

f∈HK

2

HK

,

1.3

where λ > 0 is the regularization parameter. A data free-limit of 1.3 is

fλ arg min E f λ f

f∈HK

2

HK

.

1.4

Though the offline algorithm 1.3 has been well understood in 2, it might

be practically challenging when the sample size m or n is large. The same difficulty

for classification and regression algorithms is overcome by reducing the computational

complexity through a stochastic gradient descent method. Such algorithms have been

proposed for online regression in 3, 4, online classification in 5, 6, and gradient learning in

7, 8. In this paper, we use the idea of gradient descent to propose an algorithm for learning

a target function in ϑ.

Since φ is convex, we know that its left derivative φ− is well defined and nondecreasing

on R. By taking functional derivatives in 1.3, we introduce the following algorithm for

ranking.

Journal of Applied Mathematics

3

Definition 1.1. The stochastic gradient descent ranking algorithm is defined for the sample S

by f1S 0 and

n

m ηt S

Kxi − Kxj− ,

ft1

1 − ηt λ ftS −

φ− ftS xi − ftS xj−

mn i1 j1

1.5

where t ∈ N and {ηt } is the sequence of step sizes.

In fact, Burges et al. 9 investigate gradient descent methods for learning ranking

functions and introducing a neural network to model the underlying ranking function.

From the idea of maximizing the generalized Wilcoxon-Mann-Whitney statistic, a ranking

algorithm using gradient approximation has been proposed in 10. However, these

approaches are different from ours and their analysis focuses on computational complexity.

Recently, for least square loss, numerical experiments by gradient descent algorithm have

been presented in 11. The aim of this paper is to provide generalization bounds for the

gradient descent ranking algorithm 1.5 with general convex losses. To the best of our

knowledge, there is no error analysis in this case; This is why we conduct our study in this

paper.

We mainly analyze the errors ftS − fλ HK and inff∈ϑ ftS − fHK , which is different

from previous error analysis for ranking algorithms based on uniform convergence e.g.,

12–16 and stability analysis in 2, 17, 18. Though the convergence rates of HK norm for

classification and regression algorithms have been elegantly investigated in 19, 20, there is

no such analysis in the ranking setting. The main difference in the formulation of the ranking

problem as compared to the problems of classification and regression is that the performance

or loss in ranking is measured on pairs of examples, rather than on individual examples.

This means in particular that, unlike the empirical error in classification or regression, the

empirical error in ranking cannot be expressed as a sum of independent random variables

17. This makes the convergence analysis of HK norm difficult and previous techniques

invalid. Fortunately, we observe that similar difficulty for gradient learning has been well

overcome in 7, 21, 22 for gradient learning by introducing some novel techniques. In this

paper, we will develop an elaborative analysis in terms of these analysis techniques.

2. Main Result

In this section we present our main results on learning rates of algorithm 1.5 for learning

ranking functions. We assume that φ ∈ C1 R satisfies

φu ≤ C0 1 |u|q ,

φ u ≤ C0 1 |u|q−1 ,

∀u ∈ R

2.1

for some C0 > 0 and q ≥ 1. Denote the constant

Δ∗ 1 4κ

2

q−1

φ− u − φ 0

2 sup

.

C0 φ 0 8κ φ 0

|u|

|u|≤1

2.2

4

Journal of Applied Mathematics

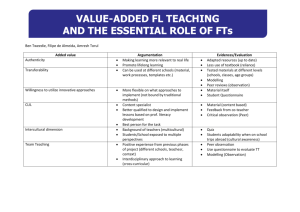

Table 1: The values of parameters for different convex losses.

Loss function

φt t2

φt log1 e−t φt 1 − t

C0

1

2

1

Δ∗

1 12κ2

1 11κ2

1 8κ2

q

2

1

1

α

θ min{1/2θ − 3γ, γβ}

γ θ min{θ − γ, γ − β}

θ min{1/2θ − 3γ, γβ}

Theorem 2.1. Assume φ satisfies 2.1, and choose the step size as

ηt η∗ λmax{q−2,0} t−θ for some 0 < θ < 1, 0 < η∗ ≤

1

.

Δ∗

2.3

s

For 0 < γ < 1 − θ/ min{q − 1, 1}, s > 0, one takes λ t−γ with mn/m n3/2 ≤ t ≤

s

2mn/m n3/2 . Then, for any 0 < δ < 1, with confidence at least 1 − δ, one has

ftS

− fλ

2

HK

≤C

mn

−α

m n3/2

2.4

,

where C is a constant independent of m, n, and

α min sθ − sγ min q 1, 2q − 1 , 1 − sγ 1 q .

2.5

Theorem 2.1 will be proved in the next section where the constant C can be obtained

explicitly. The explicit parameters in Theorem 2.1 are described in Table 1 for some special loss

functions φ. Note that the iteration steps and iterative numbers depend on sample number

m, n. When m On and m → ∞, we have t → ∞ and ηt → 0.

From the results in Theorem 2.1, we know that the balance of samples is crucial to

reach fast learning rates. For m On and the least square loss, the approximation order is

2

Om−1/2 min{sθ−3sγ,1−3sγ} . Moreover, when sθ → 1 and sγ → 0, we have ftS − fλ HK → 0

with the order Om−1/2 .

Now we present the estimates of inff∈ϑ ftS − fHK under some approximation

conditions.

Corollary 2.2. Assume that there is f ∗ ∈ ϑ such that fλ − f ∗ 2HK ≤ Cβ λβ for some 0 < β < 1.

Under the condition in Theorem 2.1, for any 0 < δ < 1, with confidence at least 1 − δ, one has

inf

f∈ϑ

ftS

−f

2

HK

≤C

mn

m n3/2

−α

,

2.6

is a constant independent of m, n, and

where C

α min sθ − sγ min q 1, 2q − 1 , 1 − sγ 1 q , sγβ .

2.7

Journal of Applied Mathematics

5

For m On and the least square loss, by setting s 1/γ3 β, we can derive the

learning rate Om−1/γ62β min{θ−3γ,γβ} . Moreover, if β < θ −3γ/γ, we get the approximation

order Om−β/62β .

For the least square loss, the regression function is an optimal predictor in ϑ. Then, the

bipartite ranking problem can be reduced as a regression problem. Based on the theoretical

analysis in 19, 20, we know that the approximation condition in Corollary 2.2 can be

achieved when the regression function lies in the β 1/2th power of the integral operator

associated with the kernel K.

The highlight of our theoretical analysis results is to provide the estimate of the

distance between ftS and the target function set ϑ in RKHS. This is different from the previous

result on error analysis that focuses on establishing the estimate of |Ef − ES f|. Compared

with the previous theoretical studies, the approximation analysis in HK -norm is new and fills

the gap on learning rates for ranking problem with general convex losses.

We also note that the techniques of previous error estimate for ranking problem mainly

include stability analysis in 2, 17, concentration estimation based on U-statistics in 14,

and uniform convergence bounds based on covering numbers 15, 16. Our analysis presents

a novel capacity-independent procedure to investigate the generalization performance of

ranking algorithms.

3. Proof of Main Result

We introduce a special property of Ef λ/2f2HK . Since the proof is the same as that in

5, we will omit it here.

Lemma 3.1. Let λ > 0. For any f ∈ HK , there holds

λ

f − fλ

2

HK

≤

λ

f

E f 2

2

HK

λ

fλ

− E fλ 2

2

HK

.

3.1

Denote

ftλ n

m 1 Kxi − Kxj− λftS ,

φ− f xi − f xj−

mn i1 j1

3.2

S

ft1

ftS − ηt ftλ .

Now we give the one-step analysis.

Lemma 3.2. For t ≥ 0, one has

S

ft1

− fλ

2

HK

≤ 1 − ηt λ ftS − fλ

where ϕS, t ES fλ − Efλ EftS − ES ftS .

2

HK

ηt2 ftλ

2

HK

2ηt ϕS, t,

3.3

6

Journal of Applied Mathematics

Proof. Observe that

S

ft1

− fλ

2

HK

ftS − fλ

2

HK

ηt2 ftλ

2

HK

2ηt fλ − ftS , ftλ .

K

3.4

Note that

fλ − ftS , ftλ

K

n

m 1 fλ xi − fλ xj−

φ − ftS xi − ftS xj−

mn i1 j1

−ftS xi ftS xj− λ fλ − ftS , ftS

≤ ES fλ − ES ftS − λ ftS

λ

fλ

≤ ES fλ 2

2

HK

2

HK

λ

fλ , ftS

K

3.5

K

λ S

f

− ES ftS 2 t

2

HK

,

where the first and the second inequalities are derived by the convexity of φ and the Schwartz

inequality, respectively.

By Lemma 3.1, we know that

λ S 2

S

f

− ES ft 2 t HK

λ S

≤ ES fλ − E fλ E ftS − ES ftS −

f − fλ

2 t

λ

fλ

ES fλ 2

2

HK

2

HK

3.6

.

Thus, the desired result follows by combining 3.5 and 3.6 with 3.4.

To deal with the sample error iteratively by applying 3.3, we need to bound the

quantity ϕS, t by the theory of uniform convergence. To this end, a bound for the norm of

ftS is required.

Definition 3.3. One says that φ− is locally Lipschitz at the origin if the local Lipschitz constant

Mλ sup

4κ2 φ− 0

φ− u − φ− 0

: |u| ≤

λ

|u|

3.7

is finite for any λ > 0.

Now we estimate the bound of ftS from the ideas given in 5.

Lemma 3.4. Assume that φ− is locally Lipschitz at the origin. If the step size ηt satisfies ηt 4κ2 Mλ

λ ≤ 1 for each t, then ftS HK ≤ 2κ|φ− 0|/λ.

Journal of Applied Mathematics

7

Proof. We prove by induction. It is trivial that f1S 0 satisfies the bound.

Suppose that this bound holds true for ftS , ftS HK ≤ 2κ|φ− 0|/λ. Consider

n

m ηt S

Kxi − Kxj−

1 − ηt λ ftS −

φ− ftS xi − ftS xj−

ft1

mn i1 j1

S

S

n φ

m f

xj− − φ− 0

x

−

f

t

t

−

η

i

t

S

1 − ηt λ ft −

mn i1 j1

ftS xi − ftS xj−

3.8

n

m ηt · ftS xi − ftS xj−

Kxi − Kxj− −

φ− 0 Kxi − Kxj− .

mn i1 j1

Let Lij f f, Kxi − Kxj− K Kxi − Kxj− . Since

0 ≤ Lij f, f K f, Kxi − Kxj−

K

2

≤ 4κ2 f

2

,

HK

3.9

we have Lij ≤ 4κ2 .

Meanwhile, φ− ftS xi − ftS xj− − φ− 0/ftS xi − ftS xj− ≤ Mλ. Then,

n φ−

m ftS xi − ftS xj− − φ− 0

ηt Lij

mn i1 j1

ftS xi − ftS xj−

3.10

is a positive linear operator on HK and its norm is bounded by 4κ2 Mλ.

Since ηt 4κ2 Mλ λ ≤ 1, the operator

−

S

S

n φ−

m f

− φ− 0

x

−

f

t

t xj

ηt

i

A : 1 − ηt λ I −

Lij

mn i1 j1

ftS xi − ftS xj−

3.11

on HK is positive and A ≤ 1 − ηt λI.

Thus,

S

ft1

HK

≤ 1 − ηt λ ftS

2κ φ− 0

.

λ

HK

n

m ηt φ 0 Kxi − Kxj−

mn i1 j1 −

HK

3.12

This proves the lemma.

For r > 0, denote Fr {f ∈ HK : fHK ≤ r}. Meanwhile, denote Lr max{|φ− 2κr|, |φ− −2κr|} and Mr max{|φ2κr|, |φ−2κr|}.

8

Journal of Applied Mathematics

Based on analysis techniques in 21, 23, we derive the capacity-independent bounds

for WS, r : supf∈Fr |ES f − Ef|.

Lemma 3.5. For every r > 0 and ε > 0, one has

ProbS {|WS, r − EWS, r| > ε} ≤ exp −

2m2 n2 ε2

m n3 Mr2

√

√

m n

.

EWS, r ≤ 4Lr κr 2φ0

√

mn

,

3.13

Proof. Because of the feature of S, four cases of samples change should be taken into account

to use McDiarmid’s inequality. Denote by Sk the sample coinciding with S except for xk or

xk− replaced by xk or xk− . It is easy to verify that

|WS, r − WSk , r| sup ES f − E f − sup ESk f − E f

f∈Fr

f∈Fr

mn

≤ sup ES f − ESk f ≤

Mr .

mn

f∈Fr

3.14

Based on MicDiarmid’s inequality in 24, we can derive the first result in Lemma 3.5. To

derive the second result, we denote ξx , u− φfx −fu− . Then, Ef Ex Ex− ξx , x− n

−

and ES f 1/mn m

i1

j1 ξxi , xj . Observe that

n

n

1

1

Ex ξ x , xj− sup

Ex ξ x , xj− − ES f

WS, r ≤ sup E f −

n j1

f∈Fr

f∈Fr n j1

n 1

≤ Ex sup Ex− ξ x , x− −

ξ x , xj−

n j1

f∈Fr

3.15

n

m

1 1

sup sup Ex ξ x , x− −

ξ xi , x−

n j1 f∈Fr x−

m i1

W1 W2 .

Denote Gx {hx− fx − fx− : f ∈ F}. Then,

n

n

1 1

≤ 2sup E sup

. 3.16

EW1 Ex E sup Ex− φ h x− −

φ h xj−

εj φ h xj−

n j1

x

h∈Gx

h∈Gx n j1

Journal of Applied Mathematics

9

Since φhxj− − φ0 ≤ Lr hxj− − 0, we have

n

n

2φ0 1

E

εj h xj− εj

n j1

n

j1

EW1 ≤ 2Lr sup E sup

x

≤

h∈Gx

n

4Lr κr 2φ0 E

εj

n

n

j1

⎛

2

⎞1/2

≤

n

4Lr κr 2φ0 ⎜ ⎟

εj ⎠

⎝E

n

j1

4Lr κr 2φ0

.

√

n

⎛

≤

⎞1/2

3.17

n

4Lr κr 2φ0 ⎝ E

εj εj ⎠

n

j,j 1

With the same fashion, we can also derive

EW2 ≤

4Lr κr 2φ0

.

√

m

3.18

Thus, the second desired result follows by combining 3.17 and 3.18.

Now we can derive the estimate of ϕS, t.

Lemma 3.6. If ηt satisfies (1) for each t and r 2κ|φ− 0|/λ least 1 − δ one has

⎛

2φ0/λ, then with confidence at

⎞

" #

2 ⎠ m n3/2

.

2 log

δ

mn

!

ϕS, t ≤ Bλ : ⎝4Lr κr 2φ0 Mr

3.19

Proof. By Lemma 3.5, we have, with confidence at least 1 − δ,

!

√

√

" #

m n m n3/2 Mr

2

WS, r ≤ 4Lr κr 2φ0

.

2 log

√

2mn

δ

mn

3.20

By taking f 0 in the definition of fλ , we see that

λ

fλ

2

2

HK

≤ E0 0 ≤ φ0.

3.21

Then, for any λ > 0, we have fλ HK ≤ 2φ0/λ. Thus, ftS , fλ ∈ Fr for r 2κ|φ− 0|/λ 2φ0/λ. So, ϕS, t ≤ 2WS, r for each t. This completes the proof.

We are in a position to give bounds for the sample error. We need the following

elementary inequalities that can be found in 3, 5.

10

Journal of Applied Mathematics

Lemma 3.7. (1) For α ∈ 0, 1 and θ ∈ 0, 1,

t

t

α

1$

1− θ

θ

i

j

i1

ji1

≤

3

.

α

3.22

(2) Let v ∈ 0, 1 and θ ∈ 0, 1. Then

⎧

v 1 − 2θ−1

9T 1−θ

18

⎪

1−θ

⎪

T −1

T

⎨

if θ < 1,

exp −

T 1

1

−θ

1−θ

vT θ 1 − θ21−θ

≤

exp

−v

j

2θ

⎩ jt1 ⎭ ⎪

⎪

t1 t

⎩ 8 T 1−v

if θ 1.

1−v

3.23

⎫

⎬

⎧

⎨

(3) For any t < T and θ ∈ 0, 1, there holds

T

j −θ

jt1

⎧

,

⎨ 1 T 11−θ − t 11−θ if θ < 1,

≤ 1−θ

⎩logT 1 − logt 1

if θ 1.

3.24

Proposition 3.8. Let ηt η1 t−θ for some θ ∈ 0, 1, and let η1 satisfy η1 4Mλ λ ≤ 1. Set r and

Bλ as in Lemma 3.6. Denote Bλ 2κLr κ|φ− 0|. Then, with confidence at least 1 − δ, the following

bound holds for t ≥ 1: when θ < 1,

ftS − fλ

2

HK

18B2 η1

η1 λ 1−θ

λ

t −1

exp −

1−θ

λtθ

2Bλ2 η12 t1−θ

η1 λ 1 − 2θ−1

6Bλ

1−θ

,

exp −

t 1

1−θ

1−θ

λ

1 − θ2

≤ fλ

2

HK

3.25

when θ 1,

ftS − fλ

2

HK

≤ fλ

2

t−η1 λ

HK

8Bλ2 η12

6Bλ

.

t 1−η1 λ 1 − η1 λ

λ

3.26

Proof. Since ftS HK ≤ 2κ|φ− 0|/λ, we have |ftS xi − ftS xj− | ≤ 2κftS HK ≤ 2κr. From the

definition of ftλ , we know that ftλ HK ≤ 2κLr κ|φ− 0| Bλ . Thus, when ϕS, t ≤ Bλ , we

have from Lemma 3.2

S

ft1

− fλ

2

HK

≤ 1 − ηt λ ftS − fλ

2

HK

ηt2 Bλ2 2ηt Bλ .

3.27

Applying this relation iteratively, we have

ftS − fλ

2

HK

≤

t−1

$

1 − ηi λ fλ

i1

2

HK

t−1

t−1 $

1 − ηi λ

i1 ji1

ηi2 Bλ2 2ηi Bλ .

3.28

Journal of Applied Mathematics

11

Since ηi η1 i−θ , by Lemma 3.72, we have for θ < 1

⎧

⎫

t−1

t−1

⎨

⎬

1

exp −η1 λ

j −θ

1 − ηi λ ηi2 ≤ η12

⎩

⎭

2θ

i1

ji1

t−1

t−1 $

i1 ji1

≤

18η1

λt

9η12 t1−θ

1 − θ21−θ

exp −

η1 λ 1 − 2

1−θ

θ−1

3.29

t 11−θ

and for θ 1

t−1

t−1 $

1 − ηi λ ηi2 ≤

i1 ji1

8η12

t 1−η1 λ .

1 − η1 λ

3.30

Lemma 3.71 yields

t−1

t−1 $

i1 ji1

t−1

t−1

η1 λ

1$

1− θ

1 − η i λ η i ≤ η1

θ

i

j

i1

ji1

≤

3

.

λ

3.31

By Lemma 3.73, we also have for θ < 1

t−1

1 − ηi λ ≤ exp −

t−1

i1

ηi λ

≤ exp

i1

η1 λ 1 − t1−θ

1−θ

3.32

and for θ 1

t−1

1 − ηi λ ≤ t−η1 λ .

3.33

i1

Combining the above estimations with Lemma 3.6, we derive the desired results.

Now we present the proof of Theorem 2.1.

Proof of Theorem 2.1. First we derive explicit expressionsfor the quantities in Proposition 3.8.

Since λ t−γ , we have r ≤ C3 tγ , where C3 2κ|φ− 0| 2φ0. By 2.1, we find that

q−1

Lr ≤ C0 1 2κrq−1 ≤ C0 1 2κq−1 C3 tq−1γ ,

q

Mr ≤ C0 1 2κq C3 tqγ .

3.34

12

Journal of Applied Mathematics

Then,

!

Bλ ≤ C4 log

" #

2 qγ m n3/2

t

,

δ

mn

q−1

Bλ ≤ C5 tq−1γ ,

q

3.35

q−1

where C4 4C0 κ1 2κq−1 C3 C0 1 2κq C3 2φ0 and C5 2κC0 1 2κq−1 C3

Next, we bound Mλ. When 1 ≤ |u| ≤ 4κ2 |φ− 0|/λ, we have

2φ0.

q−1

φ− u − φ− 0

≤ φ− 0 2q−1 C0 4κ2 φ− 0

λ2−q .

|u|

3.36

Hence,

Mλ ≤

φ− u − φ− 0

sup

|u|

|u|≤1

φ− 0

2

q−1

C0 4κ2 φ− 0

q−1

λmin{2−q,0} .

3.37

It follows that the condition η1 4κ2 Mλ λ ≤ 1 in Proposition 3.8 holds true when ηt η1 t−θ

s

s

and η1 η∗ λmax{q−2,0} . Based on Proposition 3.8 and mn/m n3/2 ≤ t ≤ 2mn/m n3/2 ,

we have, with confidence at least 1 − δ,

⎛

ftS − fλ

2

HK

≤

⎝c1 fλ 2

HK

⎧

⎨ η

c2 η∗2

∗

exp

⎩ 1 − θ 1 − θ21−θ

s1−θ−2q−1γ ⎫⎞

⎬

⎠

⎭

m n3/2

η∗ 1 − 2θ−1 1−θ−γ max{q−1,1}

× exp −

c3

t

1−θ

c4 η∗

mn

m n3/2

mn

sγ1q−1

mn

m n

3/2

3.38

sγ min{q1,2q−1}−sθ

,

where c1 , c2 , c3 , and c4 are constants independent of m, n,and t.

Thus, when 1 − θ − γ max{q − 1, 1} > 0, we can derive the desired result in Theorem 2.1.

Acknowledgments

This work was supported partially by the National Natural Science Foundation of China

NSFC under Grant no. 11001092 and the Fundamental Research Funds for the Central

Universities Program no. 2011PY130, 2011QC022. The authors are indebted to the

anonymous reviewers for their constructive comments.

Journal of Applied Mathematics

13

References

1 N. Aronszajn, “Theory of reproducing kernels,” Transactions of the American Mathematical Society, vol.

68, pp. 337–404, 1950.

2 S. Agarwal and P. Niyogi, “Stability and generalization of bipartite ranking algorithms,” In COLT,

2005.

3 S. Smale and Y. Yao, “Online learning algorithms,” Foundations of Computational Mathematics, vol. 6,

no. 2, pp. 145–170, 2006.

4 S. Smale and D. X. Zhou, “Online learning with Markov sampling,” Analysis and Applications, vol. 7,

no. 1, pp. 87–113, 2009.

5 Y. Ying and D. X. Zhou, “Online regularized classification algorithms,” IEEE Transactions on

Information Theory, vol. 52, no. 11, pp. 4775–4788, 2006.

6 X. M. Dong and D. R. Chen, “Learning rates of gradient descent algorithm for classification,” Journal

of Computational and Applied Mathematics, vol. 224, no. 1, pp. 182–192, 2009.

7 J. Cai, H. Wang, and D. X. Zhou, “Gradient learning in a classification setting by gradient descent,”

Journal of Approximation Theory, vol. 161, no. 2, pp. 674–692, 2009.

8 X. Dong and D. X. Zhou, “Learning gradients by a gradient descent algorithm,” Journal of Mathematical

Analysis and Applications, vol. 341, no. 2, pp. 1018–1027, 2008.

9 C. Burges, T. Shaked, E. Renshaw et al., “Learning to rank using gradient descent,” in Proceedings of

the 22nd international conference on Machine learning, 2005.

10 V. C. Raykar, R. Duraiswami, and B. Krishnapuram, “A fast algorithm for learning a ranking function

from large-scale data sets,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 30, no.

7, pp. 1158–1170, 2008.

11 H. Chen, Y. Tang, L. Q. Li, and X. Li, “Ranking by a gradient descent algorithm,” manuscript.

12 Y. Freund, R. Iyer, R. E. Schapire, and Y. Singer, “An efficient boosting algorithm for combining

preferences,” Journal of Machine Learning Research, vol. 4, no. 6, pp. 933–969, 2004.

13 S. Agarwal, T. Graepel, R. Herbrich, S. Har-Peled, and D. Roth, “Generalization bounds for the area

under the ROC curve,” Journal of Machine Learning Research, vol. 6, pp. 393–425, 2005.

14 S. Clémençon, G. Lugosi, and N. Vayatis, “Ranking and empirical minimization of U-statistics,” The

Annals of Statistics, vol. 36, no. 2, pp. 844–874, 2008.

15 C. Rudin and R. E. Schapire, “Margin-based ranking and an equivalence between AdaBoost and

RankBoost,” Journal of Machine Learning Research, vol. 10, pp. 2193–2232, 2009.

16 C. Rudin, “The P-norm push: a simple convex ranking algorithm that concentrates at the top of the

list,” Journal of Machine Learning Research, vol. 10, pp. 2233–2271, 2009.

17 S. Agarwal and P. Niyogi, “Generalization bounds for ranking algorithms via algorithmic stability,”

Journal of Machine Learning Research, vol. 10, pp. 441–474, 2009.

18 D. Cossock and T. Zhang, “Statistical analysis of Bayes optimal subset ranking,” IEEE Transactions on

Information Theory, vol. 54, no. 11, pp. 5140–5154, 2008.

19 S. Smale and D. X. Zhou, “Shannon sampling. II. Connections to learning theory,” Applied and

Computational Harmonic Analysis, vol. 19, no. 3, pp. 285–302, 2005.

20 S. Smale and D. X. Zhou, “Learning theory estimates via integral operators and their approximations,” Constructive Approximation, vol. 26, no. 2, pp. 153–172, 2007.

21 S. Mukherjee and Q. Wu, “Estimation of gradients and coordinate covariation in classification,”

Journal of Machine Learning Research, vol. 7, pp. 2481–2514, 2006.

22 S. Mukherjee and D. X. Zhou, “Learning coordinate covariances via gradients,” Journal of Machine

Learning Research, vol. 7, pp. 519–549, 2006.

23 H. Chen and L. Q. Li, “Learning rates of multi-kernel regularized regression,” Journal of Statistical

Planning and Inference, vol. 140, no. 9, pp. 2562–2568, 2010.

24 C. McDiarmid, “On the method of bounded differences,” in Surveys in Combinatorics, 1989 (Norwich,

1989), vol. 141, pp. 148–188, Cambridge University Press, Cambridge, UK, 1989.

Advances in

Operations Research

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Advances in

Decision Sciences

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Mathematical Problems

in Engineering

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Journal of

Algebra

Hindawi Publishing Corporation

http://www.hindawi.com

Probability and Statistics

Volume 2014

The Scientific

World Journal

Hindawi Publishing Corporation

http://www.hindawi.com

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

International Journal of

Differential Equations

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Volume 2014

Submit your manuscripts at

http://www.hindawi.com

International Journal of

Advances in

Combinatorics

Hindawi Publishing Corporation

http://www.hindawi.com

Mathematical Physics

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Journal of

Complex Analysis

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

International

Journal of

Mathematics and

Mathematical

Sciences

Journal of

Hindawi Publishing Corporation

http://www.hindawi.com

Stochastic Analysis

Abstract and

Applied Analysis

Hindawi Publishing Corporation

http://www.hindawi.com

Hindawi Publishing Corporation

http://www.hindawi.com

International Journal of

Mathematics

Volume 2014

Volume 2014

Discrete Dynamics in

Nature and Society

Volume 2014

Volume 2014

Journal of

Journal of

Discrete Mathematics

Journal of

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Applied Mathematics

Journal of

Function Spaces

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Optimization

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014

Hindawi Publishing Corporation

http://www.hindawi.com

Volume 2014