Design of a Real Time FPGA‐based Three Dimensional Positioning Algorithm

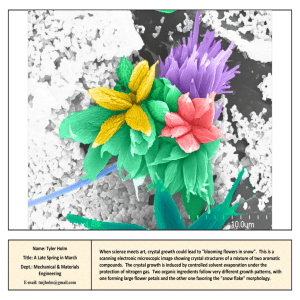
