ENABLING PROCESS IMPROVEMENTS THROUGH VISUAL PERFORMANCE INDICATORS By G.

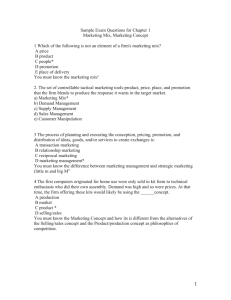
