The Information Content of Implied Probabilities to Detect Structural Change

Alain Guay (a), Jean-François Lamarche (b,†)

(a) Université du Québec à Montréal, Québec, Canada, CIRPÉE and CIREQ.

(b) Department of Economics, Brock University, St. Catharines, Ontario, Canada

Preliminary and incomplete version

First Version: July 2005

This Version: May 28, 2007

Abstract

This paper proposes to use implied probabilities resulting from estimation methods based on unconditional moment conditions to detect structural change. The class of GEL estimators (Smith 1997) assigns a set of probabilities to each observation such that moment conditions are satisfied. These restricted probabilities are called implied probabilities. Implied probabilities may also be constructed for the standard GMM (Back and Brown 1993). The proposed structural change test statistics are based on the information content in these implied probabilities. We consider cases of structural change which can occur in the parameters of interest or in the overidentifying restrictions used to estimate these parameters.

Keywords: Generalized empirical likelihood, generalized method of moments, parameter instability,

structural change.

JEL codes: C12, C32

† The first author gratefully acknowledges financial support of Fonds pour la Formation de Chercheurs et l’aide à la Recherche (FCAR). The first and second authors are thankful for the financial support of the Social Sciences and Humanities Research Council of Canada (SSHRC).

1 Introduction

This paper proposes structural change tests based on implied probabilities resulting from estimation methods based on unconditional moment restrictions. The Generalized Method of Moments (GMM) is the standard method to estimate parameters of interest through moment restrictions. However, Monte Carlo results reveal that GMM estimators may be seriously biased in small samples.¹ Recently, a number of alternative estimators to GMM have been proposed. Hansen, Heaton and Yaron (1996) suggest the continuously updated estimator (CUE), which shares the same objective function as GMM but with a weighting matrix that depends on the parameters of interest. The empirical likelihood (EL) estimator (Qin and Lawless 1994) and the exponential tilting (ET) estimator (Kitamura and Stutzer 1997) have also been proposed. These alternative estimators are special cases of the Generalized Empirical Likelihood (GEL) class considered by Smith (1997). These estimators may be shown to be based on the Cressie and Read (1984) family of power divergence criteria. Recently, Newey and Smith (2004), for an i.i.d. setting, and Anatolyev (2005), with temporal dependence, show that, in general, the asymptotic bias of GEL estimators is smaller than that of GMM estimators, especially with many moment conditions.

GEL estimators assign a probability to each observation such that the moment conditions are satisfied (see Smith (2004)). The resulting empirical measure is called the implied probabilities. Implied probabilities may also be constructed for the standard GMM, as shown by Back and Brown (1993). The interpretation of the implied probabilities is the following: less weight is assigned to an observation for which the moment restrictions are not satisfied at the estimated values of the parameters, and more weight to an observation for which the moment restrictions are satisfied. As suggested by Back and Brown (1993), implied probabilities may then provide a useful diagnostic device for model specification. In particular, implied probabilities may contain interesting information for detecting instability in the sample. Consequently, we propose the use of these weights in detecting an unknown structural change in the model. To our knowledge this procedure was first proposed by Back and Brown (1993) but never pursued. Antoine, Bonnal and Renault (2007) also relate the informational content of the moment conditions to the weights, and one of their contributions is the use of the weights in a three-step estimation procedure. Schennach (2004) also discusses the use of these weights in the context of model misspecification. Ramalho and Smith (2005) consider Pearson-type test statistics (statistics based on the difference between restricted and unrestricted estimators of the weights) for the validity of moment restrictions and parametric restrictions (see also Otsu (2006)).

The proposed test statistics to detect structural change are based on different measures of the discrepancy between these implied probabilities and the unconstrained empirical probabilities 1/T. These test statistics fall into three categories: 1) structural change tests to detect instability in the identifying restrictions (see for example Andrews (1993)); 2) structural change tests to detect instability in the overidentifying restrictions (see for example Hall and Sen (1999)); and 3) optimal predictive tests (see Ghysels, Guay and Hall (1997) and Guay (2003)). The asymptotic distribution of the test statistics is derived for the case of an unknown breakpoint under the null and under the alternative hypotheses.

¹ See in particular the special issue of Journal of Business and Economic Statistics, 1996, volume 14.

The paper is organized as follows. Section 2 discusses the GMM and GEL estimators. Section 3 formally presents the full-sample and partial-sample GMM and GEL estimators. Section 4 presents the proposed test statistics based on the implied probabilities. The simulation results are in Section 5, while the proofs are in the Appendix.

2 Discussion of GMM and GEL Estimators

In this section we present the estimators used in this paper. We start with an entropy-based formulation of the problem which puts emphasis on the informational content of the estimated weights. We then move to the more recent GEL formulation (see Newey and Smith (2004) and Smith (2004)).

We consider a random variable $\{x_t : 1 \le t \le T, T \ge 1\}$. Suppose we have data summarized by a $T \times q$ matrix $g(x, \theta)$ with typical element $g_{ti}(x_t, \theta)$, $i = 1, \ldots, q$, which depends on some unknown $p$-vector of parameters $\theta \in \Theta$ with $\Theta \subset \mathbb{R}^p$ (the parameter space will be refined subsequently), and that in the population their expected value is 0. That is,

$$E[g(x_t, \theta_0)] = 0.$$

In this paper we consider the overidentified case with $q > p$. To put this into context, consider the consumption-based asset pricing model (see Lucas (1978), Hansen and Singleton (1982) and Hall (2005) for a treatment in the context of GMM), which tries to explain how assets are priced and how consumption spending evolves. In this model a representative agent maximizes her discounted expected utility

$$E\left[\sum_{i=0}^{\infty} \delta_0^{i}\, U(c_{t+i}) \,\Big|\, I_t\right]$$

subject to a budget constraint

$$c_t + \sum_{j=1}^{N} p_{j,t}\, q_{j,t} = \sum_{j=1}^{N} r_{j,t}\, q_{j,t-m_j} + w_t. \tag{1}$$

In the above, $c_t$ denotes consumption, there are $N$ assets with price $p_{j,t}$, quantity purchased $q_{j,t}$ and return $r_{j,t}$, $w_t$ is labor income, $\delta_0$ is a discount factor and $I_t$ is the information set of the agent.

The optimal consumption and investment paths satisfy the Euler equations, which describe the comovements of consumption and asset prices:

$$p_{j,t}\, U'(c_t) = \delta_0^{m_j}\, E\left[r_{j,t+m_j}\, U'(c_{t+m_j}) \mid I_t\right],$$

or equivalently

$$E\left[\delta_0^{m_j}\, \frac{r_{j,t+m_j}}{p_{j,t}}\, \frac{U'(c_{t+m_j})}{U'(c_t)} \,\Big|\, I_t\right] - 1 = 0.$$

If we use a CRRA functional form for the utility function, $U(c_t) = (c_t^{\gamma} - 1)/\gamma$, then

$$E\left[\delta_0^{m_j}\, \frac{r_{j,t+m_j}}{p_{j,t}} \left(\frac{c_{t+m_j}}{c_t}\right)^{\gamma-1} \Big|\, I_t\right] - 1 = 0.$$

This first-order condition contains two parameters of interest that need to be estimated, so a single condition is not sufficient. We can get around this problem by creating additional conditions upon which estimation will be based. For this, choose a set of instruments $z_t \in I_t$. Now let

$$u_{j,t}(\delta, \gamma) = \delta^{m_j}\, \frac{r_{j,t+m_j}}{p_{j,t}} \left(\frac{c_{t+m_j}}{c_t}\right)^{\gamma-1} - 1$$

and, using the law of iterated expectations, we obtain

$$E[u_{j,t}(\delta_0, \gamma_0)\, z_t] = E\left[E[u_{j,t}(\delta_0, \gamma_0) \mid I_t]\, z_t\right] = 0.$$

Typically $z_t$ contains $r_{j,t}/p_{j,t-m_j}$, $c_t/c_{t-m_j}$ and a constant.

We can now have at least as many population moment conditions as parameters to be estimated. Using the previous notation,

$$E[g(x_t, \theta_0)] = E[u_{j,t}(\delta_0, \gamma_0)\, z_t] = 0,$$

where $\theta_0$ contains $\delta_0$ and $\gamma_0$, so that $p = 2$, and, if we use one asset with the three instruments above, $q = 3$. Estimation could be done via maximum likelihood, but this requires making a strong distributional assumption. Instead we use a method of moments.
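The construction of the moment contributions $g(x_t, \theta) = u_{j,t}(\delta, \gamma)\, z_t$ can be sketched in a few lines of Python. This is only an illustration under stated assumptions: one asset held for one period ($m_j = 1$), a hypothetical function name `euler_moments`, and toy data chosen so that the Euler residual is exactly zero.

```python
import numpy as np

def euler_moments(delta, gamma, gross_return, cons_growth, instruments):
    """Return the T x q array whose rows are g(x_t, theta) = u_t(delta, gamma) * z_t.

    gross_return : r_{t+1} / p_t          (length T)
    cons_growth  : c_{t+1} / c_t          (length T)
    instruments  : z_t, one row per t     (T x q)
    Assumes a single asset with m_j = 1 (an assumption of this sketch).
    """
    gross_return = np.asarray(gross_return, dtype=float)
    cons_growth = np.asarray(cons_growth, dtype=float)
    z = np.asarray(instruments, dtype=float)
    # CRRA Euler residual u_t = delta * (r/p) * (c'/c)^(gamma - 1) - 1
    u = delta * gross_return * cons_growth ** (gamma - 1.0) - 1.0
    return u[:, None] * z

# Hypothetical toy data: constant consumption and a gross return of 1/delta,
# so the residual u_t is zero in every period.
T_demo = 5
delta_demo, gamma_demo = 0.95, 0.5
R_demo = np.full(T_demo, 1.0 / delta_demo)
C_demo = np.ones(T_demo)
Z_demo = np.column_stack([np.ones(T_demo), R_demo, C_demo])  # constant plus two ratios
g_euler = euler_moments(delta_demo, gamma_demo, R_demo, C_demo, Z_demo)
```

With two parameters and three instruments, the resulting array has $q = 3$ columns, which matches the overidentified case $q > p$ considered in the text.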

The standard GMM estimators are obtained as the solution of

$$\tilde\theta_T = \arg\min_{\theta \in \Theta}\; g_T(\theta)'\, W_T\, g_T(\theta),$$

where $W_T$ is a random positive definite symmetric $q \times q$ matrix and $g_T(\theta) = \frac{1}{T}\sum_{t=1}^{T} g(x_t, \theta)$. The optimal weighting matrix is defined to be the inverse of the covariance matrix of the moment conditions, $W_T = \Omega_T^{-1}$, where $\Omega_T$ is a consistent estimator of

$$\Omega = \lim_{T \to \infty} Var\left(\frac{1}{\sqrt{T}} \sum_{t=1}^{T} g(x_t, \theta)\right).$$

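To address the time series properties of the data we use the long run covariance matrix estimator for $\Omega$,

$$\Omega_T = \Gamma_0 + \sum_{j=1}^{T-1} k(j/S_T)\left(\Gamma(j) + \Gamma(j)'\right) = \Gamma_0 + \sum_{j=1}^{S_T} \left(1 - \frac{j}{S_T}\right)\left(\Gamma(j) + \Gamma(j)'\right),$$

if the Bartlett kernel, suggested by Newey and West (1987), is used, with

$$\Gamma_0 = \frac{1}{T}\sum_{t=1}^{T} g(x_t, \theta)\, g(x_t, \theta)'$$

and

$$\Gamma(j) = \frac{1}{T}\sum_{t=j+1}^{T} g(x_t, \theta)\, g(x_{t-j}, \theta)'.$$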
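As a rough illustration of the Bartlett-weighted estimator above, the following Python sketch computes $\Omega_T$ from a $T \times q$ array of moment contributions and evaluates the GMM criterion. The function names `bartlett_long_run_cov` and `gmm_objective` and the simulated data are assumptions made for this example; the weights $1 - j/S_T$ follow the formula as written in the text.

```python
import numpy as np

def bartlett_long_run_cov(g, S_T):
    """Omega_T = Gamma_0 + sum_{j=1}^{S_T} (1 - j/S_T) (Gamma(j) + Gamma(j)')."""
    g = np.asarray(g, dtype=float)
    T = g.shape[0]
    omega = g.T @ g / T                      # Gamma_0
    for j in range(1, S_T + 1):
        # Gamma(j) = (1/T) sum_{t=j+1}^{T} g_t g_{t-j}'
        gamma_j = g[j:].T @ g[:-j] / T
        weight = 1.0 - j / S_T               # Bartlett weight
        omega += weight * (gamma_j + gamma_j.T)
    return omega

def gmm_objective(g, W):
    """GMM criterion g_T(theta)' W g_T(theta) for given moment contributions."""
    g_bar = np.asarray(g, dtype=float).mean(axis=0)
    return float(g_bar @ W @ g_bar)

# Hypothetical moment contributions, used only to exercise the functions.
rng = np.random.default_rng(0)
g_hac = rng.normal(size=(200, 2))
omega_1 = bartlett_long_run_cov(g_hac, 1)    # the only lag gets weight 1 - 1/1 = 0
omega_4 = bartlett_long_run_cov(g_hac, 4)
J_value = gmm_objective(g_hac, np.linalg.inv(omega_4))
```

With $S_T = 1$ the single autocovariance term receives zero weight, so the estimator reduces to $\Gamma_0$; larger bandwidths add down-weighted autocovariances.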

We note that, in the GMM criterion function, the moment conditions receive equal weight ($1/T$) for each observation. Back and Brown (1993) derived, however, a set of implicit weights using the GMM estimators, given by

$$\pi_t(\theta) = \frac{1}{T} - \frac{1}{T-p}\left[\tilde J_{tT}(\theta) - \bar J_T(\theta)\right]' \hat\Omega_T(\theta)^{-1}\, \bar g_T(\theta) \tag{2}$$

with $\bar J_T(\theta) = \frac{1}{T}\sum_{t=1}^{T} \tilde J_{tT}(\theta)$ and

$$\tilde J_{tT}(\theta) = \sum_{j=1}^{T} \kappa(|t-j|)\, g(x_{t-j}, \theta),$$

where $\kappa(|t-j|)$ is a real-valued weighting function and $\bar g_T(\theta) = \frac{1}{T}\sum_{t=1}^{T} g(x_t, \theta)$. The following estimator

$$\hat\Omega_T(\theta) = \frac{1}{T-p}\sum_{t=1}^{T} \tilde J_{tT}(\theta)\, g(x_t, \theta)'$$

is a consistent and positive definite estimator of the variance-covariance matrix, and it has the usual form of a heteroskedasticity and autocorrelation consistent (HAC) weight matrix for the standard kernels $\kappa(|t-s|)$ considered in the vast literature on consistent estimation of variance-covariance matrices (see e.g. Andrews (1991), Newey and West (1987) and Newey and West (1994)). The implied probabilities defined above are the empirical measure that ensures that the moment conditions are satisfied in the sample.
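A minimal sketch of the Back and Brown weights may help fix ideas. It assumes the simplest special case in which $\kappa(0) = 1$ and $\kappa(\cdot) = 0$ otherwise (no serial dependence), so that $\tilde J_{tT}(\theta) = g(x_t, \theta)$; the function name `gmm_implied_probabilities`, the simulated data and the trial value of $\theta$ are hypothetical.

```python
import numpy as np

def gmm_implied_probabilities(g, p):
    """pi_t = 1/T - (1/(T-p)) (g_t - g_bar)' Omega_hat^{-1} g_bar.

    Special case kappa(0) = 1, kappa(k) = 0 for k > 0, so J_t = g_t
    (an assumption of this sketch, not the general HAC case).
    """
    g = np.asarray(g, dtype=float)
    T = g.shape[0]
    g_bar = g.mean(axis=0)
    omega_hat = g.T @ g / (T - p)            # (1/(T-p)) sum_t J_t g_t'
    dev = g - g_bar                          # J_t - J_bar
    return 1.0 / T - dev @ np.linalg.solve(omega_hat, g_bar) / (T - p)

# Hypothetical data: two moments for one parameter, evaluated at a trial value.
rng = np.random.default_rng(1)
x_bb = rng.normal(10.0, 2.0, size=100)
theta_trial = 10.2
g_bb = np.column_stack([x_bb - theta_trial, (x_bb - theta_trial) ** 2 - 4.0])
pi_gmm = gmm_implied_probabilities(g_bb, p=1)
```

Because the deviations $\tilde J_{tT} - \bar J_T$ sum to zero, the weights sum to one by construction. Individual weights can be negative in finite samples, which the sketch does not correct.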

Now letting the $T$-vector of explicit weights be $\{\pi_t : 1 \le t \le T, T \ge 1\}$, we can recast the population moment conditions as

$$E_\pi[g(x_t, \theta_0)] = 0.$$

The vector $\pi$ is determined by finding the most probable data distribution of the outcomes given the data. We can think of $\pi$ as containing information on the content of the moment conditions. Therefore, $g(x, \theta)$ is viewed as a message. That is, when $\pi$ is small, the message is informative and vice-versa. This relation is summarized by the function $f(\pi) = -\ln \pi$. The average information is then

$$I(\pi) \equiv E_\pi f(\pi) = -\sum_{t=1}^{T} \pi_t \ln \pi_t.$$

In practice, the vector $\pi$ is obtained by solving

$$\max_{\pi}\; I(\pi) = -\sum_{t=1}^{T} \pi_t \ln \pi_t,$$

subject to $\sum_{t=1}^{T} \pi_t = 1$ and $\sum_{t=1}^{T} g(x_t, \theta)'\, \pi_t = 0$. In this case, $I(\pi)$ can be interpreted as the entropy measure of Shannon (1948), and it captures the degree of uncertainty in the distribution of $\pi$ given the information that we have, the data. Equivalently, we could solve the cross-entropy problem using the Kullback-Leibler (Kullback and Leibler (1951)) information criterion

$$\Xi(\pi, 1/T) = \sum_{t=1}^{T} \pi_t \ln \pi_t + \ln(T).$$

$\Xi(\pi, 1/T)$ can then be interpreted as a directed sum of discrepancies between $\pi$ and the empirical distribution $1/T$. Minimizing $\Xi$ is then equivalent to maximizing $I$.
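The identity $\Xi(\pi, 1/T) = \ln T - I(\pi)$ behind this equivalence can be checked numerically. The sketch below uses hypothetical helper names (`shannon_entropy`, `kl_to_uniform`) and an arbitrary non-uniform weight vector chosen only for illustration; it assumes strictly positive weights.

```python
import numpy as np

def shannon_entropy(pi):
    """I(pi) = -sum_t pi_t ln pi_t (requires pi_t > 0)."""
    pi = np.asarray(pi, dtype=float)
    return float(-np.sum(pi * np.log(pi)))

def kl_to_uniform(pi):
    """Xi(pi, 1/T) = sum_t pi_t ln pi_t + ln T."""
    pi = np.asarray(pi, dtype=float)
    return float(np.sum(pi * np.log(pi)) + np.log(pi.size))

T_ent = 100
uniform = np.full(T_ent, 1.0 / T_ent)        # empirical distribution 1/T
tilted = np.linspace(0.5, 1.5, T_ent)        # arbitrary non-uniform weights
tilted /= tilted.sum()

entropy_uniform = shannon_entropy(uniform)   # equals ln T
xi_uniform = kl_to_uniform(uniform)          # equals 0
xi_tilted = kl_to_uniform(tilted)            # strictly positive
```

Any departure of the weights from $1/T$ lowers the entropy and raises $\Xi$ by the same amount, which is why the two optimization problems share their solution.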

Maximum uncertainty is reached when I(π) = ln T or when Ξ(·) = 0 with πt = 1/T , ∀t. In this sense,

the frequency distribution of π is chosen such that the missing information is maximized. Maximizing I

subject to zero functions will result, unless q = p, in a frequency distribution of π that is different from

the empirical distribution 1/T , but as close to it as possible. The probabilities reveal and summarize,

therefore, all the necessary information contained in the sample. In particular, we focus on detecting a

structural change in the moment conditions. With no structural change, the weights will fluctuate around

1/T , otherwise the entropy formulation will attempt to reduce the weight on the observation characterized

by the change, and at the same time put more weight on the remaining observations so as to make I(π)

as large as possible.

Estimates of $\pi$, given $\theta$, are obtained by maximizing $I$ subject to the weighted zero functions and the probability constraint. The solution to the Lagrangean yields

$$\pi_t^{ET}(\theta) = \frac{\exp(\gamma' g(x_t, \theta))}{\sum_{t=1}^{T} \exp(\gamma' g(x_t, \theta))}, \tag{3}$$

where the $q$-vector $\gamma$ contains the Lagrange multipliers, which measure the degree of departure from zero of the moment conditions, and the superscript stands for exponential tilting (see Kitamura and Stutzer (1997)). Estimates of $\theta$ are obtained by substituting $\pi$ in $I(\pi)$, maximizing it with respect to $\gamma$ and then with respect to $\theta$ (see for example Kitamura and Stutzer (1997)).
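For a fixed $\theta$, the exponential tilting weights can be computed by solving the dual problem for the multipliers. The sketch below is one possible implementation, not the authors' code: it minimizes $\frac{1}{T}\sum_t \exp(\gamma' g_t)$ with a damped Newton iteration, whose first-order condition is exactly $\sum_t \pi_t g_t = 0$. The function name, tolerances and simulated data are assumptions of this example.

```python
import numpy as np

def et_implied_probabilities(g, tol=1e-10, max_iter=100):
    """Exponential tilting weights pi_t proportional to exp(gamma' g_t).

    gamma minimizes the convex function (1/T) sum_t exp(gamma' g_t);
    at the minimum the weighted moments sum_t pi_t g_t are zero.
    """
    g = np.asarray(g, dtype=float)
    T, q = g.shape
    gamma = np.zeros(q)

    def objective(lam):
        return np.mean(np.exp(g @ lam))

    for _ in range(max_iter):
        w = np.exp(g @ gamma)
        grad = (w[:, None] * g).mean(axis=0)
        if np.linalg.norm(grad) < tol:
            break
        hess = (g * w[:, None]).T @ g / T
        step = np.linalg.solve(hess, grad)
        t = 1.0
        # Halve the step if it would increase the objective (simple damping).
        while objective(gamma - t * step) > objective(gamma) * (1.0 + 1e-12) and t > 1e-8:
            t *= 0.5
        gamma = gamma - t * step
    w = np.exp(g @ gamma)
    return w / w.sum(), gamma

# Hypothetical data: two moment conditions for a single mean parameter.
rng = np.random.default_rng(0)
x_et = rng.normal(10.0, 2.0, size=100)
g_et = np.column_stack([x_et - 10.0, (x_et - 10.0) ** 2 - 4.0])
pi_et, gamma_et = et_implied_probabilities(g_et)
```

The weights are positive by construction and differ from $1/T$ only to the extent that the sample moments are away from zero at the chosen $\theta$.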

If the sum of discrepancies between $\pi$ and the empirical distribution $1/T$ is not directed (not efficiently weighted), we obtain a different set of weights given by

$$\pi_t^{EL}(\theta) = \frac{1}{T\left[1 + \gamma' g(x_t, \theta)\right]}, \tag{4}$$

where the superscript relates to the empirical likelihood approach (see Qin and Lawless (1994) for example). When we evaluate the weights at some estimator we obtain $\pi_t^{ET}(\tilde\theta_T)$ and $\pi_t^{EL}(\tilde\theta_T)$. Recently, Schennach (2004) combined ET and EL into the ETEL estimator, which combines the advantages of each approach.

To provide more intuition on the use of the weights in the detection of a structural change, we consider a small simulation study that contains three examples that have been studied in the structural change and entropy literature. The first example, similar to the one used by Imbens, Spady and Johnson (1998), consists of estimating a single parameter, $\theta$, with two moment conditions, $x_t - \theta = 0$ and $(x_t - \theta)^2 - 4 = 0$, with a sample of 100 observations and $x_t = \theta_t + \epsilon_t$ where $\epsilon_t \sim N(0, 4)$. This data generating process (DGP) will be denoted as DGP1. The pulse, DGP2, is such that we have $\theta_t = 5$ for $t = 50$ and $\theta_t = 10$ otherwise. The break, DGP3, considers $\theta_t = 10$ for $t \le 20$ and $t > 80$ while $\theta_t = 15$ for $21 \le t \le 80$. Finally, the regime shift, DGP4, has $\theta_t = 10$ for $t \le 20$ and $\theta_t = 15$ otherwise. In these cases, structural change occurs via the parameters and can be tested using procedures proposed by Andrews (1993) and by Andrews and Ploberger (1994).
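The four mean-shift designs can be simulated as follows. This is a sketch under one explicit assumption: the text does not state the value of $\theta_t$ for DGP1, so a constant $\theta_t = 10$ is assumed here. The function names `theta_path` and `simulate_x` and the design labels are hypothetical.

```python
import numpy as np

def theta_path(design, T=100):
    """Return theta_t for t = 1, ..., T under the chosen design."""
    t = np.arange(1, T + 1)
    theta = np.full(T, 10.0)
    if design == "pulse":                    # DGP2: one-period pulse at t = 50
        theta[t == 50] = 5.0
    elif design == "break":                  # DGP3: theta = 15 for 21 <= t <= 80
        theta[(t >= 21) & (t <= 80)] = 15.0
    elif design == "shift":                  # DGP4: theta = 15 for t > 20
        theta[t > 20] = 15.0
    elif design != "stable":                 # DGP1: constant theta = 10 (assumed)
        raise ValueError(f"unknown design: {design}")
    return theta

def simulate_x(design, T=100, seed=0):
    """Draw x_t = theta_t + eps_t with eps_t ~ N(0, 4)."""
    rng = np.random.default_rng(seed)
    eps = rng.normal(0.0, 2.0, size=T)       # standard deviation 2, variance 4
    return theta_path(design, T) + eps

x_stable = simulate_x("stable")
x_shift = simulate_x("shift")
```

Feeding such samples into an implied-probability routine and averaging over replications is one way to reproduce the kind of weight profiles discussed in this section.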

In contrast, the next two examples consist of structural change through the moment conditions. Following Hall and Horowitz (1996) and Gregory, Lamarche and Smith (2002), we study a simulated environment with the CRRA specification presented above, making a distributional assumption on consumption growth, $x_t$, with i.i.d. data and $n = 100$. This is DGP5. In particular, we assume that consumption growth follows a $N(0, \sigma^2 = 0.16)$. There is a single parameter to be estimated, $\gamma$, the coefficient of CRRA, and two moment conditions are used: $E_t \exp[-\gamma \ln x_{t+1} - 9\sigma^2/2 + (3 - \gamma) z_t] = 1$ and $E_t\, z_t \{\exp[-\gamma \ln x_{t+1} - 9\sigma^2/2 + (3 - \gamma) z_t] - 1\} = 0$ with $z_t \sim N(0, \sigma^2)$. The moment conditions are satisfied when $\gamma = 3$. The structural break occurs in period 51 and is summarized by a shift in $\gamma$ from 3 to 4.

Lastly, as in Ghysels et al. (1997), we consider the estimation of an autoregressive parameter using two moment conditions when the DGP is an AR(1) ($x_t = 0.5 x_{t-1} + \epsilon_t$) for $t \le 50$, and an ARMA(1, 2) otherwise ($x_t = 0.5 x_{t-1} + \epsilon_t + 0.5 \epsilon_{t-2}$). There are 100 observations and $\epsilon_t \sim N(0, 1)$. This is DGP6. The two instruments used are the first and second lags of $x_t$.

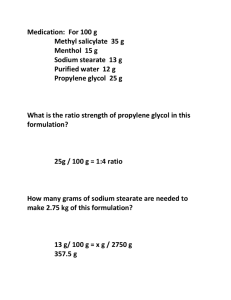

Figure 1 shows the average of the vector of implied probabilities π over 10,000 replications. The key feature of these figures is that when there is no break, the weights fluctuate around 1/100. With a structural break in the parameter or in the moment conditions, however, more importance is given to observations (and moment conditions) for which there is no break. This paper focuses on the information contained in the estimated implied probabilities to detect these two types of structural change.

Now, following the recent econometric literature on GEL (see Caner (2004), Newey and Smith (2004), Smith (2004), Caner (2005), Guggenberger and Smith (2005) and Ramalho and Smith (2005)), we let $\rho(\phi)$ be a function of a scalar $\phi$ that is concave on its domain, an open interval $\Phi$ that contains 0. Also, let $\tilde\Gamma_T(\theta) = \{\gamma : \gamma' g(x_t, \theta) \in \Phi,\ t = 1, \ldots, T\}$. Then, the GEL estimator is a solution to the problem

$$\tilde\theta_T = \arg\min_{\theta \in \Theta}\; \sup_{\gamma \in \tilde\Gamma_T(\theta)}\; \sum_{t=1}^{T} \frac{\left[\rho(\gamma' g(x_t, \theta)) - \rho_0\right]}{T} \tag{5}$$

where $\rho_j(\phi) = \partial^j \rho(\phi)/\partial \phi^j$ and $\rho_j = \rho_j(0)$ for $j = 0, 1, 2, \ldots$. Under this formulation a number of estimators can be obtained. First, the ET estimator of $\theta$ is found by setting $\rho(\phi) = -\exp(\phi)$. Second, the EL estimator of $\theta$ is found by setting $\rho(\phi) = \ln(1 - \phi)$. Third, the continuously updated estimator of Hansen et al. (1996), as opposed to the two-step estimator presented above, can also be obtained from the GEL formulation by using a quadratic function for $\rho(\phi)$.

A similar adjustment for the dynamic structure of $g(x_t, \theta)$ can also be made in the GEL context (see Kitamura and Stutzer (1997), Smith (2000), Smith (2004) and Guggenberger and Smith (2005)). The adjustment consists of smoothing the original moment conditions $g(x_t, \theta)$. Define the smoothed moment conditions as

$$g_{tT}(\theta) = \frac{1}{S_T} \sum_{s=t-T}^{t-1} k\!\left(\frac{s}{S_T}\right) g(x_{t-s}, \theta)$$

for $t = 1, \ldots, T$, where $S_T$ is a bandwidth parameter and $k(\cdot)$ a kernel function, and define $k_j = \int_{-\infty}^{\infty} k(a)^j\, da$. In the time series context, the criterion is then given by:

$$\sum_{t=1}^{T} \frac{\left[\rho(k \gamma' g_{tT}(\theta)) - \rho_0\right]}{T} \tag{6}$$

where $k = k_1/k_2$ (see Smith (2004)).

3 Full and Partial-Samples GMM and GEL Estimators

To establish the asymptotic distribution theory of tests for structural change based on implied probabilities, we need to elaborate on the specification of the parameter vector in our generic setup. We will consider parametric models indexed by parameters $(\beta, \delta)$ where $\beta \in B$, with $B \subset \mathbb{R}^r$ and $\delta \in \Delta \subset \mathbb{R}^{\nu}$. Following Andrews (1993), we make a distinction between pure structural change, when no subvector $\delta$ appears and the entire parameter vector is subject to structural change under the alternative, and partial structural change, which corresponds to cases where only a subvector $\beta$ is subject to structural change under the alternative. The generic null can be written as follows:

$$H_0 : \beta_t = \beta_0 \qquad \forall t = 1, \ldots, T. \tag{7}$$

The tests we will consider assume as alternative that at some point in the sample there is a single structural break, for instance:

$$\beta_t = \begin{cases} \beta_1(s) & t = 1, \ldots, [sT] \\ \beta_2(s) & t = [sT] + 1, \ldots, T \end{cases}$$

where $s$ determines the fraction of the sample before and after the assumed break point and $[\cdot]$ denotes the greatest integer function. The separation $[Ts]$ represents a possible breakpoint which is governed by an unknown parameter $s$. Hence, we will consider a setup with a parameter vector which encompasses any kind of partial or pure structural change involving a single breakpoint. In particular, we consider a $p$-dimensional parameter vector $\theta = (\beta_1', \beta_2', \delta')'$ where $\beta_1$ and $\beta_2 \in B \subset \mathbb{R}^r$ and $\theta \in \Theta = B \times B \times \Delta \subset \mathbb{R}^p$ where $p = 2r + \nu$. The parameters $\beta_1$ and $\beta_2$ apply to the samples before and after the presumed breakpoint and the null implies that:

$$H_0 : \beta_1 = \beta_2 = \beta_0. \tag{8}$$

We will formulate all our models in terms of $\theta$. Special cases could be considered whenever restrictions are imposed in the general parametric formulation. One such restriction would be that $\theta_0 = (\beta_0', \beta_0')'$, which would correspond to the null of a pure structural change hypothesis. Once we have defined the moment conditions we will also translate this into over-identifying restrictions and relate it to structural change tests, following the analysis of Sowell (1996) and Hall and Sen (1999).

3.1 Definitions

We need to impose restrictions on the admissible class of functions and processes involved in estimation to guarantee well-behaved asymptotic properties of GMM estimators using the entire data sample or subsamples of observations. We discuss a set of regularity conditions required to obtain weak convergence of partial sample GMM estimators to a function of Brownian motions. To streamline the presentation we only summarize the assumptions and provide a detailed description of them in Appendix A. We now formally define the standard GMM estimator using the full sample.

Definition 3.1. The full sample Generalized Method of Moments estimator $\{\tilde\theta_T\}$ is a sequence of random vectors such that:

$$\tilde\theta_T = \arg\min_{\theta \in \Theta}\; g_T(\theta)'\, \hat W_T\, g_T(\theta)$$

where $\hat W_T$ is a random positive definite symmetric $q \times q$ matrix.


The optimal weighting matrix W is defined to be the inverse of Ω which was defined in Section 2.

The optimal weighting matrix can be estimated consistently using methods developed by Gallant (1987),

Andrews and Monahan (1992) and Newey and West (1994), among several others.

Several tests for structural change involve partial-sample GMM estimators defined by Andrews (1993).

We consider again the two subsamples, the first based on observations t = 1, . . . , [T s] and the second

covering t = [T s] + 1, . . . , T where s ∈ S ⊂ (0, 1). The partial-sample GMM estimators for s ∈ S based

on the first and the second subsamples are formally defined as:

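Definition 3.2. A partial-sample Generalized Method of Moments estimator $\{\hat\theta_T(s)\}$ is a sequence of random vectors such that:

$$\hat\theta_T(s) = \arg\min_{\theta \in \Theta}\; g_T(\theta, s)'\, \hat W_T(s)\, g_T(\theta, s)$$

for all $s \in S$. Define $g_t(\theta, s) = (g(x_t, \beta_1, \delta)', 0')' \in \mathbb{R}^{2q \times 1}$ for $t = 1, \ldots, [Ts]$ and $g_t(\theta, s) = (0', g(x_t, \beta_2, \delta)')' \in \mathbb{R}^{2q \times 1}$ for $t = [Ts] + 1, \ldots, T$ such that

$$g_T(\theta, s) = \frac{1}{T}\sum_{t=1}^{T} g_t(\theta, s) = \frac{1}{T}\sum_{t=1}^{[Ts]} \begin{bmatrix} g(x_t, \beta_1, \delta) \\ 0 \end{bmatrix} + \frac{1}{T}\sum_{t=[Ts]+1}^{T} \begin{bmatrix} 0 \\ g(x_t, \beta_2, \delta) \end{bmatrix}$$

and $\hat W_T(s)$ is a random positive definite symmetric $2q \times 2q$ matrix.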

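The partial-sample optimal weighting matrix is defined as the inverse of $\Omega(s)$ where

$$\Omega(s) = \lim_{T \to \infty} Var\left(\frac{1}{\sqrt{T}} \begin{bmatrix} \sum_{t=1}^{[Ts]} g(x_t, \theta_0) \\ \sum_{t=[Ts]+1}^{T} g(x_t, \theta_0) \end{bmatrix}\right)$$

which under the null (8) is asymptotically equal to

$$\Omega(s) = \begin{bmatrix} s\,\Omega & 0 \\ 0 & (1 - s)\,\Omega \end{bmatrix}.$$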
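The stacking used in the partial-sample setup can be made concrete with a short sketch. It takes as given two $T \times q$ arrays of moment contributions, one evaluated at $(\beta_1, \delta)$ and one at $(\beta_2, \delta)$; the function names and the toy numbers are hypothetical and serve only to show the block structure.

```python
import numpy as np

def stacked_partial_moments(g1, g2, s):
    """Build the T x 2q array with rows g_t(theta, s).

    Rows t <= [Ts] hold (g(x_t, beta1, delta)', 0')',
    rows t >  [Ts] hold (0', g(x_t, beta2, delta)')'.
    """
    g1 = np.asarray(g1, dtype=float)
    g2 = np.asarray(g2, dtype=float)
    T, q = g1.shape
    k = int(np.floor(T * s))                 # breakpoint [Ts]
    out = np.zeros((T, 2 * q))
    out[:k, :q] = g1[:k]
    out[k:, q:] = g2[k:]
    return out

def omega_s_under_null(omega, s):
    """Block-diagonal limit diag(s * Omega, (1 - s) * Omega)."""
    q = omega.shape[0]
    out = np.zeros((2 * q, 2 * q))
    out[:q, :q] = s * omega
    out[q:, q:] = (1.0 - s) * omega
    return out

# Toy inputs (illustrative only): T = 8 observations, q = 2 moments, s = 0.25.
g1_demo = np.arange(16, dtype=float).reshape(8, 2)
g2_demo = -g1_demo
stacked = stacked_partial_moments(g1_demo, g2_demo, 0.25)
g_T_s = stacked.mean(axis=0)                 # g_T(theta, s)
omega_s = omega_s_under_null(np.eye(2), 0.25)
```

The first $q$ entries of `g_T_s` only use observations up to $[Ts]$ and the last $q$ entries only use later observations, each divided by the full sample size $T$, as in Definition 3.2.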

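To characterize the asymptotic distribution we define the following matrices:

$$G^{\beta} = \lim_{T \to \infty} \frac{1}{T}\sum_{t=1}^{T} E\, \partial g(x_t, \beta_0, \delta_0)/\partial \beta' \in \mathbb{R}^{q \times r},$$

$$G^{\delta} = \lim_{T \to \infty} \frac{1}{T}\sum_{t=1}^{T} E\, \partial g(x_t, \beta_0, \delta_0)/\partial \delta' \in \mathbb{R}^{q \times \nu},$$

$$G(s) = \begin{bmatrix} s\,G^{\beta} & 0 & s\,G^{\delta} \\ 0 & (1 - s)\,G^{\beta} & (1 - s)\,G^{\delta} \end{bmatrix} \in \mathbb{R}^{2q \times (2r + \nu)}.$$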

In the GEL setting, the parameter vector is augmented by a vector of auxiliary parameters $\gamma$ where each element of this vector is associated with an element of the smoothed moment conditions $g_{tT}(\theta)$. Under the null of no structural change relative to the specification of the model, the generic null hypothesis for this vector of auxiliary parameters can be written as follows:

$$H_0 : \gamma_t = \gamma_0 = 0 \qquad \forall t = 1, \ldots, T. \tag{9}$$

As for the parameter vector $\beta$, the tests we will consider assume as alternative that at some point in the sample there is a single structural break, namely:

$$\gamma_t = \begin{cases} \gamma_1 & t = 1, \ldots, [sT] \\ \gamma_2 & t = [sT] + 1, \ldots, T \end{cases}$$

Thus under the null $H_0 : \gamma_1 = \gamma_2 = \gamma_0 = 0$. We will show later that a structural change in $\gamma$ is associated with instability in the over-identifying restrictions.

We now formally define the Generalized Empirical Likelihood (GEL) estimator using the full sample.

Definition 3.3. Let $\rho(\phi)$ be a function of a scalar $\phi$ that is concave on its domain, an open interval $\Phi$ that contains 0. Also, let $\tilde\Gamma_T(\theta) = \{\gamma : k \gamma' g_{tT}(\theta) \in \Phi,\ t = 1, \ldots, T\}$ with $k = k_1/k_2$. Then, the full sample GEL estimator $\{\tilde\theta_T\}$ is a sequence of random vectors such that:

$$\tilde\theta_T = \arg\min_{\theta \in \Theta}\; \sup_{\gamma \in \tilde\Gamma_T(\theta)}\; \sum_{t=1}^{T} \frac{\left[\rho(k \gamma' g_{tT}(\theta)) - \rho_0\right]}{T} \tag{10}$$

where $\rho_j(\phi) = \partial^j \rho(\phi)/\partial \phi^j$ and $\rho_j = \rho_j(0)$ for $j = 0, 1, 2, \ldots$.

The criterion is normalized so that ρ1 = ρ2 = −1 (see Smith (2004)). As mentioned earlier, the GEL

estimator admits a number of special cases recently proposed in the econometrics literature. The CUE

of Hansen, Heaton and Yaron (1996) corresponds to a quadratic function ρ(·). The EL estimator (Qin

and Lawless, 1994) is a GEL estimator with ρ(φ) = ln(1 − φ). The ET estimator (Kitamura and Stutzer

1997) is obtained with ρ(φ) = − exp(φ).
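The normalization $\rho_1 = \rho_2 = -1$ is easy to verify numerically for the three special cases. In the sketch below the EL and ET functions are those given in the text; for the CUE the text only says "quadratic", so the specific form $\rho(\phi) = -(1 + \phi)^2/2$ used by Newey and Smith (2004) is assumed. The dictionary `RHO` and the helper `derivatives_at_zero` are hypothetical names.

```python
import numpy as np

# GEL carrier functions; the CUE form is the quadratic of Newey and Smith (2004).
RHO = {
    "EL": lambda v: np.log(1.0 - v),
    "ET": lambda v: -np.exp(v),
    "CUE": lambda v: -0.5 * (1.0 + v) ** 2,
}

def derivatives_at_zero(rho, h=1e-4):
    """Central finite-difference approximations of rho_1(0) and rho_2(0)."""
    d1 = (rho(h) - rho(-h)) / (2.0 * h)
    d2 = (rho(h) - 2.0 * rho(0.0) + rho(-h)) / h ** 2
    return d1, d2

normalization = {name: derivatives_at_zero(rho) for name, rho in RHO.items()}
```

All three functions give first and second derivatives at zero approximately equal to $-1$, so the estimators share the same first-order behavior near $\gamma = 0$ and differ only in higher-order terms.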

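More precisely, the GEL estimator is obtained as the solution to a saddle point problem. Firstly, the criterion is maximized for given $\theta$. Thus,

$$\tilde\gamma(\theta) = \arg\sup_{\gamma \in \tilde\Gamma_T}\; \sum_{t=1}^{T} \frac{\left[\rho(k \gamma' g_{tT}(\theta)) - \rho_0\right]}{T}.$$

Secondly, the GEL estimator $\tilde\theta_T$ is given by the following minimization problem:

$$\tilde\theta_T = \arg\min_{\theta \in \Theta}\; \sum_{t=1}^{T} \frac{\left[\rho(k \tilde\gamma(\theta)' g_{tT}(\theta)) - \rho_0\right]}{T}.$$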

From now on, following Kitamura and Stutzer (1997) and Guggenberger and Smith (2005), we focus on the truncated kernel defined by

$$k(x) = 1 \text{ if } |x| \le 1 \quad \text{and} \quad k(x) = 0 \text{ otherwise}$$

to obtain

$$g_{tT}(\theta) = \frac{1}{2K_T + 1} \sum_{j=-K_T}^{K_T} g(x_{t-j}, \theta).$$

To handle the endpoints in the smoothing we use the approach of ? which sets

$$g_{tT}(\theta) = \frac{1}{2K_T + 1} \sum_{j=\max\{t-T,\, -K_T\}}^{\min\{t-1,\, K_T\}} g(x_{t-j}, \theta).$$

Following Smith (2004), we can easily show for this kernel that $k_1 = 1$ and $k_2 = 1$. A consistent estimator of the long run covariance matrix is given by:

$$\tilde\Omega_T(\theta) = \frac{2K_T + 1}{T} \sum_{t=1}^{T} g_{tT}(\tilde\theta)\, g_{tT}(\tilde\theta)'.$$
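The truncated-kernel smoothing with the endpoint rule, together with the long run covariance estimator built from it, can be sketched as follows. The function names `smooth_moments` and `smoothed_long_run_cov` are hypothetical, and the loop-based implementation favors readability over speed.

```python
import numpy as np

def smooth_moments(g, K):
    """Truncated-kernel smoothing of the rows of g with endpoint handling.

    Row t (1-based) averages g(x_{t-j}) over j = max(t-T, -K), ..., min(t-1, K),
    always dividing by 2K + 1, so endpoint rows use fewer terms.
    """
    g = np.asarray(g, dtype=float)
    T = g.shape[0]
    out = np.empty_like(g)
    for t in range(T):                       # 0-based index for period t + 1
        lo, hi = max(0, t - K), min(T - 1, t + K)
        out[t] = g[lo:hi + 1].sum(axis=0) / (2 * K + 1)
    return out

def smoothed_long_run_cov(g, K):
    """Omega_tilde = ((2K + 1) / T) * sum_t g_tT g_tT'."""
    gs = smooth_moments(g, K)
    return (2 * K + 1) / g.shape[0] * gs.T @ gs

# Illustrative check with constant moments: interior rows are unchanged,
# while the first row averages only K + 1 = 3 of the 2K + 1 = 5 terms.
ones_demo = np.ones((10, 1))
smoothed_demo = smooth_moments(ones_demo, K=2)
omega_smooth = smoothed_long_run_cov(np.random.default_rng(0).normal(size=(50, 2)), K=3)
```

Setting $K_T = 0$ returns the original moment contributions, in which case the estimator reduces to the usual outer-product form without any autocorrelation correction.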

The weights obtained using this type of kernel are similar to those obtained with the Bartlett kernel estimator of the long run covariance matrix of the moment conditions. Define also the derivative of the smoothed moment conditions as:

$$G_{tT}(\theta) = \frac{1}{2K_T + 1} \sum_{j=-K_T}^{K_T} \frac{\partial g}{\partial \theta'}(x_{t-j}, \theta).$$

Now consider a possible breakpoint $[Ts]$. Define the vector of auxiliary parameters $\gamma = (\gamma_1', \gamma_2')'$ where $\gamma_1$ is the vector of auxiliary parameters for the first part of the sample, i.e. $t = 1, \ldots, [Ts]$, and $\gamma_2$ for the second part of the sample, $t = [Ts] + 1, \ldots, T$. The partial-sample GEL estimators for $s \in S$ based on the first and the second subsamples are formally defined as:

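Definition 3.4. A partial-sample Generalized Empirical Likelihood (PS-GEL) estimator $\{\hat\theta_T(s)\}$ is a sequence of random vectors such that:

$$\hat\theta_T(s) = \arg\min_{\theta \in \Theta}\; \sup_{\gamma \in \hat\Gamma_T(\theta)}\; \sum_{t=1}^{T} \frac{\left[\rho(\gamma(s)' g_{tT}(\theta, s)) - \rho_0\right]}{T}$$

$$= \arg\min_{\theta \in \Theta}\; \sup_{\gamma \in \hat\Gamma_T(\theta)} \left\{ \sum_{t=1}^{[Ts]} \frac{\left[\rho(\gamma_1' g_{tT}(\beta_1, \delta)) - \rho_0\right]}{T} + \sum_{t=[Ts]+1}^{T} \frac{\left[\rho(\gamma_2' g_{tT}(\beta_2, \delta)) - \rho_0\right]}{T} \right\}$$

for all $s \in S$, where $g_{tT}(\theta, s) = (g_{tT}(\beta_1, \delta)', 0')' \in \mathbb{R}^{2q \times 1}$ for $t = 1, \ldots, [Ts]$ and $g_{tT}(\theta, s) = (0', g_{tT}(\beta_2, \delta)')' \in \mathbb{R}^{2q \times 1}$ for $t = [Ts] + 1, \ldots, T$, with $\gamma(s) = (\gamma_1', \gamma_2')' \in \mathbb{R}^{2q \times 1}$.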

Existence of partial-sample GEL estimators can be established under standard conditions. As explained earlier, the GEL estimators are obtained as the solution to a saddle point problem. Let us examine the first order conditions of this saddle point problem for the partial-sample GEL. Firstly, the first order conditions corresponding to the Lagrange multiplier $\gamma$ are obtained from the maximization of the partial-sample GEL criterion for a given $\beta, \delta$. Thus,

$$\gamma_1(\beta_1, \delta) = \arg\sup_{\gamma \in \hat\Gamma_T(\theta)}\; \sum_{t=1}^{[Ts]} \frac{\left[\rho(\gamma_1(\beta_1, \delta)' g_{tT}(\beta_1, \delta)) - \rho_0\right]}{T},$$

$$\gamma_2(\beta_2, \delta) = \arg\sup_{\gamma \in \hat\Gamma_T(\theta)}\; \sum_{t=[Ts]+1}^{T} \frac{\left[\rho(\gamma_2(\beta_2, \delta)' g_{tT}(\beta_2, \delta)) - \rho_0\right]}{T}.$$

The first order conditions can then be evaluated at $\hat\beta_1, \hat\delta$ for the first part of the sample, $t = 1, \ldots, [Ts]$, to obtain $\hat\gamma_1 = \gamma(\hat\beta_1, \hat\delta)$, and at $\hat\beta_2, \hat\delta$ for the second part, $t = [Ts] + 1, \ldots, T$, to obtain $\hat\gamma_2 = \gamma(\hat\beta_2, \hat\delta)$. The corresponding first order conditions are given by:

$$\frac{1}{T}\sum_{t=1}^{[Ts]} \rho_1\!\left(\hat\gamma_1(\beta_1, \delta)' g_{tT}(\beta_1, \delta)\right) g_{tT}(\beta_1, \delta) = 0,$$

$$\frac{1}{T}\sum_{t=[Ts]+1}^{T} \rho_1\!\left(\hat\gamma_2(\beta_2, \delta)' g_{tT}(\beta_2, \delta)\right) g_{tT}(\beta_2, \delta) = 0.$$

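Secondly, the partial-sample GEL estimators $\hat\beta_1, \hat\beta_2, \hat\delta$ are the minimizers of the partial-sample GEL criterion defined above. The corresponding first order conditions are:

$$\frac{1}{T}\sum_{t=1}^{[Ts]} \rho_1\!\left(\hat\gamma_1(\hat\beta_1, \hat\delta)' g_{tT}(\hat\beta_1, \hat\delta)\right) G^{\beta}_{tT}(\hat\beta_1, \hat\delta)'\, \hat\gamma_1(\hat\beta_1, \hat\delta) = 0,$$

$$\frac{1}{T}\sum_{t=[Ts]+1}^{T} \rho_1\!\left(\hat\gamma_2(\hat\beta_2, \hat\delta)' g_{tT}(\hat\beta_2, \hat\delta)\right) G^{\beta}_{tT}(\hat\beta_2, \hat\delta)'\, \hat\gamma_2(\hat\beta_2, \hat\delta) = 0,$$

and

$$\frac{1}{T}\sum_{t=1}^{T} \rho_1\!\left(\hat\gamma(\hat\theta(s), s)' g_{tT}(\hat\theta(s), s)\right) G^{\delta}_{tT}(\hat\theta(s), s)'\, \hat\gamma(\hat\theta(s), s) = 0. \tag{11}$$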

Let $\{B(s) : s \in [0, 1]\}$ denote a $q$-dimensional vector of mutually independent standard Brownian motions on $[0, 1]$, and define

$$J(s) = \begin{bmatrix} \Omega^{1/2} B(s) \\ \Omega^{1/2} \left(B(1) - B(s)\right) \end{bmatrix}.$$

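Theorem 3.1. Under Assumptions 7.1 to 7.10, every sequence of PS-GEL estimators $\{\hat\theta(\cdot), \hat\gamma(\cdot), T \ge 1\}$ satisfies

$$\sqrt{T}\left(\hat\theta(\cdot) - \theta_0\right) \Rightarrow \left(G(\cdot)' \Omega(\cdot)^{-1} G(\cdot)\right)^{-1} G(\cdot)' \Omega(\cdot)^{-1} J(\cdot),$$

$$\frac{\sqrt{T}}{2K_T + 1}\, \hat\gamma(\cdot) \Rightarrow \left(\Omega(\cdot)^{-1} - \Omega(\cdot)^{-1} G(\cdot) \left(G(\cdot)' \Omega(\cdot)^{-1} G(\cdot)\right)^{-1} G(\cdot)' \Omega(\cdot)^{-1}\right) J(\cdot)$$

as a process indexed by $s \in S$, where $S$ has closure in $(0, 1)$, and the sequence of GEL estimators $\hat\theta(\cdot)$ and the sequence of auxiliary parameter estimators $\hat\gamma(\cdot)$ are asymptotically uncorrelated.

Proof: see Appendix.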

We now present the corresponding implied probabilities, as defined by Back and Brown (1993) and Smith (2004), for the most commonly used full and partial-sample estimators. Following Back and Brown (1993), the full sample GMM implied probabilities are defined as:

$$\tilde\pi_t(\tilde\theta_T) = \frac{1}{T} - \frac{1}{T-p}\left[\tilde J_{tT}(\tilde\theta_T) - \bar J_T(\tilde\theta_T)\right]' \hat\Omega_T(\tilde\theta_T)^{-1}\, \bar g_T(\tilde\theta_T) \tag{12}$$

with $\bar J_T(\tilde\theta_T) = \frac{1}{T}\sum_{t=1}^{T} \tilde J_{tT}(\tilde\theta_T)$ and

$$\tilde J_{tT}(\tilde\theta_T) = \sum_{j=1}^{T} \kappa(|t-j|)\, g(x_{t-j}, \tilde\theta_T),$$

where $\kappa(|t-j|)$ is a real-valued weighting function and $\bar g_T(\theta) = \frac{1}{T}\sum_{t=1}^{T} g(x_t, \theta)$. In practice, however, some of the estimated probabilities may be negative in finite samples, although these probabilities are asymptotically positive. Antoine, Bonnal, and Renault (2007) propose a shrinkage procedure, defined as a weighted average of the standard 2S-GMM implied probabilities ($1/T$) and the computed implied probabilities, to guarantee the non-negativity of these implied probabilities in finite samples.

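Implied probabilities for the full sample ET and EL estimators are respectively given by:

$$\tilde\pi_t^{ET}(\tilde\theta_T) = \frac{\exp[\tilde\gamma' g_{tT}(\tilde\theta_T)]}{\sum_{t=1}^{T} \exp[\tilde\gamma' g_{tT}(\tilde\theta_T)]} \tag{13}$$

and

$$\tilde\pi_t^{EL}(\tilde\theta_T) = \frac{1}{T\left[1 + \tilde\gamma' g_{tT}(\tilde\theta_T)\right]}. \tag{14}$$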

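The corresponding unrestricted partial-sample implied probabilities are defined for $s \in S$ as:

$$\hat\pi_t(\hat\theta_T(s)) = \frac{1}{T} - \frac{1}{T-p}\left[\tilde J_{tT}(\hat\theta_T(s), s) - \bar J_T(\hat\theta_T(s), s)\right]' \hat\Omega_T(s)^{-1}\, \bar g_T(\hat\theta_T(s), s) \tag{15}$$

with $\bar J_T(\hat\theta_T(s), s) = \frac{1}{T}\sum_{t=1}^{T} \tilde J_{tT}(\hat\theta_T(s), s)$ and

$$\tilde J_{tT}(\hat\theta_T(s), s) = \sum_{j=1}^{T} \kappa(|t-j|)\, g_{t-j}(\hat\theta_T(s), s),$$

where $\kappa(|t-j|)$ is a real-valued weighting function and $\bar g_T(\hat\theta_T(s), s) = \frac{1}{T}\sum_{t=1}^{T} g_t(\hat\theta_T(s), s)$. Finally,

$$\hat\pi_t^{ET}(\hat\theta_T(s)) = \frac{\exp[\hat\gamma(s)' g_{tT}(\hat\theta_T(s), s)]}{\sum_{t=1}^{T} \exp[\hat\gamma(s)' g_{tT}(\hat\theta_T(s), s)]} \tag{16}$$

and

$$\hat\pi_t^{EL}(\hat\theta_T(s)) = \frac{1}{T\left[1 + \hat\gamma(s)' g_{tT}(\hat\theta_T(s), s)\right]}. \tag{17}$$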

The purpose of the next section is to refine the null hypothesis of no structural change. Such a

refinement will enable us to construct various tests for structural change in the spirit of Sowell (1993,

1996a) and Hall and Sen (1999). In a first subsection we refine the null hypothesis, a second subsection

is devoted to tests for parameter constancy, a final subsection covers tests for stability of over-identifying

restrictions.

3.2 Refining the Null Hypothesis

The moment conditions for the full sample under the null can be written as: Egt (θ0 ) = 0, ∀t = 1, . . . , T.

Following Sowell (1993, 1996a), we can project the moment conditions on the subspace identifying the

parameters and the subspace of over-identifying restrictions. In particular, considering the (standardized)

moment conditions for the full sample GMM estimator, such a decomposition corresponds to:

Ω−1/2 Egt (θ0 ) = PG Ω−1/2 Egt (θ0 ) + (Iq − gF )Ω−1/2 Egt (θ0 ),

£

¤−1 0 −1/2

where PG = Ω−1/2 G G0−1 G

GΩ

. The first term is the projection identifying the parameter vector

and the second term is the projection for the over-identifying restrictions. The projection argument enables

us to refine the null hypothesis (8). For instance, following Hall and Sen (1999) we can consider the null,

for the case of a single breakpoint, which separates the identifying restrictions across the two subsamples:

\[
H_0^{I}(s): \begin{cases} P_G\, \Omega^{-1/2} E[g_t(\theta_0)] = 0 & \forall t = 1, \ldots, [Ts] \\ P_G\, \Omega^{-1/2} E[g_t(\theta_0)] = 0 & \forall t = [Ts]+1, \ldots, T \end{cases}
\]

Moreover, the overidentifying restrictions are stable if they hold before and after the breakpoint. This is

formally stated as H0O (s) = H0O1 (s) ∩ H0O2 (s) with:

\[
H_0^{O1}(s): (I_q - P_G)\, \Omega^{-1/2} E[g_t(\theta_0)] = 0 \quad \forall t = 1, \ldots, [Ts]
\]

\[
H_0^{O2}(s): (I_q - P_G)\, \Omega^{-1/2} E[g_t(\theta_0)] = 0 \quad \forall t = [Ts]+1, \ldots, T
\]
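The projection argument can be checked numerically. The sketch below assumes an arbitrary q x p Jacobian `G` and a positive definite `omega` (both simulated here purely for illustration) and verifies that P_G is an orthogonal projection of rank p, so that a standardized moment vector splits into an identifying part and an overidentifying part.

```python
import numpy as np

def inv_sqrt(omega):
    """Symmetric inverse square root of a positive definite matrix."""
    vals, vecs = np.linalg.eigh(omega)
    return vecs @ np.diag(vals ** -0.5) @ vecs.T

def projection_PG(G, omega):
    """P_G = Omega^{-1/2} G (G' Omega^{-1} G)^{-1} G' Omega^{-1/2}."""
    A = inv_sqrt(omega) @ G                    # q x p standardized Jacobian
    return A @ np.linalg.solve(A.T @ A, A.T)   # A'A equals G' Omega^{-1} G

rng = np.random.default_rng(1)
q, p = 3, 1
G = rng.normal(size=(q, p))                    # hypothetical Jacobian
L = rng.normal(size=(q, q))
omega = L @ L.T + q * np.eye(q)                # hypothetical long-run variance
P = projection_PG(G, omega)

m = inv_sqrt(omega) @ rng.normal(size=q)       # a standardized moment vector
ident_part = P @ m                             # identifying restrictions
overid_part = (np.eye(q) - P) @ m              # overidentifying restrictions
```

The two parts are orthogonal and add up to the original vector, which is what allows the null hypothesis to be split into the three components below.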

The projection reveals that instability must be a result of a violation of at least one of the three hypotheses:

H0I (s), H0O1 (s) or H0O2 (s). Various tests can be constructed with local power properties against any

particular one of these three null hypotheses (and typically no power against the others). To elaborate

further on this we consider a sequence of local alternatives based on the moment conditions:

Assumption 3.1. A sequence of local alternatives is specified as:

\[
E g_t(\theta_0) = \frac{h(\eta, \tau, t/T)}{\sqrt{T}} \tag{18}
\]

where h(η, τ, r), for r ∈ [0, 1], is a q-dimensional function. The parameter τ locates structural changes

as a fraction of the sample size and the vector η defines the local alternatives.2 These local alternatives

are chosen to show that the structural change tests presented in this paper have non-trivial power against

a large class of alternatives. Also, our asymptotic results can be compared with Sowell's results for the GMM

framework.

3.3 Tests for parameter constancy

In this section we introduce several tests for parameter stability and establish their asymptotic distribution. The null hypothesis is (8), or more precisely $H_0^{I}(s)$. We present Wald, Lagrange multiplier and likelihood ratio-type statistics. Predictive tests will be discussed in the next section. For brevity, we consider only test statistics based on the optimal weighting matrix. The results obtained are valid under an arbitrary weighting matrix. The first is the usual Wald statistic, which is given by:

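\[
Wald_T(s) = T \left(\hat\beta_{1T}(s) - \hat\beta_{2T}(s)\right)' \left(\hat V_\Omega(s)\right)^{-1} \left(\hat\beta_{1T}(s) - \hat\beta_{2T}(s)\right),
\]

where $\hat V_\Omega(s) = \hat V_1(s)/s + \hat V_2(s)/(1-s)$ and $\hat V_i(s) = \left(\hat G^{\beta}_{i,tT}(s)'\, \hat\Omega_{iT}^{-1}(s)\, \hat G^{\beta}_{i,tT}(s)\right)^{-1}$ for $i = 1, 2$, corresponding to the first and the second part of the sample. For the first part of the sample:

\[
\hat G^{\beta}_{1,tT}(s) = \frac{1}{[Ts]} \sum_{t=1}^{[Ts]} \frac{\partial g_{tT}(\hat\beta_1(s), \hat\delta(s))}{\partial \beta_1'}
\]

\[
\hat\Omega_{1T}(s) = \frac{2K_T+1}{[Ts]} \sum_{t=1}^{[Ts]} g_{tT}(\hat\beta_1(s), \hat\delta(s))\, g_{tT}(\hat\beta_1(s), \hat\delta(s))'
\]

and for the second part of the sample:

\[
\hat G^{\beta}_{2,tT}(s) = \frac{1}{T-[Ts]} \sum_{t=[Ts]+1}^{T} \frac{\partial g_{tT}(\hat\beta_2(s), \hat\delta(s))}{\partial \beta_2'}
\]

\[
\hat\Omega_{2T}(s) = \frac{2K_T+1}{T-[Ts]} \sum_{t=[Ts]+1}^{T} g_{tT}(\hat\beta_2(s), \hat\delta(s))\, g_{tT}(\hat\beta_2(s), \hat\delta(s))'.
\]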
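Once the subsample estimates and their variance matrices are available, the Wald statistic is a simple quadratic form. The following sketch assumes these inputs are given; the function name and the numerical values are hypothetical.

```python
import numpy as np

def wald_stat(beta1, beta2, V1, V2, s, T):
    """Wald_T(s) = T (b1 - b2)' [V1/s + V2/(1-s)]^{-1} (b1 - b2)."""
    d = np.atleast_1d(np.asarray(beta1, dtype=float) - np.asarray(beta2, dtype=float))
    V = np.atleast_2d(V1) / s + np.atleast_2d(V2) / (1.0 - s)
    return float(T * (d @ np.linalg.solve(V, d)))

# Hypothetical scalar example: estimates 0.5 and 0.3, unit variances, break at mid-sample.
w = wald_stat(np.array([0.5]), np.array([0.3]),
              np.array([[1.0]]), np.array([[1.0]]), s=0.5, T=100)
```

In this example the combined variance is 1/0.5 + 1/0.5 = 4, so the statistic equals 100 x 0.04 / 4 = 1.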

The Lagrange Multiplier statistic does not involve estimators obtained from subsamples, rather it

relies on full sample parameter estimates. The LMT (s) simplifies to (see Andrews 1993) :

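\[
LM_T(s) = \frac{T}{s(1-s)}\, \bar g_{1T}(\tilde\theta_T, s)'\, \hat\Omega_T^{-1} \hat G^{\beta}_{tT} \left[(\hat G^{\beta}_{tT})'\, \hat\Omega_T^{-1} \hat G^{\beta}_{tT}\right]^{-1} (\hat G^{\beta}_{tT})'\, \hat\Omega_T^{-1}\, \bar g_{1T}(\tilde\theta_T, s),
\]

where

\[
\bar g_{1T}(\tilde\theta_T, s) = \frac{1}{T} \sum_{t=1}^{[Ts]} g_{tT}(\tilde\theta_T), \qquad
\hat G^{\beta}_{tT} = \frac{1}{T} \sum_{t=1}^{T} \frac{\partial g_{tT}(\tilde\beta, \tilde\delta)}{\partial \beta'}, \qquad
\hat\Omega_T = \frac{2K_T+1}{T} \sum_{t=1}^{T} g_{tT}(\tilde\beta, \tilde\delta)\, g_{tT}(\tilde\beta, \tilde\delta)'.
\]

2 The function h(·) allows for a wide range of alternative hypotheses (see Sowell (1996a)). In its generic form it can be expressed as the uniform limit of step functions, $\eta \in R^i$, $\tau \in R^j$ such that $0 < \tau_1 < \tau_2 < \ldots < \tau_j < 1$ and $\theta^*$ is in the interior of $\Theta$. Therefore it can accommodate multiple breaks.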

Thus, the LMT (s) corresponds to the projection of the moment conditions evaluated at the full sample

estimator on the subspace identifying the parameter vector β.

The LR-type statistic is defined as the difference between the objective function for the partial sample

GEL evaluated at the unrestricted estimator and at the full sample GEL:

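\[
LR_T(s) = \frac{2T}{2K_T+1} \sum_{t=1}^{T} \frac{\left[\rho(\tilde\gamma(s)' g_{tT}(\tilde\theta, s)) - \rho_0\right]}{T} - \frac{2T}{2K_T+1} \sum_{t=1}^{T} \frac{\left[\rho(\hat\gamma(s)' g_{tT}(\hat\theta(s), s)) - \rho_0\right]}{T}.
\]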

We state now the main theorem which establishes the asymptotic distribution of the Wald, LM and

LR-type test statistics under the local alternative (18).

Theorem 3.2. Under the null hypothesis H0 in (8) and Assumptions 7.1 to 7.10, the following processes

indexed by s for a given set S whose closure lies in (0, 1) satisfy:

\[
Wald_T(s) \Rightarrow Q_r(s), \quad LM_T(s) \Rightarrow Q_r(s), \quad LR_T(s) \Rightarrow Q_r(s),
\]

with

\[
Q_r(s) = \frac{BB_r(s)' BB_r(s)}{s(1-s)}
\]

and under the alternative (18)

\[
Q_r(s) = \frac{BB_r(s)' BB_r(s)}{s(1-s)} + \frac{(H(s) - sH(1))'\, \Omega^{-1/2} P_{G^\beta}\, \Omega^{-1/2} (H(s) - sH(1))}{s(1-s)},
\]

where $BB_r(s) = B_r(s) - sB_r(1)$ is a Brownian bridge, $B_r$ is an r-vector of independent Brownian motions and $P_{G^\beta} = \Omega^{-1/2} G^\beta \left[(G^\beta)' \Omega^{-1} G^\beta\right]^{-1} (G^\beta)' \Omega^{-1/2}$.

Proof: See Appendix.

The result in Theorem 3.2 tells us that the asymptotic distributions under the null of the Wald,

LR-type and LM statistics are the same as those obtained by Andrews (1993) for the GMM estimator.

When s is unknown, i.e. the case of unknown breakpoint, we can use the above result to construct

statistics across s ∈ S. In the context of maximum likelihood estimation, Andrews and Ploberger (1994)

derive asymptotically optimal tests for a Gaussian prior on the amplitude of the structural change based

on the Neyman-Pearson approach which are characterized by an average exponential form. The Sowell

(1996a) optimal tests are a generalization of the Andrews and Ploberger approach to the case of two

measures that do not admit densities. The most powerful test is given by the Radon-Nikodym derivative

of the probability measure implied by the local alternative with respect to the probability measure implied

by the null hypothesis.

The optimal average exponential form is the following:

\[
\text{Exp-}Q_T = (1+c)^{-r/2} \int \exp\left(\frac{1}{2}\, \frac{c}{1+c}\, Q_T(s)\right) dJ(s),
\]

where various choices of c determine power against close or more distant alternatives and J(·) is the weight function over the values of s ∈ S. In the case of close alternatives (c = 0), the optimal test statistic takes the average form, $\text{ave}Q_T = \int_S Q_T(s)\, dJ(s)$. For a distant alternative (c = ∞), the optimal test statistic takes the exponential form, $\exp Q_T = \log\left(\int_S \exp[\frac{1}{2} Q_T(s)]\, dJ(s)\right)$. The supremum form often used in the literature corresponds to the case where c/(1 + c) → ∞. The sup test is given by $\sup Q_T = \sup_{s \in S} Q_T(s)$.
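The three mappings are straightforward to evaluate once the statistic has been computed on a grid of candidate breakpoints. The sketch below assumes a uniform weight function J over the grid points; the function name and the toy input are hypothetical.

```python
import numpy as np

def sup_ave_exp(Q, weights=None):
    """Return (sup Q, ave Q, exp Q) for values Q(s) on a grid of breakpoints."""
    Q = np.asarray(Q, dtype=float)
    if weights is None:
        weights = np.full(Q.shape, 1.0 / Q.size)   # uniform J on the grid (assumption)
    sup_q = Q.max()
    ave_q = np.sum(weights * Q)
    m = (0.5 * Q).max()                            # log-sum-exp shift for stability
    exp_q = m + np.log(np.sum(weights * np.exp(0.5 * Q - m)))
    return sup_q, ave_q, exp_q

sup_q, ave_q, exp_q = sup_ave_exp([1.0, 4.0, 2.0])
```

By Jensen's inequality the exponential form is never smaller than half the average form, and it is never larger than half the supremum.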

The following Theorem gives the asymptotic distribution for the supremum, average and exponential mappings of $Q_T$ when $Q_T$ corresponds to the Wald, LM and LR-type tests under the null.

Theorem 3.3. Under the null hypothesis H0 in (8) and Assumptions 7.1 to 7.10, the following processes indexed by s for a given set S whose closure lies in (0,1) satisfy:

\[
\sup Q_T \Rightarrow \sup_{s \in S} Q_r(s), \quad \text{ave}Q_T \Rightarrow \int_S Q_r(s)\, dJ(s), \quad \exp Q_T \Rightarrow \log\left(\int_S \exp\left[\tfrac{1}{2} Q_r(s)\right] dJ(s)\right),
\]

with

\[
Q_r(s) = \frac{BB_r(s)' BB_r(s)}{s(1-s)}.
\]

This result is obtained through the application of the continuous mapping theorem (see Pollard

(1984)). This implies that we can rely on the critical values tabulated for the case of GMM-based tests.

For example the critical values for the statistics defined by the supremum over all breakpoints s ∈ S of

W aldT (s), LMT (s) or LRT (s) can be found in the original paper by Andrews (1993). The same is true

for the Sowell (1996a) and Andrews and Ploberger (1994) asymptotic optimal tests.
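The limiting distribution of the sup statistic can also be approximated by simulation. The following is a rough sketch, assuming a discretized Brownian bridge on a grid of `n_grid` points and a trimmed set S = [trim, 1 - trim]; the grid size, number of replications and trimming value are illustrative choices, not values taken from the paper.

```python
import numpy as np

def simulate_sup_Q(r, n_grid=500, n_rep=2000, trim=0.15, seed=0):
    """Draws of sup_{s in S} BB_r(s)'BB_r(s) / (s(1-s)) on a discrete grid."""
    rng = np.random.default_rng(seed)
    s = np.arange(1, n_grid + 1) / n_grid
    keep = (s >= trim) & (s <= 1 - trim)
    out = np.empty(n_rep)
    for i in range(n_rep):
        steps = rng.normal(scale=np.sqrt(1.0 / n_grid), size=(n_grid, r))
        B = np.cumsum(steps, axis=0)          # Brownian motion on the grid
        BB = B - s[:, None] * B[-1]           # Brownian bridge B(s) - s B(1)
        Q = np.sum(BB[keep] ** 2, axis=1) / (s[keep] * (1 - s[keep]))
        out[i] = Q.max()
    return out

draws = simulate_sup_Q(r=1)
cv95 = np.quantile(draws, 0.95)               # simulated 5% critical value
```

Because the supremum is taken over a finite grid, the simulated quantiles tend to lie slightly below the tabulated asymptotic values; a finer grid reduces this gap.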

3.4 Tests for Stability of Overidentifying Restrictions

Tests presented in the preceding section are based on the projection of the moment conditions on the subspace of identifying restrictions. In this section we are interested in testing against violations of $H_0^{O1}(s)$ or $H_0^{O2}(s)$. The local alternatives are given by the projection of the moment conditions on the subspace orthogonal to the identifying restrictions. For instance, in the case of a single breakpoint, the local alternatives by Assumption 3.1 correspond to:

\[
H_A^{O1}(s): (I_q - P_G)\, \Omega^{-1/2} E[g_t(\theta_0)] = (I_q - P_G)\, \Omega^{-1/2} \frac{\eta_1}{\sqrt{T}}, \quad t = 1, \ldots, [Ts]
\]

\[
H_A^{O2}(s): (I_q - P_G)\, \Omega^{-1/2} E[g_t(\theta_0)] = (I_q - P_G)\, \Omega^{-1/2} \frac{\eta_2}{\sqrt{T}}, \quad t = [Ts]+1, \ldots, T
\]

Sowell (1996b) introduces optimal tests for the violation of the overidentifying restrictions when the violation occurs before the breakpoint, corresponding to the alternative $H_A^{O1}$. The statistic is based on the projection of the partial sum of the full sample estimator on the appropriate subspace. Hall and Sen (1999) introduce a test for the case where the violation can occur before or after the breakpoint, i.e. $H_A^{O1}$ or $H_A^{O2}$. The statistic is based on the overidentifying restriction tests for the sample before the considered breakpoint s and for the sample after the possible breakpoint s.

We propose here statistics equivalent to those of Hall and Sen, specifically designed to detect instability before and after the possible breakpoint. The first statistic is the same as the one of Hall and Sen (1999) except that it is computed with smoothed moment conditions. The $O_T(s)$ statistic is the sum of the GMM criterion function in each subsample

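\[
O_T(s) = O1_T(s) + O2_T(s)
\]

where

\[
O1_T(s) = \left[\frac{1}{\sqrt{[Ts]}} \sum_{t=1}^{[Ts]} g_{tT}(\hat\beta_1(s), \hat\delta(s))\right]' \hat\Omega_{1T}^{-1}(s) \left[\frac{1}{\sqrt{[Ts]}} \sum_{t=1}^{[Ts]} g_{tT}(\hat\beta_1(s), \hat\delta(s))\right]
\]

and

\[
O2_T(s) = \left[\frac{1}{\sqrt{T-[Ts]}} \sum_{t=[Ts]+1}^{T} g_{tT}(\hat\beta_2(s), \hat\delta(s))\right]' \hat\Omega_{2T}^{-1}(s) \left[\frac{1}{\sqrt{T-[Ts]}} \sum_{t=[Ts]+1}^{T} g_{tT}(\hat\beta_2(s), \hat\delta(s))\right].
\]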

The GEL counterpart of $O_T(s)$ is based on the sum of the GEL objective function over both subsamples, namely:

\[
O_T^{GEL}(s) = O1_T^{GEL}(s) + O2_T^{GEL}(s)
\]

where

\[
O1_T^{GEL}(s) = \frac{2[Ts]}{2K_T+1} \sum_{t=1}^{[Ts]} \frac{\left[\rho(\lambda_1(s)' g_{tT}(\hat\beta_1(s), \hat\delta(s))) - \rho_0\right]}{[Ts]}
\]

and

\[
O2_T^{GEL}(s) = \frac{2(T-[Ts])}{2K_T+1} \sum_{t=[Ts]+1}^{T} \frac{\left[\rho(\lambda_2(s)' g_{tT}(\hat\beta_2(s), \hat\delta(s))) - \rho_0\right]}{T-[Ts]}.
\]

The duality between overidentifying restrictions and the auxiliary Lagrange multiplier parameters γ(·) for the partial-sample estimation allows us to propose a new structural change test for overidentifying restrictions based on γ(·). This statistic is defined as follows:

\[
LM_T^{O}(s) = LM1_T^{O}(s) + LM2_T^{O}(s)
\]

where

\[
LM1_T^{O}(s) = \frac{T}{(2K_T+1)^2}\, \hat\gamma_{1T}(s)'\, \hat\Omega_{1T}(s)\, \hat\gamma_{1T}(s)
\]

and

\[
LM2_T^{O}(s) = \frac{T}{(2K_T+1)^2}\, \hat\gamma_{2T}(s)'\, \hat\Omega_{2T}(s)\, \hat\gamma_{2T}(s).
\]

The equivalence with the overidentifying test of instability results from the fact that $\sqrt{T}/(2K_T+1)\, \hat\gamma_1(s)$ is asymptotically equivalent at the first order to $\Omega_1(s)^{-1} T^{1/2} \sum_{t=1}^{[Ts]} g_{tT}(\hat\beta_1(s), \hat\delta(s))$ and $\sqrt{T}/(2K_T+1)\, \hat\gamma_2(s)$ to $\Omega_2(s)^{-1} T^{1/2} \sum_{t=[Ts]+1}^{T} g_{tT}(\hat\beta_2(s), \hat\delta(s))$.

The following theorem provides the asymptotic distribution under the null and the alternative of those test statistics for the supremum mapping, $\sup Q_T^{O} = \sup_{s \in S} Q_T^{O}(s)$, the average mapping, $\text{ave}Q_T^{O} = \int_S Q_T^{O}(s)\, dJ(s)$, and the exponential mapping, $\exp Q_T^{O} = \log\left(\int_S \exp[\frac{1}{2} Q_T^{O}(s)]\, dJ(s)\right)$, with $Q_T^{O}(s)$ equal to $O_T(s)$, $O_T^{GEL}(s)$ or $LM_T^{O}(s)$.

Theorem 3.4. Under the null of no structural change and Assumptions 7.1 to 7.10, the following processes indexed by s for a given set S whose closure lies in (0,1) satisfy:

\[
\sup Q_T^{O} \Rightarrow \sup_{s \in S} Q_{q-p}(s), \quad \text{ave}Q_T^{O} \Rightarrow \int_S Q_{q-p}(s)\, dJ(s), \quad \exp Q_T^{O} \Rightarrow \log\left(\int_S \exp\left[\tfrac{1}{2} Q_{q-p}(s)\right] dJ(s)\right),
\]

with

\[
Q_{q-p}(s) = \frac{B_{q-p}(s)' B_{q-p}(s)}{s} + \frac{[B_{q-p}(1) - B_{q-p}(s)]' [B_{q-p}(1) - B_{q-p}(s)]}{1-s}
\]

and under the alternative (18)

\[
Q_{q-p}(s) = \frac{B_{q-p}(s)' B_{q-p}(s)}{s} + \frac{[B_{q-p}(1) - B_{q-p}(s)]' [B_{q-p}(1) - B_{q-p}(s)]}{1-s}
+ \frac{H(s)'\, \Omega^{-1/2} (I - P_G)\, \Omega^{-1/2} H(s)}{s} + \frac{(H(1) - H(s))'\, \Omega^{-1/2} (I - P_G)\, \Omega^{-1/2} (H(1) - H(s))}{1-s},
\]

where $B_{q-p}(s)$ is a (q − p)-dimensional vector of independent Brownian motions and $P_G = \Omega^{-1/2} G \left(G' \Omega^{-1} G\right)^{-1} G' \Omega^{-1/2}$.

Proof: See Appendix.

The asymptotic critical values for those test statistics are tabulated in Hall and Sen (1999). The last two terms in the asymptotic distribution under the alternative given in Theorem 3.4 show that the test statistics have non-trivial power to detect instability before and after the possible breakpoint.

4 Tests for a structural change based on implied probabilities

We first consider a test statistic based on the partial sum of the implied probabilities evaluated at the restricted estimator for the GMM and GEL. The test statistic proposed to detect structural change is given by

\[
IPS_T^{j}(s) = \frac{1}{2K_T+1} \sum_{t=1}^{[Ts]} \left(T \pi_t^{j}(\tilde\theta_T) - 1\right)^2.
\]
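Given a vector of full-sample implied probabilities, the statistic is a scaled partial sum of squared deviations from the empirical weights. The sketch below assumes the probabilities are supplied as an array `pi` of length T; the function name and the toy input are hypothetical.

```python
import numpy as np

def ips_stat(pi, s, K):
    """IPS_T(s) = (2K+1)^{-1} * sum_{t <= [Ts]} (T * pi_t - 1)^2."""
    pi = np.asarray(pi, dtype=float)
    T = pi.size
    n = int(np.floor(T * s))           # [Ts], number of observations before the break
    return np.sum((T * pi[:n] - 1.0) ** 2) / (2 * K + 1)

# Toy example with T = 4: only the first observation deviates from 1/T before s = 0.5.
pi_toy = np.array([0.5, 0.25, 0.125, 0.125])
stat_toy = ips_stat(pi_toy, s=0.5, K=0)
stat_flat = ips_stat(np.full(100, 0.01), s=0.4, K=2)
```

When all implied probabilities equal 1/T the statistic is zero, so large values signal that the moment conditions reweight some part of the sample.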

The next Theorem establishes the asymptotic distribution of this statistic.

Theorem 4.1. Under Assumptions 7.1 to 7.10, the following processes indexed by s for a given set S whose closure lies in (0, 1) satisfy

\[
IPS_T^{ET}(s),\ IPS_T^{EL}(s),\ IPS_T^{GMM}(s) \Rightarrow BB_p(s)' BB_p(s) + B_{(q-p)}(s)' B_{(q-p)}(s)
\]

and under the alternative (18)

\[
IPS_T^{ET}(s),\ IPS_T^{EL}(s),\ IPS_T^{GMM}(s) \Rightarrow BB_p(s)' BB_p(s) + (H(s) - sH(1))'\, \Omega^{-1/2} P_G\, \Omega^{-1/2} (H(s) - sH(1)) + B_{(q-p)}(s)' B_{(q-p)}(s) + H(s)'\, \Omega^{-1/2} (I - P_G)\, \Omega^{-1/2} H(s),
\]

where $B_{(q-p)}(s)$ is a (q − p)-vector of standard Brownian motion and $BB_p(s) = B_p(s) - sB_p(1)$ is a p-vector of Brownian bridge.

See the appendix for the proof.

This Theorem shows that the structural change test based on this quadratic form of the partial-sample sum of the implied probabilities evaluated at the full sample estimator combines two components. The first component, a function of the Brownian bridges, corresponds to a parameter stability test and the second component to a test of the stability of the overidentifying restrictions. This test statistic based on implied probabilities can therefore be viewed as a more general test of misspecification due to instability than just a test for parameter variation. The predictive tests proposed by Ghysels, Guay and Hall (1997) share the same properties.

The following Theorem gives the asymptotic distribution for the supremum, average and exponential mappings of $Q_T^{IP}$, where $Q_T^{IP}$ corresponds to the statistic presented above based on the implied probabilities.

Theorem 4.2. Under the null hypothesis H0 in (8) and Assumptions 7.1 to 7.10, the following processes indexed by s for a given set S whose closure lies in (0,1) satisfy:

\[
\sup Q_T^{IP} \Rightarrow \sup_{s \in S} Q_{q-p}(s), \quad \text{ave}Q_T^{IP} \Rightarrow \int_S Q_{q-p}(s)\, dJ(s), \quad \exp Q_T^{IP} \Rightarrow \log\left(\int_S \exp\left[\tfrac{1}{2} Q_{q-p}(s)\right] dJ(s)\right),
\]

with

\[
Q_{q-p}(s) = BB_p(s)' BB_p(s) + B_{(q-p)}(s)' B_{(q-p)}(s).
\]

This result is obtained through the application of the continuous mapping theorem (see Pollard

(1984)).

The simulated critical values for πTET (s), πTEL (s), πTGM M (s) can be found in XX.

In the sequel, we propose structural change tests based on implied probabilities designed specifically to detect instability in the parameters of interest or in the overidentifying restrictions.

4.1 Tests for a structural change in the parameters based on implied probabilities

The statistic proposed to detect parameter instability is based on the difference between the unrestricted implied probabilities (appropriately scaled) and the corresponding restricted implied probabilities. More precisely, the test statistics are defined as:

\[
IPS_T^{I,j}(s) = \frac{1}{2K_T+1} \sum_{t=1}^{T} \left(T \pi_t^{j}(\hat\theta_T(s)) - T \pi_t^{j}(\tilde\theta_T)\right)^2
\]

for j = ET, EL or GMM.

Theorem 4.3. Under the null hypothesis H0 in (8) and Assumptions 7.1 to 7.10, the following processes indexed by s for a given set S whose closure lies in (0, 1) satisfy:

\[
IPS_T^{I,ET}(s) \Rightarrow Q_r(s), \quad IPS_T^{I,EL}(s) \Rightarrow Q_r(s), \quad IPS_T^{I,GMM}(s) \Rightarrow Q_r(s),
\]

with

\[
Q_r(s) = \frac{BB_r(s)' BB_r(s)}{s(1-s)}
\]

and under the alternative (18)

\[
Q_r(s) = \frac{BB_r(s)' BB_r(s)}{s(1-s)} + \frac{(H(s) - sH(1))'\, \Omega^{-1/2} P_{G^\beta}\, \Omega^{-1/2} (H(s) - sH(1))}{s(1-s)},
\]

where $BB_r(s) = B_r(s) - sB_r(1)$ is a Brownian bridge, $B_r$ is an r-vector of independent Brownian motions and $P_{G^\beta} = \Omega^{-1/2} G^\beta \left[(G^\beta)' \Omega^{-1} G^\beta\right]^{-1} (G^\beta)' \Omega^{-1/2}$.

Proof: See Appendix B.

The Theorem shows that the proposed test based on implied probabilities is asymptotically equivalent, under the null and the alternative, to the Wald, LM and LR tests for parameter instability. However, its small sample properties can differ from those of these more standard tests.

By Lemma 7.4, the two following modified statistics are equivalent to the one defined above:

\[
IPSM1_T^{I,j}(s) = \frac{1}{2K_T+1} \sum_{t=1}^{T} \frac{\left(T \pi_t^{j}(\hat\theta_T(s)) - T \pi_t^{j}(\tilde\theta_T)\right)^2}{T \pi_t^{j}(\hat\theta_T(s))}
\]

and

\[
IPSM2_T^{I,j}(s) = \frac{1}{2K_T+1} \sum_{t=1}^{T} \frac{\left(T \pi_t^{j}(\hat\theta_T(s)) - T \pi_t^{j}(\tilde\theta_T)\right)^2}{T \pi_t^{j}(\tilde\theta_T)}
\]

for j = ET, EL or GMM.

4.2 Tests for a structural change for overidentifying restrictions based on implied probabilities

Ramalho and Smith (2005) introduce, in an i.i.d. setting, a specification test for moment conditions based on implied probabilities, similar in spirit to the classical Pearson chi-square goodness-of-fit tests. The test is based on the following statistic:

\[
\sum_{t=1}^{T} \left(T \pi_t^{j}(\hat\theta_{1T}(s)) - 1\right)^2.
\]

They show that this statistic is asymptotically equivalent to the overidentifying moment restrictions J-test proposed by Hansen (1982). As shown by Ghysels and Hall, the J-test has no power to detect structural change, a property that is shared by the specification test proposed by those authors.

Here, we propose a statistic specifically designed to detect instability of the overidentifying restrictions based on implied probabilities. The statistic is asymptotically equivalent to the ones proposed by Hall and Sen (1999) and thus shares their asymptotic properties. More precisely, the test statistic is defined as:

\[
IPS_T^{O,j}(s) = \frac{1}{2K_T+1} \sum_{t=1}^{[Ts]} \left([Ts]\, \pi_t^{j}(\hat\theta_{1T}(s)) - 1\right)^2 + \frac{1}{2K_T+1} \sum_{t=[Ts]+1}^{T} \left((T-[Ts])\, \pi_t^{j}(\hat\theta_{2T}(s)) - 1\right)^2 \tag{19}
\]

for j = ET, EL or GM M . The statistic is the sum of the overidentifying restrictions statistic proposed

by Ramalho and Smith (2005) for the first and the second part of the sample adapted for dependent

data.
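Statistic (19) can be sketched as follows, assuming the implied probabilities of each subsample have already been computed from the corresponding partial-sample estimators. The function name and the inputs `pi1`, `pi2` are hypothetical.

```python
import numpy as np

def ips_overid_stat(pi1, pi2, K):
    """Sum over both subsamples of (n_i * pi_t - 1)^2, scaled by 1 / (2K + 1)."""
    pi1 = np.asarray(pi1, dtype=float)     # implied probabilities, t = 1, ..., [Ts]
    pi2 = np.asarray(pi2, dtype=float)     # implied probabilities, t = [Ts]+1, ..., T
    n1, n2 = pi1.size, pi2.size            # [Ts] and T - [Ts]
    part1 = np.sum((n1 * pi1 - 1.0) ** 2)
    part2 = np.sum((n2 * pi2 - 1.0) ** 2)
    return (part1 + part2) / (2 * K + 1)

stat_null = ips_overid_stat(np.full(40, 1 / 40), np.full(60, 1 / 60), K=2)
stat_alt = ips_overid_stat(np.full(4, 0.25), np.array([0.3, 0.2, 0.25, 0.25]), K=0)
```

Each subsample is scaled by its own size, so that the reference value for every scaled probability is one in both parts of the sample.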

The next Theorem establishes the asymptotic distribution of the partial sample overidentifying moment restriction test.

Theorem 4.4. Under Assumptions 7.1 to 7.10, the following processes indexed by s for a given set S whose closure lies in (0, 1) satisfy

\[
IPS_T^{O,ET}(s) \Rightarrow Q_{q-p}(s), \quad IPS_T^{O,EL}(s) \Rightarrow Q_{q-p}(s), \quad IPS_T^{O,GMM}(s) \Rightarrow Q_{q-p}(s)
\]

with, under the null of no structural change,

\[
Q_{q-p}(s) = \frac{B_{(q-p)}(s)' B_{(q-p)}(s)}{s} + \frac{[B_{(q-p)}(1) - B_{(q-p)}(s)]' [B_{(q-p)}(1) - B_{(q-p)}(s)]}{1-s}
\]

and under the alternative (18)

\[
Q_{q-p}(s) = \frac{B_{(q-p)}(s)' B_{(q-p)}(s)}{s} + \frac{[B_{(q-p)}(1) - B_{(q-p)}(s)]' [B_{(q-p)}(1) - B_{(q-p)}(s)]}{1-s}
+ \frac{H(s)'\, \Omega^{-1/2} (I - P_G)\, \Omega^{-1/2} H(s)}{s} + \frac{[H(1) - H(s)]'\, \Omega^{-1/2} (I - P_G)\, \Omega^{-1/2} [H(1) - H(s)]}{1-s},
\]

where $B_{(q-p)}(s)$ is a (q − p)-vector of standard Brownian motion.

See the appendix for the proof.

The Theorem shows that the proposed test statistic can detect instability occurring in the moment conditions before and after the breakpoint. Indeed, the term $H(s)'\, \Omega^{-1/2} (I - P_G)\, \Omega^{-1/2} H(s) / s$ corresponds to a structural change in the moment conditions before the breakpoint s, while the term $[H(1) - H(s)]'\, \Omega^{-1/2} (I - P_G)\, \Omega^{-1/2} [H(1) - H(s)] / (1-s)$ corresponds to a structural change after the breakpoint s.

By Lemma 7.4, an asymptotically equivalent statistic to (19) in the spirit of the Neyman-modified chi-square is given by:

\[
IPSM_T^{O,j}(s) = \frac{1}{2K_T+1} \sum_{t=1}^{[Ts]} \frac{\left([Ts]\, \pi_t^{j}(\hat\theta_{1T}(s)) - 1\right)^2}{[Ts]\, \pi_t^{j}(\hat\theta_{1T}(s))} + \frac{1}{2K_T+1} \sum_{t=[Ts]+1}^{T} \frac{\left((T-[Ts])\, \pi_t^{j}(\hat\theta_{2T}(s)) - 1\right)^2}{(T-[Ts])\, \pi_t^{j}(\hat\theta_{2T}(s))}
\]

for j = ET, EL or GMM.

5 Robust structural change tests to weak identification based on implied probabilities

To be completed

6 Simulation Evidence

To evaluate the performance of the test statistics we use the data generating processes of Ghysels et al. (1997) and of Hall and Sen (1999). We set p = 1 and generate $x_t$ as either an AR(1) process

\[
x_t = \theta_i x_{t-1} + u_t \tag{20}
\]

or as an ARMA(1,2) process

\[
x_t = \theta_i x_{t-1} + u_t + \alpha \epsilon_{t-2} \tag{21}
\]

for t = 1, . . . , T . Structural change in the identifying restrictions is studied by considering different values

of θi where i = 1, 2 denotes the first or second subsample while structural stability in the overidentifying

restrictions is studied by considering nonzero values of α in the second subsample. The change is set at

T /2. We use two moment conditions, q = 2, constructed using the first and second lags of xt . In the

above, ut ∼ N (0, 1). The following table summarizes the different parametrization and is taken from

(Hall and Sen 1999):

Table 1: Data Generating Processes

Hypothesis    DGP      θ                     α
H_0^SS(r)     DGP1     θ1 = θ2 = 0           α = 0
              DGP2     θ1 = θ2 = 0.4         α = 0
              DGP3     θ1 = θ2 = 0.8         α = 0
H_A^I(r)      DGP4     θ1 = 0, θ2 = 0.4      α = 0
              DGP5     θ1 = 0, θ2 = 0.8      α = 0
              DGP6     θ1 = 0.4, θ2 = 0.8    α = 0
H_A^O(r)      DGP7     θ1 = θ2 = 0.4         α = 0.5
              DGP8     θ1 = θ2 = 0.4         α = 0.9
              DGP9     θ1 = θ2 = 0.4         α = −0.5
              DGP10    θ1 = θ2 = 0.4         α = −0.9
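The design above can be simulated along the following lines. This is only a sketch under stated assumptions: the moving-average term uses the same innovation as u_t (the text does not define it separately), the break occurs at T/2, α is nonzero only in the second subsample, and a burn-in period is discarded. The function name, the burn-in length and the seed are hypothetical.

```python
import numpy as np

def simulate_dgp(T, theta1, theta2, alpha=0.0, seed=0, burn=100):
    """Simulate x_t = theta_i x_{t-1} + u_t + alpha_i u_{t-2} with a break at T/2."""
    rng = np.random.default_rng(seed)
    n = T + burn
    u = rng.normal(size=n)                   # u_t ~ N(0, 1)
    x = np.zeros(n)
    for t in range(2, n):
        second = (t - burn) >= T // 2        # True in the second subsample
        theta = theta2 if second else theta1
        a = alpha if second else 0.0         # MA term only after the break
        x[t] = theta * x[t - 1] + u[t] + a * u[t - 2]
    return x[burn:]                          # drop the burn-in observations

x_null = simulate_dgp(100, 0.4, 0.4)               # DGP2: no structural change
x_param = simulate_dgp(100, 0.0, 0.8)              # DGP5: change in theta
x_overid = simulate_dgp(100, 0.4, 0.4, alpha=0.9)  # DGP8: change in overidentifying restrictions
```

With the same seed, the parameter-change series coincides with white noise before the break, which makes it easy to see where the break enters.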

TO BE COMPLETED


Figure 1: Simulated Implied Probabilities

[Figure 1 consists of six panels titled Constant, Pulse, Break, Shift, CRRA Parameter and AR Parameter. Each panel plots the simulated implied probabilities (vertical axis) against the observation index, from 0 to 100 (horizontal axis).]

Notes: The constant case refers to no break, the pulse case to a one-time temporary jump at observation 50, the break case to a change in the mean for 60 time periods and the shift case to a permanent change in the mean at observation 20. For the CRRA case, a preference parameter is estimated using two moments. The moment conditions are violated at observation 51. For the AR case an autoregressive parameter is estimated. The data generating process is represented by an AR(1) process for t ≤ 50 and by an ARMA(1, 2) otherwise. The sample is of size 100 in all cases. The horizontal lines correspond to the empirical weights of 1/100.

7 Appendix

TO BE IMPROVED AND COMPLETED

7.1 Assumptions

Assumption 7.1. The process $\{x_t\}_{t=1}^{\infty}$ is a finite dimensional stationary and strong mixing process with mixing coefficients satisfying $\sum_{j=1}^{\infty} \alpha(j)^{(\nu-1)/\nu} < \infty$ for some $\nu > 1$.

Assumption 7.2. (a) $\theta_0 \in \Theta$ is the unique solution to $E[g(x_t, \theta)] = 0$ where Θ is compact, $g(x_t, \theta)$ is continuous at each θ ∈ Θ with probability one; (b) $E\left[\sup_{\theta \in \Theta} \|g(x_t, \theta)\|^{\alpha}\right] < \infty$ for some $\alpha > \max\left(4\nu, \frac{1}{\eta}\right)$; (c) Ω(θ) is finite and positive definite for all θ ∈ Θ.

Define the smoothed moment conditions as:

\[
g_{tT}(\theta) = \frac{1}{S_T} \sum_{s=t-T}^{t-1} k\left(\frac{s}{S_T}\right) g_{t-s}(x_t, \theta)
\]

for an appropriate kernel. From now on, we consider the uniform kernel proposed by Kitamura and Stutzer (1997):

\[
g_{tT}(\theta) = \frac{1}{2K_T+1} \sum_{s=-K_T}^{K_T} g_{t-s}(x_t, \theta)
\]
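A minimal sketch of the uniform-kernel smoothing, assuming the unsmoothed moments are stored as a T x q array and that terms falling outside the sample (t - s < 1 or t - s > T) are simply dropped while the divisor 2K_T + 1 is kept, as in the truncated sums used in the proofs below. The function name is hypothetical.

```python
import numpy as np

def smooth_moments(g, K):
    """g_tT = (2K+1)^{-1} * sum_{s=-K}^{K} g_{t-s}, dropping terms outside the sample."""
    g = np.asarray(g, dtype=float)
    T = g.shape[0]
    # Cumulative sums with a leading row of zeros give fast window sums.
    csum = np.vstack([np.zeros((1, g.shape[1])), np.cumsum(g, axis=0)])
    lo = np.maximum(np.arange(T) - K, 0)          # first index in each window
    hi = np.minimum(np.arange(T) + K + 1, T)      # one past the last index
    return (csum[hi] - csum[lo]) / (2 * K + 1)

g_const = np.ones((10, 1))
g_smooth = smooth_moments(g_const, K=2)
```

For a constant series the interior smoothed values are unchanged, while the first and last K values are shrunk toward zero because their windows are truncated.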

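Assumption 7.3. $K_T^2/T \to 0$ and $K_T \to \infty$ as $T \to \infty$.

Assumption 7.4. (a) ρ(·) is twice continuously differentiable and concave on its domain, an open interval V containing 0, $\rho_1 = \rho_2 = -1$; (b) γ ∈ Γ where $\Gamma = \left[\gamma : \|\gamma\| \le D \left(T/K_T^2\right)^{-\zeta}\right]$ for some D > 0 with $\frac{1}{2} > \zeta > \frac{1}{2\alpha\eta}$.

Under Assumptions 7.1 to 7.4, Smith (2004) shows that for the full sample estimators $\tilde\theta_T \stackrel{p}{\to} \theta_0$, $\tilde\gamma_T \stackrel{p}{\to} 0$, $\|\tilde\gamma_T\| = O_p\left[\left(T/(2K_T+1)^2\right)^{-1/2}\right]$ and $\left\|\frac{1}{T} \sum_{t=1}^{T} g_{tT}(\tilde\theta_T)\right\| = O_p(T^{-1/2})$. Now, let $G(\theta) = E[\partial g(x_t, \theta)/\partial \theta']$ and $G = G(\theta_0)$.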

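Assumption 7.5. g(·, θ) is differentiable in a neighborhood $\Theta_0$ of $\theta_0$, $E\left[\sup_{\theta \in \Theta_0} \|\partial g(x_t, \theta)/\partial \theta'\|^{\alpha/(\alpha-1)}\right] < \infty$ and rank(G) = p.

Assumptions 7.1 to 7.5 yield the asymptotic distribution of $T^{1/2}(\tilde\theta_T - \theta_0)$ and $\left(T/(2K_T+1)^2\right)^{1/2} \tilde\gamma_T$ (see Smith 2004). Also, under these assumptions:

\[
\frac{1}{T} \sum_{t=1}^{T} G_{tT}(\tilde\theta_T) \stackrel{p}{\to} G
\]

and

\[
\tilde\Omega_T(\tilde\theta_T) = (2K_T+1)\, \frac{1}{T} \sum_{t=1}^{T} g_{tT}(\tilde\theta_T)\, g_{tT}(\tilde\theta_T)' \stackrel{p}{\to} \Omega.
\]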

The following high level assumptions are sufficient to derive the weak convergence under the null of the

PS-GEL estimators θ̂T (s) and γ̂T (s). These assumptions are similar to the ones in Andrews (1993).

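Assumption 7.6. $Var\left(\frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} g(x_t, \theta_0)\right) \to s\Omega$ ∀s ∈ [0, 1].

Assumption 7.7. $\sup_{s \in S} \|\hat\Omega_T(s) - \Omega(s)\| \stackrel{p}{\to} 0$ where Ω(s) is defined in Section 3.1.

Assumption 7.8. $\sup_{s \in S} \|\hat\theta_T(s) - \theta_0\| \stackrel{p}{\to} 0$ for some $\theta_0$ in the interior of Θ and $\sup_{s \in S} \|\hat\gamma_T(s) - 0\| \stackrel{p}{\to} 0$.

Assumption 7.9. $\lim_{T \to \infty} \frac{1}{T} \sum_{t=1}^{[Ts]} E\, \partial g(x_t, \theta_0)/\partial \theta'$ exists uniformly over s ∈ S and equals sG ∀s ∈ S.

Assumption 7.10. $G(s)' \Omega(s)^{-1} G(s)$ is nonsingular ∀s ∈ S and has eigenvalues bounded away from zero.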

7.2 Lemmas

Lemma 7.1. Under Assumptions 7.1 to 7.1, the asymptotic distribution of the smoothed moment conditions under the null is given by:

\[
\Omega^{-1/2} \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} g_{tT}(\theta_0) \Rightarrow B(s),
\]

where B(s) is a q-dimensional vector of standard Brownian motion.

Proof of Lemma 7.1. First, by a well established result:

\[
\Omega^{-1/2} \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} g_t(\theta_0) \Rightarrow B(s)
\]

where B(s) is a q-vector of standard Brownian motion.

Second, the smoothed moment conditions are defined as:

\[
\Omega^{-1/2} \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} \frac{1}{2K_T+1} \sum_{j=-K_T}^{K_T} g_{t-j}(\theta_0).
\]

Considering the "endpoint effect" introduced by the extra $K_T$ terms, we have:

\[
\sum_{t=1}^{[Ts]} \sum_{j=-K_T}^{K_T} \frac{1}{2K_T+1}\, g_{t-j}(\theta_0)
= \sum_{t=1}^{[Ts]} \frac{1}{2K_T+1} \sum_{j=\max\{t-T, -K_T\}}^{\min\{t-1, K_T\}} g_{t-j}(\theta_0)
\]

\[
= \sum_{t=K_T+1}^{[Ts]-K_T} g_t(\theta_0) + \sum_{t=1}^{K_T} \frac{t+K_T}{2K_T+1}\, g_t(\theta_0) + \sum_{t=[Ts]-K_T+1}^{[Ts]} \frac{[Ts]-t+K_T+1}{2K_T+1}\, g_t(\theta_0)
\]

\[
= \sum_{t=1}^{[Ts]} g_t(\theta_0) + \sum_{t=1}^{K_T} \frac{t-K_T-1}{2K_T+1}\, g_t(\theta_0) + \sum_{t=[Ts]-K_T+1}^{[Ts]} \frac{[Ts]-t-K_T}{2K_T+1}\, g_t(\theta_0)
\]

which implies that

\[
\sum_{t=1}^{[Ts]} g_t(\theta_0) = \sum_{t=1}^{[Ts]} g_{tT}(\theta_0) + O_p\left(\frac{K_T^2}{2K_T+1}\right).
\]

Under the assumptions that $\max_{1 \le t \le T} \|g_t(\theta_0)\| = o_p(T^{1/2})$ and $K_T^2/T \to 0$, we get

\[
\Omega^{-1/2} \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} g_t(\theta_0) = \Omega^{-1/2} \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} g_{tT}(\theta_0) + o_p(1),
\]

which yields the asymptotic equivalence.
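The endpoint decomposition can be verified numerically. The sketch below takes the full-sample case ([Ts] = T), a scalar moment series and arbitrary values T = 50 and K_T = 3, all chosen for illustration only, and compares the sum of the truncated smoothed moments with the raw sum plus the two endpoint correction terms.

```python
import numpy as np

rng = np.random.default_rng(0)
T, K = 50, 3                       # illustrative sample size and bandwidth (T > 2K)
g = rng.normal(size=T)             # hypothetical scalar moment series g_1, ..., g_T

# Left side: sum over t of the smoothed moments, truncating each window at the sample ends.
lhs = sum(g[max(t - K, 0):min(t + K, T - 1) + 1].sum() for t in range(T)) / (2 * K + 1)

# Right side: raw sum plus the two endpoint correction terms (1-based t as in the text).
t = np.arange(1, T + 1)
head = np.sum((t[:K] - K - 1) / (2 * K + 1) * g[:K])       # t = 1, ..., K
tail = np.sum((T - t[-K:] - K) / (2 * K + 1) * g[-K:])     # t = T-K+1, ..., T
rhs = g.sum() + head + tail
```

Only 2K_T observations carry a weight different from one, each with a correction smaller than one in absolute value, which is the source of the remainder term in the proof.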

The following Lemma provides the asymptotic distribution of the smoothed moment conditions under the general sequence of local alternatives appearing in Assumption 3.1.

Lemma 7.2. Let Assumptions 7.1 to 7.10 and Assumption 3.1 hold, then

\[
\frac{1}{\sqrt{T}}\, \Omega^{-1/2} \sum_{t=1}^{[Ts]} g_{tT}(\theta_0) \Rightarrow B(s) + \Omega^{-1/2} H(s)
\]

where $H(s) = \int_0^s h(\eta, \tau, u)\, du$ and B(s) is a q-dimensional vector of standard Brownian motion.

Proof of Lemma 7.2

Under the alternative (18), the sample smoothed moments satisfy:

\[
\Omega^{-1/2} \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} \frac{1}{2K_T+1} \sum_{j=-K_T}^{K_T} \left(g_{t-j}(\theta_0) - \frac{h((t-j)/T)}{\sqrt{T}}\right) \Rightarrow B(s),
\]

where $h(t/T) \equiv h(\eta, \tau, t/T)$ to reduce the notation. The left hand side term can be rewritten as:

\[
\Omega^{-1/2} \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} \frac{1}{2K_T+1} \sum_{j=-K_T}^{K_T} g_{t-j}(\theta_0) - \Omega^{-1/2} \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} \frac{1}{2K_T+1} \sum_{j=-K_T}^{K_T} \frac{h((t-j)/T)}{\sqrt{T}}.
\]

Let us now examine the last term,

\[
\frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} \frac{1}{2K_T+1} \sum_{j=-K_T}^{K_T} \frac{h((t-j)/T)}{\sqrt{T}} = \frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} \frac{1}{2K_T+1} \sum_{j=\max\{t-T, -K_T\}}^{\min\{t-1, K_T\}} \frac{h((t-j)/T)}{\sqrt{T}}
\]

which equals

\[
\frac{1}{T} \sum_{t=K_T+1}^{[Ts]-K_T} h(t/T) + \frac{1}{T} \sum_{t=1}^{K_T} \frac{t+K_T}{2K_T+1}\, h(t/T) + \frac{1}{T} \sum_{t=[Ts]-K_T+1}^{[Ts]} \frac{[Ts]-t+K_T+1}{2K_T+1}\, h(t/T)
\]

\[
= \frac{1}{T} \sum_{t=1}^{[Ts]} h(t/T) + \frac{1}{T} \sum_{t=1}^{K_T} \frac{t-K_T-1}{2K_T+1}\, h(t/T) + \frac{1}{T} \sum_{t=[Ts]-K_T+1}^{[Ts]} \frac{[Ts]-t-K_T}{2K_T+1}\, h(t/T).
\]

The first term of the last equality converges to $\int_0^s h(\nu)\, d\nu$. Under the assumption that $K_T^2/T \to 0$, the last two terms converge to zero. The result follows.

The next Lemma gives the asymptotic distribution of the partial sum of the full sample estimator for the general local alternatives based on smoothed moment conditions. With this result, we can obtain the asymptotic distribution for optimal tests for the stability of overidentifying restrictions with GEL. The asymptotic distributions are the same as those derived by Sowell and by Hall and Sen.

Lemma 7.3. Under Assumptions 7.1 to 7.10 and under the alternative (18),

\[
\frac{1}{\sqrt{T}} \sum_{t=1}^{[Ts]} g_{tT}(\tilde\theta_T) \Rightarrow \Omega^{1/2} B(s) + H(s) - sG(G' \Omega^{-1} G)^{-1} G' \Omega^{-1} \left[\Omega^{1/2} B(1) + H(1)\right],
\]

where B(s) is a q-dimensional vector of mutually independent Brownian motions.

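Lemma 7.4. Under Assumptions 7.1 to 7.10,

\[
\pi_t^{j}(\tilde\theta_T) = \frac{1}{T} - \frac{1}{T}\, g_{tT}(\tilde\theta_T)'\, \Omega^{-1}\, \frac{2K_T+1}{T} \sum_{t=1}^{T} g_{tT}(\tilde\theta_T) + O_p(T^{-2})
\]

and $\pi_t^{j}(\tilde\theta_T) = \frac{1}{T} + o_p(1)$ uniformly in t = 1, . . . , T and j = ET, EL or GMM.

Proof of Lemma 7.4

We need to derive the asymptotic distributions of the implied probabilities for the three following cases evaluated at the full sample estimator (by (3.1) of Smith (2004)):

• Exponential Tilting:

\[
\pi_t^{ET}(\tilde\theta_T) = \frac{\exp(\tilde\gamma_T' g_{tT}(x_t, \tilde\theta_T))}{\sum_{t=1}^{T} \exp(\tilde\gamma_T' g_{tT}(x_t, \tilde\theta_T))}
\]

• Empirical Likelihood:

\[
\pi_t^{EL}(\tilde\theta_T) = \frac{1}{T\left[1 + \tilde\gamma_T' g_{tT}(x_t, \tilde\theta_T)\right]}
\]

• Standard Generalized Method of Moments (see Back and Brown (1993)):

\[
\pi_t^{GMM}(\tilde\theta_T) = \frac{1}{T} - \frac{1}{T} \left[g(x_t, \tilde\theta_T) - \frac{1}{T} \sum_{t=1}^{T} g(x_t, \tilde\theta_T)\right]' \left[Var\, g(x_t, \tilde\theta_T)\right]^{-1} \frac{1}{T} \sum_{t=1}^{T} g(x_t, \tilde\theta_T)
\]

First, consider the implied probabilities for the exponential tilting. A mean value expansion around $(\tilde\theta_T, \tilde\gamma_T) = (\tilde\theta_T, 0)$ yields:

\[
\pi_t^{ET}(\tilde\theta_T) = \frac{1}{T} + \left[\frac{g_{tT}(\tilde\theta_T)' \exp\left(\bar\gamma_T' g_{tT}(\tilde\theta_T)\right)}{\sum_{t=1}^{T} \exp\left(\bar\gamma_T' g_{tT}(\tilde\theta_T)\right)} - \frac{\exp\left(\bar\gamma_T' g_{tT}(\tilde\theta_T)\right) \sum_{t=1}^{T} g_{tT}(\tilde\theta_T)' \exp\left(\bar\gamma_T' g_{tT}(\tilde\theta_T)\right)}{\left[\sum_{t=1}^{T} \exp\left(\bar\gamma_T' g_{tT}(\tilde\theta_T)\right)\right]^2}\right] \tilde\gamma_T + o_p(1)
\]

where $\bar\gamma_T$ lies on the line segment joining $\tilde\gamma_T$ and 0 and may differ from row to row.

Since $\tilde\gamma_T$ converges in probability to 0:

\[
\pi_t^{ET}(\tilde\theta_T) = \frac{1}{T} + \left[\frac{g_{tT}(\tilde\theta_T)}{T} - \sum_{t=1}^{T} \frac{g_{tT}(\tilde\theta_T)}{T^2}\right]' \tilde\gamma_T + o_p(1).
\]

As $\sum_{t=1}^{T} g_{tT}(\tilde\theta_T)/T = O_p(T^{-1/2})$, $g_{tT}(\tilde\theta_T) = O_p((2K_T+1)^{-1/2})$ (see Kitamura and Stutzer (1997), p. 871) and $\tilde\gamma_T = O_p((2K_T+1)/\sqrt{T})$, this yields:

\[
\pi_t^{ET}(\tilde\theta_T) = \frac{1}{T} + \frac{1}{T}\, g_{tT}(\tilde\theta_T)' \tilde\gamma_T + O_p(T^{-2})
\]

and

\[
\pi_t^{ET}(\tilde\theta_T) = \frac{1}{T} \left(1 + o_p(1)\right)
\]

uniformly in t = 1, . . . , T. Thus, we get:

\[
T \pi_t^{ET}(\tilde\theta_T) - 1 = g_{tT}(\tilde\theta_T)' \tilde\gamma_T + o_p(1) \tag{22}
\]

uniformly in t = 1, . . . , T.

By similar arguments, we can show for the empirical likelihood:

\[
T \pi_t^{EL}(\tilde\theta_T) - 1 = g_{tT}(\tilde\theta_T)' \tilde\gamma_T + o_p(1) \tag{23}
\]

uniformly in t = 1, . . . , T. Considering that $\tilde\gamma_T = -\Omega^{-1} \frac{2K_T+1}{T} \sum_{t=1}^{T} g_{tT}(\tilde\theta_T) + o_p(1)$ and the results derived in Lemma 7.1, the asymptotic equivalence with $T \pi_t^{GMM}(\tilde\theta_T) - 1$ follows.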

7.3 Proofs of Theorems

Proof of Theorem 3.1

The FOC (11) can be rewritten as

T

1 X

1

ρ1 (γ̂(θ̂, s)0 gtT (θ̂, s))GδtT (θ̂, s)0

γ̂(θ̂, s) = 0.

T t=1

2KT + 1

³

By a mean-value expansion of the first order conditions for the partial-sample GEL where ΞT = β̂10 , β̂20 , δ̂ 0 ,

0

0

0

γ̂1

γ̂2

,

2KT +1 2KT +1

and Ξ0 = (β00 , β00 , δ00 , 0, 0) :

µ

0 = −T 1/2

1

T

0

t=1 gtT (θ0 , s)

PT

¶

´

³

+ M̄ (s)T 1/2 Ξ̂T (s) − Ξ0 + op (1),

where

[T s]

T

1 X

1 X h gtT (β0 , δ0 ) i

1

gtT (θ0 , s) =

+

0

T t=1

T t=1

T

and

T

0

1 X

M̄ (s) =

¡

¢

0

T t=1 ρ1 γ̄t (s) gtT (θ̄(s), s) GtT (θ̂(s), s)0

T

X

h

t=[T s]+1

0

gtT (β0 , δ0 )

i

³

´

ρ1 γ̂t (s)0 gtT (θ̂(s), s) GtT (θ̂(s), s)0

³

´

(2KT + 1) ρ2 γ̂t (s)0 gtT (θ̂(s), s) gtT (θ̄(s), s)gtT (θ̂(s), s)0

where θ̄(s) is a random vector on the line segment joining θ̂(s) and θ0 and γ̄(s) is a random vector joining γ̂(s)

to (00 , 00 )0 .

p

Now, we need to show that M̄ (s) → M (s) where

¸

·

0

G(s)0 .

M (s) = −

G(s) Ω(s)

First, under Assumption 7.8,

¡

¢

p

max |ρ1 γ̄t (s)0 gtT (θ̄(s), s) − ρ1 (0)| → 0

1≤t≤T

and

¡

¢

p

max |ρ2 γ̄t (s)0 gtT (θ̄(s), s) − ρ2 (0)| → 0.

1≤t≤T

By a proof similar to the one in Lemma 1, we can show that

directly implies by Assumption 7.9 that

P[T s]

t=1

Gt (θ0 , s) =

T

¢

1 X ¡

p

ρ1 γ̄t (s)0 gtT (θ̄(s), s) GtT (θ̂(s), s) → −G(s).

T t=1

By Assumptions 7.6 and 7.8:

[T s]

2KT + 1 X

p

gtT (β̄1 , δ̄)gtT (β̂1 , δ̂)0 → sΩ

T

t=1

and

2KT + 1

T

T

X

p

gtT (β̂2 , δ̂)gtT (β̄2 , δ̄)0 → (1 − s)Ω

t=[T s]+1

32

P[T s]

t=1

GtT (θ0 , s) + op (1) which

´0

which results imply

T

¢

2KT + 1 X ¡

p

ρ2 γ̄t (s)0 gtT (θ̄(s), s) gtT (θ̄(s), s)gtT (θ̂(s), s)0 → −Ω(s).

T

t=1

Moreover under Assumption 7.10:

·

M (s)−1 =

−Σ(s)

H(s)0

H(s)

P (s)

¸

.

¡

¢−1

, H(s) = Σ(s)G(s)0 Ω(s)−1 and P (s) = Ω(s)−1 − Ω(s)−1 G(s)Σ(s)G(s)0 Ω(s)−1 .

where Σ(s) = G(s)0 Ω(s)−1 G(s)

As $\bar{M}(s)$ is nonsingular with probability approaching one, we obtain:

\[
\sqrt{T}\left(\hat{\Xi}_T(s) - \Xi_0\right) = -\bar{M}^{-1}(s)\left(0,\; -\sqrt{T}\,\frac{1}{T}\sum_{t=1}^{T} g_{tT}(\theta_0, s)'\right)' + o_p(1)
= -\left(H(s)', P(s)\right)' \sqrt{T}\,\frac{1}{T}\sum_{t=1}^{T} g_{tT}(\theta_0, s) + o_p(1).
\]

We also have, by Lemma 7.1,

\[
\Omega^{-1}\frac{1}{\sqrt{T}}\sum_{t=1}^{T} g_{tT}(\theta_0, s) \Rightarrow J(s)
\]

for $s \in S$. Combining the results above yields:

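\[
\sqrt{T}\left(\hat{\theta}(s) - \theta_0\right) = -\left(G(s)'\Omega(s)^{-1}G(s)\right)^{-1} G(s)'\Omega(s)^{-1} \frac{1}{\sqrt{T}}\sum_{t=1}^{T} g_{tT}(\theta_0, s) + o_p(1)
\Rightarrow -\left(G(s)'\Omega(s)^{-1}G(s)\right)^{-1} G(s)'\Omega(s)^{-1} J(s)
\]

and

\[
\sqrt{T}\left(\hat{\gamma}(s) - \gamma_0\right) = -\left(\Omega^{-1}(s) - \Omega^{-1}(s)G(s)\left(G(s)'\Omega(s)^{-1}G(s)\right)^{-1} G(s)'\Omega(s)^{-1}\right) \frac{1}{\sqrt{T}}\sum_{t=1}^{T} g_{tT}(\theta_0, s) + o_p(1)
\Rightarrow -\left(\Omega^{-1}(s) - \Omega^{-1}(s)G(s)\left(G(s)'\Omega(s)^{-1}G(s)\right)^{-1} G(s)'\Omega(s)^{-1}\right) J(s).
\]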

Proof of Theorem 3.3

The results for the $Wald_T(s)$ and $LM_T(s)$ statistics under the null can be derived directly by arguments similar to those in the proof of Theorem 3 in Andrews (1993). The asymptotic distribution under the alternative is a direct implication of Theorem 3.2. For the $LR_T(s)$ statistic, expanding the partial-sample GEL objective function evaluated at the unrestricted estimator about $\gamma = 0$ yields

\[
\frac{2T}{2K_T+1}\,\frac{1}{T}\sum_{t=1}^{T} \rho\!\left(\hat{\gamma}(s)' g_{tT}(\hat{\theta}_T(s), s)\right)
= -\frac{2T}{2K_T+1}\,\frac{1}{T}\sum_{t=1}^{T} \hat{\gamma}(s)' g_{tT}(\hat{\theta}_T(s), s)
- \frac{T}{2K_T+1}\,\frac{1}{T}\sum_{t=1}^{T} \hat{\gamma}(s)' g_{tT}(\hat{\theta}_T(s), s)\, g_{tT}(\hat{\theta}_T(s), s)'\, \hat{\gamma}(s) + o_p(1).
\]


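Since $\frac{2K_T+1}{T}\sum_{t=1}^{T} g_{tT}(\hat{\theta}_T(s), s)\, g_{tT}(\hat{\theta}_T(s), s)'$ is a consistent estimator of $\Omega(s)$ and, by Theorem 3.1, $\frac{\sqrt{T}}{2K_T+1}\hat{\gamma}(s) = -\Omega(s)^{-1}\sqrt{T}\,\frac{1}{T}\sum_{t=1}^{T} g_{tT}(\hat{\theta}_T(s), s) + o_p(1)$, we get

\[
\frac{2T}{2K_T+1}\,\frac{1}{T}\sum_{t=1}^{T} \rho\!\left(\hat{\gamma}(s)' g_{tT}(\hat{\theta}_T(s), s)\right) = T\, g_T(\hat{\theta}(s), s)'\,\Omega(s)^{-1}\, g_T(\hat{\theta}(s), s) + o_p(1).
\]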
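The link between the GEL criterion and the GMM-type quadratic form can be seen in a stripped-down case. The sketch below is an illustration under strong simplifying assumptions that are not part of the proof: no smoothing ($K_T = 0$), a single scalar moment, hypothetical data values, and the quadratic member of the GEL family, $\rho(v) = -v - v^2/2$, for which the second-order expansion is exact. In that case the maximizer over $\gamma$ is $\hat{\gamma} = -\hat{\Omega}^{-1}\bar{g}$ and twice the maximized sum equals $T\,\bar{g}^2/\hat{\Omega}$ exactly.

```python
from fractions import Fraction

def rho(v):
    # Quadratic GEL carrier function (assumption): rho(0) = 0, rho'(0) = rho''(0) = -1.
    return -v - v * v / 2

# Hypothetical scalar moment contributions evaluated at a fixed parameter value.
g = [Fraction(5, 10), Fraction(-2, 10), Fraction(9, 10), Fraction(1, 10)]
T = len(g)

g_bar = sum(g) / T                        # sample mean of the moments
omega_hat = sum(x * x for x in g) / T     # uncentred second moment, estimate of Omega
gamma_hat = -g_bar / omega_hat            # solves the first-order condition in gamma

lr_gel = 2 * sum(rho(gamma_hat * x) for x in g)   # 2 * sum_t rho(gamma_hat * g_t)
quad_form = T * g_bar * g_bar / omega_hat         # T * g_bar' Omega^{-1} g_bar
```

For other members of the GEL family the equality holds only up to an $o_p(1)$ term, which is what the expansion in the proof establishes.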

Similarly, the expansion of the partial-sample GEL objective function evaluated at the restricted estimator yields:

\[
\frac{2T}{2K_T+1}\,\frac{1}{T}\sum_{t=1}^{T} \rho\!\left(\tilde{\gamma}_t(s)' g_{tT}(\tilde{\theta}_T(s), s)\right) = T\, g_T(\tilde{\theta}(s), s)'\,\Omega(s)^{-1}\, g_T(\tilde{\theta}(s), s) + o_p(1).
\]

The $LR_T(s)$ statistic is then asymptotically equivalent to the LR statistic defined in Andrews (1993) for the standard GMM.

Proof of Theorem 3.4

First, for the statistic $O_T(s)$, the asymptotic equivalence of $\sum_{t=1}^{[Ts]} g_{tT}(\hat{\beta}_1(s), \hat{\delta}(s))$ with $\sum_{t=1}^{[Ts]} g(x_t, \hat{\beta}_1(s), \hat{\delta}(s))$, and of $\sum_{t=[Ts]+1}^{T} g_{tT}(\hat{\beta}_1(s), \hat{\delta}(s))$ with $\sum_{t=[Ts]+1}^{T} g(x_t, \hat{\beta}_1(s), \hat{\delta}(s))$, is a direct implication of Lemma 7.1 and Lemma 7.2. Given the consistency of the estimators $\hat{\Omega}_{1T}(s)$ and $\hat{\Omega}_{2T}(s)$ for $\Omega$, the results under the null and the alternative follow directly from the proofs of Theorem 2.1 and subsection A.2 in Hall and Sen (1999).

Second, for the statistic $O_T(s)^{GEL}$, as in the proof of Theorem 3.2, we can show that:

\[
\frac{2[Ts]}{2K_T+1}\sum_{t=1}^{[Ts]} \frac{\left[\rho\!\left(\lambda_1(s)' g_{tT}(\hat{\beta}_1(s), \hat{\delta}(s))\right) - \rho_0\right]}{[Ts]} = O1_T(s) + o_p(1)
\]

and

\[
\frac{2(T-[Ts])}{2K_T+1}\sum_{t=[Ts]+1}^{T} \frac{\left[\rho\!\left(\lambda_1(s)' g_{tT}(\hat{\beta}_1(s), \hat{\delta}(s))\right) - \rho_0\right]}{T-[Ts]} = O2_T(s) + o_p(1).
\]

The asymptotic distribution under the null and the alternative follows directly.

³√

´

Finally, for the statistic LMTO (s), the asymptotic equivalence between