A System for Scalable 3D Visualization and Editing of Connectomic Data

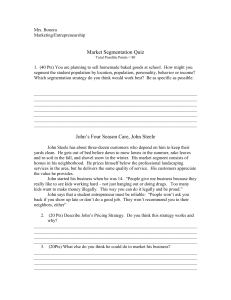
