Predicting Range of Acceptable Photographic Tonal Adjustments Ronnachai Jaroensri 7

Predicting Range of Acceptable Photographic Tonal

Adjustments

ARCHIVES

MASSACHurCrr' F

0,r

-T T7 ITE

by

Ronnachai Jaroensri

JUL 0 7 2015

B.S.,

Stanford University (2012)

LIBRARIES

Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of

Master of Science in Electrical Engineering and Computer Science at the

MASSACHUSETTS INSTITUTE OF TECHNOLOGY

June 2015

@

Massachusetts Institute of Technology 2015. All rights reserved.

Author...........

/-\

Signature redacted

Department of Electrical Engineering and Computer Science

May 19, 2015

Signature redacted

C ertified by .................... ,. .........................................

Fredo Durand

Professor

Thesis Supervisor

Signature redacted

A ccepted

by

..........................

/

I6sfi A. Kolodziejski

Chairman, Department Committee on Graduate Theses

2

Predicting Range of Acceptable Photographic Tonal Adjustments by

Ronnachai Jaroensri

Submitted to the Department of Electrical Engineering and Computer Science on May 19, 2015, in partial fulfillment of the requirements for the degree of

Master of Science in Electrical Engineering and Computer Science

Abstract

There is often more than one way to select tonal adjustment for a photograph, and different individuals may prefer different adjustments. However, selecting good adjustments is challenging.

This thesis describes a method to predict whether a given tonal rendition is acceptable for a photograph, which we use to characterize its range of acceptable adjustments. We gathered a dataset of image "acceptability" over brightness and contrast adjustments. We find that unacceptable renditions can be explained in terms of over-exposure, under-exposure, and low contrast. Based on this observation, we propose a machine-learning algorithm to assess whether an adjusted photograph looks acceptable. We show that our algorithm can differentiate unsightly renditions from reasonable ones. Finally, we describe proof-of-concept applications that use our algorithm to guide the exploration of the possible tonal renditions of a photograph.

Thesis Supervisor: Fredo Durand

Title: Professor

3

4

Acknowledgments

There had been many people who have lent their supporting hands to me throughout my graduate career thus far. Their presence had made these past few years possible. For that, I am grateful, and I would like to express my gratitude to:

* My advisor, Frddo Durand, for your mentorship, guidance, and, most importantly, your patience. You always made it seem possible, when I had not a single clue.

" Sylvain Paris and Aaron Hertzmann, for all your helpful advices and your guidance from start to the finish line. They were overwhelmingly helpful.

* Vlad Bychkovsky, for all the encouragement and the wisdom of someone who had been there before.

* Michael Gharbi and Abe Davis, for being awesome office mates and friends.

" Yichang Shih, for inspiring me to look at the problem in the right way.

* Manohar Srikanth, for inspiring me to be do better than I did yesterday.

" Kanoon Chatnuntawech, for all the intellectually stimulating conversations we had had, and making me think about my career path.

* Duong Huynh and Vinh Le, for introducing me to Boston and the new phase of my life.

" Park Sinchaisri, for the late night study sessions and the instant noodles they implied.

* Leslie Lai, for being with me through all the ups and downs.

" Pak Sutiwisesak, for being the best of all best friends.

" My parents and brother, for clapping and cheering for me from half way around the world.

5

6

Contents

1 Introduction

1.1 Related W ork . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

15

17

2 A Crowd-sourced Dataset

2.1 What is Acceptable? . . . . . . . . . . . . . . . .

. . . . . . . . . . . . . . . .

2.2 The Zero Configuration and the Adjustment Model . . . . . . . . . . . . . . . .

2.3 Data Collection Protocol . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

2.4 Data Analysis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

19

19

21

22

23

3 Predicting Acceptability

3.1 Toy Predictors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

3.2 Our Image-Based Predictor . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

25

25

26

4 Evaluation

4.1 Prediction Result . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

4.2 Going beyond Brightness and Contrast . . . . . . . . . . . . . . . . . . . . . . .

4.3 Failure Cases . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

31

33

33

34

5 Applications

5.1 Most Favorable Adjustment Prediction . . . . . . . . . . . . . . . . . . . . . . .

5.2 Smart Preset . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

7

41

41

42

5.3 Adjustment Space Exploration Assistance . . . . . . . . . . . . . . . . . . . . . . 43

6 Conclusion 45

A Adjustment Model 47

A. 1 Color Space Transformation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

A.2 Our Adjustment Model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

B Guideline For Participants 51

8

List of Figures

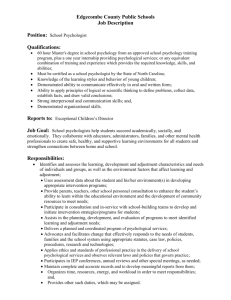

1-1 Adjustment Space Exploration. There are many valid ways to adjust an image.

Our two-dimensional adjustment space is shown on the left, with the origin (no adjustment) at the crosshairs. Four adjustments are shown in the space with X's: two acceptable, two unacceptable. The magenta curve shows the boundary between acceptable and unacceptable adjustments, obtained by crowdsourcing: outside the boundary, the images no longer look acceptable. We present a machine learning algorithm to estimate this boundary for new images. . . . . . . . . . . . . . . . . . 16

2-1 Extreme adjustment that might be seen as interesting by some users. Because answers were not consistent on those, we guided users to reject them. . . . . . . . 20

2-2 Sample unedited photographs with their corresponding data. The green dots repre-

sent acceptable adjustments, and the blue x's represent unacceptable adjustments.

The blue crosshair is zero adjustment. Shown boundaries (magenta) are calculated using SVM with radial basis function kernel on the adjustment values. . . . . . . . 23

2-3 Failure cases in tonal adjustments. Each edge of the triangle represents different failure cases: over-exposure (a), under-exposure (b), and low contrast (c). The original photograph at zero adjustment is shown in (d). . . . . . . . . . . . . . . . 24

2-4 Kernel density estimate of accepted data points. An arbitrary contour is drawn in magenta to illustrate the triangular shape, which indicates three cases of image degradation: overexposure, underexposure, and low contrast. . . . . . . . . . . . . 24

9

4-1 Our algorithm adapts to the exposure of the input photo (a). It allows it to better represent the space of acceptable adjustments (b) whereas the average-adjustment predictor includes many unacceptable samples in its acceptable range and also misses many acceptable points (c). . . . . . . . . . . . . . . . . . . . . . . . . .

31

4-2 Our Algorithm's Failure Cases The adjustment values are marked with X's in the third column. High dynamic range (a) appeared overexposed, while textured scene

(b) were allowed contrast in unusual range, causing misclassifications. . . . . . . . 32

4-3 Textured Scene. First column is images at zero setting (blue cross hair in the plot on the right). The middle column is adjusted by the values marked with X's in the right column. Textured scene were allowed contrast in unusual range, causing m isclassifications. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

4-4 Colorful Images. We show three misclassified renditions (left) and their desaturated counterparts (right). These renditions were accepted by human observers, but were rejected by our predictor as having low contrast. Notice that the face of the girls become flat in the desaturated version. We believe color contrast is the main cause of misclassification of photographs shown here. . . . . . . . . . . . . . . . . . . . 37

4-5 High Dynamic Range Image. This high dynamic range image at bright setting is misclassified. There is enough overexposed salient region (the window) to cause our algorithm to reject, while human observer accept this as a proper indoor scene. 39

4-6 Saturation Mask Problem. Overexposing white patch is often expected. However, when the subject of the photograph is mainly white, this heuristic fails, causing false-positive errors . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 39

10

4-7 Artifact of Our Adjustment Model. Our simple adjustment model does not guard against undesirable clipping when the pixel value leaves the supported gamut.

Notice the left part of the building in (b), as well as the window frames throughout the building face. The clipping has caused golden sun reflection to appear as unsightly yellow patches. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 40

5-1 Most Favorable Point Prediction. Our prediction adapts well to the image content, while the average toy predictor returns always the same value, which fails when the image is not already properly exposed. . . . . . . . . . . . . . . . . . . . . . . . . 42

5-2 Smart Preset vs Normal Preset. The top row shows the result of our smart preset, and the bottom row the standard preset, which directly copies the adjustment to the destination image. Our algorithm predicts acceptable adjustments, which the smart preset uses to control the amount of adjustment applied to an image. The resulting images exhibit a high brightness similar to that of the model while remaining aesthetically pleasing, even though dynamic ranges of the source and destination im ages are different. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

43

B-1 Sample Questionaire . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

11

12

List of Tables

4.1 Performance Comparison. As expected, our predictor performed better than the naive average adjustment but not as well as the per-image fit (remember that this latter option does not generalize to unseen photographs, it is only provided as a reference). . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . .

33

4.2 Performance in High Dimensional Adjustment Space. Our algorithm's performance remained the same. Performance of both toy predictors were near chance, which indicates that the effect of added adjustment dimensions dominates human judgment.

Chance predictor is provided for reference, and, in this case, it does best if it always gives positive prediction. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

13

14

Chapter 1

Introduction

Photographic adjustment is a subjective matter: a photograph can be modified with different exposure, color and other adjustments in various ways, depending on the user's intent and preference.

However, performing these adjustments is a difficult task because there are many parameters to adjust. Presets and other completely automatic methods have been proposed [3, 4, 13, 14, 25].

However, when more controls are desired, users must fall back on tedious parameter manipulation.

As the space of parameters grows, the exploration naturally becomes more difficult [16].

This thesis is inspired by the observation that, while many different adjustments may be valid for a given photograph, there are many, more-extreme adjustments that would also never be chosen for normal photo touch-up. We refer to this space of normal adjustments as acceptable. For example, Figure 1-1 shows a photograph where a user may prefer an accented highlight on the hair

(Fig. -1(a)), or a good exposure on the entire face (Fig. 1-1(b)). However, extreme adjustments lead to photographs that are too degraded to be normally usable (Figs. 1-1(c) and 1-1(d)). We call these photographs unacceptable. Of course, precisely defining the notion of acceptable is in itself a challenging task.

Our goal is not to estimate the absolute aesthetics of an adjusted photograph. For this work, acceptability has more to do with recognizable content and proper exposure than it does with

15

(a) (b)

~ \

____

_ /

)

(c)

< : .:-___

Brig h t ness r. .

:;;E::> x x

Figure 1-1: Adjustment Space Exploration. There are many valid ways to adjust an image. Our two-dimensional adjustment space is shown on the left, with the origin (no adjustment) at the crosshairs. Four adjustments are shown in the space with X's: two acceptable, two unacceptable.

The magenta curve shows the boundary between acceptable and unacceptable adjustments, obtained by crowdsourcing: outside the boundary, the images no longer look acceptable. We present a machine learning algorithm to estimate this boundary for new images.

(d) composition and color harmony of the subject. We seek to study how the quality of a natural photograph changes as we explore its renditions across the adjustment space. This contrasts our work with techniques in the aesthetics assessment literature where acceptable rendition is essentially a minimum requirement [ 8 , 9 , 15 , 18 , 19 ].

This thesis describes a method for predicting the acceptable set of tonal adjustments for a given photograph. There are four main contributions of this work.

1. We gather a crowd-sourced dataset of labeled photographs that allow us to analyze the notion of acceptability. This dataset is available online at http : //projects.csail.mit.edu/acceptable adj/ .

2. We find , from this dataset, that in a two-parameter adjustment space , photograph corruption can be categorized into three forms: over-exposure, under-exposure, and low contrast.

16

3. We introduce a learned model that predicts whether a given rendition is acceptable. We use this predictor to define the range of acceptable adjustments by sampling the parameter space and analyzing each sample. We show that this model makes accurate prediction of acceptable renditions, even for the ones adjusted by a more complex model.

4. We demonstrate the benefits of our algorithm with proof-of-concept editing applications that adapt to the predicted ranges.

1.1 Related Work

There exist several approaches to automatic photo adjustment. One is to use machine-learning techniques based on manually built training sets [3,4, 13]. Another option is to directly translate standard photographic guidelines into an automatic algorithm [1 4, 25]. The common point of these approaches is they predict a single valid adjustment. In contrast, we aim for the set of all such adjustments.

Our work is also related to automatic aesthetic assessment, e.g., [8,9, 15, 18, 19]. These methods assign a score to images depending on their visual quality but do not modify them. They include subject content into their consideration, and a strong emphasis is put on image composition. By contrast, we focus on the effect of adjustments on the image renditions. That being said, our approach shares the same strategy of using machine learning to exploit training data, and some aspects of our image descriptor are inspired by those used in these techniques.

Recently, Koyama et al. [16] proposed a user interface for exploring the range of valid adjustments, showing that adapting controls to valid ranges improves user experience and speeds up their exploration process. Their work required crowd-sourcing for each image to define the quality of different adjustments. Our work is complementary, as we show how to define the range of acceptable adjustments for new images without requiring crowd-sourcing.

17

18

Chapter 2

A Crowd-sourced Dataset

In order to address the photographic acceptability problem, we created a dataset of photographs adjusted to various configurations, and crowd-sourced acceptability judgements from human observers. This chapter will address the critical issue of how we define and communicate the concept of "acceptability" to our experiment participants, and will give the full details of the considerations we have taken when we collected our data. We will also present some observations we had about the data that will be crucial to how we predict the acceptability of a photograph.

2.1 What is Acceptable?

"Acceptable" is an ambiguous term. Everyone has their own definition of what an acceptable photograph is. In our case, we study the effect that an adjustment has for a given photograph, rather than comparing the aesthetics of different photographs. Properly adjusted, a beautiful rose should be as "acceptable" as a pile of dead leaves. In our experiment, we specifically instructed our participants to ignore the subject content of photographs, and provided them contrasting examples of professionally adjusted photographs with good and bad compositions in our instruction. The actual instructions, examples, and guidelines we used can be found in the Appendix B.

19

In the course of our data collection, we also found that some extreme adjustments can create non-photorealistic renditions that our experiment participants might find interesting (Figure 2-1).

We collected a small set of data that allowed such renditions to be accepted, but there was not enough agreement among the participants to create a meaningful signal. Thus, in the final version of the survey, we excluded these effects, and instructed our participants to reject such renditions.

Figure 2-1: Extreme adjustment that might be seen as interesting by some users. Because answers were not consistent on those, we guided users to reject them.

Crowd-sourcing subjective judgement has several drawbacks such as the lack of control for viewing conditions. This meant that the acceptability ratings we collected would contain nonnegligible amount of noise regardless of the precision of our instruction. However, people typically view photographs on their computers in an uncontrolled environment. Our experiment is, thus, representative of typical user experience. Furthermore, as pointed out by Harris et al., the quality of crowd-sourced data approaches that of controlled experiments when there are enough data points [11]. While Harris et al. do not provide a threshold for our specific experiment, we ensured that we sampled our adjustment space densely enough to see consistent trends within our data.

Additionally, we allowed each participants to see only one rendition per photograph. This ensures that the ratings for a given photograph came from different people, and the data represents the opinion of the crowd. We did not show a reference photograph to the participants, and they were forced to choose acceptable/unacceptable for each photograph as they saw it.

20

2.2

The Zero Configuration and the Adjustment Model

For this data set, we used photographs from the MIT-Adobe FiveK dataset because it provides a variety of high quality photographs in both edited and raw format [3]. We randomly selected photographs and manually adjusted the selection to ensure a variety of natural scenes and conditions, e.g., day vs. night, indoor vs. outdoor. There were 500 photographs in total.

In this work, we focused on tonal adjustments and sought to minimize any color-related effects.

In particular, we found that using the as-shot white balance and saturation settings can sometimes make a photo unacceptable independently of tonal settings. To avoid this issue, we applied the white balance and saturation settings of one of the expert retouchers of the MIT-Adobe FiveK dataset

(we selected the same expert as Bychkovsky et al. in their study) and kept the other parameters as captured [3]. We used this color-adjusted photo as the zero configuration on top of which we applied tonal adjustments.

We limited our adjustment model only to brightness and contrast adjustments. We chose to perform these adjustments in Chong et al.'s color space because it decouples the chrominance and the luminance channels [7]. Our brightness adjustment roughly corresponds to a multiplication of the linear RGB channels, while the contrast adjustment corresponds to a gamma curve. The exact formula of the adjustment model is given in Appendix A.

Although two-dimensional adjustment space is of limited use, it allows us to sample densely while keeping the size of our data manageable. This is because the number of samples we need grows exponentially with the dimensionality of the adjustment space. Despite the adjustment model's simplicity, brightness and contrast represent the most important tonal adjustments [3]. As we shall see in Chapter 4, the learning from this space applies in higher dimensions.

21

2.3 Data Collection Protocol

With the notion of acceptability and the definition of zero configuration in mind, we describe the full detail of our data collection, and all further considerations we have made to ensure the integrity of our data.

Of the 500 photographs in our dataset, we divided our dataset into five photo sets of 100 photos each. In each trial, photographs in a selected photo set were adjusted randomly. The participants looked at the photographs one by one, and provided a binary label (acceptable/unacceptable). We provided them with an instruction that consisted of text explanation and examples of acceptable and unacceptable photographs. The guideline for what was considered acceptable was presented in the beginning of each trial, and was available throughout. Since our input are natural photographs, we chose to describe acceptable as "natural-looking" in our prompt instead of "acceptable." We also compared the choice of this description, and we found that "natural-looking" yielded the least noise in our data.

The order of photographs presentation was randomized at the beginning of each trial. We included an attention checker once every 10 images. These attention checkers were either professionally adjusted photographs, or extreme adjustments that should have been rejected (e.g. entirely black, binary image). We discarded any trials where the participant failed more than 20% of the attention checkers.

We experimentally found that a 9 brightness units by 2 contrast unit window is the optimal window for most images (see detail on the units in the Appendix A). Additionally, we centered our windows around the setting that was the most similar to a professionally adjusted version of each image.

During our test trials, we collected data on a small number of images with up to 1,200 adjustments each. We then subsampled this data to determine the optimal amount of sampling needed.

We found that ~600 adjustments produced sufficiently consistent shape, and location of the cloud

22

( a )

<.::_ -::::;...___.; Brightness L__J::.

:-

Figure 2-2: Sample unedited photographs with their corresponding data. The green dots represent a c ceptable adjustments , and the blue x ' s represent unacceptable adjustments. The blue crosshair is zero adjustment. Shown boundaries (magenta) are calculated using SVM with radial basis function kernel on the adjustment values. of accepted points. In our final run , there were 26 brightness x 26 contrast stratified adjustments per image. After discarding low quality trial, we had 500 - 600 data points per photographs, and approximately 1,200 participants in total (some participants worked on more than one image set).

2.4 Data Analysis

To gain some insight and guide the design of our algorithm, we analyze the distribution of our data in the brightness x contrast space. We observe a dense region with accepted adjustments in the middle of the sampling window. The density of the accepted points decreases away from the center. Figure 2-2 shows a few photographs from our dataset and their corresponding acceptable regions. Additionally, we observe significant image-to-image variations of the acceptable region with the exposure of the original photograph playing a major role. For instance in Figure 2-2(a) , the region is shifted toward the high-brightness values because the input is under-exposed, while the region in Figure 2-2(b) is centered because the corresponding original photograph is well exposed.

Furthermore, we also observe significant variations in the size of accepted regions.

Inspecting the acceptable regions for many images, we observe that the acceptable region frequently has a triangle-like boundary with curved edges (Fig. 2-2 ). This trend is further confirmed

23

crowd-sourced data (a) over exposed (b) under-exposed (c) low contrast ( d) original

Figure 2-3: Failure cases in tonal adjustments. Each edge of the triangle represents different failure cases: over-exposure (a), under-exposure (b), and low contrast (c). The original photograph at zero adjustment is shown in ( d). with a kernel density estimate of the accepted adjustments (Fig. 2-4 ). We found that the triangular shape indicates three issues (Fig. 2-3 ): over-exposure, under-exposure, and low contrast.

These three cases will inform the design of the machine-learning features that we use to predict whether an adjustment is acceptable. In Section 4.2

, we shall see that these observations also generalize to a higher-dimensional tonal adjustment space.

Brightness ~

Figure 2-4: Kernel density estimate of accepted data points. An arbitrary contour is drawn in magenta to illustrate the triangular shape, which indicates three cases of image degradation: overexposure, underexposure, and low contrast.

24

Chapter 3

Predicting Acceptability

In this chapter, we build upon the previous observations to design our predictor. Formally, given an input image I and an adjustment x, we seek to predict a binary label g E { acceptable, unacceptable }.

We first describe two toy predictors that make their predictions solely on adjustment values. Then, we explain our technique, which makes its prediction based on adjusted photographs and is completely agnostic of the adjustment model. The toy predictors will help characterize our data and will provide useful performance comparisons to our technique.

3.1 Toy Predictors

In order to characterize the variations in our data, we create two toy predictors that only use the adjustment value as their feature: the average-adjustment predictor, and the per-image predictor.

These toy predictors define performance bounds for the machine-learning algorithm that we describe in Section 3.2.

The average-adjustment predictor is trained purely on adjustment value and ignored image dependency completely. This corresponds to a naive form of machine learning where the prediction is always the same and equal to the average of the training set. While a random predictor would

25

be worse, we nonetheless expect any sophisticated predictor to perform better than this simple technique. The per-image predictor, on the other hand, is a collection of classifiers, each of which was trained on one of the images from the dataset. These predictors generalize poorly to unseen photos due to their minimal training set, but perform ideally on their corresponding training image.

Because of this property, they provide an upper bound for the subsequent evaluation, i.e., we do not expect any generic predictor to perform better on a given photo than a predictor trained specifically on that photo. These predictors also help us quantify the irreducible classification error due to the progressive transition between acceptable and unacceptable classes.

We use Support Vector Machines with Radial Basis Function kernel as the algorithm for these toy predictors. Our implementation is based on LibSVM for the per-image predictor, and on

BudgetedSVM with LLSVM approximation scheme for the average-adjustment predictor [6, 10].

We preformed 8-fold cross-validation to choose the slack cost.

3.2

Our Image-Based Predictor

Instead of using adjustment values in the prediction, our machine-learning algorithm learns directly from the adjusted photographs, and is completely agnostic to the adjustment model. This allows us to apply its learning towards more general tonal adjustment models such as those that include highlight and shadow adjustments. We designed our features to capture the three observed failure cases: over-exposure, under-exposure, and low contrast. The features we implemented were:

1. Fraction of Highlight and Shadow Clipping. We calculated this feature in Chong et al.'s colorspace [7]. Highlight clipping is defined as pixels that are beyond 99% of the luminance range, and shadow clipping as pixels that are below 1%.

2. Luminance Histogram. We used 10-bin Chong et al. luminance histogram, and we normalized the histogram so that it sums to one.

26

3. RMS Luminance Contrast. RMS Luminance contrast amounts to calculating standard deviation of the luminance values [23]. Additionally, we computed this feature on a 5-level

Gaussian pyramid of the image to capture contrast at different scales, and also on 3 x 3 grids to capture local contrast.

We calculated this feature in Chong et al.'s space. Note that Chong et al.'s space is logarithmic, but the spaces in which RMS contrast is usually calculated are linear. Instead of taking a difference, our RMS contrast measures the ratio of luminance values in the linear scale.

4. Loss of Gradient. We calculated gradient of the preadjusted image, and used it to detect edge. We marked the pixels that had gradient stronger than a predetermined threshold as edge pixels, and calculated the fraction of those edge pixels that fell below the same predetermined threshold. This feature tends to correlate well with contrast adjustment, which turns out to be an important predictor of acceptability.

The threshold we used should represent just noticeable difference (JND). In CIELAB space, the JND is approximately 2.3 units [20]. We sampled different pairs of luminance values in CIELAB that are 2.3 units apart, and calculated their average distance in Chong et al.'s space [7]. We found the value to be approximately 1.5 units in the Chong et al.'s space.

5. Local Band-Limited Contrast. We followed Peli's definition of band-limited (multiscale) contrast [23]. This contrast is defined in linear luminance space, so we used YUV colorspace to compute the contrast because it is fast to compute [2]. We calculated 9 octaves of spatial frequencies.

For each feature, we also experimented with various kinds of pixel mask to capture what is important in the photographs (face, sky, etc.). For example overexposure of the sky migth be expected, and should be largely excluded from over exposure calculation. The masks that we used are as follows:

27

1. Sky. We used Subr et al.'s segmentation tool to manually mark the sky [24]. Their tool takes scribbles, and propagates the annotation to the whole image. We used their implementation because it does not require pixel-accurate annotations, and it gives accurate segmentation.

2. Face. MIT-Adobe FiveK Dataset has bounding boxes of all faces in their data [3]. We used these boxes as the mask. If there is no face, our mask is identity.

3. Saturation. We calculated saturation in L*a*b* colorspace, by finding the norm in a*b* plane.

4. Saliency. We used Judd et al.'s saliency calculation [12].

5. Gradient. We calculated gradient in the luminance channel using standard sobel filter. The final mask is the L

2 norm of the gradient at each pixel.

We found that computing masks on the original images was brittle because the camera metering may have been wrong. We addressed this by stretching the luminance histogram to the full available range using an affine remapping of the pixel values. We also found that the sky and face masks brought a minimal benefit in terms of measurable accuracy but we added them nonetheless because we found that they improved prediction on photos dominated by the sky or faces, which were important categories.

We experimented with different combinations of the weights and features explained above. We stacked some of our masks together by pixel-wise multiplication. Our best-performing feature lineup was:

1. Highlight Clipping with mask Sky x Gradient x Saturation x Face (Dim

= 1)

2. Highlight Clipping with mask Sky x Saliency x Saturation x Face (Dim = 1)

3. Shadow Clipping with Gradient x Face (Dim = 1)

4. Luminance Histogram with mask Sky x Saturation (Dim = 10)

28

5. Luminance Histogram with mask Gradient x Saturation (Dim = 10)

6. RMS Contrast with Face mask (Dim = 1)

7. RMS Contrast on Gaussian Pyramid (Dim = 5)

8. RMS Contrast on 3 x 3 windows (Dim = 9)

9. Loss of Gradient (Dim = 1)

10. Local Band-Limited Contrast (Dim = 9)

The total dimensionality of our feature space was 48. The features were normalized such that each dimension was zero-mean with a standard deviation of 1. We used MATLAB@ implementation of Logitboost and cross-validated to choose the number of ensembles [21]. We also experimented with linear and RBF SVM, but the Logitboost outperformed both SVMs considerably.

29

30

Chapter 4

Evaluation

As described in the previous chapter, we designed our algorithm to make predictions directly from the adjusted photographs, independently of the adjustment model. In this chapter, we show that our algorithm generalizes to unseen photographs and that it performs well on photographs adjusted by a more sophisticated model.

0.

8.

F

T

(a) input photo

-- Brightness -

(b) our algorithm

-, Brightness --

(c) average-adjustment predictor

Figure 4-1: Our algorithm adapts to the exposure of the input photo (a). It allows it to better represent the space of acceptable adjustments (b) whereas the average-adjustment predictor includes many unacceptable samples in its acceptable range and also misses many acceptable points (c).

31

Unedited Image Image at Failed Adjustment Our Prediction Over Adjustment Space

(a)

Brightness

(b)

Brightness

Figure 4-2: Our Algorithm's Failure Cases The adjustment values are marked with X's in the third column. High dynamic range (a) appeared overexposed, while textured scene (b) were allowed contrast in unusual range, causing misclassifications.

32

4.1 Prediction Result

Predictor

Average Adjustment

Per-Image

Ours

Error (%) Precision

15.6

10.6

14.1

0.68

0.80

0.71

Recall

0.55

0.68

0.62

F-Score

0.61

0.73

0.66

Table 4.1: Performance Comparison. As expected, our predictor performed better than the naive average adjustment but not as well as the per-image fit (remember that this latter option does not generalize to unseen photographs, it is only provided as a reference).

Table 4.1 summarizes our prediction result. Here, we exclude points that are likely to be noise such as those that are on the wrong side of boundary and outside the margin of the per-image

SVM. All metrics are calculated on the remaining data points. After removing noise, we have approximately 23% positive points.

Not surprisingly, the per-image predictor does best in all performance metrics and the averageadjustment predictor does significantly worse. For the latter, its relatively high precision, and relatively low recall suggests that its predictions are generally conservative. Nonetheless, our algorithm performs better than the average predictor. Using the F-score that balances precision and recall, our algorithm is about half way between the baseline and the ideal case.

The performance of the average-adjustment predictor heavily relies on the fact that most input photographs are well exposed. The prediction fails on an under- or over-exposed image (Fig. 4-1).

Our algorithm, on the other hand, is based on directly calculating the image statistics, and it finds the correct location of the acceptable region.

4.2 Going beyond Brightness and Contrast

Because our algorithm is agnostic to the adjustment model, it generalizes beyond the brightnesscontrast adjustment space. To verify this, we collected a small dataset with highlight and shadow

33

adjustments added to the adjustment model. The adjustment range was controlled such that half of the dataset was acceptable. Variations in brightness and contrast adjustments were limited to ensure that we observe mainly the effect of the added dimensions. After discarding low-quality data as we did previously, we had 4,500 data points from 50 unseen photographs, with 58% accepted points.

Predictor

Ours

Average Adjustment

Per-Image

Chance

Error (%)

24.8

39.2

35.8

41.8

Precision

0.75

0.61

0.64

0.58

Recall

0.87

0.96

0.90

1.0

Table 4.2: Performance in High Dimensional Adjustment Space. Our algorithm's performance remained the same. Performance of both toy predictors were near chance, which indicates that the effect of added adjustment dimensions dominates human judgment. Chance predictor is provided for reference, and, in this case, it does best if it always gives positive prediction.

Table 4.2 summarizes the performance of our algorithm on this dataset. Our algorithm does significantly better than the average-adjustment and per-image predictors (both toy predictors ignore additional adjustments and predict based on brightness and contrast only). In fact, the toy predictors' misclassification rates are about the same as chance, and the high recall indicates that they mostly yield positive predictions on this dataset. This shows that the added dimensions have a significant effect over human's judgement of the image quality. We cannot directly compare this experiment with the brightness-contrast only experiment because the sampling rates differ (sampling the 4D space as finely as the brightness-contrast space and crowd-sourcing votes is impractical due to the sheer number of samples that would be required); nonetheless, the precision rating suggests that our algorithm does equally well on this 4D dataset as on the brightness-contrast data.

4.3 Failure Cases

Despite having good test performances, and the ability to generalize to a more complicated adjustment model, there are a few notable cases where our image-based predictor fails. Predicting

34

acceptability, it seems, has a lot to do with semantic understanding of the scene. As most of our features are low- to mid-level, there are many cases where it fails. This section will try to comprehensively list all those cases. The most striking problems we encounter seem to be related to texture and color, which we describe as follows.

Textured Scenes

Textured scenes pose significant challenges in estimating the appropriate contrast levels of the scenes. In particular, human observers accepted textured scenes at lower contrast settings than normal images, causing false-negative errors in the regions where our predictor assumes the contrast is already too low. Figure 4-3 shows examples of such textured scenes.

This problem may be related to a study by McDonald and Tadmor who studied perceived contrast of textured patches [22]. They embedded band-limited textures in the center of natural images, and measured the perceived contrast of those texture patches. In their experiment, they found that the contrast of the embedded texture patch was suppressed, i.e. the patches were perceived as having

lower contrast, which contradicts the observation we had on these textured scenes. However, we observed many textured scenes to be pictures of brownish/grayish soil or wooden objects (Figure 4-

3(b)). Therefore, it is plausible that human observers have different expectations of contrast for certain object, but further study is required for any definitive conclusion.

Bae et al. proposed a measure of texturedness using Fourier analysis on the luminance channel of images [I]. However, their texturedness measure appears to be biased toward darker pixels, and does not differentiate texture and non-texture regions sufficiently well for our application. We did not observe improvement in our predictor's performance when we included Bae et al.'s texturedness into our contrast calculation.

35

Unedited Image Image at Failed Adjustment Our Prediction

(a)

Brightness

(b)

S Brightness

(c )

(C)~

Brightness

Figure 4-3: Textured Scene. First column is images at zero setting (blue cross hair in the plot on the right). The middle column is adjusted by the values marked with X's in the right column. Textured scene were allowed contrast in unusual range, causing misclassifications.

36

(a)

Misclassified Rendition Same Image in Black and White

(b)

(c)

Figure 4-4: Colorful Images. We show three misclassified renditions (left) and their desaturated counterparts (right). These renditions were accepted by human observers, but were rejected by our predictor as having low contrast. Notice that the face of the girls become flat in the desaturated version. We believe color contrast is the main cause of misclassification of photographs shown here.

37

Color Contrast

The second of our failure cases may be color-related (Figure 4-4). We observed that many images with large regions of different colors were accepted at lower contrast settings than our algorithm predicted. Color contrast, it seems, adds to overall perceived contrast of the image, causing false-negatives in the low contrast regions of the affected images.

Furthermore, it is also plausible that the effect we saw is semantic-related and/or hue-specific.

We observed many examples to be images of flowers in front of a green background. At the same time, there are many affected landscape images with blue sky and brownish soil (Figure 4-4(c)).

Arguably, these images may have been affected by texture problem discussed previously. However, we noticed that these images viewed in grayscale appears to have much less contrast than in color.

To the best of our knowledge, there has only been a limited number of studies of the effect of color on perceived contrast of natural images. Color perception is known to be a difficult topic, because there are many factors that affect how humans perceive color [17]. For example, spatial arrangement and context could alter perceived hue. Nonetheless, Calabria and Fairchild suggested the use of mean, variance, and median of image chroma [5]. We implemented these statistics in our contrast features but did not observe improvement on these images.

High Dynamic Range Images

High dynamic range images are images with many salient regions at both ends of the luminance range. They are challenging to our algorithm because human observers are tolerant to overexposed salient regions if there is still other properly exposed salient regions in the image. For instance, in

Figure 4-5, human observers tolerate saturating the window as long as the chair that is the main element of the picture looks good. Our algorithm misses the layering of the scene and predicts such saturation as unacceptable.

38

(a) Original Rendition (b) Bright Rendition

Figure 4-5: High Dynamic Range Image. This high dynamic range image at bright setting is misclassified. There is enough overexposed salient region (the window) to cause our algorithm to reject, while human observer accept this as a proper indoor scene.

Inaccurate Saturation Mask

We included a saturation mask because overexposing colorful patches is often not acceptable.

However, this heuristic sometimes fails. For example, our saturation mask gave low weight to the white ship, which is the main subject of the photograph (Fig. 4-6). Fortunately, we observe mostly minor misclassifications that seem to stem from this problem, so the inclusion of the mask still improves our overall performance.

Unedited Image Image at Failed Adjustment Our Prediction

Brightness - -

Figure 4-6: Saturation Mask Problem. Overexposing white patch is often expected. However, when the subject of the photograph is mainly white, this heuristic fails, causing false-positive errors.

39

Artifact of Our Adjustment Model

Most photo editing tools introduce some non-linearity to preserve saturated pixels. Since our adjustment model is simple, colorful saturation occurs when a pixel value is adjusted beyond the sRGB gamut in Chong's color space [7]. This kind of saturation can easily cause an image to be rejected because saturated regions often appear out of place (Figure 4-7). However, it is difficult to detect because our predictor only sees the adjusted image, whose pixel values has already been clipped. Furthermore, the saturation due to the simplicity of our adjustment model may have degraded the quality of our data by causing an image to be rejected at most of the queried brightness/contrast adjustments.

Figure 4-7: Artifact of Our Adjustment Model. Our simple adjustment model does not guard against undesirable clipping when the pixel value leaves the supported gamut. Notice the left part of the building in (b), as well as the window frames throughout the building face. The clipping has caused golden sun reflection to appear as unsightly yellow patches.

40

Chapter

5

Applications

In this chapter, we demonstrate a few applications that benefit from our predictor.

5.1 Most Favorable Adjustment Prediction

There are many possible schemes to deduce a single most favorable point from a range of acceptable adjustments, e.g., using the adjustment that is the farthest from the boundary. In our work, the predictor also provides us with a score, and we choose the adjustment with highest score as our most favorable point. The average adjustment predictor is independent of the image content, so it always predicts a constant value as its most favorable adjustment. Using its prediction is nearly equivalent to no operation, and it will fail when the input is too different from the average (Fig. 5-1).

Our algorithm respects the image content and yields similar renditions to that of the ideal perimage predictor. Note that our algorithm is trained on crowd-sourced data and as such reflects the consensus of the crowd. An interesting avenue for future work would be to tailor our predictions to a specific user akin to previous work like [3, 13].

41

( a)

Original Image Average Adj Predictor Our Classifier Per-Image Predictor

( b)

(c)

Figure 5-1: Most Favorable Point Prediction. Our prediction adapts well to the image content, while the average toy predictor returns always the same value, which fails when the image is not already properly exposed.

5.2 Smart Preset

Presets are a simple method for achieving consistent looks on an image collection. However , blindly copying and pasting adjustment values to all images is bound to fail because each image has a different dynamic range, and camera metering is not perfect. Our smart preset clamps the adjustment value to the predicted boundary by shrinking it towards the most favorable adjustment found earlier.

Figure 5-2 illustrates a case where the smart preset is effective. The sky in the original image is properly exposed, but the photographer wanted to reveal the building in the bottom of the picture. Since the building is dark, direct application of the adjustment value to other images causes overexposure in almost all cases . Our smart preset limits the extent to which the adjustment is allowed to alter an image, which generates better visual results.

42

Smart Preset

Normal Preset

Figure 5-2: Smart Preset vs Normal Preset. The top row shows the result of our smart preset, and the bottom row the standard preset, which directly copies the adjustment to the destination image.

Our algorithm predicts acceptable adjustments, which the smart preset uses to control the amount of adjustment applied to an image. The resulting images exhibit a high brightness similar to that of the model while remaining aesthetically pleasing, even though dynamic ranges of the source and destination images are different.

5.3

Adjustment Space Exploration Assistance

There are many ways we can use the prediction to assist users in exploring the adjustment space.

Koyama et al. describe an adjustment interface where each sliders is color-coded to indicate the direction that would lead to a more desirable result [16]. The crowd-sourced underlying "goodness" function also allows them to make a better adjustment randomization by biasing towards adjustment with higher scores. However, for each unseen image, the "goodness" function needs to be crowdsourced, which takes time and money. Our work complements theirs by providing the required

"goodness" score on unseen images.

43

44

Chapter 6

Conclusion

We collected and studied a dataset of acceptable adjustments over brightness and contrast adjustment.

To characterize our dataset, we describe two adjustment value-based toy predictors: the averageadjustment and per-image predictors. We find a significant performance gap between these two toy predictors, which suggests that image-dependent analysis is needed.

Our dataset shows that unacceptable renditions can be explained by over-exposure, underexposure, and low contrast. We design a machine-learning feature based on this observation, and show that it performs significantly better than the average toy predictor and that it generalizes to higher-dimensional tonal adjustment models.

Through the range of acceptable adjustment predictions, we demonstrated three proof-of-concept applications that facilitate exploration of adjustment space. We hope that these applications will create better user experience with complex photographic adjustments, allowing users to work faster, while keeping the quality of their photographs high. Our dataset is available online at http://projects.csai1.mit.edu/acceptable-adj/.

45

46

Appendix A

Adjustment Model

It is known that many photo editing tool such as Adobe Photoshop Lightroom and Aperture apply complicated adjustment model to achieve optimal photographic look. In our case, we try to keep thing as simple as possible, and we only allow exposure, contrast, and saturation adjustment.

Highlight and shadow adjustments were added only for later dataset. Our adjustment model was based on perceptually uniform color space proposed by Chong et al. [7]. Many of our operations are linear in this color space. Next section describe the color space and a few modification we made to suit our purpose.

A.1 Color Space Transformation

The color space proposed by Chong et al. has an advantage over Lab in that it completely separate chrominance from luminance channel. Multiplying (R, G, B) value by a constant (exposure adjustment) won't affect the chrominance channel. As a quick overview, Chong et al.'s transformation is as follow (we will adopt (Lt, at, bt) notation for Chong's luminance and two chrominance channels

47

respectively):

Lt at bt

A log(B

R

G

B

(A.1) where A and B are 3 x 3 matrices specified in [7], and log(.) represents elemen-wise natural logarithm. We add an additional rotation matrix in front of A provided by Chong et al., so that a gray scale values (R = G = B) maps along the first axis, and the two chrominance channels are approximately blue-yellow and margenta-green axes. Thus our final matrices are:

9.2152 0.6479 3.6904

A= 19.4876 -7.4255 -12.0623

17.8195 -23.3507 5.5316

(A.2)

B

0.4505 0.5335 0.0672

0.1627 0.6689 0.0574 (A.3)

0.0474 0.1183 0.9585

Here we assumed (R, G, B) E [0, 1]

3 values are in linear (not gamma-encoded) sRGB color space.

Because the transformation include logarithm, a black pixel will map to -oc. This poses problem when we need to measure perceptual distance between two dark pixels. We address this problem by assuming that R, G, and B value that are below a certain fraction of maximum value is noise, and we clamp them to that value before multiplying by B. We consider trade off between dynamic range and plausibility of perceptual distance in dark region, and by trial and error, we uses

0.004 as the threshold.

48

A.2 Our Adjustment Model

Our adjustment model is as followed:

1. Exposure adjustment (E) is constant multiplication of (R, G, B) values, which translate to addition to Lt. We rescale the exposure value so that exposure adjustment E is equavalent to

RGB multiplication by

2

E

2. contrast adjustment (C) adjusts spread of luminance value around a midpoint (M), which corresponds to 18% gray value (R = G = B = 0.18). Again we rescale the contrast adjustment so that adjustment C is equivalent to multiplying Lt by 2c.

3. saturation adjustment (S) is multiplicative factor to chrominance channel. We use second degree polynomial to map saturation adjustment value so that -1 adjustment maps to 0 (no saturation), 0 maps to 1, and +1 maps to twice as saturated.

4. highlight and shadow adjustment (HI, and SH) is applied after all the adjustments above.

We use a fixed constant 0 = 5 luminance units to define overlapping luminance regions between highlight and shadow adjustment. See equation A.7 for detail.

In equation form (after all rescaling), our adjustment is as followed:

Lt = Cx(Lt-M+E)+ M at = S x a t bt = S x bt

(A.4)

(A.5)

(A.6)

For the later experiment, there is an extra step for highlight and shadow adjustment:

49

Lt, = Lt + HI * (Lt - (M - 0))+

+ SH * ((M + O) - Lt)+ where (-)+ represents standard ramp function.

(A.7)

50

Appendix B

Guideline For Participants

Instruction

"

You can navigate back and forth to correct your mistake with previous and next buttons

" Once youve reached the end, click submit to submit and go to the next section

* You can click Show/Hide Guideline at anytime.

" Image may take a few seconds to load. If it doesn't load, try navigating away, and back again

" Please answer the following question: o

Is the current image NATURAL-LOOKING?

Ms NO

<<Prevwus

Image = ejin

Submit

Image loaded

Image 3 out of 10

Section 1 out of 3

Guideline

Figure B-1: Sample Questionaire

The following is the guideline we provided to our participants in the beginning and was available throughout. The sample page that our participants saw is shown in Figure B- 1.

This HIT will ask you to judge if the images shown are NATURAL-LOOKING. We've

51

provided some guidelines and examples below. Please use your best judgement.

Some (non-exhaustive) guidelines to consider when deciding:

1. NATURAL-LOOKING images are the ones that look like something you might have seen with your own eyes, OR taken with a camera with reasonable camera settings. Note that

natural-looking images need NOT be images of nature. It could be man-made objects as long as it looks like something that you would see with naked eyes.

NATURAL-LOOKING: it could be man- NATURAL-LOOKING: it could be namade ture

2. Decide whether an image is NATURAL-LOOKING based on the lighting/colors/exposure,

NOT the image content. An image might be acceptable even if the subject matter isn't interesting. The image might be aesthetically pleasing, but it need not be. For example, a picture of a friend or a favorite location does not need to be a beautiful picture.

3. Images that are underexposed (too dark), overexposed (too light), or have unappealing contrast are NOT NATURAL-LOOKING.

4. Images that are NOT NATURAL-LOOKING need not be ugly, but you would be able to tell that it has been modified from the originals

52

An image of some random leaves, prop- This sunset image is too dark.

It is erly taken, thus NATURAL-LOOKING. not what you would normally see with

This might be what you saw in your neigh- your eyes, and thus NOT NATURALbor's backyard LOOKING.

53

NOT NATURAL-LOOKING: too NOT NATURAL-LOOKING: too dark bright

NOT NATURAL-LOOKING: too much NOT NATURAL-LOOKING: unappealcontrast ing color

54

'A0

This picture of a stop sign is clearly modified. Thus, even though the image presents a somewhat artistic rendition, it is NOT

NATURAL-LOOKING.

This picture has also been heavily modified. It looks almost cartoon-like. It is thus

NOT NATURAL-LOOKING.

55

56

Bibliography

[1] S. Bae, S. Paris, and F. Durand. Two-scale tone management for photographic look. In ACM

Transactions on Graphics (TOG), volume 25, pages 637-645. ACM, 2006. 35

[2] G. M. R. B. Black. Yuv color space. Communications Engineering Desk Reference, page 469,

2009. 27

[3] V. Bychkovsky, S. Paris, E. Chan, and F. Durand. Learning photographic global tonal adjustment with a database of input/output image pairs. In Computer Vision and Pattern

Recognition (CVPR), 2011 IEEE Conference on, pages 97-104. IEEE, 2011. 15, 17, 21, 28,

41

[4] J. C. Caicedo, A. Kapoor, and S. B. Kang. Collaborative personalization of image enhancement.

In Computer Vision and Pattern Recognition (CVPR), 2011 IEEE Conference on, pages 249-

256. IEEE, 2011. 15, 17

[5] A. J. Calabria and M. D. Fairchild. Perceived image contrast and observer preference ii.

empirical modeling of perceived image contrast and observer preference data. Journal of

Imaging Science and Technology, 47(6):494-508, 2003. 38

[6] C.-C. Chang and C.-J. Lin. LIBSVM: A library for support vector machines. ACM Transactions

on Intelligent Systems and Technology, 2:27:1-27:27, 2011. Software available at ht t p:

//www.csie .ntu.edu.tw/-cjlin/libsvm. 26

[7] H. Y. Chong, S. J. Gortler, and T. Zickler. A perception-based color space for illuminationinvariant image processing. In ACM Transactions on Graphics (TOG), volume 27, page 61.

ACM, 2008. 21, 26, 27, 40, 47, 48

[8] R. Datta, D. Joshi, J. Li, and J. Z. Wang. Studying aesthetics in photographic images using a computational approach. In Computer Vision-ECCV 2006, pages 288-301. Springer, 2006.

16, 17

[9] R. Datta, J. Li, and J. Z. Wang. Algorithmic inferencing of aesthetics and emotion in natural images: An exposition. In Image Processing, 2008. ICIP 2008. 15th IEEE International

Conference on, pages 105-108. IEEE, 2008. 16, 17

[10] N. Djuric, L. Lan, S. Vucetic, and Z. Wang. Budgetedsvm: A toolbox for scalable svm approximations. Journal of Machine Learning Research, 14:3813-3817, 2013. 26

[11] M. D. Harris, G. D. Finlayson, J. Tauber, and S. Farnand. Web-based image preference.

Journal of Imaging Science and Technology, 57(2):20502-1, 2013. 20

57

[12] T. Judd, K. Ehinger, F. Durand, and A. Torralba. Learning to predict where humans look. In

Computer Vision, 2009 IEEE 12th international conference on, pages 2106-2113. IEEE, 2009.

28

[13] S. B. Kang, A. Kapoor, and D. Lischinski. Personalization of image enhancement. In Computer

Vision and Pattern Recognition (CVPR), 2010 IEEE Conference on, pages 1799-1806. IEEE,

2010. 15, 17, 41

[14] L. Kaufman, D. Lischinski, and M. Werman. Content-aware automatic photo enhancement. In

Computer Graphics Forum, volume 31, pages 2528-2540. Wiley Online Library, 2012. 15, 17

[15] Y. Ke, X. Tang, and F. Jing. The design of high-level features for photo quality assessment.

In Computer Vision and Pattern Recognition, 2006 IEEE Computer Society Conference on, volume 1, pages 419-426. IEEE, 2006. 16, 17

[16] Y. Koyama, D. Sakamoto, and T. Igarashi. Crowd-powered parameter analysis for visual design exploration. In Proceedings of the 27th annual ACM symposium on User interface

software and technology, pages 65-74. ACM, 2014. 15, 17, 43

[17] R. B. Lotto and D. Purves. The empirical basis of color perception. Consciousness and

Cognition, 11(4):609-629, 2002. 38

[18] W. Luo, X. Wang, and X. Tang. Content-based photo quality assessment. In Computer Vision

(ICCV), 2011 IEEE International Conference on, pages 2206-2213. IEEE, 2011. 16, 17

[19] Y. Luo and X. Tang. Photo and video quality evaluation: Focusing on the subject. In Computer

Vision-ECCV 2008, pages 386-399. Springer, 2008. 16, 17

[20] M. Mahy, L. Eycken, and A. Oosterlinck. Evaluation of uniform color spaces developed after the adoption of cielab and cieluv. Color Research & Application, 19(2):105-121, 1994. 27

[21] MATLAB. Statistics Toolbox Release 2012a. The MathWorks Inc., Natick, Massachusetts,

2012. 29

[22] J. S. McDonald and Y. Tadmor. The perceived contrast of texture patches embedded in natural images. Vision research, 46(19):3098-3104, 2006. 35

[23] E. Peli. Contrast in complex images. JOSA A, 7(10):2032-2040, 1990. 27

[24] K. Subr, S. Paris, C. Soler, and J. Kautz. Accurate binary image selection from inaccurate user input. In Computer Graphics Forum, volume 32, pages 41-50. Wiley Online Library, 2013. 28

[25] L. Yuan and J. Sun. Automatic exposure correction of consumer photographs. In Computer

Vision-ECCV 2012, pages 771-785. Springer, 2012. 15, 17

58