Fisher Information in a Statistic Stat 543 Spring 2005

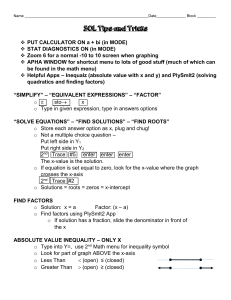


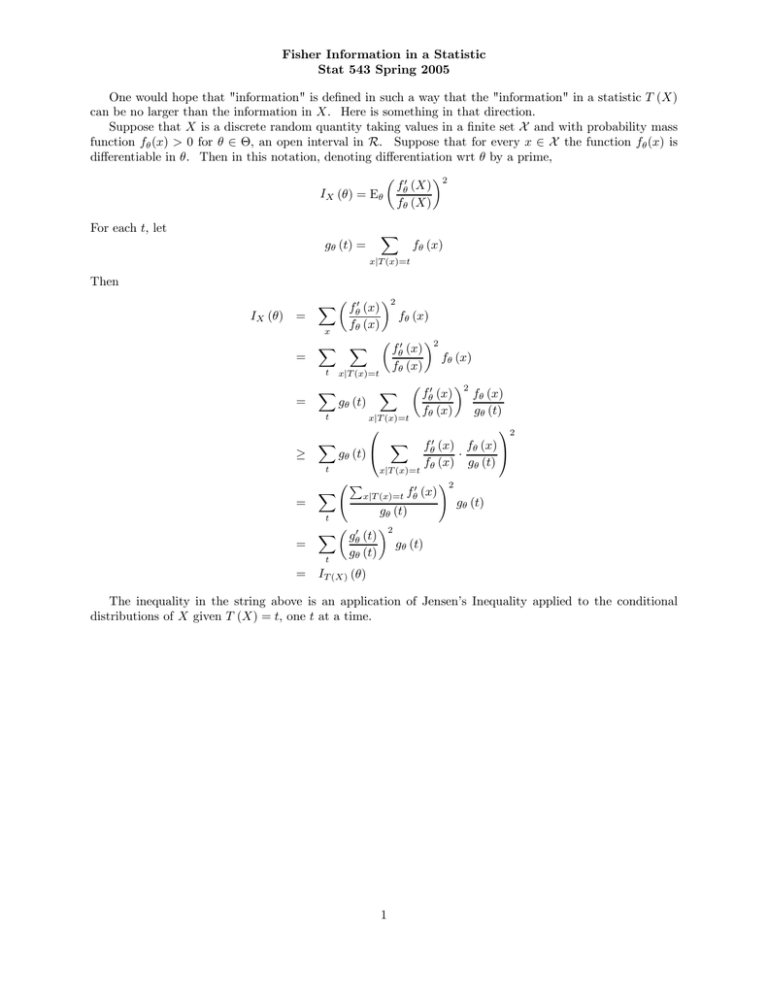

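One would hope that "information" is defined in such a way that the "information" in a statistic $T(X)$ can be no larger than the information in $X$. Here is something in that direction.

Suppose that $X$ is a discrete random quantity taking values in a finite set $\mathcal{X}$ and with probability mass function $f_\theta(x) > 0$ for $\theta \in \Theta$, an open interval in $\mathbb{R}$. Suppose that for every $x \in \mathcal{X}$ the function $f_\theta(x)$ is differentiable in $\theta$. Then in this notation, denoting differentiation with respect to $\theta$ by a prime,

$$I_X(\theta) = E_\theta \left( \frac{f_\theta'(X)}{f_\theta(X)} \right)^2$$

For each $t$, let

$$g_\theta(t) = \sum_{x \mid T(x)=t} f_\theta(x)$$

Then

$$
\begin{aligned}
I_X(\theta) &= \sum_{x} \left( \frac{f_\theta'(x)}{f_\theta(x)} \right)^2 f_\theta(x) \\
&= \sum_{t} \sum_{x \mid T(x)=t} \left( \frac{f_\theta'(x)}{f_\theta(x)} \right)^2 f_\theta(x) \\
&= \sum_{t} g_\theta(t) \sum_{x \mid T(x)=t} \left( \frac{f_\theta'(x)}{f_\theta(x)} \right)^2 \frac{f_\theta(x)}{g_\theta(t)} \\
&\geq \sum_{t} g_\theta(t) \left( \sum_{x \mid T(x)=t} \frac{f_\theta'(x)}{f_\theta(x)} \cdot \frac{f_\theta(x)}{g_\theta(t)} \right)^2 \\
&= \sum_{t} \left( \frac{\sum_{x \mid T(x)=t} f_\theta'(x)}{g_\theta(t)} \right)^2 g_\theta(t) \\
&= \sum_{t} \left( \frac{g_\theta'(t)}{g_\theta(t)} \right)^2 g_\theta(t) \\
&= I_{T(X)}(\theta)
\end{aligned}
$$

The inequality in the string above is an application of Jensen's Inequality applied to the conditional distributions of $X$ given $T(X) = t$, one $t$ at a time. The next-to-last equality uses $g_\theta'(t) = \sum_{x \mid T(x)=t} f_\theta'(x)$, which holds because the sum is finite.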
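As a numerical sanity check of the inequality $I_{T(X)}(\theta) \le I_X(\theta)$, here is a minimal Python sketch. The particular model is an assumption chosen for illustration and does not appear in the handout: $X \sim \text{Binomial}(3, \theta)$ with $\theta = 0.3$, and the statistic $T(x) = \min(x, 1)$, which only records whether $X$ is zero. The helper names (`binom_pmf`, `binom_pmf_prime`, `push_forward`, `fisher_info`) are likewise hypothetical.

```python
from collections import defaultdict
from math import comb


def binom_pmf(x, n, theta):
    """f_theta(x) for the assumed Binomial(n, theta) model."""
    return comb(n, x) * theta**x * (1 - theta) ** (n - x)


def binom_pmf_prime(x, n, theta):
    """Derivative of f_theta(x) in theta, written as f times the score."""
    return binom_pmf(x, n, theta) * (x / theta - (n - x) / (1 - theta))


def push_forward(values, T):
    """Sum a function of x over each set {x : T(x) = t}, giving a function of t."""
    out = defaultdict(float)
    for x, v in values.items():
        out[T(x)] += v
    return dict(out)


def fisher_info(pmf, pmf_prime):
    """Sum over the support of (f'/f)^2 * f, i.e. f'^2 / f."""
    return sum(pmf_prime[x] ** 2 / pmf[x] for x in pmf)


n, theta = 3, 0.3                      # illustrative values, not from the handout
support = range(n + 1)
f = {x: binom_pmf(x, n, theta) for x in support}
f_prime = {x: binom_pmf_prime(x, n, theta) for x in support}


def T(x):
    """Assumed statistic: 0 if x == 0, else 1."""
    return min(x, 1)


g = push_forward(f, T)                 # g_theta(t)
g_prime = push_forward(f_prime, T)     # g'_theta(t), a finite sum of f'_theta(x)

info_X = fisher_info(f, f_prime)
info_T = fisher_info(g, g_prime)
print(f"I_X = {info_X:.6f}, I_T = {info_T:.6f}")
```

For this binomial model, `info_X` should agree with the closed form $n / (\theta(1-\theta))$ up to floating-point error, and `info_T` should come out strictly smaller because $T$ discards the distinction between $x = 1, 2, 3$. Replacing `T` with a one-to-one function should give equality.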