18-742 Spring 2011 Parallel Computer Architecture Lecture 9: More Asymmetry Prof. Onur Mutlu


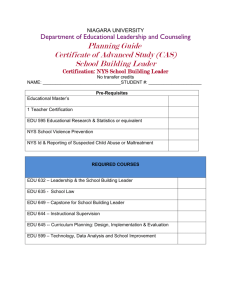

Carnegie Mellon University

Reviews
• Due Wednesday (Feb 9): Rajwar and Goodman, “Speculative Lock Elision: Enabling Highly Concurrent Multithreaded Execution,” MICRO 2001.
• Due Friday (Feb 11): Herlihy and Moss, “Transactional Memory: Architectural Support for Lock-Free Data Structures,” ISCA 1993.

Last Lecture
• Finish multi-core evolution (IBM perspective)
• Asymmetric multi-core
  • Accelerating serial bottlenecks
  • How to achieve asymmetry?
  • Benefits and disadvantages
  • Static versus dynamic
  • Boosting frequency
  • Asymmetry as EPI throttling
  • Design tradeoffs in asymmetric multi-core

Review: How to Achieve Asymmetry
• Static
  • Type and power of cores fixed at design time
  • Two approaches to design “faster cores”:
    • High frequency
    • Build a more complex, powerful core with entirely different uarch
  • Is static asymmetry natural? (chip-wide variations in frequency)
• Dynamic
  • Type and power of cores change dynamically
  • Two approaches to dynamically create “faster cores”:
    • Boost frequency dynamically (limited power budget)
    • Combine small cores to enable a more complex, powerful core
    • Is there a third, fourth, fifth approach?

Review: Possible EPI Throttling Techniques
• Grochowski et al., “Best of both Latency and Throughput,” ICCD 2004.

Review: Design Tradeoffs in ACMP (I)
• Hardware Design Effort vs. Programmer Effort
  - ACMP requires more design effort
  + Performance becomes less dependent on length of the serial part
  + Can reduce programmer effort: serial portions are not as bad for performance with ACMP
• Migration Overhead vs. Accelerated Serial Bottleneck
  + Performance gain from faster execution of serial portion
  - Performance loss when state is migrated
  - Serial portion incurs cache misses when it needs data generated by the parallel portion
  - Parallel portion incurs cache misses when it needs data generated by the serial portion

Review: Design Tradeoffs in ACMP (II)
• Fewer threads vs. accelerated serial bottleneck
  + Performance gain from accelerated serial portion
  - Performance loss due to unavailability of L threads in parallel portion
    • This need not be the case: the large core can implement multithreading to improve parallel throughput
  • As the number of cores (threads) on chip increases, fractional loss in parallel performance decreases

Uses of Asymmetry
• So far: Improvement in serial performance (sequential bottleneck)
• What else can we do with asymmetry?
  • Energy reduction?
  • Energy/performance tradeoff?
  • Improvement in parallel portion?

Use of Asymmetry for Energy Efficiency
• Kumar et al., “Single-ISA Heterogeneous Multi-Core Architectures: The Potential for Processor Power Reduction,” MICRO 2003.
• Idea:
  • Implement multiple types of cores on chip
  • Monitor characteristics of the running thread (e.g., sample energy/perf on each core periodically)
  • Dynamically pick the core that provides the best energy/performance tradeoff for a given phase
  • “Best core” depends on optimization metric

Use of Asymmetry for Energy Efficiency
[Figure]

Use of Asymmetry for Energy Efficiency
• Advantages
  + More flexibility in energy-performance tradeoff
  + Can steer computation to the core that is best suited for it (in terms of energy)
• Disadvantages/issues
  - Incorrect predictions or sampling the wrong core → reduced performance or increased energy
  - Overhead of core switching
  - Disadvantages of asymmetric CMP (e.g., design multiple cores)
  - Need phase monitoring and matching algorithms
    • What characteristics should be monitored?
    • Once characteristics known, how do you pick the core?
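The sample-and-pick idea from the energy-efficiency slides can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the mechanism from Kumar et al.: the `Sample` record, the `pick_core` function, the three metrics, and every number below are hypothetical, and the sketch ignores the core-switching overhead listed among the disadvantages.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One hypothetical measurement of the running thread on one core type."""
    core: str
    energy_j: float  # energy used during the sampling interval (joules)
    time_s: float    # time taken for the sampled work (seconds)


# Candidate optimization metrics; lower is better for all of them.
METRICS = {
    "energy": lambda s: s.energy_j,
    "delay": lambda s: s.time_s,
    "energy_delay": lambda s: s.energy_j * s.time_s,
}


def pick_core(samples, metric="energy_delay"):
    """Return the name of the core with the lowest cost under the chosen metric."""
    cost = METRICS[metric]
    return min(samples, key=cost).core


# Made-up samples for a single program phase.
samples = [
    Sample("big", energy_j=4.0, time_s=1.0),
    Sample("medium", energy_j=2.0, time_s=1.4),
    Sample("little", energy_j=1.0, time_s=3.0),
]

print(pick_core(samples, "energy"))        # little
print(pick_core(samples, "delay"))         # big
print(pick_core(samples, "energy_delay"))  # medium
```

The three calls return three different cores for the same samples, which is the point of the “best core depends on optimization metric” bullet.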
Use of ACMP to Improve Parallel Portion Performance
• Mutual Exclusion:
  • Threads are not allowed to update shared data concurrently
  • Accesses to shared data are encapsulated inside critical sections
  • Only one thread can execute a critical section at a given time
• Idea: Ship critical sections to a large core
• Suleman et al., “Accelerating Critical Section Execution with Asymmetric Multi-Core Architectures,” ASPLOS 2009, IEEE Micro Top Picks 2010.

A Critical Section
[Figure]

Example of Critical Section from MySQL
[Figure: list of open tables A-E, marking which of Threads 0-3 has each table open]
• Thread 2: OpenTables(D, E)
• Thread 3: CloseAllTables()

Example of Critical Section from MySQL
[Figure: tables A-E, each annotated with the IDs of the threads (0-3) that have it open]

Example of Critical Section from MySQL
End of Transaction:
  LOCK_openAcquire()
  foreach (table opened by thread)
    if (table.temporary)
      table.close()
  LOCK_openRelease()

Contention for Critical Sections
[Figure: execution timelines of Threads 1-4 over t1-t7, with segments marked Critical Section, Parallel, and Idle; a second timeline shows the same threads when critical sections execute 2x faster]
• Accelerating critical sections not only helps the thread executing the critical sections, but also the waiting threads

Contention for Critical Sections
• Contention for critical sections leads to serial execution (serialization) of threads in the parallel program portion
• Contention is likely to increase with large critical sections

Impact of Critical Sections on Scalability
• Contention for critical sections increases with the number of threads and limits scalability
  LOCK_openAcquire()
  foreach (table locked by thread)
    table.lockrelease()
    table.filerelease()
    if (table.temporary)
      table.close()
  LOCK_openRelease()
[Figure: Speedup (0-8) vs. Chip Area (cores, 0-32) for MySQL (oltp-1)]

Conventional ACMP
  EnterCS()
  PriorityQ.insert(…)
  LeaveCS()
1. P2 encounters a Critical Section
2. Sends a request for the lock
3. Acquires the lock
4. Executes Critical Section
5. Releases the lock
[Figure: cores P1-P4 on the on-chip interconnect, with the core executing the critical section highlighted]

Accelerated Critical Sections (ACS)
[Figure: ACMP approach, one large core with a Critical Section Request Buffer (CSRB) plus 12 Niagara-like small cores]
• Accelerate Amdahl’s serial part and critical sections using the large core
• Suleman et al., “Accelerating Critical Section Execution with Asymmetric Multi-Core Architectures,” ASPLOS 2009, IEEE Micro Top Picks 2010.

Accelerated Critical Sections (ACS)
  EnterCS()
  PriorityQ.insert(…)
  LeaveCS()
1. P2 encounters a Critical Section
2. P2 sends CSCALL Request to CSRB
3. P1 executes Critical Section
4. P1 sends CSDONE signal
[Figure: P1 with the Critical Section Request Buffer (CSRB) and P2-P4 on the on-chip interconnect, with the core executing the critical section highlighted]

Accelerated Critical Sections (ACS)
Original code on a small core:
  A = compute()
  LOCK X
  result = CS(A)
  UNLOCK X
  print result
With ACS, small core:
  A = compute()
  PUSH A
  CSCALL X, Target PC
  … (waits for the CSDONE Response)
  POP result
  print result
CSCALL Request: Send X, TPC, STACK_PTR, CORE_ID (the request waits in the Critical Section Request Buffer (CSRB))
With ACS, large core:
  TPC: Acquire X
  POP A
  result = CS(A)
  PUSH result
  Release X
  CSRET X
CSDONE Response: sent back to the small core

ACS Architecture Overview
• ISA extensions
  • CSCALL LOCK_ADDR, TARGET_PC
  • CSRET LOCK_ADDR
• Compiler/Library inserts CSCALL/CSRET
• On a CSCALL, the small core:
  • Sends a CSCALL request to the large core
    • Arguments: Lock address, Target PC, Stack Pointer, Core ID
  • Stalls and waits for CSDONE
• Large Core
  • Critical Section Request Buffer (CSRB)
  • Executes the critical section and sends CSDONE to the requesting core

False Serialization
• ACS can serialize independent critical sections
• Selective Acceleration of Critical Sections (SEL)
  • Saturating counters to track false serialization
[Figure: the CSRB holding CSCALL (A), CSCALL (A), and CSCALL (B) requests from small cores on their way to the large core, with per-lock saturating counters for A and B]

ACS Performance Tradeoffs
• Fewer threads vs. accelerated critical sections
  • Accelerating critical sections offsets loss in throughput
  • As the number of cores (threads) on chip increases:
    • Fractional loss in parallel performance decreases
    • Increased contention for critical sections makes acceleration more beneficial
• Overhead of CSCALL/CSDONE vs. better lock locality
  • ACS avoids “ping-ponging” of locks among caches by keeping them at the large core
• More cache misses for private data vs. fewer misses for shared data

Cache misses for private data
  PriorityHeap.insert(NewSubProblems)
• Private Data: NewSubProblems
• Shared Data: The priority heap
• Puzzle Benchmark

ACS Performance Tradeoffs
• More cache misses for private data vs. fewer misses for shared data
  • Cache misses reduce if shared data > private data

ACS Comparison Points
• SCMP
  • All small cores
  • Conventional locking
• ACMP
  • One large core (area-equal 4 small cores)
  • Conventional locking
• ACS
  • ACMP with a CSRB
  • Accelerates Critical Sections
[Figure: chip layouts for SCMP (Niagara-like small cores only) and for ACMP and ACS (one large core plus Niagara-like small cores)]

ACS Simulation Parameters
• Workloads
  • 12 critical section intensive applications from various domains
  • 7 use coarse-grain locks and 5 use fine-grain locks
• Simulation parameters:
  • x86 cycle accurate processor simulator
  • Large core: Similar to Pentium-M with 2-way SMT. 2GHz, out-of-order, 128-entry ROB, 4-wide issue, 12-stage
  • Small core: Similar to Pentium 1, 2GHz, in-order, 2-wide issue, 5-stage
  • Private 32 KB L1, private 256KB L2, 8MB shared L3
  • On-chip interconnect: Bi-directional ring

Workloads with Coarse-Grain Locks
• Equal-area comparison
• Number of threads = Best threads
• Chip Area = 16 cores: SCMP = 16 small cores; ACMP/ACS = 1 large and 12 small cores
• Chip Area = 32 small cores: SCMP = 32 small cores; ACMP/ACS = 1 large and 28 small cores
[Figure: two bar charts of Exec. Time Norm. to ACMP (axis 0-130) for SCMP and ACS, one per chip area; off-scale bars are labeled 210 and 150]

Workloads with Fine-Grain Locks
• Equal-area comparison
• Number of threads = Best threads
• Chip Area = 16 cores: SCMP = 16 small cores; ACMP/ACS = 1 large and 12 small cores
• Chip Area = 32 small cores: SCMP = 32 small cores; ACMP/ACS = 1 large and 28 small cores
[Figure: two bar charts of Exec. Time Norm. to ACMP (axis 0-130) for SCMP and ACS, one per chip area]

Equal-Area Comparisons
• Number of threads = No. of cores
[Figure: Speedup over a small core vs. Chip Area (small cores, 0-32) for SCMP, ACMP, and ACS; panels (a) ep, (b) is, (c) pagemine, (d) puzzle, (e) qsort, (f) tsp, (g) sqlite, (h) iplookup, (i) oltp-1, (j) oltp-2, (k) specjbb, (l) webcache]

ACS on Symmetric CMP
• Majority of benefit is from large core
[Figure: Exec. Time Norm. to SCMP (axis 0-130) for ACS and symmACS]

Alternatives to ACS
• Transactional memory (Herlihy+)
  • ACS does not require code modification
• Transactional Lock Removal (Rajwar+), Speculative Synchronization (Martinez+), Speculative Lock Elision (Rajwar+)
  • Hide critical section latency by increasing concurrency; ACS reduces latency of each critical section
  • Overlap execution of critical sections with no data conflicts; ACS accelerates ALL critical sections
  • Do not improve locality of shared data; ACS improves locality of shared data
  • ACS outperforms TLR (Rajwar+) by 18% (details in ASPLOS 2009 paper)
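To make the contention argument from this lecture concrete (accelerating a critical section also shortens the wait of every thread queued behind it), here is a minimal timing sketch in Python. It is a toy model under stated assumptions, not the simulator used in the ASPLOS 2009 paper: the `simulate` function and its parameters are hypothetical, the lock is granted in arrival order, every thread repeats the same fixed-length parallel segment followed by one critical section, and migration and CSCALL/CSDONE overheads are ignored.

```python
import heapq


def simulate(num_threads, iterations, parallel_time, cs_time, cs_speedup=1.0):
    """Return the finish time of the last thread.

    Each thread repeats: parallel work, then one critical section guarded by
    a single lock. cs_speedup models running the critical section on a
    faster core (an assumption of this sketch).
    """
    cs = cs_time / cs_speedup
    # Heap entries: (time the thread requests the lock, thread id, iterations left).
    requests = [(parallel_time, tid, iterations) for tid in range(num_threads)]
    heapq.heapify(requests)
    lock_free_at = 0.0
    finish = 0.0
    while requests:
        arrival, tid, remaining = heapq.heappop(requests)
        start = max(arrival, lock_free_at)  # wait if another thread holds the lock
        lock_free_at = start + cs
        remaining -= 1
        if remaining:
            heapq.heappush(requests, (lock_free_at + parallel_time, tid, remaining))
        else:
            finish = max(finish, lock_free_at)
    return finish


# Four threads reach the lock together after 10 time units of parallel work.
print(simulate(4, 1, 10, 2))                # 18.0: critical sections run back to back
print(simulate(4, 1, 10, 2, cs_speedup=2))  # 14.0: every waiting thread finishes earlier
```

In this toy setting, halving the critical-section time saves 4 time units overall even though each individual critical section shrinks by only 1, because the saving accumulates along the queue of waiting threads.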