

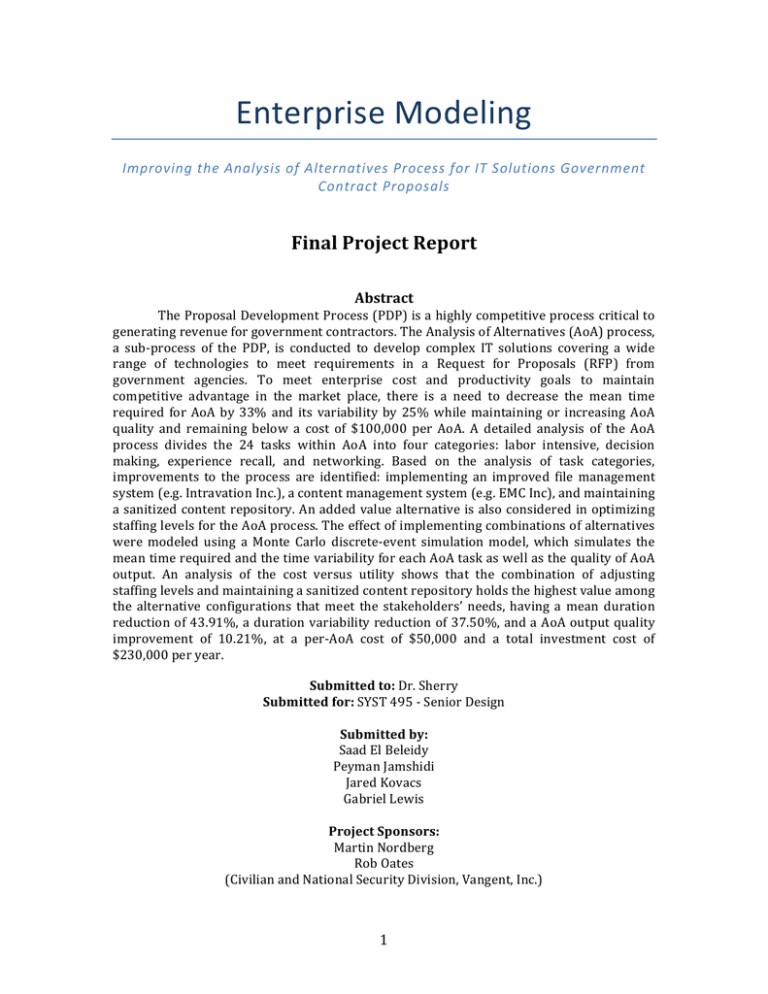

Enterprise Modeling
Improving the Analysis of Alternatives Process for IT Solutions Government Contract Proposals
Final Project Report

Abstract
The Proposal Development Process (PDP) is a highly competitive process critical to generating revenue for government contractors. The Analysis of Alternatives (AoA) process, a sub-process of the PDP, is conducted to develop complex IT solutions covering a wide range of technologies to meet the requirements in a Request for Proposals (RFP) from a government agency. To meet enterprise cost and productivity goals and maintain a competitive advantage in the marketplace, there is a need to decrease the mean time required for AoA by 33% and its variability by 25%, while maintaining or increasing AoA quality and keeping the cost below $100,000 per AoA. A detailed analysis of the AoA process divides its 24 tasks into four categories: labor intensive, decision making, experience recall, and networking. Based on this analysis of task categories, three improvements to the process are identified: implementing an improved file management system (e.g. Intravation Inc.), implementing a content management system (e.g. EMC Inc.), and maintaining a sanitized content repository. An added-value alternative, optimizing staffing levels for the AoA process, is also considered. The effects of implementing combinations of alternatives were modeled using a Monte Carlo discrete-event simulation model, which simulates the mean time required and the time variability for each AoA task as well as the quality of the AoA output.
An analysis of cost versus utility shows that the combination of adjusting staffing levels and maintaining a sanitized content repository holds the highest value among the alternative configurations that meet the stakeholders’ needs, with a mean duration reduction of 43.91%, a duration variability reduction of 37.50%, and an AoA output quality improvement of 10.21%, at a per-AoA cost of $50,000 and a total investment cost of $230,000 per year.

Submitted to: Dr. Sherry
Submitted for: SYST 495 - Senior Design
Submitted by: Saad El Beleidy, Peyman Jamshidi, Jared Kovacs, Gabriel Lewis
Project Sponsors: Martin Nordberg, Rob Oates (Civilian and National Security Division, Vangent, Inc.)

Table of Contents
1.0 Context
  1.1 Project Sponsor: Civilian and National Security Division, Vangent Inc.
  1.2 The Proposal Development Process (PDP)
  1.3 The Technical Solution Development Process
  1.4 The Analysis of Alternatives (AoA) Process
    1.4.1 The Define the Problem Domain Phase
    1.4.2 The Define Evaluation Criteria Phase
    1.4.3 The Explore Alternate Solutions Phase
    1.4.4 The Evaluate Solutions Phase
  1.5 The Final Output of AoA: The Ranked List of Alternatives
  1.6 Issues in the AoA Process
    1.6.1 Limited and Variable Availability of Information
    1.6.2 Limited and Variable Applicability of Information
    1.6.3 Variable Difficulty of the AoA
    1.6.4 The Effect of Low Availability or Applicability of Information on the Quality of AoA Output
    1.6.5 Non-Optimized Staffing Levels in the AoA
    1.6.6 Entirely Overhead Costs
2.0 Stakeholder Analysis
  2.1 Key Stakeholders
  2.2 Primary Stakeholders
  2.3 Stakeholder Goals
  2.4 Stakeholder Conflict
3.0 Problem and Need Statements
  3.1 Problem Statement
  3.2 Need Statement
4.0 Design Alternatives
  4.1 Optimized Staffing Levels
  4.2 Information Technology Alternatives
    4.2.1 File Management System
    4.2.2 Content Management System
    4.2.3 Sanitized Document Repository
  4.3 Cost of Alternatives
5.0 Method of Analysis
  5.1 Simulation Design
    5.1.1 Model Assumptions
    5.1.2 Simulation Inputs and Outputs
      5.1.2.1 Simulation Inputs
      5.1.2.2 Simulation Outputs
    5.1.3 Simulation Process Logic
    5.1.4 Simulation Calculations
  5.2 Design of Experiment
6.0 Results and Analysis
  6.1 Simulation Results
    6.1.1 AoA Mean Duration Reduction
    6.1.2 AoA Duration Variability Reduction
    6.1.3 AoA Output Quality Increase
  6.2 Cost-Benefit Analysis
    6.2.1 Utility Function Ranks
    6.2.2 Sensitivity Analysis for the Utility Ranks
    6.2.3 Cost versus Utility
  6.3 Recommendations
7.0 Project Budget and Management
  7.1 Work Breakdown Structure
  7.2 Earned Value Management
References
Appendix A: The Decision and Analysis Review Process Diagram
Appendix B: Percent Composition of Task Types for Each AoA Task
Appendix C: Arena Simulation Model Documentation

1.0 Context
1.1 Project Sponsor: Civilian and National Security Division, Vangent Inc.
This project is sponsored by the Civilian and National Security (CNS) Division at Vangent, Inc. Vangent is a government contractor based in the greater Washington, D.C. area that specializes in consulting, information technology, and management solutions, as well as business process solutions and system integration services. Vangent employs about 7,500 people across the country and provides solutions to U.S. and international governments, as well as to some private organizations such as educational institutions and other corporations. The contracts that Vangent wins, and the associated solutions it provides, are usually large, ranging in value from several million dollars to over one hundred million dollars. The CNS division within Vangent provides solutions to key customers such as the U.S. Department of State, Department of Education, and Department of Labor.
It responds with proposals to between 15 and 25 solicitations per year. The proposals developed by the CNS division are highly complex, often incorporating multiple types of technology into one solution [1]. The environment in which the CNS division operates is highly competitive: each proposal it generates is estimated to face five to ten competing proposals submitted by other contractors in response to the same solicitation. Recent government budget cuts, along with further expected cuts, are likely to make this environment even more competitive. For example, due to a Presidential directive, federal contract spending decreased from $550 billion to $510 billion between fiscal year 2009 and fiscal year 2011 [2]. Efficiency is therefore key in all government contractor operations, especially in proposal development, where revenue is won or lost.

1.2 The Proposal Development Process (PDP)
Information Technology (IT) solutions government contractors are private commercial organizations that earn revenue by providing IT or IT-related solutions and services to meet government needs. Figure 1 shows the high-level process by which needs are identified and solutions are proposed and selected [1] [3]. The process begins when a need for a new system is identified in one of many government entities, such as government offices or field operators, which develop requirements based on their needs. An acquisitions committee forms these requirements into a formal solicitation, such as a Request for Proposal (RFP). The acquisitions committee is responsible for acquiring new systems to meet government needs and for ensuring an unbiased evaluation of proposed solutions, and so is required by law to be independent of the government entity voicing the need.
Once the formal solicitation has been made public, the contractor makes a bid decision: whether or not to pursue the solicitation by submitting a proposal that answers the need. If the contractor decides to pursue the solicitation, it develops a proposal through three roughly parallel processes: 1) Technical Solutions Development, in which the technical solution is designed and the analysis of alternatives is conducted; 2) Proposal Writing, in which a team of technical experts and writing experts crafts the formal proposal; and 3) Budget and Management, which consists of managing the Technical Solutions Development and Proposal Writing processes. The end result of this proposal development effort is the formal written proposal, which is submitted back to the acquisitions committee. Because any number of government contractors may submit proposals, the acquisitions committee must evaluate all of them and select the “best alternative” based on the defined requirements. Once the selection is complete, the contractor provides the selected solution to the government entity.

Figure 1: Level 1 Diagram, High Level Solicitation and Proposal Generation Process

1.3 The Technical Solution Development Process
Within the PDP, the contractor carries out an in-depth design process to determine the technical solution to propose in response to the government entity’s need. As mentioned previously, this is called the “Technical Solution Development” process (Figure 2) [3]. This phase of proposal development is conducted in three steps: 1) Analysis of Alternatives (AoA), 2) Alternatives Analysis, and 3) Integration of Assets.
In Analysis of Alternatives, the possible solutions the contractor could propose are identified and ranked against the solicitor’s requirements as documented in the solicitation, which is the primary input to this step; the result is a “Ranked List of Alternatives.” Alternatives Analysis consists of activities that determine whether the previously identified alternatives actually meet the solicitor’s requirements, regardless of their rank in the analysis. The decision block in Figure 2 represents the potential for these two steps to be repeated many times if there are many different technologies to consider in the solution. Once all technologies have been addressed, all assets to be included in the proposed solution, including technologies, are integrated into a coherent whole, resulting in the solution to be proposed.

Figure 2: Level 2 Diagram, Technical Solution Development Process for Proposal Development

1.4 The Analysis of Alternatives (AoA) Process
According to the project sponsors, the process by which alternative solutions are identified and evaluated is Vangent’s Decision and Analysis Resolution (DAR) process, which is divided into four phases: 1) Define the Problem Domain, 2) Define Evaluation Criteria, 3) Explore Alternate Solutions, and 4) Evaluate Solutions (Figure 3) [3]. The primary input to AoA is the solicitation, and the final output of AoA is the “ranked list of alternatives” mentioned previously. Key external information relevant to decision making in the AoA is requested and obtained at several points in the process. This information includes 1) research from past AoA efforts, 2) industry research (including technology specifications), 3) customer knowledge, 4) subject matter expert opinions, and 5) internal survey responses [1].
Of particular importance among these is past research, which may completely or partially replace work done in the AoA, greatly reducing its duration. In Vangent’s CNS division, the “Solutions Architect” (SA), a technical expert employee, is the primary (and usually the only) person conducting the AoA process, and is referred to from here on as the performer of AoA [1]. A diagram of the 24 tasks that make up the AoA phases can be found in Appendix A.

Figure 3: Level 3 Diagram, AoA Phases: from the Decision and Analysis Resolution

The duration of the AoA varies with that of the larger proposal development effort, usually comprising about 20% of the whole PDP. Three common PDP/AoA durations are considered representative of the overall duration of the process. The common PDP durations are 1) twelve months, with a likelihood of ~0.04, 2) six months, with a likelihood of ~0.60, and 3) one month, with a likelihood of ~0.36. As shown in Figure 4, the corresponding AoA durations are 1) eight weeks, 2) four weeks, and 3) about one week [1].

Figure 4: AoA Durations and their respective probabilities (likelihood versus AoA duration in weeks)

Each phase of the AoA also takes a certain proportion of the time required for the entire AoA (Figure 5): about 20% for the “Define the Problem Domain” phase, about 10% for the “Define Evaluation Criteria” phase, about 30% for the “Explore Alternate Solutions” phase, and about 40% for the “Evaluate Solutions” phase [1].

Figure 5: AoA Phase Durations and their respective probabilities (mean duration as a percentage of the AoA, by phase)

Because almost all of the AoA process is intellectual labor, it involves multiple types of tasks, reflecting the nature of the work performed in the AoA.
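Before turning to the task types, the duration data in Figures 4 and 5 can be combined into an expected AoA duration and a per-phase breakdown. The sketch below is a minimal illustration, assuming the three representative durations are the only possible outcomes and treating “about one week” as exactly one week; all variable and function names are hypothetical and are not taken from the report’s Arena model.

```python
import random

# Representative AoA durations in weeks and their likelihoods (Figure 4).
# "About one week" is treated as exactly 1.0 week (an assumption).
AOA_DURATION_WEEKS = {8.0: 0.04, 4.0: 0.60, 1.0: 0.36}

# Share of total AoA time taken by each phase (Figure 5).
PHASE_SHARE = {
    "Define the Problem Domain": 0.20,
    "Define Evaluation Criteria": 0.10,
    "Explore Alternate Solutions": 0.30,
    "Evaluate Solutions": 0.40,
}


def expected_duration(dist):
    """Probability-weighted mean of a discrete duration distribution."""
    return sum(weeks * p for weeks, p in dist.items())


def phase_durations(total_weeks):
    """Split a total AoA duration across the four phases by time share."""
    return {phase: total_weeks * share for phase, share in PHASE_SHARE.items()}


def sample_duration(rng):
    """Draw one AoA duration (weeks) from the discrete distribution."""
    durations = list(AOA_DURATION_WEEKS)
    likelihoods = list(AOA_DURATION_WEEKS.values())
    return rng.choices(durations, weights=likelihoods, k=1)[0]


mean = expected_duration(AOA_DURATION_WEEKS)
print(f"Expected AoA duration: {mean:.2f} weeks")  # 0.32 + 2.40 + 0.36 = 3.08
for phase, weeks in phase_durations(mean).items():
    print(f"  {phase}: {weeks:.2f} weeks")

# Monte Carlo check: the sample mean should approach the expected value.
rng = random.Random(0)
samples = [sample_duration(rng) for _ in range(10_000)]
print(f"Sample mean over 10,000 draws: {sum(samples) / len(samples):.2f} weeks")
```

With these inputs the expected AoA duration is 3.08 weeks, of which about 1.23 weeks fall in the “Evaluate Solutions” phase.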
The task types differ in the variability of the time required to accomplish them, which gives insight into potential alternatives for improvement. The task categories are 1) Labor Intensive, 2) Decision Making, 3) Experience Recall, and 4) Networking. Each task can be described by some or all of the task types, in proportions defined through stakeholder knowledge elicitation. Labor-intensive tasks do not require an expert but are still time-consuming. They have a low time variability of ±5% of the original duration (all variabilities are one standard deviation from the mean) and make up about 35% of the AoA. Decision-making tasks require an expert decision maker, for example to make judgment calls about the weights of the evaluation criteria. They carry a high variability of ±30% of the original duration and make up about 19% of the AoA. Experience-recall tasks require the decision maker to draw on his or her personal, qualitative experience or memory. They differ from decision-making tasks in that the decision maker bases the activity on a past activity rather than necessarily making a new decision. These tasks have a medium variability of ±20% of the original duration and make up about 19% of the AoA. Finally, networking tasks involve interpersonal interaction and communication, for example obtaining the opinion of a subject matter expert (SME). Networking tasks carry a high variability of ±30% of the original duration and make up about 26% of the AoA. Table 1 shows the variabilities and the percent composition of the AoA for each task type. The percent composition of task types across the AoA process is found by aggregating the percent composition of task types in each individual task, given in Appendix B [1].
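As a rough cross-check of these composition figures, the per-phase task-type mixes reported in Tables 2 to 5 can be weighted by the phase time shares from Figure 5. This is a minimal sketch under stated assumptions, not the authors’ method: the report aggregates per-task compositions from Appendix B, which are not reproduced here, so small differences from Table 1 are expected. The sampling helper is hypothetical; it assumes a normal distribution clipped at zero with the one-standard-deviation values stated in the text above, since the report does not specify the distribution at this point.

```python
import random

# Share of total AoA time spent in each phase (Figure 5).
PHASE_SHARE = {"problem": 0.20, "criteria": 0.10, "explore": 0.30, "evaluate": 0.40}

# Percent of each phase spent on each task type (Tables 2-5).
PHASE_MIX = {
    "problem":  {"labor": 25, "decision": 23, "recall": 21, "network": 31},
    "criteria": {"labor": 42, "decision": 20, "recall": 18, "network": 20},
    "explore":  {"labor": 36, "decision": 15, "recall": 13, "network": 36},
    "evaluate": {"labor": 38, "decision": 21, "recall": 23, "network": 18},
}

# One standard deviation as a fraction of the original duration (from the text).
CV = {"labor": 0.05, "decision": 0.30, "recall": 0.20, "network": 0.30}


def aggregate_mix(shares, mixes):
    """Weight each phase's task-type percentages by the phase's time share."""
    totals = {}
    for phase, share in shares.items():
        for task_type, pct in mixes[phase].items():
            totals[task_type] = totals.get(task_type, 0.0) + share * pct
    return totals


def sample_task_duration(nominal, task_type, rng):
    """Hypothetical helper: draw a duration from a normal distribution with
    std = CV * nominal, clipped at zero so no duration is negative."""
    return max(0.0, rng.gauss(nominal, CV[task_type] * nominal))


mix = aggregate_mix(PHASE_SHARE, PHASE_MIX)
for task_type, pct in mix.items():
    print(f"{task_type}: {pct:.1f}%")
# labor: 35.2%, decision: 19.5%, recall: 19.1%, network: 26.2%

rng = random.Random(42)
print(f"{sample_task_duration(10.0, 'network', rng):.2f}")
```

The phase-level roll-up reproduces the text’s figures of roughly 35%, 19%, 19%, and 26% for the four task types.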
Task Category        % of AoA   Variability
Labor Intensive      35.2%      Low (±22%)
Decision Making      19.2%      High (±54%)
Experience Recall    19.2%      Medium (±44%)
Networking           26.3%      High (±100%)
Table 1: AoA Task Type Variabilities and Percent Composition of the AoA

1.4.1 The Define the Problem Domain Phase
In the “Define the Problem Domain” phase (Figure 6), the requirements for alternate solutions are defined and rolled up into “key” requirements. These requirements are first obtained directly from the primary input of AoA, the solicitation, but are also drawn from the “technical reference model” provided by the solicitor. The “technical reference model” document contains requirements and recommendations focused on the technical functionality of the government entity’s operations, including the solicitor’s past experience and, where relevant, preferred functionality or technology vendors. Another input is customer knowledge gained through public Q&A sessions, in which the contractor may or may not choose to participate based on the Solutions Architect’s subjective assessment of whether participation is needed. Other factors in this decision include the risks of unintentionally sharing expertise through public questions or revealing weaknesses that could be exploited. The requirements are divided into two categories: functional and non-functional. Functional requirements include “technical” and “environmental” requirements. “Technical” functional requirements deal with the technical aspects of the system’s functionality, such as performance, input/output, or interface requirements. “Environmental” functional requirements concern the system’s impact on its environment, including the working area and the system’s users. Non-functional requirements are divided into “technical”, “environmental”, “financial”, and “political” requirements.
Non-functional “technical” requirements refer to the parts of the system’s technical aspects that are not related to functionality, and non-functional “environmental” requirements refer to the same for environmental concerns. “Financial” requirements refer to cost constraints and other financially oriented concerns of the system, and “political” requirements are drawn from the current political climate or past laws that place constraints on the system [3].

Once these requirements are defined, they are “rolled up” into “key” functional and non-functional requirements. Based on his or her expert knowledge and the solicitation’s specifications, the solutions architect determines which requirements are important enough to be kept separate from the rest and which can be aggregated, or “rolled up,” into a collection represented by a single “key” requirement.

Figure 6: The “Define the Problem Domain” Phase

The percent composition of task types in this phase is shown in Table 2. This phase includes significant portions of each task type, due in large part to the diverse nature of the initial work done in it. Defining requirements may be considered primarily labor-intensive, but it may also involve consulting colleagues if the SA is unfamiliar with the problem area. Rolling up requirements into “key” requirements is an expertise-based task that also draws heavily on past experience [1].

Task Category        % of Phase
Labor Intensive      25%
Decision Making      23%
Experience Recall    21%
Networking           31%
Table 2: Percent Composition of Task Types in the “Define the Problem Domain” Phase

1.4.2 The Define Evaluation Criteria Phase
The “Define Evaluation Criteria” phase (Figure 7) includes the actions necessary to develop clear, measurable evaluation criteria from the previously defined requirements, for use in evaluating the alternatives. Criteria are also defined from the solutions architect’s past experience.
The criteria are divided into two parts: technical criteria and business criteria. Technical criteria concern the technical, functionality-related aspects of the system, such as performance, and are measured by performance metrics. Business criteria concern the financial and cost-related aspects of the system, along with other qualitatively defined aspects, rather than its functionality. The evaluation criteria are documented as they are defined and compiled into a coherent set of evaluation criteria [3].

Figure 7: The “Define Evaluation Criteria” Phase

This phase is heavily labor-intensive, involving extensive translation of requirements into measurable criteria and consultation of past research for previously used metrics. However, it also includes substantial work of the other task types. The percent composition of task types in this phase is shown in Table 3 [1].

Task Category        % of Phase
Labor Intensive      42%
Decision Making      20%
Experience Recall    18%
Networking           20%
Table 3: Percent Composition of Task Types in the “Define Evaluation Criteria” Phase

1.4.3 The Explore Alternate Solutions Phase
The third phase of the AoA is the “Explore Alternate Solutions” phase (Figure 8). In this phase, the Solutions Architect researches and uses whatever means are available to identify reasonable candidates for the proposed solution. The activities in this phase are obtaining a subject matter expert’s (SME’s) opinion, conducting personal market research in literature and Internet resources, conducting internal surveys to determine the company’s capabilities with regard to the technology at hand, and, finally, using industry research organizations (IROs). IROs are organizations that conduct and maintain thorough market research in various technology areas and make their findings available for purchase.
The solutions architect makes numerous run-time decisions about which of these methods for identifying alternatives are actually necessary. For example, if the proposal deals with a very simple and well-known technology area, the solutions architect is less likely to consult an SME, but if the problem is complex and unfamiliar, he or she will almost certainly find and consult one. The alternatives are documented as they are identified and compiled into a coherent set of alternatives [3].

Figure 8: The “Explore Alternate Solutions” Phase

This phase contains substantial portions of both labor-intensive and networking tasks. Many of the research tasks require little expertise but take a long time, making them labor-intensive. Consulting an SME, however, is largely a networking activity, as it involves collaborating with others in the SA’s social network to learn about the available alternatives. The percent composition of task types in this phase is shown in Table 4 [1].

Task Category        % of Phase
Labor Intensive      36%
Decision Making      15%
Experience Recall    13%
Networking           36%
Table 4: Percent Composition of Task Types in the “Explore Alternate Solutions” Phase

1.4.4 The Evaluate Solutions Phase
The final phase of AoA is the evaluation of the alternate solutions, or “Evaluate Solutions” (Figure 9). In this phase, each alternate solution is evaluated against each evaluation criterion using five methods of analysis to develop a ranking of the alternatives. The methods are separated into qualitative analyses and quantitative analyses. The qualitative analysis is conducted either as a somewhat informal pro/con analysis or as a formal Kepner-Tregoe analysis. The solutions architect makes a judgment call as to whether to conduct the pro/con analysis (for smaller, less complex solutions) or the Kepner-Tregoe analysis (for larger and more complex solutions).
Both of these analyses depend heavily on the subjective assessment of the solutions architect rather than on a predefined scoring mechanism. The quantitative analyses, by contrast, include a cost analysis based on the costs of the individual alternate solutions and a quantitative benefit analysis based on numerically measured aspects of the system, such as performance metrics. All of these analyses are then combined in a cost-benefit analysis to determine the final ranking of alternatives. The final output of the cost-benefit analysis, and of the entire AoA process, is the ranked list of alternatives [3].

Figure 9: The “Evaluate Solutions” Phase

This phase is heavily labor-intensive, as it deals primarily with using information gained earlier in the process for calculations rather than generating new information. However, the analysis, especially the qualitative portion, relies on the expertise of the SA to make the various subjective judgment calls that determine the ranks of the alternate solutions. The percent composition of task types in this phase is shown in Table 5 [1].

Task Category        % of Phase
Labor Intensive      38%
Decision Making      21%
Experience Recall    23%
Networking           18%
Table 5: Percent Composition of Task Types in the “Evaluate Solutions” Phase

1.5 The Final Output of AoA: The Ranked List of Alternatives
The final output and goal of the AoA process, the ranked list of alternatives, is a document, often including a table, that shows the scoring of each alternate solution against each evaluation criterion. It consists of 1) a description and defense of the methodology used for the analysis (i.e. the DAR), 2) a list of all alternative solutions considered, 3) scores for each alternative solution against each criterion used in the analysis, along with an aggregate score for each alternative solution, and 4) a description of the conclusions reached based on the aggregate scores.
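To make the structure of the ranked list concrete, the sketch below scores each alternative against each criterion, combines the scores with criterion weights into an aggregate score, and sorts the alternatives. It assumes a simple weighted sum, which the report does not prescribe (the actual ranking comes from the cost-benefit analysis described above); the alternatives, criteria, weights, and scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Alternative:
    name: str
    scores: dict  # criterion name -> score (higher is better)


def aggregate_score(alt, weights):
    """Weighted sum of an alternative's scores over all weighted criteria."""
    return sum(weight * alt.scores[criterion] for criterion, weight in weights.items())


def rank_alternatives(alts, weights):
    """Return (name, aggregate score) pairs sorted from best to worst."""
    scored = [(alt.name, aggregate_score(alt, weights)) for alt in alts]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical criteria and weights (weights sum to 1.0).
weights = {"performance": 0.5, "cost": 0.3, "vendor support": 0.2}

# Hypothetical 1-5 scores; cost is scored so that cheaper alternatives score higher.
alts = [
    Alternative("Product A", {"performance": 4, "cost": 2, "vendor support": 5}),
    Alternative("Product B", {"performance": 3, "cost": 4, "vendor support": 3}),
    Alternative("Product C", {"performance": 5, "cost": 1, "vendor support": 3}),
]

for rank, (name, score) in enumerate(rank_alternatives(alts, weights), start=1):
    print(f"{rank}. {name}: {score:.2f}")
# 1. Product A: 3.60
# 2. Product C: 3.40
# 3. Product B: 3.30
```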
A typical “ranked list of alternatives” uses between 20 (for a small AoA) and 50 (for a large AoA) criteria in the analysis. It may take several forms depending on the solutions architect’s preference, but it always includes a description of the alternatives considered and the methods of analysis, along with a ranked list of the alternatives. The table, if included, shows in matrix format the alternatives weighed against the evaluation criteria and the calculation of total scores, including the weights associated with each criterion where relevant. Because the ranked list is the final goal of the AoA process, any system built to support the AoA process will be designed with this goal in mind: supporting the creation of the ranked list of alternatives [1].

1.6 Issues in the AoA Process
A detailed analysis of the AoA process identifies several issues that motivate improvement.

1.6.1 Limited and Variable Availability of Information
The availability of information, in particular past research, for use in the AoA is highly unpredictable and is usually deemed insufficient by the project stakeholders, especially the SAs. A lack of key information may delay the process or diminish the quality of the AoA output if, for example, some high-value alternate solutions are not considered in the analysis because of missing information about them. The lack of past research may also cause duplicated effort among SAs in the CNS division if, for example, another SA has previously done research relevant to a given AoA, but the current SA redoes the work because he or she does not have access to that research. A key factor limiting information availability is proprietary restrictions on the information, which can require a lengthy process to obtain the necessary access permissions.
The availability of past research and the likelihood of each availability level are shown in Figure 10, where 0% is the bare minimum amount of information that still allows the process to be completed and 100% is the maximum expected availability level [1].

Figure 10: The Relative Expected Availability of Information in the AoA (probability versus relative expected information availability, 0% to 100%)

1.6.2 Limited and Variable Applicability of Information
The applicability of information in the AoA process is also unpredictable and often insufficient. Like a lack of availability, this may cause delays or reduce the quality of the AoA output. Because information often takes considerable effort for the SAs to obtain, its uncertain applicability may cause delays through effort wasted on obtaining information that turns out not to be useful. The applicability of past research and the likelihood of each applicability level are shown in Figure 11, where 0% is the least expected applicability and 100% is the maximum expected applicability of information [1].

Figure 11: The Relative Expected Applicability of Information in the AoA (probability versus relative expected information applicability, 0% to 100%)

1.6.3 Variable Difficulty of the AoA
The difficulty of a given AoA is also unpredictable. AoA difficulty may be influenced by factors such as the SA’s familiarity with the problem area and the complexity of the technology involved, among many others. For a very difficult AoA, high availability and applicability of information are necessary for a high-quality output, whereas for a low-difficulty AoA, the availability and applicability of information have less effect. Thus the importance of information availability and applicability varies and is often not known before the AoA begins [1].
1.6.4 The Effect of Low Availability or Applicability of Information on the Quality of AoA Output
The quality of the AoA output, the ranked list of alternatives, is directly related to the availability of information in the AoA. At the lowest expected level of information availability, the quality of the AoA output is reduced to about 60% of what it would be at the maximum expected level. Figure 12 shows the relationship between the availability of information in the AoA and the relative AoA output quality [1].

Figure 12: The Relative Expected Availability of Information in the AoA versus the AoA Output Relative Quality

The quality of the AoA output is also directly related to the applicability of information in the AoA. As Figure 13 shows, at the lowest expected level of information applicability, the AoA output quality decreases to about 40% of what it would be at the maximum expected level [1]. The analysis of the AoA process finds that changes in information availability have a greater effect on AoA output quality than changes in information applicability. Scale factors are therefore determined for the effect of each on AoA output quality: 0.6 for availability and 0.4 for applicability. The overall effect on AoA output quality is calculated as 0.6 multiplied by the effect of information availability plus 0.4 multiplied by the effect of information applicability [1].
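The weighted combination above can be written as a small function. This sketch assumes, as a simplification not stated in the report, that the curves in Figures 12 and 13 are linear between the stated endpoints (about 60% quality at the lowest availability and about 40% at the lowest applicability, rising to 100% at the maximum). Inputs are relative levels from 0.0 to 1.0, and the function names are hypothetical.

```python
AVAILABILITY_SCALE = 0.6   # scale factor for information availability
APPLICABILITY_SCALE = 0.4  # scale factor for information applicability


def _clamp01(x: float) -> float:
    """Keep a relative level inside the 0%-100% range used in Figures 10-13."""
    return max(0.0, min(1.0, x))


def availability_effect(availability: float) -> float:
    """Relative quality from availability; linear 0.6 -> 1.0 (assumed shape)."""
    return 0.6 + 0.4 * _clamp01(availability)


def applicability_effect(applicability: float) -> float:
    """Relative quality from applicability; linear 0.4 -> 1.0 (assumed shape)."""
    return 0.4 + 0.6 * _clamp01(applicability)


def overall_quality(availability: float, applicability: float) -> float:
    """0.6 x availability effect + 0.4 x applicability effect."""
    return (AVAILABILITY_SCALE * availability_effect(availability)
            + APPLICABILITY_SCALE * applicability_effect(applicability))


if __name__ == "__main__":
    for a, p in [(1.0, 1.0), (0.5, 0.5), (0.0, 0.0)]:
        print(f"availability={a:.0%} applicability={p:.0%} "
              f"-> quality={overall_quality(a, p):.2f}")
```

Under the linear assumption, the worst case (both levels at 0%) gives 0.6 × 0.6 + 0.4 × 0.4 = 0.52 of the maximum quality.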
Figure 13: The Relative Expected Applicability of Information in the AoA versus the AoA Output Relative Quality

1.6.5 Non-Optimized Staffing Levels in the AoA
Current staffing arrangements for the AoA process are potentially non-optimal. A single SA conducts the entire AoA, leaving the parallel structure of much of the AoA process unexploited. If multiple resources were to conduct the AoA simultaneously, efficiency could potentially be higher because much of the labor can be performed in parallel [3].

1.6.6 Entirely Overhead Costs
The costs of the AoA process are entirely overhead for the CNS division, and they vary with the size of the AoA and of the proposal. The total yearly burden of a single SA conducting AoAs is estimated at $200,000, from which a total burden rate of $100 per hour is calculated for the SAs. Thus the labor cost per AoA ranges from $6,000 to $32,000, as shown in Figure 14. The yearly cost of AoAs for the CNS division is highly variable, ranging from a minimum of $150,000 to a maximum of $800,000, with an expected yearly cost of $326,000. The expected cost per AoA is $13,040 [1].

Figure 14: AoA Durations with Their Respective Costs and Probabilities (probability versus AoA duration in weeks, 0 to 10; labeled costs of $6,000, $16,000, and $32,000)

2.0 Stakeholder Analysis
2.1 Key Stakeholders
Key stakeholders are those within the project sponsor’s organization, Vangent Inc.’s Civilian and National Security Division, who are directly involved in driving and/or performing AoA in the proposal development process.

2.1.1 Solutions Architects
Solutions Architects (SAs) are technical experts and are the employees primarily responsible for conducting the entire AoA process. They perform market research and collect component specifications and data from vendors through interpersonal communication and/or by reviewing product literature.
They then organize the solutions development effort by combining available technologies and/or services into potential solutions that meet solicitor requirements. Finally, by performing an alternatives analysis, solutions architects provide a recommended solution to propose to the solicitor [1].

2.1.2 Capture Managers
Capture Managers identify, track, and review bid opportunities and recommend bid or no-bid decisions. If a bid is made, they develop a winning bid strategy by understanding the solicitor, the solution, and the competitive environment. They also oversee pricing, identify the resources required, and manage process execution [1].

2.1.3 Proposal Managers
Proposal Managers develop and manage the proposal plan and schedule. They structure, develop, and write proposals around the RFP and may also leverage archived information from existing proposals [1].

2.2 Primary Stakeholders
Primary Stakeholders are categorized as beneficiaries of successful proposals.

2.2.1 Executives
Executives are interested in the high-level drivers, goals, and objectives of the organization, and in how these are translated into an effective process and IT architecture to advance the business.

2.2.2 Solicitors
Solicitors are interested in the best-value solution that meets the requirements, constraints, and standards described in the RFP.

2.2.3 Vendors
Vendors communicate and distribute component data and specifications to solutions architects. They supply hardware, software, and IT services that may be integrated into a solution that is analyzed and chosen in an AoA and recommended in a proposal.

2.3 Stakeholder Goals
Once the stakeholders were categorized, their major goals were elicited; they are summarized in Table 6. The high-level CxOs, the executives of the IT solutions contracting company, aim to maximize profitability by increasing revenue from won contracts while minimizing resource and proposal development costs.
These objectives translate directly to the capture managers and proposal managers, whose main goals are, respectively, to maximize the probability of developing winning proposals and to maximize proposal throughput. Increasing solution discriminability and quality raises the probability of winning a contract, and increasing the throughput of proposals that are captured and delivered requires greater AoA process efficiency to meet demand. The Solutions Architects, who are the end users of this system, want to maximize their productivity while conducting AoA, which will help them avoid working overtime as the deadline for providing a solution approaches. This also helps decrease non-billable overhead costs, in line with the executives’ goals. SAs believe that increased access to past market research data and to past AoAs and proposals, which may contain similar decisions and analyses applicable to their current work, will help them increase the quality of the AoA output and meet their productivity goal. The goals of the external stakeholders, the solicitors and vendors, are also noted here, since SAs must take them into consideration when conducting AoA [1] [3].
Stakeholder | Major Goals | How to Meet Goals
CxO (Corporate Functions), e.g., CEO, CFO, CIO, COO | Maximize Profitability | ↑ Revenue from won contracts; ↓ Resource/Proposal Development costs
Capture Manager (Project Organization) | Maximize Probability of Win | ↑ Solution Discriminability; ↑ Solution Quality
Proposal Manager (Project Organization) | Maximize Proposal Throughput | ↑ AoA Process Efficiency
Solutions Architect (End-User) | Maximize productivity; Avoid overtime | ↑ Past Market Research Data Availability; ↑ Proposal data applicability
Solicitors (External) | Maximize solution utility | Meet Requirements; ↑ Solution Quality; ↓ Contract costs
Vendor (External) | Maximize Competitiveness | ↑ Product Marketability; ↑ Data Accuracy
Table 6: Stakeholders, Stakeholder Goals, and Methods to Meet Stakeholder Goals

2.4 Stakeholder Conflict
There is a conflict between the major goals of the proposal development managers and those of the solutions architects, as outlined in Table 6. The managers want to increase proposal throughput in order to meet the market demand from solicitors and raise the probability of increasing revenue from won contracts, which would require an increase in AoA throughput. However, the solutions architects have limited time and personnel available to conduct AoA, which limits their ability to meet the demand for increased proposal throughput and creates the tension between SAs and managers shown in Figure 15. Adding further strain on the available time, increasing the quality of an intellectual labor process such as this typically also increases the time needed to complete it. To satisfy both stakeholder groups, we have determined that overall process efficiency must be increased in order to increase proposal throughput.
In addition, the architects have stated that they would like greater availability of data collected in past market research and of analyses conducted for past proposals, in order to minimize their solutions development effort [1].

Figure 15: Stakeholder Interactions and Tension

3.0 Problem and Need Statements
3.1 Problem Statement
During a time of national economic downturn, federal contract spending cuts have decreased available contract revenue and increased competition between government contractors. These factors have increased the time sensitivity of proposal development, specifically of the AoA process.

3.2 Need Statement
There is a need for Analysis of Alternatives process improvements that reduce the mean time duration by at least 33% and the variability by 25%, while maintaining or increasing the quality of the AoA proposed solution and keeping maximum costs below $100,000 per AoA.¹

¹ Need statement obtained via stakeholder knowledge elicitation and validated with key stakeholders.

4.0 Design Alternatives
Alternatives are developed from a detailed understanding of the AoA process and further research, with the goal of addressing the stakeholders’ needs. Two types of alternatives are considered: 1) optimizing the personnel resources that conduct AoA, and 2) information technology solutions that facilitate the storage and retrieval of technical AoA material. The defined alternatives are not mutually exclusive, since an integrated system composed of a combination of alternatives that target the need may provide maximum utility to the stakeholders.

4.1 Optimized Staffing Levels
The optimized staffing levels alternative involves adding one additional solutions architect to help conduct AoA. This holds many potential benefits for the AoA process, because the parallel nature of AoA tasks is not exploited when only one solutions architect conducts the AoA.
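Why a second architect helps can be seen with a small scheduling sketch: tasks that can run in parallel are assigned greedily, longest first, to whichever architect becomes free first, and phases run one after another. This is an idealized illustration under stated assumptions, not the report's Arena model: the task hours are made up (only the task counts per phase follow Table 7), tasks within a phase are treated as fully independent, and coordination costs such as the slower decision making noted in Table 8 are ignored.

```python
import heapq
from typing import List

# Hypothetical hours for the parallelizable tasks in each phase
# (6, 4, 5, and 4 tasks, matching the counts in Table 7).
PHASE_TASK_HOURS: List[List[float]] = [
    [8, 6, 5, 4, 3, 2],   # Define Problem Domain
    [6, 5, 4, 3],         # Define Evaluation Criteria
    [10, 8, 6, 5, 4],     # Explore Alternate Solutions
    [9, 7, 5, 4],         # Evaluate Solutions
]


def makespan(task_hours: List[float], architects: int) -> float:
    """Finish time of one phase using longest-processing-time-first list scheduling."""
    free_at = [0.0] * architects          # min-heap of times each architect becomes free
    heapq.heapify(free_at)
    for hours in sorted(task_hours, reverse=True):
        start = heapq.heappop(free_at)    # architect who is free earliest
        heapq.heappush(free_at, start + hours)
    return max(free_at)


def aoa_duration(phases: List[List[float]], architects: int) -> float:
    """Phases run in sequence, so the AoA duration is the sum of phase makespans."""
    return sum(makespan(tasks, architects) for tasks in phases)


if __name__ == "__main__":
    one = aoa_duration(PHASE_TASK_HOURS, architects=1)
    two = aoa_duration(PHASE_TASK_HOURS, architects=2)
    print(f"1 architect: {one:.0f} h, 2 architects: {two:.0f} h "
          f"({100 * (one - two) / one:.0f}% shorter)")
```

With these numbers the idealized duration drops from 104 to 54 hours, roughly 48%. The report's simulation, which also accounts for non-parallel tasks and the Table 8 efficiency changes, estimates a smaller 36.53% reduction for this alternative alone.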
Adding a solutions architect is expected to have a significant impact on process duration. Of the 24 tasks identified across all phases of the AoA process, 19 may be conducted in parallel with at least one other task, as indicated in Table 7, which can substantially increase efficiency when an additional architect is available.

AoA Phase                      Parallel Tasks
Define Problem Domain          6
Define Evaluation Criteria     4
Explore Alternate Solutions    5
Evaluate Solutions             4
Table 7: Number of Parallel Tasks for Each AoA Phase

Solutions architects are expected to be able to conduct certain tasks simultaneously, making the AoA process more efficient. An additional solutions architect also brings a larger pool of contacts through the two architects’ social networks, which may reduce the time needed for tasks that rely on networking. With more subject matter experts, vendors, and professionals available between the solutions architects, more knowledge and more applicable products are expected to be available for use in the AoA. One potential drawback is that differences of opinion between two individuals can create conflict in decision making, which could cause decision-making tasks to take longer; this appears as a decrease in efficiency for decision-making tasks in Table 8.

Task Category        Efficiency Change
Labor Intensive      No change
Decision Making      -10%
Experience Recall    No change
Networking           +10%
Table 8: Efficiency Changes for AoA Task Categories

4.2 Information Technology Alternatives
Key stakeholders have indicated that they currently use Microsoft SharePoint to share files between architects, and that it does not provide the level of access or the features they need, resulting in a low-quality and underutilized system.
The proposed information technology alternatives seek to directly increase the efficiency of all tasks (task category percentages for the entire AoA process are given in Table 1 in the “Context” section) by allowing for the storage, retrieval, and direct sharing of current and past technical solution material. Past proposal material could be used as a reference for current work and may even be directly applicable to the AoA at hand, potentially removing the need for new work in a given AoA task. Products from two vendors are considered because of existing license agreements or other relationships with the project sponsors.

4.2.1 File Management System
A file management system is an IT solution that allows files to be stored in a hierarchical structure on a back-end server, where they can be managed and accessed by solutions architects. It can be integrated with existing desktop productivity applications that architects are familiar with, and it promotes intranet collaboration by increasing the availability of information. A file management system can easily be scaled by adding storage space, and it should require only minimal technical support, since it is not overly complex in terms of hardware and software. User tailoring is limited to the basic permissions required for accessing files, and search functionality is limited to filenames, tags, and attributes. The commercial-off-the-shelf (COTS) product considered is Intravation Inc.’s Virtual Proposal Center (VPC). The efficiency gains this product provides for each task category, as elicited by the team, are shown in Table 9.

Task Category        Efficiency Gain
Labor Intensive      +10%
Decision Making      +10%
Experience Recall    +15%
Networking           +5%
Table 9: Efficiency Gains for the File Management System Alternative in Each Task Category

4.2.2 Content Management System
A content management system extends a file management system with additional benefits and features.
It has more robust searching and indexing capabilities that allow full-text and content searches within stored files, making it a higher-quality solution than the file management alternative in terms of information accessibility and availability. It allows users to be assigned roles based on their file-access needs and includes authentication, file check-in and check-out, change tracking, and version control. These features accommodate stricter enterprise security and integrity requirements. However, because a content management system is much more complex, it may require more technical support and training, and problems with authentication and access permissions could delay the availability of information. The COTS product considered is EMC’s Documentum. The efficiency gains this product provides for each task category, as elicited by the team, are shown in Table 10.

Task Category        Efficiency Gain
Labor Intensive      +15%
Decision Making      +15%
Experience Recall    +20%
Networking           +10%
Table 10: Efficiency Gains for the Content Management System Alternative in Each Task Category

4.2.3 Sanitized Document Repository
A sanitized document repository is a collection of files from which proprietary and classified information has been removed. This virtually eliminates the security risks associated with sharing files and may provide quicker access to information, since file-specific access permissions are not required. In addition, because it may be implemented as a simple shared drive, technical support is generally not necessary, as this solution requires no special hardware or software. A potential drawback is that the repository contains only sanitized documents, which could initially limit the quality and quantity of available information until additional files are added and the repository grows.
This alternative will reduce the time variability of the AoA process, since highly variable tasks, such as those requiring decision making, experience recall, and networking, may be partly converted to the labor-intensive task of retrieving past proposal data. The resulting changes in task categories as a percentage of the entire AoA process are shown in Table 11. Newly shared content, which would otherwise have been unavailable to share between solutions architects, may contain similar decisions and analyses that have already been made, sparing the architect from having to start “fresh” on new AoAs. The team-elicited efficiency gains for each task category are also shown in Table 11.

Task Category        Efficiency Gain   Variability   % of AoA (Original)   % of AoA (New)
Labor Intensive      +15%              Low           35%                   40%
Decision Making      +10%              High          20%                   17%
Experience Recall    +10%              Med           19%                   17%
Networking           +5%               High          26%                   26%
Table 11: Efficiency Gains, Variabilities, and Percent Altered for Each AoA Task Category

4.3 Cost of Alternatives
A cost analysis is conducted for each alternative. The optimized staffing levels alternative, one additional SA, is estimated at a $200,000 yearly burden, for a total five-year cost of approximately $1,000,000 [1]. The file management alternative has an estimated total five-year cost of $83,075, consisting of a first-year licensing cost of $75,000 for 100 users plus annual maintenance of $1,615 per year [4]. The content management alternative has an estimated total five-year cost of $202,740, consisting of a first-year licensing cost of $110,665 for 100 users plus annual maintenance of $18,415 per year [5] [6]. The sanitized repository alternative has an estimated five-year cost of $150,000, calculated from the time needed to sanitize AoA documents [1]. Because these alternatives are independent with regard to cost, the cost of any combination of alternatives is the sum of the individual costs.
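The five-year figures above can be reproduced with a few lines of arithmetic. The split between license and maintenance is our reading of the text: the stated totals match a one-time license plus maintenance charged in each of the five years (for example, $75,000 + 5 × $1,615 = $83,075). The labels A1 to A4 follow the design of experiment in Section 5.2; the function names are hypothetical.

```python
YEARS = 5


def five_year_license_cost(first_year_license: float, annual_maintenance: float,
                           years: int = YEARS) -> float:
    """License bought once; maintenance paid in each of the five years (assumed)."""
    return first_year_license + years * annual_maintenance


def five_year_staffing_cost(yearly_burden: float = 200_000, years: int = YEARS) -> float:
    """Yearly burden of one additional solutions architect over five years."""
    return years * yearly_burden


FIVE_YEAR_COST = {
    "A1": five_year_staffing_cost(),                 # optimized staffing levels
    "A2": 150_000,                                   # sanitized repository (report estimate)
    "A3": five_year_license_cost(75_000, 1_615),     # file management (Intravation VPC)
    "A4": five_year_license_cost(110_665, 18_415),   # content management (EMC Documentum)
}


def combination_cost(*alternatives: str) -> float:
    """Costs are independent, so a combination costs the sum of its parts."""
    return sum(FIVE_YEAR_COST[a] for a in alternatives)


if __name__ == "__main__":
    for name, cost in FIVE_YEAR_COST.items():
        print(f"{name}: ${cost:,.0f}")
    print(f"A1 + A2: ${combination_cost('A1', 'A2'):,.0f} over five years")
```

Under this reading, the five-year total for A1 and A2 is $1,150,000, or $230,000 per year, the same yearly implementation cost quoted for the recommended configuration in Section 6.3.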
These costs are reduced by the savings from shorter AoA durations, as discussed later.

5.0 Method of Analysis
The method of analysis used to develop recommendations for the project sponsors consists of a discrete-event simulation of the AoA process and a design of experiment for running the simulation. The simulation models the baseline (current) AoA state and allows for changes that represent the modifications made by the design alternatives. The design of experiment includes all configurations of alternatives considered for application to the system. Based on the simulation results and other information collected about the design alternatives, a utility score is calculated for each alternative configuration according to the utility function elicited from the stakeholders.

5.1 Simulation Design
A Monte Carlo discrete-event simulation is built in Rockwell’s Arena simulation software. It models the current baseline AoA process, as defined by the DAR through stakeholder knowledge elicitation, as well as all alternative configurations considered. The simulation models the basic structure and flow of the process as well as the task categories and the effects of external information flow. The simulation runs 1,000 replications, each simulating one year of proposals (about 25 proposals). For detailed documentation of the Arena simulation model, see Appendix C.

5.1.1 Model Assumptions
The model makes the following assumptions: 1) solutions architects work on one task at a time, 2) solutions architects work on one proposal at a time, 3) the four task categories adequately capture the labor done in the AoA process, and 4) all tasks are of equal importance to the quality of the AoA output.

5.1.2 Simulation Inputs and Outputs
Figure 16 shows the inputs and outputs of the simulation model. The simulation inputs are entirely exogenous, defined from process documentation and stakeholder knowledge elicitation.
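The replication structure described in Section 5.1 can be sketched outside Arena in a few lines. This is a hypothetical stand-in, not the Arena model: the triangular distributions, the 130-hour expected AoA (roughly the $13,040 expected cost per AoA at $100 per hour from Section 1.6.6), and the treatment of every proposal as a single AoA are assumptions, and the per-task delay is a placeholder rather than Equation (1). It does follow model assumptions 1 and 2, so task durations simply add up.

```python
import random
import statistics
from typing import List, Tuple

REPLICATIONS = 1000         # replications, each one simulated year (Section 5.1)
PROPOSALS_PER_YEAR = 25     # about 25 proposals per year
TASKS_PER_AOA = 24          # tasks in the AoA process
EXPECTED_AOA_HOURS = 130.0  # hypothetical: about $13,040 / $100 per hour


def simulate_aoa(rng: random.Random) -> float:
    """Hours for one AoA; placeholder delay model, not the report's Equation (1)."""
    difficulty = rng.triangular(0.7, 1.5, 1.0)   # hypothetical AoA difficulty factor
    base = EXPECTED_AOA_HOURS / TASKS_PER_AOA    # equal base share per task (assumed)
    total = 0.0
    for _ in range(TASKS_PER_AOA):
        # Assumptions 1 and 2: one architect works one task and one proposal at a
        # time, so the AoA duration is the sum of its task durations.
        total += base * difficulty * rng.triangular(0.8, 1.3, 1.0)
    return total


def run(seed: int = 2013) -> Tuple[float, float]:
    """Mean and standard deviation of AoA duration over all replications."""
    rng = random.Random(seed)
    durations: List[float] = [
        simulate_aoa(rng)
        for _ in range(REPLICATIONS)
        for _ in range(PROPOSALS_PER_YEAR)
    ]
    return statistics.mean(durations), statistics.stdev(durations)


if __name__ == "__main__":
    mean, sd = run()
    print(f"mean AoA duration: {mean:.1f} h, standard deviation: {sd:.1f} h")
```

Running the same skeleton with modified per-category factors for each configuration and comparing the two summary statistics against the baseline is the kind of comparison reported in Section 6.1.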
Figure 16: Stakeholder Interactions and Tension

5.1.2.1 Simulation Inputs
The simulation inputs are: 1) the AoA process structure definition, including, for each of the 24 tasks, the relative base duration random variable (expressed as a percentage of the total expected AoA time) and the percentage of each task category composing the task, as well as the inherent duration variability associated with each task category; 2) the task category efficiency indexes for each alternative; 3) the difficulty metric random variable for each AoA; 4) the availability of external information, drawn from a probability distribution; 5) the applicability of external information, also drawn from a probability distribution; and 6) the number of technologies (AoAs required) for each proposal.

5.1.2.2 Simulation Outputs
The outputs of the simulation model are entirely endogenous, calculated by the simulation model, and are: 1) the duration of each phase of the AoA and of the entire AoA; 2) the variability of the duration of each phase and of the entire AoA; and 3) the quality metric for the AoA output.

5.1.3 Simulation Process Logic
The simulation is structured using Arena’s Station modules. The four phases are defined as the major portions of the process logic and together form the path that entities travel through the entire AoA. An entity moves along this path by being assigned values that determine its route through the simulation model, and every entity’s path follows a similar structure: first, the entity passes through variable assignments (some of which allow it to return to the process logic), then through quality calculations, and then through a time delay before returning to the process logic. In the variable assignment stage, variables are assigned to support the process logic, and variables or attributes are assigned to each entity passing through the simulation.
These allow the simulation to perform its calculations. In the quality calculation stage, the information availability and applicability random variables are generated and then used to calculate the task quality metric. The quality metric of the entire proposal is calculated as the average of all the task quality metrics. In the time delay stage, the proposal progresses through four main Process modules: the first is of the “Seize Delay” type, the next two are “Delay” types, and the fourth is a “Delay Release” type. The resource allocated to these modules is the Solutions Architect. Each of the four Process modules performs the delay calculation for one task category. To satisfy the first assumption, that a solutions architect works on one task at a time, the modules are configured to seize the resource at the start of the task, retain it across all four task categories, and release it only when the final delay is complete.

5.1.4 Simulation Calculations
The simulation performs several calculations, most importantly the time delay (generated duration) for each AoA task, the time variability of the durations, and the AoA output quality. The process time delay calculated by the simulation for each task is given by Equation (1), with the variables as follows:
• Tc = Number of Task Categories
• D = Inherent Process Delay for each Process
• E = Task Category Efficiency Index
• W = Task Category Weight (proportion of that task category in the process)
• V = Task Category Variability Factor (RV)
• Tv = AoA Difficulty Factor (RV)
• N = Number of Technologies/AoAs in the Proposal
(1)
The total AoA duration is the sum of the task delays, and the time variability is calculated as the standard deviation of the total AoA duration. The quality metric is summed over each external information flow point and is based on the applicability and availability of information, as shown in Equation (2).
(2)

5.2 Design of Experiment
The simulation configurations that make up the design of experiment are shown in Table 12 (A1 = optimized staffing levels, A2 = sanitized repository, A3 = file management system, A4 = content management system).

Run   Configuration    A1   A2   A3   A4
1     Baseline         -    -    -    -
2     A1               x    -    -    -
3     A1, A2           x    x    -    -
4     A1, A2, A3       x    x    x    -
5     A1, A2, A4       x    x    -    x
6     A1, A3           x    -    x    -
7     A1, A4           x    -    -    x
8     A2               -    x    -    -
9     A2, A3           -    x    x    -
10    A2, A4           -    x    -    x
11    A3               -    -    x    -
12    A4               -    -    -    x
Table 12: Configurations of Alternatives Considered

In simulating each configuration of alternatives, efficiency indexes are used to capture the effect of the tested configuration on the system, in particular on the efficiency of each task category. These efficiency improvements for each alternative configuration were elicited from key stakeholder experts and are shown in Figure 17.

Figure 17: Efficiency Indexes for the Configurations of Alternatives

6.0 Results and Analysis
6.1 Simulation Results
6.1.1 AoA Mean Duration Reduction
Figure 18 shows the percent reduction in AoA duration from the baseline for each configuration of alternatives considered. Configurations that include several alternatives have a greater effect than single alternatives. Only six of the twelve configurations meet both the desired minimum 33% decrease in AoA duration and the 25% decrease in variability; these are marked with darker coloring. Of these, the combination of optimized staffing levels, maintaining a sanitized repository, and implementing a content management system (A1, A2, A4) has the most significant effect, with a 52% decrease in AoA duration from the baseline. Taken singly, the alternative with the greatest impact on mean duration is the optimized staffing levels alternative, with a decrease of 36.53%. Notably, the configurations that do not meet the stakeholders’ need are those that do not include the optimized staffing levels alternative.
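Screening a configuration against the need statement in Section 3.2 is a pass/fail check on four measures. A minimal sketch: the two named configurations use the figures reported in the recommendations (Section 6.3), while the third row and the class and function names are hypothetical. `percent_reduction` shows how a configuration's mean or standard deviation would be compared with the baseline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfigResult:
    name: str
    mean_reduction_pct: float         # % reduction in mean AoA duration vs. baseline
    variability_reduction_pct: float  # % reduction in duration standard deviation
    quality_change_pct: float         # % change in AoA output quality
    cost_per_aoa: float               # USD per AoA


def percent_reduction(baseline: float, value: float) -> float:
    """Percent decrease of a configuration's statistic relative to the baseline."""
    return 100.0 * (baseline - value) / baseline


def meets_need(r: ConfigResult) -> bool:
    """Need statement: >= 33% mean cut, >= 25% variability cut,
    quality maintained or improved, and cost below $100,000 per AoA."""
    return (r.mean_reduction_pct >= 33.0
            and r.variability_reduction_pct >= 25.0
            and r.quality_change_pct >= 0.0
            and r.cost_per_aoa < 100_000)


RESULTS = [
    ConfigResult("A1, A2", 43.9, 37.5, 10.2, 50_000),         # reported in Section 6.3
    ConfigResult("A1, A2, A4", 51.7, 50.0, 10.2, 78_000),     # reported in Section 6.3
    ConfigResult("hypothetical", 20.0, 15.0, 0.04, 40_000),   # made-up failing case
]

if __name__ == "__main__":
    for r in RESULTS:
        print(f"{r.name}: {'meets' if meets_need(r) else 'does not meet'} the need")
```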
Figure 18: Percent Mean Duration Reduction in AoA for Each Alternative Configuration

6.1.2 AoA Duration Variability Reduction
Figure 19 shows the AoA duration variability, measured as a standard deviation, for the baseline and each alternative configuration. Again, configurations with multiple alternatives have a greater effect on variability than any single alternative, and only six configurations meet the goal of a 25% variability reduction. The configurations with the greatest effect are the optimized staffing levels and sanitized repository alternatives coupled with either the file management or the content management alternative (A1, A2, A3; or A1, A2, A4), both with a 50% decrease in variability. As with mean duration, the configurations that do not include the optimized staffing levels alternative do not meet the variability reduction goal.

Figure 19: Percent Duration Variability Reduction in AoA for Each Alternative Configuration

6.1.3 AoA Output Quality Increase
The quality metric improves under certain alternative configurations. Only the sanitized repository alternative significantly affects the quality metric: for configurations that include it, the quality metric increases by 10.18 ± 0.04%, while for those that do not, it improves only marginally (by up to 0.04%), as seen in Figure 20.

Figure 20: Percent Increase in AoA Output Quality for Each Alternative Configuration

6.2 Cost-Benefit Analysis
6.2.1 Utility Function Ranks
A utility function with eight criteria is developed from stakeholder values elicitation using the swing weight method (see Figure 21).
The criteria and their respective weights (in parentheses) are 1) AoA duration reduction (.238), 2) usability of the solution (.190), 3) integrability of the solution with current systems (.167), 4) improvement in the quality of the AoA output (.143), 5) tailorability of the solution, that is, the ability to customize the tool for varied situations (.119), 6) contract and technical support aspects of the solution (.071), 7) AoA duration variability reduction (.048), and 8) scalability of the solution in terms of the number of users supported at once (.024). The most prominent factor in the utility function is time reduction, followed by the usability of the alternative for SAs and its integrability.

Figure 21: Stakeholders’ Utility Function

The alternative configurations are scored on a 1 to 5 scale against each criterion of the stakeholders’ utility function, and the weighted total is calculated for each configuration. The configurations are ranked by their total utility scores, as shown in Table 13. The combination of optimized staffing levels (A1), the sanitized repository (A2), and the content management system (A4) ranks first with a utility of 4.25. The second-ranked configuration, identical to the first but without the content management system, has a utility almost as high, at 3.95.

Table 13: Ranking of Alternative Configurations by Utility Score

6.2.2 Sensitivity Analysis for the Utility Ranks
A sensitivity analysis is performed on the weights of the stakeholders’ utility function for the three highest-ranking configurations and the three most heavily weighted criteria. Table 14 shows the amount by which the weight of each criterion would have to increase for a configuration to overtake the utility of the highest-ranking configuration.
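The weighted total behind Table 13 and a weight sweep of the kind summarized in Table 14 can be sketched together. Two points are assumptions: the swing points 10, 8, 7, 6, 5, 3, 2, 1 are our inference (normalizing them by their sum of 42 reproduces every published weight to three decimals), and the sweep raises one weight by a relative amount and renormalizes the rest proportionally, which may differ from the procedure the report used. The two score profiles are made up for illustration.

```python
from typing import Dict, Optional

# Inferred swing points; normalized, they give .238, .190, .167, .143, .119, .071, .048, .024.
SWING_POINTS: Dict[str, float] = {
    "duration_reduction": 10, "usability": 8, "integrability": 7, "quality": 6,
    "tailorability": 5, "support": 3, "variability_reduction": 2, "scalability": 1,
}

# Hypothetical 1-to-5 scores (not the report's Table 13 values).
LEADER = {"duration_reduction": 5, "usability": 4, "integrability": 4, "quality": 5,
          "tailorability": 4, "support": 4, "variability_reduction": 5, "scalability": 5}
CHALLENGER = {"duration_reduction": 4, "usability": 5, "integrability": 5, "quality": 5,
              "tailorability": 3, "support": 4, "variability_reduction": 4, "scalability": 4}


def normalize(points: Dict[str, float]) -> Dict[str, float]:
    total = sum(points.values())
    return {c: p / total for c, p in points.items()}


def utility(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted total of 1-to-5 criterion scores."""
    return sum(weights[c] * scores[c] for c in weights)


def boost(weights: Dict[str, float], criterion: str, increase: float) -> Dict[str, float]:
    """Raise one weight by a relative amount, then renormalize all weights to sum to 1."""
    raised = dict(weights)
    raised[criterion] *= 1.0 + increase
    return normalize(raised)


def overtake_increase(leader: Dict[str, float], challenger: Dict[str, float],
                      weights: Dict[str, float], criterion: str,
                      step: float = 0.01, limit: float = 5.0) -> Optional[float]:
    """Smallest relative increase (on a step grid) at which challenger >= leader, else None."""
    for i in range(int(round(limit / step)) + 1):
        w = boost(weights, criterion, i * step)
        if utility(challenger, w) >= utility(leader, w):
            return i * step
    return None


if __name__ == "__main__":
    weights = normalize(SWING_POINTS)
    for criterion in ("duration_reduction", "usability", "integrability"):
        inc = overtake_increase(LEADER, CHALLENGER, weights, criterion)
        print(criterion, "never" if inc is None else f"+{inc:.0%}")
```

With these made-up scores, the challenger overtakes the leader once the usability weight rises by about 38% or the integrability weight by about 43%, and never through the duration-reduction weight, because it scores lower on that criterion.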
Utility Function Criteria Alternative Configurations Mean Time Usability Integrability A1, A2 A1, A2, A3 A2, A4 +33% +34% +60% +41% +48% +34% +69% +119% +60%

Table 14: Sensitivity Analysis for Utility Ranks

It is also found that the utility scores of the top-ranked configuration (A1, A2, A4), which combines optimized staffing levels, a sanitized repository, and a content management system, would have to decrease by 38%, 50%, and 89% for mean time, usability, and integrability, respectively, for it to lose the highest ranking position to the combination of optimized staffing levels and a sanitized repository (A1, A2). An analysis of the lower-weighted criteria showed that no reasonable change in their weights would alter the results.

6.2.3 Cost versus Utility

Figure 22 plots cost versus utility, where cost is the estimated cost per AoA after full implementation of the alternatives in the configuration. This cost is net of the savings from the time saved by implementing the alternatives. The upper-left region of the graph is the desirable region, with high utility and low cost, whereas the upper-right region has high utility with high cost. The configurations in the upper-right region are those that include the optimized staffing levels alternative, a high-value but high-cost alternative. These are also the only configurations that meet the goals for reducing AoA mean duration and duration variability. The configurations in the upper-left region are primarily technology-based alternatives. The combination of optimized staffing levels and the sanitized repository (A1, A2) lies nearest to the desirable region of the cost vs. utility graph, though it is not ranked highest by utility.

Figure 22: Cost vs. Utility for All Alternative Configurations

6.3 Recommendations

Based on the utility function results and the cost-benefit analysis, it is recommended that the CNS division implement both the optimized staffing levels and the sanitized repository alternatives (A1, A2). This would yield a 43.9% reduction in AoA mean duration, a 37.5% reduction in AoA duration variability, a 10.2% increase in AoA output quality, and a total utility of 3.95 on a 1 to 5 scale. The total maximum cost per AoA is calculated as the cost of two solutions architects, at $32,000 per solutions architect per AoA, plus the cost of an additional 12 hours of labor to sanitize AoA materials, which is $1,200 per AoA. The cost savings from the reduced AoA duration are then subtracted. The resulting total cost per AoA is $50,000, and the total implementation cost is $230,000 per year.

A better configuration of alternatives may become possible if costs change. The configuration including optimized staffing levels, the sanitized repository, and the content management system holds a higher utility, but also a higher cost. However, the parent company of Vangent, Inc., General Dynamics, has a relationship, possibly including license agreements, with EMC, the vendor of the Documentum content management system. If that relationship were leveraged to obtain the content management system at a lower cost, this configuration would likely lie nearer the desirable region of the cost vs. utility chart than the (A1, A2) configuration. It is therefore recommended that this relationship be explored and, if possible, used to lower the cost. The configuration of optimized staffing levels, the sanitized repository, and the content management system would yield a 51.7% reduction in AoA mean duration, a 50.0% reduction in AoA duration variability, a 10.2% increase in AoA output quality, and a total utility of 4.25 on a 1 to 5 scale.
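The per-AoA cost arithmetic for the recommended (A1, A2) configuration can be checked with a few lines of Python. The staffing, sanitization, and net cost figures are those stated in the recommendation; the savings from reduced AoA duration are not stated as a dollar amount, so this sketch back-calculates the savings implied by the $50,000 net figure, along with the hourly sanitization rate implied by $1,200 for 12 hours. The constant and function names are illustrative only.

```python
# Figures stated in the recommendation for the (A1, A2) configuration.
SA_COST_PER_AOA = 32_000     # dollars per solutions architect per AoA
NUM_SAS = 2                  # solutions architects costed per AoA
SANITIZE_HOURS = 12          # additional labor hours to sanitize AoA materials
SANITIZE_COST = 1_200        # dollars per AoA for that labor
REPORTED_NET_COST = 50_000   # dollars per AoA, after duration savings


def gross_cost_per_aoa():
    """Staffing cost plus sanitization labor, before subtracting savings."""
    return NUM_SAS * SA_COST_PER_AOA + SANITIZE_COST


def implied_savings_per_aoa():
    """Savings implied by the reported net cost (back-calculated, not stated)."""
    return gross_cost_per_aoa() - REPORTED_NET_COST


def implied_sanitize_rate():
    """Hourly labor rate implied by the sanitization cost."""
    return SANITIZE_COST / SANITIZE_HOURS
```

Under these figures the gross cost is $65,200 per AoA, so the $50,000 net cost implies about $15,200 per AoA in savings from the shorter AoA duration, and the sanitization labor is costed at $100 per hour.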
For this configuration (A1, A2, A4), the total maximum cost per AoA is $78,000, and the total cost of implementation is a one-time cost of $111,000 plus a yearly cost of $230,000.

7.0 Project Budget and Management

7.1 Work Breakdown Structure

A project work breakdown structure is developed to help define and manage the work done in the project. As shown in Figure 23, the top-level work breakdown structure is divided into six phases: Project Definition, Requirements Development, Solution Development, Modeling and Testing, Analysis of Results, and Communications and Management.

Figure 23: Project Work Breakdown Structure

7.2 Earned Value Management

The total estimated project cost is $130,000. The estimate is based mainly on the assumption that each team member bills the project a total burdened rate of $62.40 for each hour spent on the project ($40/hour base rate plus overhead and general and administrative costs). The actual cost of the project is $139,300. An $8,000 management reserve was also budgeted, which absorbs most of the cost overrun. The cost and schedule performance indexes are listed in Table 15; the CPI of 0.96 likewise indicates a slight cost overrun.

Total Project Expected Cost: $130,000
Total Burden per Person (per hour): $62.40
Management Reserve: $8,000
Budget Cost of Work Performed (BCWP): $132,500
Actual Cost of Work Performed (ACWP): $137,500
Budget Cost of Work Scheduled (BCWS): $124,800
Cost Performance Index (CPI): 0.96
Schedule Performance Index (SPI): 0.94

Table 15: Project Costs, CPI, and SPI

The earned value of the project over its entire duration is also considered (see Figure 24). The budgeted cost of work performed and the actual cost of work performed remain roughly consistent with the expected costs throughout the project, with the actual cost slightly exceeding the management reserve at the end of the project, as discussed previously.
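The indexes in Table 15 can be recomputed from the standard earned value definitions, sketched below in Python with the tabulated values. Applying CPI = BCWP / ACWP reproduces the reported 0.96. Under the standard definition SPI = BCWP / BCWS, however, the same values give about 1.06 (work ahead of schedule); the tabulated 0.94 corresponds to the inverse ratio, BCWS / BCWP. The function names are illustrative.

```python
# Values from Table 15 (dollars).
BCWP = 132_500  # budget cost of work performed (earned value)
ACWP = 137_500  # actual cost of work performed
BCWS = 124_800  # budget cost of work scheduled (planned value)


def cost_performance_index(bcwp, acwp):
    """CPI = BCWP / ACWP; values below 1.0 indicate a cost overrun."""
    return bcwp / acwp


def schedule_performance_index(bcwp, bcws):
    """SPI = BCWP / BCWS; values below 1.0 indicate work behind schedule."""
    return bcwp / bcws


cpi = cost_performance_index(BCWP, ACWP)      # about 0.96
spi = schedule_performance_index(BCWP, BCWS)  # about 1.06
cost_variance = BCWP - ACWP                   # -5,000 (over budget)
schedule_variance = BCWP - BCWS               # +7,700 (ahead of plan)
```

The cost variance and CPI agree with the narrative of a slight cost overrun, while the schedule figures depend on which ratio is taken as the SPI.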
[Chart: BCWP, ACWP, and Management Reserve plotted as Cost ($) vs. Time (Week)]
Figure 24: Earned Value Management Chart, Including the Budget Cost of Work Performed, the Actual Cost of Work Performed, and the Expected Cost

References

1. Knowledge Elicitation with Stakeholders and Subject Matter Experts, Vangent, Inc., Arlington, VA, 2011-2012. [Verbal]
2. "Contract Spending Dips First Time in 13 Years - FederalTimes.com." [Online]. Available: http://www.federaltimes.com/article/20110203/ACQUISITION03/102030302/1034/IT04 Accessed Apr. 23, 2012.
3. Authors Redacted, Justification of Product/Solution Selection. Vangent, Inc., Arlington, VA, 2011.
4. Intravation, Inc., Product Information, 2012. [Online]. Available: http://www.intravation.com/products/vpc.asp Accessed Feb. 2, 2012.
5. EMC Corporation, EMC Documentum Architecture: Foundations and Services for Managing Content across the Enterprise. EMC Corporation, Hopkinton, MA, Nov. 2009. [Online]. Available: http://www.emc.com/collateral/software/white-papers/h3411-documentum-architecture-wp.pdf
6. Dr. Ian Howels, Total Cost of Ownership for ECM, Alfresco Software, Inc., Jan. 2009. [Presentation]
Appendix A: The Decision and Analysis Resolution Process Diagram

Figure 25: The Decision and Analysis Resolution Process

Appendix B: Percent Composition of Task Categories/Types for Each AoA Task

AoA Task | Labor Intensive | Decision Making | Experience Recall | Networking

AoA Phase: Define the Problem Domain
Define Technical Functional Requirements | 33.33% | 33.33% | 11.11% | 22.22%
Define Environmental Functional Requirements | 14.29% | 14.29% | 28.57% | 42.86%
Define Technical Non-Functional Requirements | 22.22% | 22.22% | 33.33% | 22.22%
Define Environmental Non-Functional Requirements | 12.50% | 12.50% | 25.00% | 50.00%
Define Political Non-Functional Requirements | 16.67% | 16.67% | 16.67% | 50.00%
Define Financial Non-Functional Requirements | 16.67% | 16.67% | 16.67% | 50.00%
Compile Functional Requirements | 62.50% | 12.50% | 0.00% | 25.00%
Compile Non-Functional Requirements | 62.50% | 12.50% | 0.00% | 25.00%

AoA Phase: Define Evaluation Criteria
Define Technical Criteria from Requirements | 57.14% | 21.43% | 7.14% | 14.29%
Define Business Criteria from Requirements | 35.71% | 14.29% | 14.29% | 35.71%
Define Technical Criteria from Lessons Learned | 21.43% | 21.43% | 35.71% | 21.43%
Define Business Criteria from Lessons Learned | 13.33% | 20.00% | 33.33% | 33.33%
Compile Criteria from Requirements | 76.92% | 7.69% | 0.00% | 15.38%
Compile Criteria from Lessons Learned | 76.92% | 7.69% | 0.00% | 15.38%

AoA Phase: Explore Alternate Solutions
Obtain SME Opinion | 7.69% | 7.69% | 7.69% | 76.92%
Research Literature | 45.45% | 9.09% | 27.27% | 18.18%
Conduct Internet Research | 66.67% | 8.33% | 16.67% | 8.33%
Conduct Surveys | 45.45% | 22.73% | 9.09% | 22.73%
Use Industry Research Organizations | 50.00% | 20.00% | 20.00% | 10.00%

AoA Phase: Evaluate Solutions
Conduct Pro/Con Analysis | 28.57% | 14.29% | 42.86% | 14.29%
Conduct Kepner-Tregoe Analysis | 30.00% | 30.00% | 30.00% | 10.00%
Conduct Cost Analysis | 33.33% | 16.67% | 16.67% | 33.33%
Conduct Quantitative Benefit Analysis | 45.45% | 18.18% | 18.18% | 18.18%
Conduct Cost-Benefit Analysis | 45.45% | 18.18% | 18.18% | 18.18%

Table 16: Task Category Percent Composition of Each Task in the DAR Process

Appendix C: Arena Simulation Model Documentation

Concepts

Entity: An entity is an instance of the object being simulated. Entities pass through modules that change their own state or the state of the entire system.

Resource: A resource may be required to perform a specific process. Depending on the number of available resources and the process type, the process might be delayed.

Modules Used

Separate: The separate module splits an entity into two paths so that parallel events may occur for the same entity.
Batch: The batch module groups several entities to form one entity.
Assign: The assign module sets values on an entity or on the system as a whole. These values can then be used in other calculations.
Decide: The decide module sends an entity along one of several paths depending on a probability or a condition.
Process: The process module causes a time delay to the system state and the entity being processed. A process may require a resource, depending on its type. The types are 1) Seize Delay, where the process seizes a resource and keeps it until another process releases it; 2) Delay Release, where the process delays the entity and the system time using the resources already seized, then releases them; 3) Seize Delay Release, where the process seizes a resource for the duration of the delay and releases it at the end; and 4) Delay, where no additional resource is required.
Hold: The hold module stops entities from proceeding along a path until a specific condition is met.
Station: The station module acts as a bookmark that denotes the start of a path. Entities can be sent to stations by other modules, such as the Route module.
Route: The route module sends an entity to a specific station.
PickStation: The pickstation module sends an entity to a station based on an expression or condition.

Simulation

Figure 26: The Arena Simulation Model of the DAR Process

1. The simulation (see Figure 26) begins at the top left, where it creates proposals, which then pass through a decide module that assigns the proposal size according to a probability distribution (Figure 27).
Figure 27: Simulation Complexity Assignment
2. The proposals proceed to a hold module (Figure 28), which ensures that no more than one proposal is in the system at a time. This is done through a system variable that is modified at proposal entry and exit.
Figure 28: Simulation Hold Module
3. Figure 29 shows the stations for the four main phases, with assign modules that define the next step, connected to a PickStation module that determines where the entity should go. (After starting, the entity goes through the first AoA phase, which leads it to the detailed processes and then back to the following phase.)
Figure 29: Simulation Stations for the Four Phases of the DAR Process
4. The proposal then goes to the detailed processes of the current phase (Figure 30). Each phase is designed to accurately represent the structure of the tasks that would be undertaken, using separate, batch, decide, route, and station modules. Wherever a process is to occur within a phase, a route module sends the entity to the variable assignments stage.
Figure 30: Simulation Structure of DAR Phases
5. The variable assignments stage (Figures 31, 32) is where many of the variables used in calculations are assigned. Task category efficiency indexes, task category weights, inherent task delay, and the variables that define the next location are assigned to each entity here before it moves to the quality calculations stage.
Figure 31: Simulation Variable Assignments, Low Level View
Figure 31: Simulation Variable Assignments, High Level View
6. In the quality calculations stage, some variables that are unrelated to quality but common to all tasks, such as task category variability, are assigned (Figure 32). More importantly, the availability and applicability variables are assigned here, followed by the calculation of the quality metric. At the end of the quality calculations, the entity passes through a pickstation module, which finds a free queue in the time delay stage.
Figure 32: Simulation Quality Calculations
7. In the time delay stage, a time delay is calculated for each category, and the category delays are summed to give the time delay for the entire task (Figures 33, 34). This is done with a Seize Delay, Delay, Delay, Delay Release sequence of process modules. After the time delay is incurred, the entity is routed to the next station based on the variable assigned to it in the variable assignments stage.
Figure 33: Simulation Delay Calculations, Low Level
Figure 34: Simulation Delay Calculations, High Level
8. This returns the entity to the phase structure (seen in Figure 30).
9. Upon completion of a phase, the entity is sent to the beginning of the next phase (seen in Figure 29).
10. This loop repeats for the remaining phases (seen in Figure 30).
11. Upon completion of all phases, the entity's variables are recorded for further analysis, the hold-related variable is modified to allow the next proposal to enter, and the entity is finally disposed of.
Figure 35: Simulation Ending and Completion
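The delay logic in steps 5 through 7 can be illustrated with a small Monte Carlo sketch in Python. It is not a reimplementation of the Arena model: the task-type compositions for the three sample tasks come from Table 16, but the inherent task durations, the rule that each category's share is divided by its efficiency index, and the triangular variability are hypothetical assumptions chosen for illustration. Proposals are simulated one at a time, mirroring the hold module that admits a single proposal into the system.

```python
import random
import statistics

# Task-type composition (fractions) for three sample tasks, from Table 16,
# in the order: labor intensive, decision making, experience recall, networking.
TASK_WEIGHTS = {
    "Compile Functional Requirements": (0.6250, 0.1250, 0.0000, 0.2500),
    "Conduct Internet Research": (0.6667, 0.0833, 0.1667, 0.0833),
    "Obtain SME Opinion": (0.0769, 0.0769, 0.0769, 0.7692),
}

# Hypothetical inherent durations in hours (NOT taken from the report).
INHERENT_HOURS = {
    "Compile Functional Requirements": 8.0,
    "Conduct Internet Research": 6.0,
    "Obtain SME Opinion": 10.0,
}


def task_delay(task, efficiency, rng, spread=0.25):
    """Sum of per-category delays for one task (hypothetical formula).

    Each category's share of the inherent duration is divided by that
    category's efficiency index and multiplied by a triangular noise
    factor with mode 1.0.
    """
    total = 0.0
    for weight, eff in zip(TASK_WEIGHTS[task], efficiency):
        noise = rng.triangular(1.0 - spread, 1.0 + spread, 1.0)
        total += weight * INHERENT_HOURS[task] / eff * noise
    return total


def simulate(efficiency, replications=2000, seed=1):
    """Simulate proposals one at a time and return the mean and
    standard deviation of the total duration across replications."""
    rng = random.Random(seed)
    durations = [
        sum(task_delay(task, efficiency, rng) for task in TASK_WEIGHTS)
        for _ in range(replications)
    ]
    return statistics.mean(durations), statistics.stdev(durations)


# Baseline: every category has efficiency index 1.0.
baseline_mean, baseline_sd = simulate(efficiency=(1.0, 1.0, 1.0, 1.0))
# Hypothetical alternative that doubles networking efficiency.
improved_mean, improved_sd = simulate(efficiency=(1.0, 1.0, 1.0, 2.0))
```

Because both runs reuse the same seed, each replication sees the same noise draws, so the gap between `baseline_mean` and `improved_mean` (roughly 5.1 hours here) reflects only the changed efficiency index: tasks shorten in proportion to how much of their composition falls in the improved category.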