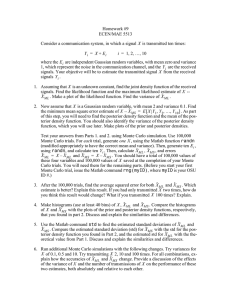

Spring 2009 Statistics 580 Project #2 Problem #1 Notes

Suppose that (1, 2, 1, 2, 1, 1, 2, 1, 3, 1) is an observed random sample from the (discrete) logarithmic series distribution with density

$$f(x; \theta) = \frac{\theta^{x}}{-x \log(1 - \theta)}, \qquad x = 1, 2, 3, \ldots, \quad 0 < \theta < 1.$$

If the prior $\pi(\theta)$ is taken as a Beta(2,2), construct and execute a Metropolis procedure to estimate the posterior mean and its variance.

1. Do not use the same code as for the NB10 data problem, because this application is much simpler. You could, however, use the same logic for the main loop.

2. Write the likelihood $L(\theta)$ and the prior density $\pi(\theta)$, and thus the posterior as $L(\theta) \times \pi(\theta)$ (up to a normalizing constant).

3. You are free to choose your own candidate generating density $q(\theta_n, \theta)$. The simplest such choice for $q(x, y)$, in general, is a random walk. That is, given $x$, generate $y$ using $y = x + z$, where $z \sim U(-k, k)$ is drawn independently and $k$ is a small value. In this case $k = 0.1$ is suggested; a larger value, say $0.2$, might work better if the starting value is chosen well.

4. If you choose the random walk, $q(x, y)$ is symmetric, so the Metropolis algorithm can be used (instead of the Metropolis-Hastings version).

5. If you choose the random walk, you have to make sure that the generated value $\theta^{*}$ is in the parameter space, i.e., $\theta^{*} \in (0, 1)$. Otherwise, reject it and keep $\theta_n$ as the new state.

6. This Markov chain will need a long burn-in period (say, 5000 iterations) and should then be monitored for, say, another 10,000 samples. Estimated run time is about a half hour.

7. In any case, the sequence needs to be modelled as an AR(1) series to estimate the variance of $\bar{\theta}_n$ properly. Use the formula

$$\text{s.e.}(\bar{\theta}_n) = \sqrt{\frac{\hat{\gamma}(0)}{n} \times \frac{1 + \hat{\gamma}(1)/\hat{\gamma}(0)}{1 - \hat{\gamma}(1)/\hat{\gamma}(0)}}$$

where $\hat{\gamma}(h) = \text{cov}(\theta_n, \theta_{n+h})$. You could use the acf() function to compute $\hat{\gamma}(0)$ and $\hat{\gamma}(1)$.
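The steps in these notes can be sketched in a few lines. For the given data, n = 10 and the observations sum to 15, so the likelihood times the Beta(2,2) prior is proportional to θ^16 (1 − θ) / (−log(1 − θ))^10; the sketch works with the log of this kernel. This is a minimal illustration in Python, not the course's own solution. The function names, the seed, the starting value 0.5, and the divisor n in the sample autocovariances are all assumptions made here, and the autocovariances are computed by hand rather than with the acf() function mentioned in the notes (presumably R's).

```python
import math
import random

# Observed sample from the notes.
DATA = [1, 2, 1, 2, 1, 1, 2, 1, 3, 1]


def log_posterior(theta, data=DATA):
    """Unnormalized log posterior: log-series likelihood times Beta(2,2) prior."""
    if not 0.0 < theta < 1.0:
        return float("-inf")  # outside the parameter space
    n, s = len(data), sum(data)
    # log L(theta) = s*log(theta) - n*log(-log(1 - theta)) + constant
    log_lik = s * math.log(theta) - n * math.log(-math.log1p(-theta))
    # Beta(2,2) prior is proportional to theta * (1 - theta)
    log_prior = math.log(theta) + math.log1p(-theta)
    return log_lik + log_prior


def metropolis(n_burn=5000, n_keep=10000, k=0.1, theta0=0.5, seed=580):
    """Random-walk Metropolis; theta0 and seed are illustrative choices."""
    rng = random.Random(seed)
    theta, lp = theta0, log_posterior(theta0)
    chain = []
    for i in range(n_burn + n_keep):
        cand = theta + rng.uniform(-k, k)  # symmetric U(-k, k) step
        lp_cand = log_posterior(cand)      # -inf if cand is outside (0, 1)
        # Accept with probability min(1, posterior ratio); 1 - random() lies
        # in (0, 1], so the log is always defined.
        if math.log(1.0 - rng.random()) < lp_cand - lp:
            theta, lp = cand, lp_cand
        if i >= n_burn:
            chain.append(theta)            # record only after burn-in
    return chain


def ar1_std_error(chain):
    """Standard error of the chain mean under an AR(1) approximation."""
    n = len(chain)
    mean = sum(chain) / n
    dev = [t - mean for t in chain]
    gamma0 = sum(d * d for d in dev) / n                           # lag-0 autocovariance
    gamma1 = sum(dev[i] * dev[i + 1] for i in range(n - 1)) / n    # lag-1 autocovariance
    rho = gamma1 / gamma0
    return math.sqrt(gamma0 / n * (1 + rho) / (1 - rho))


if __name__ == "__main__":
    chain = metropolis()
    print("posterior mean estimate:", sum(chain) / len(chain))
    print("AR(1) standard error:   ", ar1_std_error(chain))
```

Working on the log scale avoids underflow in the posterior ratio, and returning negative infinity outside (0, 1) means an out-of-range candidate is rejected automatically, which matches the rule in step 5 of keeping the current state.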