Bayesian information based learning and majorization.

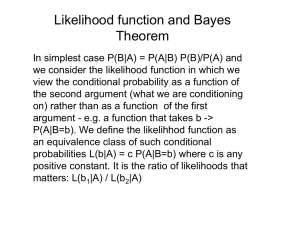

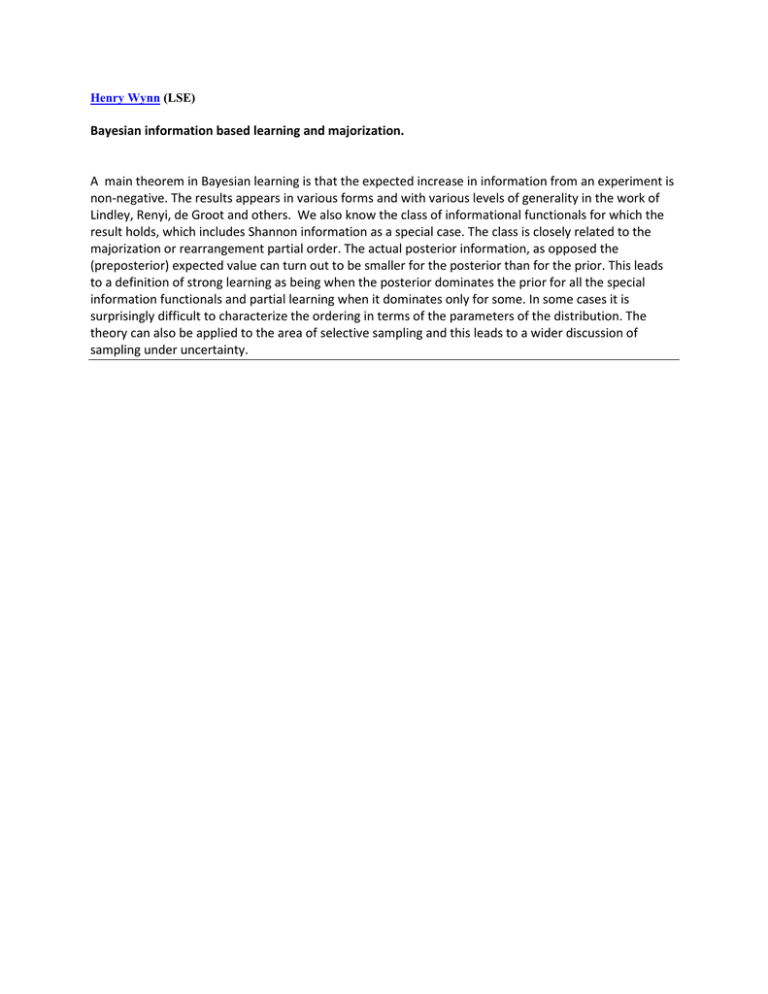

Henry Wynn (LSE)

A main theorem in Bayesian learning is that the expected increase in information from an experiment is non-negative. The result appears in various forms and at various levels of generality in the work of Lindley, Rényi, DeGroot and others. We also know the class of information functionals for which the result holds, which includes Shannon information as a special case. The class is closely related to the majorization, or rearrangement, partial order. The actual posterior information, as opposed to its (preposterior) expected value, can turn out to be smaller than the prior information. This leads to a definition of strong learning, when the posterior dominates the prior for all of these information functionals, and partial learning, when it dominates for only some of them. In some cases it is surprisingly difficult to characterize the ordering in terms of the parameters of the distribution. The theory can also be applied to selective sampling, and this leads to a wider discussion of sampling under uncertainty.
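The distinction between expected and realized information can be illustrated with a small numerical sketch. The two-point prior, the likelihood table, and the helper names (`shannon_information`, `posterior`, `majorizes`) below are illustrative assumptions, not taken from the talk. Shannon information is taken as the negative entropy, and a majorization check on finite discrete distributions is used as a stand-in for dominance under the whole class of functionals, which is an assumption based on the relationship the abstract describes.

```python
import math

def shannon_information(p):
    """Shannon information (negative entropy) of a discrete distribution."""
    return sum(x * math.log(x) for x in p if x > 0)

def posterior(prior, likelihood_column):
    """Bayes update for one observed outcome; returns (posterior, marginal probability)."""
    joint = [pi * li for pi, li in zip(prior, likelihood_column)]
    marginal = sum(joint)
    return [j / marginal for j in joint], marginal

def majorizes(p, q, tol=1e-12):
    """True if p majorizes q: partial sums of p sorted in decreasing
    order are at least the corresponding partial sums of q."""
    cum_p = cum_q = 0.0
    for a, b in zip(sorted(p, reverse=True), sorted(q, reverse=True)):
        cum_p += a
        cum_q += b
        if cum_p < cum_q - tol:
            return False
    return True

# Hypothetical example: two parameter values, two possible outcomes.
prior = [0.9, 0.1]
# likelihood[y][i] = P(outcome y | parameter value i)
likelihood = [[0.9, 0.1],   # outcome y = 0
              [0.1, 0.9]]   # outcome y = 1

prior_info = shannon_information(prior)
posts, marginals = zip(*(posterior(prior, col) for col in likelihood))
post_infos = [shannon_information(p) for p in posts]

# Preposterior expectation: average posterior information over outcomes.
expected_info = sum(m * i for m, i in zip(marginals, post_infos))

print("prior information:          ", prior_info)
print("expected posterior info:    ", expected_info)
for y, (p, i) in enumerate(zip(posts, post_infos)):
    print(f"outcome y={y}: posterior info {i:.4f}, "
          f"posterior majorizes prior: {majorizes(p, prior)}")
```

In this sketch the expected posterior information exceeds the prior information, as the theorem requires, yet the surprising outcome `y = 1` moves the posterior to the uniform distribution, which carries less information than the prior and is majorized by it. Under the assumptions above, only the outcome `y = 0` would count as strong learning.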