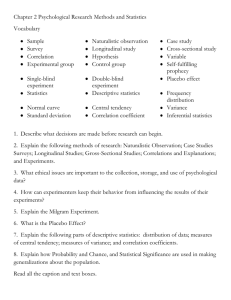


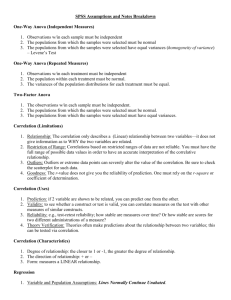

ComSt 311 Redmond

Statistics Reference Sheet

Independent Variable: A variable (quality) that does not depend on other variables. It is sometimes manipulated, but is often simply measured as a state or condition (age, sex, education, etc.).

Dependent Variable: A variable (quality) that is affected and changed by independent variables. In the statement "women are more empathic than men," sex is the independent variable and empathy is the dependent variable. Another way of considering the relationship between two variables is to say that by knowing one variable (a person's sex) we can predict another variable (level of empathy).

Significance: p < .10, p < .05, p < .01, p < .001. This is the probability of the statistic occurring purely by chance: .10 means 10 out of 100 times the result is by chance, .05 means 5 out of 100, .01 means 1 out of 100, and .001 means 1 out of 1,000.

Frequency (number): N = 245 (usually appears to show the number of respondents).

Mean (average): M = 3.45 (on some scale, such as 1-5).

Standard Deviation: SD = 2.18 (relative to the mean and scale). SD is an indication of how representative the mean is of the sample: the larger the SD relative to the mean, the less representative the mean is and, usually, the flatter the bell curve. In a roughly normal distribution, just over 68% of the sample falls within one standard deviation (+/-) of the mean. Thus in the example above, about 68% fall between 1.27 (3.45 - 2.18) and 5.63 (3.45 + 2.18). The upper value is above the top of the 5.0 scale, which also indicates a skewed sample.

Variance: The degree to which a set of numbers is spread out (their distribution). Thus for a sample of five people responding on a five-point scale, if they all answered 3, there is no variance (SD = 0); if each chose a different number, there is complete variance (SD = 1.6). Notice that for both groups the mean would be 3. For the first group, the mean would be a very accurate predictor of a person's score. For the second group, it would accurately predict only 1 of the 5 scores and would not be a very good predictor.
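
The five-person example can be checked with a short Python sketch. The variable names are illustrative, and the sketch assumes the sample standard deviation (dividing by n - 1), which is the version that reproduces the sheet's SD of about 1.6. It also recomputes the one-SD range for M = 3.45, SD = 2.18.

```python
from statistics import mean, stdev

# Hypothetical responses from five people on a 1-5 scale.
same = [3, 3, 3, 3, 3]      # everyone answers 3
spread = [1, 2, 3, 4, 5]    # everyone picks a different number

# Sample standard deviation (divides by n - 1); an assumption, see lead-in.
sd_same = stdev(same)
sd_spread = stdev(spread)

print(f"same:   M = {mean(same)}, SD = {sd_same:.2f}")
print(f"spread: M = {mean(spread)}, SD = {sd_spread:.2f}")

# One-SD range for the sheet's example (M = 3.45, SD = 2.18).
m, sd = 3.45, 2.18
low, high = round(m - sd, 2), round(m + sd, 2)
print(f"about 68% of scores fall between {low} and {high}")
```

Both groups have the same mean, so only the SD shows that the mean describes the first group well and the second group poorly.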
Standard deviation reflects this difference in how well the mean predicts individual scores.

Tests of Differences

T-Test: Tests the difference between the means of two groups. Are the two groups under comparison significantly different on the measured quality?

Format: t(degrees of freedom, here the number of participants - 1) = value, p < value

Sample: t(153) = -1.83, p < .05

This means that in a sample of 154 respondents, a t value of -1.83 was obtained in calculating the difference between two means, and that a value this extreme would occur by chance less than 5% of the time.

Analysis of Variance (ANOVA): Similar to the t-test, but instead of just two groups it tests the difference between three or more groups on one independent variable (factor). It does so by examining the variance of each group around a grand mean (between group) and the variance within each group (within group).

Format: F(df of categories, df of participants) = value, p < value. Sometimes eta squared (η²) is provided to further indicate the strength of the effect; it is interpreted like r², as the percent of variation accounted for.

Sample: F(2, 154) = 3.47, p < .05, η² = .38 (38% of the variance accounted for)

Multiple Factor Analysis of Variance (MANOVA): The same as ANOVA except that it can handle more than one independent variable (factor) and determine the interaction effects among those factors. (Elsewhere, a design with several factors is usually called factorial ANOVA, and MANOVA usually refers to multivariate analysis of variance with several dependent variables.) Results appear as F tests among the various configurations.

| Interaction effects | F test |
| --- | --- |
| Sex x Age x Education on Affectionate Communication | F(3, 65) = … |
| Sex x Age on Affectionate Communication | F(2, 65) = |
| Sex x Education on Affectionate Communication | F(2, 65) = |
| Age x Education on Affectionate Communication | F(2, 65) = |

| Main effects (the effect of each independent variable by itself, controlling for the effects of the other independent variables) | F test |
| --- | --- |
| Sex on Affectionate Communication | F(1, 65) = |
| Age on Affectionate Communication | F(5, 65) = |
| Education on Affectionate Communication | F(7, 65) = |

Chi Square (χ²): Also compares groups, but is used when the data are frequencies of responses across nominal/categorical groupings (males/females, freshmen/sophomores/juniors/seniors, ComSt majors/nonmajors, etc.).
Chi square simply involves comparing how two groups rated or ranked given options to see if they are different. The underlying assumption is that the distributions of responses will be the same. Chi square can also be used to compare against "expected" distributions of responses, such as responses equally distributed across the choices.

Example (Reading 3): Percentage of each group indicating that a given quality is part of "What is a date?"

| Quality | % of College Students | % of Single Adults (older) |
| --- | --- | --- |
| A couple/dyad | 72.4% | 60.4% |
| Heterosexual | 22% | 29.2% |
| Social | 46.8% | 29.3% |

Chi square is used to answer the question of whether the choices of these two groups are significantly different. The actual study has more qualities. Finding: χ²(25, n = 1,149) = 71.45, p < .001. The 25 is the number of qualities and the 1,149 is the total number of responses.

Tests of Relationships

Correlation (Pearson's Product-Moment Correlation): Tests whether two variables vary together, either positively or negatively. The coefficient r is a number between +1.0 and -1.0. It assesses the amount of variation that is common to both variables: in essence, how consistently a response on one quality matches up with a response on another quality. The square of the correlation coefficient (r², called the coefficient of determination) indicates how much variance is accounted for between the two variables.

r = .40 produces r² = .16, meaning 16% of the variance is accounted for (84% is due to other factors).

r = .70 produces r² = .49, meaning 49% of the variance is accounted for (51% is due to other factors).

r = -.20 produces r² = .04, meaning only 4% is accounted for.

A correlation can be statistically significant but account for very little variance between the two variables.

Just as SD tells how well a mean reflects the general scores, a correlation reflects how well a sloped line represents the relationship between pairs of numbers plotted in space.
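
As a rough illustration of how r and r² relate, the sketch below computes Pearson's r from paired scores and squares it. The function names and the paired data are hypothetical, made up for illustration rather than taken from any reading.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson product-moment correlation for two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    # Shared variation (co-deviation) of the paired scores.
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    # Variation of each variable around its own mean.
    ssx = sum((x - mx) ** 2 for x in xs)
    ssy = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(ssx * ssy)

def variance_accounted_for(r):
    """Coefficient of determination: the square of r."""
    return r ** 2

# The r values used as examples on the sheet.
for r in (0.40, 0.70, -0.20):
    print(f"r = {r:+.2f} -> r squared = {variance_accounted_for(r):.2f}")

# Hypothetical paired scores for eight respondents.
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2, 1, 4, 3, 6, 5, 8, 7]
r_xy = pearson_r(x, y)
print(f"r = {r_xy:.2f}, r squared = {variance_accounted_for(r_xy):.2f}")
```

Squaring removes the sign, so a negative correlation accounts for just as much variance as a positive one of the same size.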
In the example below, the distance of each point from the line reflects the amount of variance that is not accounted for. For r = .95, the total distance of all the points from the line is small, while for r = .34 there is a lot more distance (variation) from the line. In essence, the ability to predict the value of one variable (Y) from knowing the value of another variable (X) is not very good in the second correlation. A smaller r² means that a lot of other factors are affecting variation in the Y scores. BUT REMEMBER, CORRELATION IS NOT CAUSE AND EFFECT.

[Figure: two scatterplots with fitted lines, one for r = .95 and one for r = .34]

Multiple Correlation: A correlation calculated among three or more variables. It is written with a capital R, which ranges from 0 to 1.0.

Partial Correlation: A correlation between two variables (X & Y) in which the effect of one or more other variables (Z) is removed from the relationship between the two variables (X & Y).

Factor Analysis: A method for determining which sets of variables are most closely related to one another, and which ones are not. Typically a set of items, such as a questionnaire, is analyzed to see which items inter-correlate (multiple correlation) the best. Those items that relate well to one another constitute a "factor." Factor lists include the items and their correlation to the factor (the other items in the factor). Researchers label the factors, and you should carefully examine the items and the labels to ensure you understand what each label represents.

Regression Analysis

Regression analysis is a statistical way of demonstrating prediction based only on a linear model, creating a slope much like correlations. It attempts to answer the question "What is the best predictor of…?": which set of known independent variables best predicts a dependent variable, and with what weighting?
It is similar to ANOVA (F-test) in that it attempts to account for the most variance possible by creating an equation based upon possible combinations and weightings of independent variables. An F-test is used to determine whether the regression analysis is statistically significant.

Regression equation format: value of Y = a constant value + slope times the value of X + error

Y = a + b*X + e

Here Y is the dependent variable; a is a constant (called the intercept, the point where the line crosses the Y axis); b is the slope/beta weight, the value by which the known value is multiplied (it shows relative contributions when more than one independent variable is included); X is the value of the independent variable; and e is error.

Interpreting Regression Analysis: Usually the formula is not reported, only the beta weights, R² change values, and F-test values. Beta weights (β) are listed for each variable to indicate its strength of contribution to the overall prediction of the dependent variable. Each independent variable is evaluated for whether it makes a significant improvement in the prediction (usually with an F test); if it fails to reach significance, it is not included in the final regression equation. A coefficient of determination (R²) is calculated by comparing the predicted values of the dependent variable, based on each variable's contribution, with the actual/observed results reported by participants. This value is between 0 and 1.0.

Example (Reading 8): Coping strategies predict type of commitment in dating relationships. High moral commitment was predicted by reframing (β = .15, R² change = .02), modeling (β = .14, R² change = .01), and punishing (β = -.16, R² change = .02). F results and significance are reported for each as well. Notice that the betas and R² values are fairly small, making this only marginally meaningful.
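
To make the equation Y = a + b*X + e concrete, here is a minimal sketch that fits one independent variable by ordinary least squares. Least squares is a common way to fit such a line, but the sheet does not name a fitting method, so that choice is an assumption. The function name and the data are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares fit of Y = a + b*X + e; returns (a, b, r_squared)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    # Slope b: shared variation of X and Y divided by the variation of X.
    b = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
         / sum((x - mx) ** 2 for x in xs))
    # Intercept a: the predicted Y when X is 0.
    a = my - b * mx
    predicted = [a + b * x for x in xs]
    # Error e: observed minus predicted; R squared compares it to total variation.
    ss_error = sum((y - p) ** 2 for y, p in zip(ys, predicted))
    ss_total = sum((y - my) ** 2 for y in ys)
    r_squared = 1 - ss_error / ss_total
    return a, b, r_squared

# Hypothetical data: X is the independent variable, Y the dependent variable.
x_values = [1, 2, 3, 4, 5, 6]
y_values = [2.0, 2.9, 4.2, 4.8, 6.1, 6.9]

a, b, r_squared = fit_line(x_values, y_values)
print(f"Y = {a:.2f} + {b:.2f}*X, R squared = {r_squared:.2f}")
```

With several independent variables the same idea applies, but each variable gets its own weight and the fit is usually done with a statistics package rather than by hand.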