
Bootstrap Estimation

Suppose a simple random sample (sampling with replacement)
$X_1, X_2, \ldots, X_n$
is available from some population with distribution function (cdf) $F(x)$.

Objective: Make inferences about some feature of the population, such as the
- median
- variance
- correlation

A statistic $t_n$ is computed from the observed data:
- Sample mean: $t_n = \frac{1}{n}\sum_{j=1}^{n} X_j$
- Standard deviation: $t_n = \sqrt{\frac{1}{n-1}\sum_{j=1}^{n}(X_j - \bar{X})^2}$
- Correlation: for bivariate observations $X_j = \begin{bmatrix} X_{1j} \\ X_{2j} \end{bmatrix}$, $j = 1, 2, \ldots, n$,

$$t_n = \frac{\sum_{j=1}^{n}(X_{1j}-\bar{X}_{1\cdot})(X_{2j}-\bar{X}_{2\cdot})}{\sqrt{\sum_{j=1}^{n}(X_{1j}-\bar{X}_{1\cdot})^2}\,\sqrt{\sum_{j=1}^{n}(X_{2j}-\bar{X}_{2\cdot})^2}}$$

$t_n$ estimates some feature of the population.

What can you say about the distribution of $t_n$ with respect to all possible samples of size $n$ from the population?
- Expectation of $t_n$
- Standard deviation
- Distribution function

Simulation (the population c.d.f. is known):

For a univariate normal distribution with mean $\mu$ and variance $\sigma^2$, the cdf is

$$F(x) = \Pr\{X \le x\} = \int_{-\infty}^{x} \frac{1}{\sqrt{2\pi}\,\sigma}\, e^{-\frac{1}{2}\left(\frac{w-\mu}{\sigma}\right)^2}\, dw$$

Simulate $B$ samples of size $n$ and compute the value of $t_n$ for each sample:

$$t_{n,1},\; t_{n,2},\; \ldots,\; t_{n,B}$$

How can a confidence interval be constructed?

- Approximate $E_F(t_n)$ with the simulated mean
$$\bar{t} = \frac{1}{B}\sum_{k=1}^{B} t_{n,k}$$
- Approximate $\text{Var}_F(t_n)$ with
$$\frac{1}{B-1}\sum_{k=1}^{B}(t_{n,k}-\bar{t})^2$$
- Approximate the standard deviation of $t_n$ with
$$\sqrt{\frac{1}{B-1}\sum_{k=1}^{B}(t_{n,k}-\bar{t})^2}$$
- Approximate the c.d.f. with
$$\hat{F}_n(t) = \frac{\text{number of samples with } t_{n,k} \le t}{B}$$
- Order the $B$ values of $t_n$ from smallest to largest,
$$t_{n(1)} \le t_{n(2)} \le \cdots \le t_{n(B)},$$
and approximate percentiles of the distribution of $t_n$.
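As a sketch of the simulation approach when $F$ is known, the Python code below (an illustration, not part of the original S-plus material) draws $B$ normal samples, uses the sample median as an example choice of $t_n$, and computes the approximations listed above. The population values ($\mu = 10$, $\sigma = 2$), $n = 20$, $B = 2000$ and the seed are arbitrary assumptions.

```python
import random
import statistics

def simulate_statistic(stat, n, B, mu, sigma, seed=1):
    """Draw B samples of size n from N(mu, sigma^2); return t_{n,1}, ..., t_{n,B}."""
    rng = random.Random(seed)
    return [stat([rng.gauss(mu, sigma) for _ in range(n)]) for _ in range(B)]

def summarize(reps):
    """Simulated mean, variance (divisor B - 1) and standard deviation of t_n."""
    B = len(reps)
    t_bar = sum(reps) / B
    var = sum((t - t_bar) ** 2 for t in reps) / (B - 1)
    return t_bar, var, var ** 0.5

def simulated_cdf(reps, t):
    """Proportion of simulated values t_{n,k} <= t."""
    return sum(1 for x in reps if x <= t) / len(reps)

B = 2000
reps = simulate_statistic(statistics.median, n=20, B=B, mu=10.0, sigma=2.0)
t_bar, var, sd = summarize(reps)
ordered = sorted(reps)                                   # t_n(1) <= ... <= t_n(B)
p05, p95 = ordered[round(0.05 * B) - 1], ordered[round(0.95 * B) - 1]
print(round(t_bar, 3), round(sd, 3), round(simulated_cdf(reps, 10.0), 3))
print(round(p05, 3), round(p95, 3))
```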

What if $F(x)$, the population c.d.f., is unknown?
- You cannot use a random number generator to simulate samples of size $n$ and values of $t_n$ from the actual population.
- Use a bootstrap (or resampling) method.

Basic idea:

(1) Approximate the population cdf $F(x)$ with the empirical cdf $\hat{F}_n(x)$ obtained from the observed sample $X_1, X_2, \ldots, X_n$.

Assign probability $\frac{1}{n}$ to each observation in the sample. Then

$$\hat{F}_n(x) = \frac{b}{n}$$

where $b$ is the number of observations in the sample with $X_i \le x$, for $i = 1, 2, \ldots, n$.
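The empirical cdf is a one-line computation. A minimal Python sketch (the sample values are hypothetical):

```python
def ecdf(sample, x):
    """Empirical cdf: each observation gets probability 1/n, so
    F_hat_n(x) = b / n with b = number of observations X_i <= x."""
    b = sum(1 for xi in sample if xi <= x)
    return b / len(sample)

sample = [4.1, 2.7, 3.3, 2.7, 5.0]                       # hypothetical observations
print([ecdf(sample, x) for x in (2.0, 2.7, 3.3, 5.0)])   # [0.0, 0.4, 0.6, 1.0]
```

Note that $\hat{F}_n$ is a step function that jumps by $1/n$ at each observation (by $2/n$ at the tied value 2.7 here).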

(2) Approximate the act of simulating $B$ samples of size $n$ from a population with c.d.f. $F(x)$ by simulating $B$ samples of size $n$ from a population with c.d.f. $\hat{F}_n(x)$:
- The "approximating" population is the original sample.
- Sample $n$ observations from the original sample using simple random sampling with replacement. This will be called a bootstrap sample.
- Repeat this $B$ times to obtain $B$ bootstrap samples of size $n$.
- Evaluate the summary statistic for each bootstrap sample:
  - Sample 1: $t_{n,1}$
  - Sample 2: $t_{n,2}$
  - ...
  - Sample $B$: $t_{n,B}$

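This resampling procedure is called a nonparametric bootstrap.

Evaluate bootstrap estimates of features of the sampling distribution of $t_n$ when the c.d.f. is $\hat{F}_n(x)$:

$$\widehat{E}_{\hat{F}_n}(t_n) = \frac{1}{B}\sum_{b=1}^{B} t_{n,b}$$

$$\widehat{\text{Var}}_{\hat{F}_n}(t_n) = \frac{1}{B-1}\sum_{b=1}^{B}\left[t_{n,b} - \widehat{E}_{\hat{F}_n}(t_n)\right]^2$$

If used properly, it provides consistent large sample results. As $n \to \infty$ and $B \to \infty$,

$$\widehat{E}_{\hat{F}_n}(t_n) = \frac{1}{B}\sum_{b=1}^{B} t_{n,b} \;\to\; E_F(t_n)$$

$$\widehat{\text{Var}}_{\hat{F}_n}(t_n) = \frac{1}{B-1}\sum_{b=1}^{B}\left[t_{n,b} - \frac{1}{B}\sum_{j=1}^{B} t_{n,j}\right]^2 \;\to\; \text{Var}_F(t_n)$$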
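The nonparametric bootstrap estimates above can be sketched in a few lines of Python. This is an illustration, not the S-plus `bootstrap()` function used later; the data and the choice of the sample mean as $t_n$ are hypothetical.

```python
import random

def bootstrap(data, stat, B, seed=1):
    """Return t_{n,1}, ..., t_{n,B}: the statistic evaluated on B samples of size n
    drawn from the original sample with replacement (i.e. sampled from F_hat_n)."""
    rng = random.Random(seed)
    n = len(data)
    return [stat(rng.choices(data, k=n)) for _ in range(B)]

def boot_estimates(reps):
    """Bootstrap estimates E_hat(t_n) (average) and Var_hat(t_n) (divisor B - 1)."""
    B = len(reps)
    e_hat = sum(reps) / B
    v_hat = sum((t - e_hat) ** 2 for t in reps) / (B - 1)
    return e_hat, v_hat

data = [2.0, 4.0, 4.0, 5.0, 7.0, 9.0]      # hypothetical sample, n = 6
mean = lambda xs: sum(xs) / len(xs)
reps = bootstrap(data, mean, B=2000)
e_hat, v_hat = boot_estimates(reps)
print(round(e_hat, 3), round(v_hat, 3))
```

For the sample mean the limiting value (as $B \to \infty$) is known exactly: $\text{Var}_{\hat{F}_n}(\bar{X}) = \frac{1}{n}\cdot\frac{1}{n}\sum_{j}(X_j - \bar{X})^2 \approx 0.857$ for these data, so `v_hat` should be close to it.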

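The bootstrap is a large sample method.

Consistency:
- Large number of bootstrap samples: as $B \to \infty$,
$$\widehat{E}_{\hat{F}_n}(t_n) = \frac{1}{B}\sum_{b=1}^{B} t_{n,b} \;\to\; E_{\hat{F}_n}(t_n)$$
$$\widehat{\text{Var}}_{\hat{F}_n}(t_n) = \frac{1}{B-1}\sum_{b=1}^{B}\left[t_{n,b} - \widehat{E}_{\hat{F}_n}(t_n)\right]^2 \;\to\; \text{Var}_{\hat{F}_n}(t_n)$$
- The original sample size must become large: as $n \to \infty$, $\hat{F}_n(x) \to F(x)$ for any $x$. Then
$$E_{\hat{F}_n}(t_n) \to E_F(t_n), \qquad \text{Var}_{\hat{F}_n}(t_n) \to \text{Var}_F(t_n)$$

For small values of $n$, $\hat{F}_n(x)$ could deviate substantially from $F(x)$.

What is a good value for $B$?
- standard deviation: $B \ge 200$
- confidence interval: $B \ge 1000$
- more demanding applications: $B \ge 5000$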

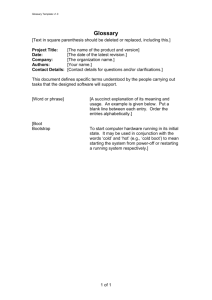

Example 12.1: Average values for

GPA and LSAT scores for students

admitted to n=15 Law Schools in

1973.

Law School Data

2.8

3.0

GPA

3.2

3.4

School LSAT GPA

1

576 3.39

2

635 3.30

3

558 2.81

4

579 3.03

5

666 3.44

6

580 3.07

7

555 3.00

8

661 3.43

9

651 3.36

10

605 3.13

11

653 3.12

12

575 2.74

13

545 2.76

14

572 2.88

15

594 2.96

560

580

600

620

640

660

LSAT score

1095

1096

These schools were randomly selected from a larger population of law schools. We want to make inferences about the correlation between GPA and LSAT scores for the population of law schools. The sample correlation (n = 15) is

$$t_n = r = 0.7764$$

Bootstrap samples

Take samples of n = 15 schools using simple random sampling with replacement.

Sample 1:

| School | LSAT | GPA |
|---|---|---|
| 7 | 555 | 3.00 |
| 10 | 605 | 3.13 |
| 14 | 572 | 2.88 |
| 8 | 661 | 3.43 |
| 5 | 666 | 3.44 |
| 4 | 578 | 3.03 |
| 1 | 576 | 3.39 |
| 2 | 635 | 3.30 |
| 13 | 545 | 2.76 |
| 3 | 558 | 2.81 |
| 15 | 594 | 2.96 |
| 4 | 578 | 3.03 |
| 9 | 651 | 3.36 |
| 3 | 558 | 2.81 |
| 3 | 558 | 2.81 |

$$t_{n,1} = r_{15,1} = 0.8586$$

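Sample 2:

| School | LSAT | GPA |
|---|---|---|
| 12 | 575 | 2.74 |
| 11 | 653 | 3.12 |
| 12 | 575 | 2.74 |
| 8 | 661 | 3.43 |
| 9 | 651 | 3.36 |
| 5 | 666 | 3.44 |
| 9 | 651 | 3.36 |
| 1 | 576 | 3.39 |
| 5 | 666 | 3.44 |
| 5 | 666 | 3.44 |
| 1 | 576 | 3.39 |
| 2 | 635 | 3.30 |
| 10 | 605 | 3.13 |
| 14 | 572 | 2.88 |

$$t_{n,2} = r_{15,2} = 0.6673$$

Repeat this $B = 5000$ times to obtain

$$t_{n,1},\; t_{n,2},\; \ldots,\; t_{n,5000}$$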

Estimated correlation from the original sample of n = 15 law schools: $r = 0.7764$

Figure: 5000 Bootstrap Correlations (histogram of the bootstrap sample correlations).

The bootstrap standard error (from $B = 5000$ bootstrap samples) is

$$S_r = \sqrt{\frac{1}{B-1}\sum_{b=1}^{B}\left(t_{n,b} - \frac{1}{B}\sum_{j=1}^{B} t_{n,j}\right)^2} = \sqrt{\frac{1}{4999}\sum_{b=1}^{5000}\left(r_{15,b} - \frac{1}{5000}\sum_{j=1}^{5000} r_{15,j}\right)^2} = 0.1341$$
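A Python sketch of the same computation follows (not the author's S-plus code). It resamples the 15 (LSAT, GPA) pairs as units; $B = 2000$ and the seed are arbitrary choices, so the standard error will differ somewhat from 0.1341.

```python
import math
import random

# Example 12.1 law school data (n = 15)
LSAT = [576, 635, 558, 578, 666, 580, 555, 661, 651, 605, 653, 575, 545, 572, 594]
GPA = [3.39, 3.30, 2.81, 3.03, 3.44, 3.07, 3.00, 3.43, 3.36, 3.13, 3.12, 2.74, 2.76, 2.88, 2.96]

def corr(x, y):
    """Sample (Pearson) correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def boot_corr(x, y, B, seed=1):
    """Bootstrap replicates r_{15,b}: resample schools (pairs) with replacement."""
    rng = random.Random(seed)
    n = len(x)
    reps = []
    for _ in range(B):
        idx = [rng.randrange(n) for _ in range(n)]
        reps.append(corr([x[i] for i in idx], [y[i] for i in idx]))
    return reps

r = corr(LSAT, GPA)
reps = boot_corr(LSAT, GPA, B=2000)
B = len(reps)
mean_r = sum(reps) / B
S_r = math.sqrt(sum((t - mean_r) ** 2 for t in reps) / (B - 1))
print(round(r, 4), round(S_r, 4))
```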

| Number of bootstrap samples | Bootstrap estimate of standard error |
|---|---|
| B = 25 | 0.1108 |
| B = 50 | 0.0985 |
| B = 100 | 0.1334 |
| B = 250 | 0.1306 |
| B = 500 | 0.1328 |
| B = 1000 | 0.1299 |
| B = 2500 | 0.1366 |
| B = 5000 | 0.1341 |

"Very seldom are more than B = 200 replications needed for estimating a standard error."
(Efron & Tibshirani (1993), page 52)

Figure: Law School Data (GPA versus LSAT score).

Population of 82 law schools (1973). Schools with Type = 1 are the 15 schools in the sample of Example 12.1.

| School | LSAT | GPA | Type |
|---|---|---|---|
| 1 | 622 | 3.23 | 2 |
| 2 | 542 | 2.83 | 2 |
| 3 | 579 | 3.24 | 2 |
| 4 | 653 | 3.12 | 1 |
| 5 | 606 | 3.09 | 2 |
| 6 | 576 | 3.39 | 1 |
| 7 | 620 | 3.10 | 2 |
| 8 | 615 | 3.40 | 2 |
| 9 | 553 | 2.97 | 2 |
| 10 | 607 | 2.91 | 2 |
| 11 | 558 | 3.11 | 2 |
| 12 | 596 | 3.24 | 2 |
| 13 | 635 | 3.30 | 1 |
| 14 | 581 | 3.22 | 2 |
| 15 | 661 | 3.43 | 1 |
| 16 | 547 | 2.91 | 2 |
| 17 | 599 | 3.23 | 2 |
| 18 | 646 | 3.47 | 2 |
| 19 | 622 | 3.15 | 2 |
| 20 | 611 | 3.33 | 2 |
| 21 | 546 | 2.99 | 2 |
| 22 | 614 | 3.19 | 2 |
| 23 | 628 | 3.03 | 2 |
| 24 | 575 | 3.01 | 2 |
| 25 | 662 | 3.39 | 2 |
| 26 | 627 | 3.41 | 2 |
| 27 | 608 | 3.04 | 2 |
| 28 | 632 | 3.29 | 2 |
| 29 | 587 | 3.16 | 2 |
| 30 | 581 | 3.17 | 2 |
| 31 | 605 | 3.13 | 1 |
| 32 | 704 | 3.36 | 2 |
| 33 | 477 | 2.57 | 2 |
| 34 | 591 | 3.02 | 2 |
| 35 | 578 | 3.03 | 1 |
| 36 | 572 | 2.88 | 1 |
| 37 | 615 | 3.37 | 2 |
| 38 | 606 | 3.20 | 2 |
| 39 | 603 | 3.23 | 2 |
| 40 | 535 | 2.98 | 2 |
| 41 | 595 | 3.11 | 2 |
| 42 | 575 | 2.92 | 2 |
| 43 | 573 | 2.85 | 2 |
| 44 | 644 | 3.38 | 2 |
| 45 | 545 | 2.76 | 1 |
| 46 | 645 | 3.27 | 2 |
| 47 | 651 | 3.36 | 1 |
| 48 | 562 | 3.19 | 2 |
| 49 | 609 | 3.17 | 2 |
| 50 | 555 | 3.00 | 1 |
| 51 | 586 | 3.11 | 2 |
| 52 | 580 | 3.07 | 1 |
| 53 | 594 | 2.96 | 1 |
| 54 | 594 | 3.05 | 2 |
| 55 | 560 | 2.93 | 2 |
| 56 | 641 | 3.28 | 2 |
| 57 | 512 | 3.01 | 2 |
| 58 | 631 | 3.21 | 2 |
| 59 | 597 | 3.32 | 2 |
| 60 | 621 | 3.24 | 2 |
| 61 | 617 | 3.03 | 2 |
| 62 | 637 | 3.33 | 2 |
| 63 | 572 | 3.08 | 2 |
| 64 | 610 | 3.13 | 2 |
| 65 | 562 | 3.01 | 2 |
| 66 | 635 | 3.30 | 2 |
| 67 | 614 | 3.15 | 2 |
| 68 | 546 | 2.82 | 2 |
| 69 | 598 | 3.20 | 2 |
| 70 | 666 | 3.44 | 1 |
| 71 | 570 | 3.01 | 2 |
| 72 | 570 | 2.92 | 2 |
| 73 | 605 | 3.45 | 2 |
| 74 | 565 | 3.15 | 2 |
| 75 | 686 | 3.50 | 2 |
| 76 | 608 | 3.16 | 2 |
| 77 | 595 | 3.19 | 2 |
| 78 | 590 | 3.15 | 2 |
| 79 | 558 | 2.81 | 1 |
| 80 | 611 | 3.16 | 2 |
| 81 | 564 | 3.02 | 2 |
| 82 | 575 | 2.74 | 1 |

The correlation coefficient for the population of 82 law schools in 1973 is

$$\rho = 0.7600$$

The exact distribution of estimated correlation coefficients for random samples of size n = 15 from this population involves

more than $3.6 \times 10^{10}$ possible samples of size n = 15.

Results from 100,000 samples of size n = 15:

| Percentile | Sample correlation |
|---|---|
| .95 | 0.9128 |
| .75 | 0.8404 |
| .50 | 0.7699 |
| .25 | 0.6777 |
| .05 | 0.5010 |

min = -0.3647, max = 0.9856, mean = 0.7464, std. error = 0.1302

Bias: From a sample of size $n$, $t_n$ is used to estimate a population parameter $\theta$:

$$\text{Bias}_F(t_n) = E_F(t_n) - \theta$$

Here $E_F(t_n)$ is the average across all possible samples of size $n$ from the population, and $\theta$ is the true parameter value.

Law School example:

$$\text{Bias}_F(r_{15}) = E_F(r_{15}) - 0.7600 = .7464 - .7600 = -0.0136$$

Bootstrap estimate of bias:

$$\widehat{\text{Bias}}_{\hat{F}_n}(t_n) = \widehat{E}_{\hat{F}_n}(t_n) - t_n$$

Here $t_n$ is the "true value" if you take the original sample as the population, and $\widehat{E}_{\hat{F}_n}(t_n)$ is approximated with the average of the results from $B$ bootstrap samples, $\frac{1}{B}\sum_{b=1}^{B} t_{n,b}$.

Improved bootstrap bias estimation: Efron & Tibshirani (1993), Section 10.4.

Law School example ($B = 5000$ bootstrap samples):

$$\widehat{\text{Bias}}_{\hat{F}_n}(r_{15}) = \frac{1}{5000}\sum_{b=1}^{5000} r_{15,b} - r_{15} = .7692 - .7764 = -.0072$$

Bias corrected estimates:

$$\tilde{t}_n = t_n - \widehat{\text{Bias}}(t_n) = 2t_n - \frac{1}{B}\sum_{b=1}^{B} t_{n,b}$$

Law School example:

$$\tilde{r}_{15} = r_{15} - \widehat{\text{Bias}}(r_{15}) = .7764 - (-.0072) = 0.7836$$

Here the correction moved the estimate farther away from $\rho = .7600$.

Bias correction can be dangerous in practice: $\tilde{t}_n$ may have a substantially larger variance than $t_n$, so

$$\text{MSE}_F(\tilde{t}_n) = [\text{Bias}_F(\tilde{t}_n)]^2 + \text{Var}_F(\tilde{t}_n)$$

is often larger than

$$\text{MSE}_F(t_n) = [\text{Bias}_F(t_n)]^2 + \text{Var}_F(t_n)$$
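The bootstrap bias estimate and the bias-corrected estimate for the law school correlation can be sketched in Python as follows. The resampling scheme (pairs with replacement) follows the text; $B = 2000$ and the seed are arbitrary, so the numbers will not match the $B = 5000$ results exactly.

```python
import math
import random

LSAT = [576, 635, 558, 578, 666, 580, 555, 661, 651, 605, 653, 575, 545, 572, 594]
GPA = [3.39, 3.30, 2.81, 3.03, 3.44, 3.07, 3.00, 3.43, 3.36, 3.13, 3.12, 2.74, 2.76, 2.88, 2.96]

def corr(x, y):
    """Sample (Pearson) correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

r15 = corr(LSAT, GPA)
rng = random.Random(2)
n, B = len(LSAT), 2000
reps = []
for _ in range(B):
    idx = [rng.randrange(n) for _ in range(n)]       # resample schools with replacement
    reps.append(corr([LSAT[i] for i in idx], [GPA[i] for i in idx]))

boot_mean = sum(reps) / B                            # approximates E_hat(r15)
bias_hat = boot_mean - r15                           # Bias_hat = E_hat(r15) - r15
r_tilde = r15 - bias_hat                             # bias corrected: 2*r15 - boot_mean
print(round(bias_hat, 4), round(r_tilde, 4))
```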

Empirical Percentile Bootstrap Confidence Intervals

Construct an approximate $(1-\alpha)100\%$ confidence interval for $\theta$, where $t_n$ is an estimator for $\theta$.
- Compute $B$ bootstrap samples to obtain $t_{n,1}, \ldots, t_{n,B}$.
- Order the bootstrapped values from smallest to largest: $t_{n,(1)} \le t_{n,(2)} \le \cdots \le t_{n,(B)}$.
- Compute the upper and lower $\frac{\alpha}{2}100$th percentiles:
$$k_L = \left[(B+1)\frac{\alpha}{2}\right] = \text{largest integer} \le (B+1)\frac{\alpha}{2}, \qquad k_U = B + 1 - k_L$$
- Then an approximate $(1-\alpha)100\%$ confidence interval for $\theta$ is
$$\left[t_{n,(k_L)},\; t_{n,(k_U)}\right]$$

Law School example ($B = 5000$ bootstrap samples): construct an approximate 90% confidence interval for $\rho$ = population correlation, so $\alpha = .10$:

$$k_L = [(5001)(.05)] = [250.05] = 250, \qquad k_U = 5001 - 250 = 4751$$

An approximate large sample 90% confidence interval is

$$\left[r_{15,(250)},\; r_{15,(4751)}\right] = [0.5207,\; 0.9487]$$
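The percentile rule above translates directly into code. In this Python sketch (an illustration; the seed is arbitrary, so the limits will differ slightly from [0.5207, 0.9487]), `percentile_ranks` computes $k_L$ and $k_U$ and `percentile_interval` picks the corresponding order statistics.

```python
import math
import random

LSAT = [576, 635, 558, 578, 666, 580, 555, 661, 651, 605, 653, 575, 545, 572, 594]
GPA = [3.39, 3.30, 2.81, 3.03, 3.44, 3.07, 3.00, 3.43, 3.36, 3.13, 3.12, 2.74, 2.76, 2.88, 2.96]

def corr(x, y):
    """Sample (Pearson) correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def percentile_ranks(B, alpha):
    """k_L = largest integer <= (B + 1) * alpha / 2, and k_U = B + 1 - k_L."""
    kL = math.floor((B + 1) * alpha / 2)
    if kL < 1:
        raise ValueError("B is too small for this alpha")
    return kL, B + 1 - kL

def percentile_interval(reps, alpha):
    ordered = sorted(reps)
    kL, kU = percentile_ranks(len(ordered), alpha)
    return ordered[kL - 1], ordered[kU - 1]           # 1-based ranks -> 0-based indices

print(percentile_ranks(5000, 0.10))                   # (250, 4751)

rng = random.Random(3)
n = len(LSAT)
reps = []
for _ in range(5000):
    idx = [rng.randrange(n) for _ in range(n)]
    reps.append(corr([LSAT[i] for i in idx], [GPA[i] for i in idx]))
lo, hi = percentile_interval(reps, 0.10)
print(round(lo, 4), round(hi, 4))
```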

Law School example:

Figure: 5000 Bootstrap Correlations (histogram of the bootstrap sample correlations).

Fisher Z-transformation:

$$Z_n = \frac{1}{2}\log\left(\frac{1+r_n}{1-r_n}\right) \;\dot\sim\; N\left(\frac{1}{2}\log\left(\frac{1+\rho}{1-\rho}\right),\; \frac{1}{n-3}\right)$$

An approximate $(1-\alpha)100\%$ confidence interval for $\frac{1}{2}\log\left(\frac{1+\rho}{1-\rho}\right)$ is
- lower limit: $Z_L = Z_n - Z_{\alpha/2}\frac{1}{\sqrt{n-3}}$
- upper limit: $Z_U = Z_n + Z_{\alpha/2}\frac{1}{\sqrt{n-3}}$

Transform back to the original scale:

$$\left[\frac{e^{2Z_L}-1}{e^{2Z_L}+1},\; \frac{e^{2Z_U}-1}{e^{2Z_U}+1}\right]$$
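The Fisher Z interval for the law school data can be computed with the Python standard library, as sketched below. `statistics.NormalDist` supplies $Z_{\alpha/2}$ (1.6449 rather than the rounded 1.645, which does not change the limits at three decimals).

```python
import math
from statistics import NormalDist

def fisher_z_interval(r, n, alpha):
    """Approximate (1 - alpha)100% interval for rho via Fisher's Z-transformation."""
    z = 0.5 * math.log((1 + r) / (1 - r))                          # Z_n
    half = NormalDist().inv_cdf(1 - alpha / 2) / math.sqrt(n - 3)  # Z_{alpha/2} / sqrt(n - 3)
    zl, zu = z - half, z + half                                    # Z_L, Z_U
    back = lambda w: (math.exp(2 * w) - 1) / (math.exp(2 * w) + 1)  # inverse transform (tanh)
    return back(zl), back(zu)

lo, hi = fisher_z_interval(0.7764, 15, 0.10)
print(round(lo, 3), round(hi, 3))    # 0.509 0.907
```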

For n = 15 and $r_{15} = .7764$ we have

$$Z_{15} = \frac{1}{2}\log\left(\frac{1+.7764}{1-.7764}\right) = 1.03624$$

and

$$Z_L = Z_{15} - Z_{.05}\frac{1}{\sqrt{15-3}} = .561372, \qquad Z_U = Z_{15} + Z_{.05}\frac{1}{\sqrt{15-3}} = 1.511113,$$

and an approximate 90% confidence interval for the correlation is

$$(0.509,\; 0.907)$$

The bootstrap percentile interval would approximate this interval if the original sample were taken from a bivariate normal distribution.

Percentile Bootstrap Confidence Intervals

Suppose there is a transformation $\phi = m(\theta)$ such that

$$\hat{\phi} = m(t_n) \;\dot\sim\; N(\phi, \omega^2)$$

for some standard deviation $\omega$. Then an approximate confidence interval for $\theta$ is

$$\left[m^{-1}(\hat{\phi} - Z_{\alpha/2}\,\omega),\; m^{-1}(\hat{\phi} + Z_{\alpha/2}\,\omega)\right]$$

- The bootstrap percentile interval is a consistent approximation. (You do not have to identify the $m(\cdot)$ transformation.)
- The bootstrap approximation becomes more accurate for larger samples.
- For smaller samples, the coverage probability of the bootstrap percentile interval tends to be smaller than the nominal level $(1-\alpha)100\%$.
- A percentile interval is entirely inside the parameter space. For example, a percentile confidence interval for a correlation lies inside the interval $[-1, 1]$.

Bias-corrected and accelerated (BCa) bootstrap percentile confidence intervals

- Simulate $B$ bootstrap samples and order the resulting estimates: $t_{n,(1)} \le t_{n,(2)} \le \cdots \le t_{n,(B)}$.
- The BCa interval of intended coverage $1-\alpha$ is given by
$$\left[t_{n,([\alpha_1(B+1)])},\; t_{n,([\alpha_2(B+1)])}\right]$$
where
$$\alpha_1 = \Phi\left(\hat{Z}_0 + \frac{\hat{Z}_0 - Z_{\alpha/2}}{1 - \hat{a}(\hat{Z}_0 - Z_{\alpha/2})}\right), \qquad \alpha_2 = \Phi\left(\hat{Z}_0 + \frac{\hat{Z}_0 + Z_{\alpha/2}}{1 - \hat{a}(\hat{Z}_0 + Z_{\alpha/2})}\right)$$

and
- $\Phi(\cdot)$ is the c.d.f. of the standard normal distribution.
- $Z_{\alpha/2}$ is an "upper" percentile of the standard normal distribution, e.g., $Z_{.05} = 1.645$ and $\Phi(Z_{\alpha/2}) = 1 - \frac{\alpha}{2}$.
- $\hat{Z}_0 = \Phi^{-1}\left(\text{proportion of } t_{n,b} \text{ values smaller than } t_n\right)$ is roughly a measure of the median bias of $t_n$ in "normal units." When exactly half of the bootstrap samples have $t_{n,b}$ values less than $t_n$, then $\hat{Z}_0 = 0$.
- $$\hat{a} = \frac{\sum_{j=1}^{n}\left(t_{n,(\cdot)} - t_{n,-j}\right)^3}{6\left[\sum_{j=1}^{n}\left(t_{n,(\cdot)} - t_{n,-j}\right)^2\right]^{3/2}}$$
is the estimated acceleration, where $t_{n,-j}$ is the value of $t_n$ when the $j$-th case is removed from the sample and $t_{n,(\cdot)} = \frac{1}{n}\sum_{j=1}^{n} t_{n,-j}$.
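A Python sketch of the BCa recipe above for the law school correlation follows. It is not the S-plus `limits.bca()` implementation; $B = 2000$, $\alpha = .10$ and the seed are assumptions, so the limits will only roughly match the S-plus values. $\hat{Z}_0$ comes from the bootstrap replicates and $\hat{a}$ from the jackknife values $t_{n,-j}$.

```python
import math
import random
from statistics import NormalDist

LSAT = [576, 635, 558, 578, 666, 580, 555, 661, 651, 605, 653, 575, 545, 572, 594]
GPA = [3.39, 3.30, 2.81, 3.03, 3.44, 3.07, 3.00, 3.43, 3.36, 3.13, 3.12, 2.74, 2.76, 2.88, 2.96]

def corr(x, y):
    """Sample (Pearson) correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def bca_interval(x, y, B=2000, alpha=0.10, seed=1):
    n = len(x)
    t_n = corr(x, y)
    rng = random.Random(seed)
    reps = []
    for _ in range(B):
        idx = [rng.randrange(n) for _ in range(n)]
        reps.append(corr([x[i] for i in idx], [y[i] for i in idx]))
    reps.sort()                                          # t_n,(1) <= ... <= t_n,(B)
    N = NormalDist()
    # Z0-hat = Phi^{-1}(proportion of t_n,b smaller than t_n); undefined if that is 0 or 1
    z0 = N.inv_cdf(sum(1 for t in reps if t < t_n) / B)
    # a-hat from the jackknife values t_n,-j (case j removed)
    jack = [corr(x[:j] + x[j + 1:], y[:j] + y[j + 1:]) for j in range(n)]
    jbar = sum(jack) / n                                 # t_n,(.)
    a = sum((jbar - tj) ** 3 for tj in jack) / (
        6 * sum((jbar - tj) ** 2 for tj in jack) ** 1.5)
    z = N.inv_cdf(1 - alpha / 2)                         # Z_{alpha/2}
    a1 = N.cdf(z0 + (z0 - z) / (1 - a * (z0 - z)))
    a2 = N.cdf(z0 + (z0 + z) / (1 - a * (z0 + z)))
    k1 = min(max(math.floor(a1 * (B + 1)), 1), B)        # keep ranks within 1..B
    k2 = min(max(math.floor(a2 * (B + 1)), 1), B)
    return reps[k1 - 1], reps[k2 - 1], z0, a

lo, hi, z0, a = bca_interval(LSAT, GPA)
print(round(lo, 4), round(hi, 4), round(z0, 4), round(a, 4))
```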

BCa intervals are second order accurate:

$$\Pr\{\theta < \text{lower end of BCa interval}\} = \frac{\alpha}{2} + \frac{C_{\text{lower}}}{n}$$

$$\Pr\{\theta > \text{upper end of BCa interval}\} = \frac{\alpha}{2} + \frac{C_{\text{upper}}}{n}$$

Bootstrap percentile intervals are first order accurate:

$$\Pr\{\theta < \text{lower end}\} = \frac{\alpha}{2} + \frac{C_{\text{lower}}}{\sqrt{n}}$$

$$\Pr\{\theta > \text{upper end}\} = \frac{\alpha}{2} + \frac{C_{\text{upper}}}{\sqrt{n}}$$

ABC intervals are approximations to BCa intervals:
- second order accurate
- only use about 3% of the computation time

(See Efron & Tibshirani (1993), Chapter 14.)

```
# This is S-plus code for creating bootstrapped
# confidence intervals for a correlation coefficient.
# It is stored in the file
#   lawschl.ssc
# Any line preceded with a pound sign is a comment
# that is ignored by the program. The law school
# data are read from the file
#   lawschl.dat

# Enter the law school data into a data frame
laws <- read.table("lawschl.dat",
                   col.names=c("School","LSAT","GPA"))
laws
```

```
   School LSAT  GPA
1       1  576 3.39
2       2  635 3.30
3       3  558 2.81
4       4  578 3.03
5       5  666 3.44
6       6  580 3.07
7       7  555 3.00
8       8  661 3.43
9       9  651 3.36
10     10  605 3.13
11     11  653 3.12
12     12  575 2.74
13     13  545 2.76
14     14  572 2.88
15     15  594 2.96
```

```
# Plot the data
par(fin=c(7.0,7.0),pch=16,mkh=.15,mex=1.5)
plot(laws$LSAT, laws$GPA, type="p",
     xlab="LSAT score", ylab="GPA",
     main="Law School Data")

# Compute the sample correlation
rr <- cor(laws$LSAT, laws$GPA)
cat("Estimated correlation: ",
    round(rr,5), fill=T)

# First test for zero correlation
n <- length(laws$LSAT)
tt <- sqrt(n-2)*rr/sqrt(1 - rr*rr)
cat("t-test for zero correlation: ",
    round(tt,4), fill=T)
# one-sided (upper tail) p-value
pval <- 1 - pt(tt,n-2)
pval <- round(pval,digits=5)
cat("p-values for the t-test for zero correlation: ",
    pval, fill=T)
```

```
Estimated correlation:  0.77637
t-test for zero correlation:  4.4413
p-values for the t-test for zero correlation:  0.00033
```

```
# Use Fisher's z-transformation to construct
# approximate confidence intervals.
# First set the level of confidence at 1-alpha.
alpha <- .10
z <- 0.5*log((1+rr)/(1-rr))
zl <- z - qnorm(1-alpha/2)/sqrt(n-3)
zu <- z + qnorm(1-alpha/2)/sqrt(n-3)
rl <- round((exp(2*zl)-1)/(exp(2*zl)+1),
            digits=4)
ru <- round((exp(2*zu)-1)/(exp(2*zu)+1),
            digits=4)
per <- (1-alpha)*100
cat(per,"% confidence interval: (",
    rl,", ",ru,")",fill=T)
```

```
90 % confidence interval: ( 0.509 , 0.9071 )
```

```
# Compute bootstrap confidence intervals.
# Use B=5000 bootstrap samples.
nboot <- 5000
rboot <- bootstrap(data=laws,
                   statistic=cor(GPA,LSAT), B=nboot)
```

```
Forming replications 1 to 100
Forming replications 101 to 200
Forming replications 201 to 300
...
Forming replications 4801 to 4900
Forming replications 4901 to 5000
```

```
# limits.emp(): Calculates empirical percentiles
#               for the bootstrapped parameter
#               estimates in a resamp object.
#               The quantile function is used to
#               calculate the empirical percentiles.
# usage:
#   limits.emp(x, probs=c(0.025, 0.05, 0.95, 0.975))
limits.emp(rboot, probs=c(0.05,0.95))
```

```
            5%       95%
Param 0.523511 0.9473424
```

```
# limits.bca(): Calculates BCa (bootstrap
#               bias-corrected, adjusted)
#               confidence limits.
# usage:
#   limits.bca(boot.obj,
#              probs=c(0.025, 0.05, 0.95, 0.975),
#              details=F, z0=NULL,
#              acceleration=NULL,
#              group.size=NULL,
#              frame.eval.jack=sys.parent(1))

# Do another set of 5000 bootstrapped values
rboot <- bootstrap(data=laws,
                   statistic=cor(GPA,LSAT), B=nboot)
limits.emp(rboot, probs=c(0.05,0.95))
limits.bca(rboot, probs=c(0.05,0.95), details=T)
```

```
            5%       95%
Param 0.520661 0.9486835

$limits:
            5%       95%
Param 0.442216 0.9306627

$emp.probs:
              5%       95%
Param 0.01954177 0.9065055

$z0:
      Param
-0.07979538

$acceleration:
      Param
-0.07567156

$group.size:
[1] 1
```

```
# Both sets of confidence intervals could
# have been obtained from the summary( )
# function
summary(rboot)
```

```
Call:
bootstrap(data = laws, statistic = cor(GPA, LSAT), B = nboot)

Number of Replications: 5000

Summary Statistics:
      Observed      Bias   Mean     SE
Param   0.7764 -0.007183 0.7692 0.1341

Empirical Percentiles:
        2.5%     5%    95%  97.5%
Param 0.4638 0.5207 0.9487 0.9616

BCa Percentiles:
        2.5%     5%    95%  97.5%
Param 0.3462 0.4422 0.9307 0.9449
```

```
# Make a histogram of the bootstrapped
# correlations.
hist(rboot$rep, nclass=50,
     xlab=" ",
     main="5000 Bootstrap Correlations",
     density=.0001)
```

Figure: 5000 Bootstrap Correlations (histogram of rboot$rep).

The bootstrap can fail:

Example: $X_1, X_2, \ldots, X_n$ are sampled from a uniform$(0, \theta)$ distribution:
- true density: $f(x) = \frac{1}{\theta}$ for $0 < x < \theta$, and $0$ otherwise
- true c.d.f.:
$$F(x) = \begin{cases} 0 & x \le 0 \\ \dfrac{x}{\theta} & 0 < x \le \theta \\ 1 & x > \theta \end{cases}$$

Bootstrap percentile confidence intervals for $\theta$ tend to be too short.
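The failure can be seen in a small simulation. In this Python sketch the estimator of $\theta$ is assumed to be the sample maximum (the text does not name one), and $\theta = 1$, $n = 50$, $B = 2000$, $\alpha = .10$ and the seed are arbitrary choices.

```python
import random

def uniform_max_bootstrap(theta=1.0, n=50, B=2000, alpha=0.10, seed=1):
    rng = random.Random(seed)
    x = [rng.uniform(0, theta) for _ in range(n)]   # original sample
    t_n = max(x)                                    # assumed estimator of theta: sample maximum
    reps = sorted(max(rng.choices(x, k=n)) for _ in range(B))
    share_at_max = sum(1 for t in reps if t == t_n) / B
    kL = int((B + 1) * alpha / 2)                   # percentile ranks k_L and k_U = B + 1 - k_L
    lo, hi = reps[kL - 1], reps[B - kL]
    return t_n, share_at_max, (lo, hi)

t_n, share, (lo, hi) = uniform_max_bootstrap()
print(round(t_n, 4), round(share, 3), round(1 - (1 - 1 / 50) ** 50, 3))
print(round(lo, 4), round(hi, 4))
```

Every bootstrap maximum is one of the observed values, so the upper percentile limit can never exceed $t_n = \max X_i$, which is always below $\theta$. In this setting the percentile interval never covers $\theta$, and about $1 - (1 - 1/n)^n \approx 0.64$ of the replicates equal $t_n$ exactly.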

Application of the bootstrap must adequately replicate the random process that produced the original sample:
- Simple random samples
- "Nested" experiments
  - Sample plants from a field
  - Sample leaves from plants
- Curve fitting (existence of covariates)
  - Fixed levels
  - Random samples

Parametric Bootstrap

Suppose you "knew" that $(X_{1j}, X_{2j})$, $j = 1, \ldots, n$, were obtained from a simple random sample from a bivariate normal distribution, i.e.,

$$X_j = \begin{bmatrix} X_{1j} \\ X_{2j} \end{bmatrix} \sim NID\left(\begin{bmatrix} \mu_1 \\ \mu_2 \end{bmatrix},\; \Sigma\right)$$

Estimate the unknown parameters:

$$\hat{\mu} = \begin{bmatrix} \hat{\mu}_1 \\ \hat{\mu}_2 \end{bmatrix} = \bar{X} = \frac{1}{n}\sum_{j=1}^{n} X_j, \qquad \hat{\Sigma} = \frac{1}{n-1}\sum_{j=1}^{n}(X_j - \bar{X})(X_j - \bar{X})^T$$

- Obtain a bootstrap sample of size $n$ by sampling from a $N(\hat{\mu}, \hat{\Sigma})$ distribution, i.e., $X_{1,b}, \ldots, X_{n,b}$, and compute $t_{n,b} = r_{n,b}$.
- Repeat this to obtain $r_{n,1}, r_{n,2}, \ldots, r_{n,B}$.
- Compute bootstrap
  - standard errors
  - bias estimators
  - confidence intervals

References

Davison, A.C. and Hinkley, D.V. (1997) Bootstrap Methods and Their Application, Cambridge Series in Statistical and Probabilistic Mathematics 1, Cambridge University Press, New York.

Efron, B. (1982) The Jackknife, the Bootstrap and Other Resampling Plans, CBMS-NSF Regional Conference Series in Applied Mathematics 38, SIAM, Philadelphia.

Efron, B. and Gong, G. (1983) The American Statistician, 36-48.

Efron, B. (1987) Better bootstrap confidence intervals (with discussion), Journal of the American Statistical Association, 82, 171-200.

Efron, B. and Tibshirani, R. (1993) An Introduction to the Bootstrap, Chapman and Hall, New York.

Shao, J. and Tu, D. (1995) The Jackknife and Bootstrap, Springer, New York.
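A parametric bootstrap for the law school correlation, under the bivariate normal assumption, can be sketched in Python as follows. Draws from $N(\hat{\mu}, \hat{\Sigma})$ are generated as $\hat{\mu} + Lz$, where $L$ is the Cholesky factor of $\hat{\Sigma}$ and $z$ is a pair of independent standard normals; $B = 2000$ and the seed are arbitrary choices.

```python
import math
import random

LSAT = [576, 635, 558, 578, 666, 580, 555, 661, 651, 605, 653, 575, 545, 572, 594]
GPA = [3.39, 3.30, 2.81, 3.03, 3.44, 3.07, 3.00, 3.43, 3.36, 3.13, 3.12, 2.74, 2.76, 2.88, 2.96]

def corr(x, y):
    """Sample (Pearson) correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def parametric_bootstrap_corr(x, y, B=2000, seed=1):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n                     # mu-hat = X-bar
    s11 = sum((a - mx) ** 2 for a in x) / (n - 1)       # Sigma-hat, divisor n - 1
    s22 = sum((b - my) ** 2 for b in y) / (n - 1)
    s12 = sum((a - mx) * (b - my) for a, b in zip(x, y)) / (n - 1)
    l11 = math.sqrt(s11)                                # Cholesky factor of Sigma-hat
    l21 = s12 / l11
    l22 = math.sqrt(s22 - l21 ** 2)
    rng = random.Random(seed)
    reps = []
    for _ in range(B):
        xb, yb = [], []
        for _ in range(n):
            z1, z2 = rng.gauss(0, 1), rng.gauss(0, 1)
            xb.append(mx + l11 * z1)
            yb.append(my + l21 * z1 + l22 * z2)
        reps.append(corr(xb, yb))                       # t_n,b = r_n,b
    return reps

reps = parametric_bootstrap_corr(LSAT, GPA)
B = len(reps)
mean_r = sum(reps) / B
se = math.sqrt(sum((t - mean_r) ** 2 for t in reps) / (B - 1))
print(round(mean_r, 4), round(se, 4))
```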

Example 12.2: Stormer viscometer data (Venables & Ripley, Chapter 8)
- measure the viscosity of a fluid
- measure the time taken for an inner cylinder in the mechanism to complete a specific number of revolutions in response to an actuating weight
- calibrate the viscometer using runs with
  - varying weights ($W$) (g)
  - fluids with known viscosity ($V$)
  - record the time ($T$) (sec)

Theoretical model:

$$T = \frac{\beta_1 V}{W - \beta_2} + \epsilon$$

```
# This code is used to explore the
# Stormer viscometer data. It is stored
# in the file
#   stormer.ssc
# Enter the data into a data frame.
# The data are stored in the file
#   stormer.dat
library(MASS)
stormer <- read.table("stormer.dat")
stormer
```

```
   Viscosity  Wt  Time
1       14.7  20  35.6
2       27.5  20  54.3
3       42.0  20  75.6
4       75.7  20 121.2
5       89.7  20 150.8
6      146.6  20 229.0
7      158.3  20 270.0
8       14.7  50  17.6
9       27.5  50  24.3
10      42.0  50  31.4
11      75.7  50  47.2
12      89.7  50  58.3
13     146.6  50  85.6
14     158.3  50 101.1
15     161.1  50  92.2
16     298.3  50 187.2
17      75.7 100  24.6
18      89.7 100  30.0
19     146.6 100  41.7
20     158.3 100  50.3
21     161.1 100  45.1
22     298.3 100  89.0
23     298.3 100  86.5
```

Starting values for $\beta_1$ and $\beta_2$: fit an approximate linear model. Note that

$$T_i = \frac{\beta_1 V_i}{W_i - \beta_2} + \epsilon_i$$

$$\Rightarrow\; (W_i - \beta_2)T_i = \beta_1 V_i + \epsilon_i(W_i - \beta_2)$$

$$\Rightarrow\; W_i T_i = \beta_1 V_i + \beta_2 T_i + \epsilon_i(W_i - \beta_2)$$

where $W_i T_i$ is the new response variable and $\epsilon_i(W_i - \beta_2)$ is the new error. Use OLS estimation to obtain

$$\hat{\beta}_1^{(0)} = 28.876, \qquad \hat{\beta}_2^{(0)} = 2.8437$$

```
# Use a linear approximation to obtain
# starting values for least squares
# estimation in the non-linear model
fm0 <- lm(Wt*Time ~ Viscosity + Time - 1,
          data=stormer)
b0 <- coef(fm0)
names(b0) <- c("b1","b2")

# Fit the non-linear model
storm.fm <- nls(
   formula = Time ~ b1*Viscosity/(Wt-b2),
   data = stormer, start = b0,
   trace = T)
```

```
885.365 : 28.8755 2.84373
825.11 : 29.3935 2.23328
825.051 : 29.4013 2.21823
```
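The two-step fit above (OLS starting values, then nonlinear least squares) can be reproduced without S-plus. This Python sketch uses a hand-written Gauss-Newton iteration with step halving as a stand-in for `nls()`; it is an illustration, not the algorithm `nls()` is documented to use. If the data are entered correctly, the results should agree with the trace output (28.8755, 2.84373, then 29.4013, 2.21823).

```python
# Stormer viscometer data (Example 12.2): viscosity V, weight W (g), time T (sec)
V = [14.7, 27.5, 42.0, 75.7, 89.7, 146.6, 158.3,
     14.7, 27.5, 42.0, 75.7, 89.7, 146.6, 158.3, 161.1, 298.3,
     75.7, 89.7, 146.6, 158.3, 161.1, 298.3, 298.3]
W = [20] * 7 + [50] * 9 + [100] * 7
T = [35.6, 54.3, 75.6, 121.2, 150.8, 229.0, 270.0,
     17.6, 24.3, 31.4, 47.2, 58.3, 85.6, 101.1, 92.2, 187.2,
     24.6, 30.0, 41.7, 50.3, 45.1, 89.0, 86.5]

def solve2(a11, a12, a22, r1, r2):
    """Solve the symmetric 2x2 system [[a11, a12], [a12, a22]] d = [r1, r2]."""
    det = a11 * a22 - a12 * a12
    return (a22 * r1 - a12 * r2) / det, (a11 * r2 - a12 * r1) / det

def rss(b1, b2):
    """Residual sum of squares for T = b1*V/(W - b2)."""
    return sum((t - b1 * v / (w - b2)) ** 2 for v, w, t in zip(V, W, T))

# Starting values: OLS of W*T on V and T with no intercept
y = [w * t for w, t in zip(W, T)]
b0 = solve2(sum(v * v for v in V), sum(v * t for v, t in zip(V, T)), sum(t * t for t in T),
            sum(v * yi for v, yi in zip(V, y)), sum(t * yi for t, yi in zip(T, y)))

def gauss_newton(b1, b2, max_iter=50, tol=1e-12):
    cur = rss(b1, b2)
    for _ in range(max_iter):
        g1 = [v / (w - b2) for v, w in zip(V, W)]              # df/db1
        g2 = [b1 * v / (w - b2) ** 2 for v, w in zip(V, W)]    # df/db2
        e = [t - b1 * a for a, t in zip(g1, T)]                # current residuals
        d1, d2 = solve2(sum(a * a for a in g1), sum(a * c for a, c in zip(g1, g2)),
                        sum(c * c for c in g2),
                        sum(a * r for a, r in zip(g1, e)), sum(c * r for c, r in zip(g2, e)))
        step = 1.0
        while rss(b1 + step * d1, b2 + step * d2) > cur and step > 1e-8:
            step /= 2                                          # halve until RSS does not increase
        b1, b2 = b1 + step * d1, b2 + step * d2
        new = rss(b1, b2)
        done = cur - new <= tol * cur
        cur = new
        if done:
            break
    return b1, b2, cur

b1_hat, b2_hat, dev = gauss_newton(*b0)
print([round(b, 4) for b in b0], round(rss(*b0), 3))
print(round(b1_hat, 4), round(b2_hat, 5), round(dev, 3))
```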

```
# Create a bivariate confidence region
# for the (b1,b2) parameters.
# First set up a grid of (b1,b2) values
bc <- coef(storm.fm)
se <- sqrt(diag(vcov(storm.fm)))
dv <- deviance(storm.fm)
summary(storm.fm)$parameters
```

```
       Value Std. Error   t value
b1 29.401328  0.9155353 32.113813
b2  2.218226  0.6655234  3.333054
```

```
gsize <- 51
b1 <- bc[1] + seq(-3*se[1], 3*se[1],
                  length = gsize)
b2 <- bc[2] + seq(-3*se[2], 3*se[2],
                  length = gsize)
bv <- expand.grid(b1, b2)

# Create a function to evaluate sums of squares
ssq <- function(b)
  sum((stormer$Time - b[1] * stormer$Viscosity/
       (stormer$Wt-b[2]))^2)
```

```
# Evaluate the sum of squared residuals and
# approximate F-ratios for all of the
# (b1,b2) values on the grid
dbeta <- apply(bv, 1, ssq)
n <- length(stormer$Viscosity)
df1 <- length(bc)
df2 <- n-df1
fstat <- matrix(((dbeta - dv)/df1) / (dv/df2),
                gsize, gsize)

# Create the plot
par(fin=c(7.0,7.0), mex=1.5, lwd=3)
plot(b1, b2, type="n",
     main="95% Confidence Region")
contour(b1, b2, fstat, levels=c(1,2,5,7,10,15,20),
        labex=0.75, lty=2, add=T)
# 95% region: qf(0.95, df1, df2) = qf(0.95, 2, 21)
contour(b1, b2, fstat, levels=qf(0.95,df1,df2),
        labex=0, lty=1, add=T)
text(31.6, 0.3, "95% CR", adj=0, cex=0.75)
points(bc[1], bc[2], pch=3, mkh=0.15)

# Remove b1, b2, bv, and fstat
rm(b1,b2,bv,fstat)
```

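Construct a joint confidence region for $(\beta_1, \beta_2)$:
- Deviance (residual sum of squares):
$$d(\beta_1, \beta_2) = \sum_{j=1}^{n}\left[T_j - \frac{\beta_1 V_j}{W_j - \beta_2}\right]^2$$
- Approximate F-statistic:
$$F(\beta_1, \beta_2) = \frac{\left[d(\beta_1, \beta_2) - d(\hat{\beta}_1, \hat{\beta}_2)\right]/2}{d(\hat{\beta}_1, \hat{\beta}_2)/(n-2)}$$
- An approximate $(1-\alpha)100\%$ confidence region consists of all $(\beta_1, \beta_2)$ such that
$$F(\beta_1, \beta_2) < F_{(2,\,n-2),\,\alpha}$$

Figure: 95% Confidence Region (contours of $F(\beta_1, \beta_2)$ at levels 1, 2, 5, 7, 10, 15, 20 in the (b1, b2) plane, with the 95% region "95% CR" drawn as a solid contour).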
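Membership in the approximate F region can be checked point by point. In this Python sketch the estimates are taken from the `nls` output above, and the critical value uses the closed form that exists for an $F(2, \nu)$ distribution, $\Pr\{F > f\} = (1 + 2f/\nu)^{-\nu/2}$, in place of `qf()`. The test points are arbitrary.

```python
# Stormer viscometer data (Example 12.2)
V = [14.7, 27.5, 42.0, 75.7, 89.7, 146.6, 158.3,
     14.7, 27.5, 42.0, 75.7, 89.7, 146.6, 158.3, 161.1, 298.3,
     75.7, 89.7, 146.6, 158.3, 161.1, 298.3, 298.3]
W = [20] * 7 + [50] * 9 + [100] * 7
T = [35.6, 54.3, 75.6, 121.2, 150.8, 229.0, 270.0,
     17.6, 24.3, 31.4, 47.2, 58.3, 85.6, 101.1, 92.2, 187.2,
     24.6, 30.0, 41.7, 50.3, 45.1, 89.0, 86.5]
B1_HAT, B2_HAT = 29.401328, 2.218226      # least squares estimates reported by nls()

def deviance(b1, b2):
    """d(b1, b2): residual sum of squares for T = b1*V/(W - b2)."""
    return sum((t - b1 * v / (w - b2)) ** 2 for v, w, t in zip(V, W, T))

def f_ratio(b1, b2):
    """Approximate F-statistic F(b1, b2) with 2 and n - 2 degrees of freedom."""
    n = len(T)
    d_hat = deviance(B1_HAT, B2_HAT)
    return ((deviance(b1, b2) - d_hat) / 2) / (d_hat / (n - 2))

def f_upper_2(df2, alpha):
    """Upper-alpha point of F(2, df2): solves (1 + 2f/df2)^(-df2/2) = alpha."""
    return (df2 / 2) * (alpha ** (-2 / df2) - 1)

crit = f_upper_2(len(T) - 2, 0.05)        # same value as qf(0.95, 2, 21)
print(round(crit, 4))                     # 3.4668
for point in [(29.4, 2.2), (35.0, 2.2)]:
    print(point, round(f_ratio(*point), 3), f_ratio(*point) < crit)
```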

Bootstrap Estimation

Bootstrap I: Sample $n = 23$ cases $(T_i, W_i, V_i)$ from the original data set (using simple random sampling with replacement).

```
#=========================================
# Bootstrap I:
#   Treat the regressors as random and
#   resample the cases (y,x_1,x_2)
#=========================================
storm.boot1 <- bootstrap(stormer,
    coef(nls(Time~b1*Viscosity/(Wt-b2),
             data=stormer, start=bc)), B=1000)
summary(storm.boot1)
```

```
Call:
bootstrap(data = stormer, statistic = coef(nls(Time ~ (b1 *
    Viscosity)/(Wt - b2), data = stormer, start = bc)), B = 1000)

Number of Replications: 1000

Summary Statistics:
   Observed     Bias   Mean     SE
b1   29.401 -0.08665 29.315 0.7194
b2    2.218  0.09412  2.312 0.8424

Empirical Percentiles:
      2.5%     5%    95%  97.5%
b1 27.8202 28.113 30.402 30.613
b2  0.8453  1.057  3.513  3.779

BCa Percentiles:
      2.5%     5%    95%  97.5%
b1 27.9368 28.242 30.525 30.747
b2  0.7462  0.899  3.409  3.604

Correlation of Replicates:
        b1      b2
b1  1.0000 -0.8628
b2 -0.8628  1.0000
```

```
# Produce histograms of the bootstrapped
# values of the regression coefficients.
# The following code is used to draw
# non-parametric estimated densities,
# normal densities, and a histogram
# on the same graph.
#
# truehist(): Plot a histogram (prob=T by default).
#             For the function hist(), probability=F
#             by default.
# width.SJ(): Bandwidth selection by pilot estimation
#             of derivatives. Uses the method of
#             Sheather & Jones (1991) to select the
#             bandwidth of a Gaussian kernel density
#             estimator.
# density():  Estimate probability density function.
#             Returns x and y coordinates of a
#             non-parametric estimate of the probability
#             density of the data. Options include the
#             type of window to use and the number of
#             points at which to estimate the density.
#             n = the number of equally spaced points
#             at which the density is evaluated.
b1.boot <- storm.boot1$rep[,1]
b2.boot <- storm.boot1$rep[,2]
library(MASS)
par(fin=c(7.0,7.0),mex=1.5)
mm <- range(b1.boot)
min.int <- floor(mm[1])
max.int <- ceiling(mm[2])
truehist(b1.boot, xlim=c(min.int,max.int),
         density=.0001)
width.SJ(b1.boot)
# [1] 0.6918326
b1.boot.dns1 <- density(b1.boot, n=200, width=.6)
b1.boot.dns2 <- list(x = b1.boot.dns1$x,
    y = dnorm(b1.boot.dns1$x, mean(b1.boot),
              sqrt(var(b1.boot))))
# Draw the non-parametric density
lines(b1.boot.dns1, lty=3, lwd=2)
# Draw normal densities
lines(b1.boot.dns2, lty=1, lwd=2)
legend(27., -0.30, c("Nonparametric",
       "Normal approx."),
       lty=c(3,1), bty="n", lwd=2)

# Display the distribution of the estimate
# of the second parameter
par(fin=c(7.0,7.0),mex=1.5)
mm <- range(b2.boot)
min.int <- floor(mm[1])
max.int <- ceiling(mm[2])
truehist(b2.boot, xlim=c(min.int,max.int),
         density=.001)
width.SJ(b2.boot)
# [1] 0.7339957
b2.boot.dns1 <- density(b2.boot, n=200, width=.8)
b2.boot.dns2 <- list(x = b2.boot.dns1$x,
    y = dnorm(b2.boot.dns1$x, mean(b2.boot),
              sqrt(var(b2.boot))))
lines(b2.boot.dns1, lty=3, lwd=2)
lines(b2.boot.dns2, lty=1, lwd=2)
legend(1., -0.30, c("Nonparametric",
       "Normal approx."),
       lty=c(3,1), bty="n", lwd=2)
```

Figure: histograms of b1.boot and b2.boot (Bootstrap I) with the nonparametric density estimate (dotted line, "Nonparametric") and the normal approximation (solid line, "Normal approx.").
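Bootstrap I can be sketched in Python as follows. It is not the S-plus `bootstrap()` call: `fit()` is a hand-written Gauss-Newton routine standing in for `nls()`, each bootstrap fit starts from the full-data estimates (as `start=bc` does), and $B = 200$ with an arbitrary seed keeps it quick, so the standard errors will only roughly match 0.7194 and 0.8424.

```python
import random

# Stormer viscometer data (Example 12.2)
V = [14.7, 27.5, 42.0, 75.7, 89.7, 146.6, 158.3,
     14.7, 27.5, 42.0, 75.7, 89.7, 146.6, 158.3, 161.1, 298.3,
     75.7, 89.7, 146.6, 158.3, 161.1, 298.3, 298.3]
W = [20] * 7 + [50] * 9 + [100] * 7
T = [35.6, 54.3, 75.6, 121.2, 150.8, 229.0, 270.0,
     17.6, 24.3, 31.4, 47.2, 58.3, 85.6, 101.1, 92.2, 187.2,
     24.6, 30.0, 41.7, 50.3, 45.1, 89.0, 86.5]

def fit(V, W, T, b1, b2, max_iter=50):
    """Gauss-Newton least squares for T = b1*V/(W - b2), with step halving."""
    def rss(c1, c2):
        return sum((t - c1 * v / (w - c2)) ** 2 for v, w, t in zip(V, W, T))
    cur = rss(b1, b2)
    for _ in range(max_iter):
        g1 = [v / (w - b2) for v, w in zip(V, W)]               # df/db1
        g2 = [b1 * v / (w - b2) ** 2 for v, w in zip(V, W)]     # df/db2
        e = [t - b1 * a for a, t in zip(g1, T)]                 # residuals
        a11, a22 = sum(a * a for a in g1), sum(c * c for c in g2)
        a12 = sum(a * c for a, c in zip(g1, g2))
        r1, r2 = sum(a * x for a, x in zip(g1, e)), sum(c * x for c, x in zip(g2, e))
        det = a11 * a22 - a12 * a12
        d1, d2 = (a22 * r1 - a12 * r2) / det, (a11 * r2 - a12 * r1) / det
        step = 1.0
        while rss(b1 + step * d1, b2 + step * d2) > cur and step > 1e-8:
            step /= 2
        b1, b2 = b1 + step * d1, b2 + step * d2
        new = rss(b1, b2)
        if cur - new <= 1e-12 * cur:
            break
        cur = new
    return b1, b2

def sd(xs):
    m = sum(xs) / len(xs)
    return (sum((x - m) ** 2 for x in xs) / (len(xs) - 1)) ** 0.5

b1_hat, b2_hat = fit(V, W, T, 28.8755, 2.84373)         # start from the OLS values
rng = random.Random(1)
n, B = len(T), 200
boot = []
for _ in range(B):
    idx = [rng.randrange(n) for _ in range(n)]          # resample cases (T_i, W_i, V_i)
    boot.append(fit([V[i] for i in idx], [W[i] for i in idx],
                    [T[i] for i in idx], b1_hat, b2_hat))
se1, se2 = sd([b[0] for b in boot]), sd([b[1] for b in boot])
print(round(b1_hat, 3), round(b2_hat, 3), round(se1, 3), round(se2, 3))
```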

Bootstrap II: Fix the values of the explanatory variables $\{(W_j, V_j) : j = 1, \ldots, n\}$.
- Compute residuals from fitting the model to the original sample:
$$e_j = T_j - \frac{\hat{\beta}_1 V_j}{W_j - \hat{\beta}_2}, \qquad j = 1, \ldots, n$$
- Approximate sampling from the population of random errors by taking a sample (with replacement) from $\{e_1, \ldots, e_n\}$, say $e_{1,b}, e_{2,b}, \ldots, e_{n,b}$.
- Create new observations $(T_{j,b}, W_j, V_j)$, $j = 1, \ldots, n$, where
$$T_{j,b} = \frac{\hat{\beta}_1 V_j}{W_j - \hat{\beta}_2} + e_{j,b}$$
- Fit the model to the $b$-th bootstrap sample to obtain $\hat{\beta}_{1,b}$ and $\hat{\beta}_{2,b}$.
- Repeat this for $b = 1, \ldots, B$ bootstrap samples.
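The residual bootstrap steps above can be sketched in Python as follows. This is a plain version: the residuals are centered at zero but, unlike the S-plus code that follows, not rescaled by leverage. `fit()` is a hand-written Gauss-Newton stand-in for `nls()`, and $B = 200$ with an arbitrary seed, so the standard errors will only roughly match 0.8892 and 0.6353.

```python
import random

# Stormer viscometer data (Example 12.2)
V = [14.7, 27.5, 42.0, 75.7, 89.7, 146.6, 158.3,
     14.7, 27.5, 42.0, 75.7, 89.7, 146.6, 158.3, 161.1, 298.3,
     75.7, 89.7, 146.6, 158.3, 161.1, 298.3, 298.3]
W = [20] * 7 + [50] * 9 + [100] * 7
T = [35.6, 54.3, 75.6, 121.2, 150.8, 229.0, 270.0,
     17.6, 24.3, 31.4, 47.2, 58.3, 85.6, 101.1, 92.2, 187.2,
     24.6, 30.0, 41.7, 50.3, 45.1, 89.0, 86.5]

def fit(V, W, T, b1, b2, max_iter=50):
    """Gauss-Newton least squares for T = b1*V/(W - b2), with step halving."""
    def rss(c1, c2):
        return sum((t - c1 * v / (w - c2)) ** 2 for v, w, t in zip(V, W, T))
    cur = rss(b1, b2)
    for _ in range(max_iter):
        g1 = [v / (w - b2) for v, w in zip(V, W)]               # df/db1
        g2 = [b1 * v / (w - b2) ** 2 for v, w in zip(V, W)]     # df/db2
        e = [t - b1 * a for a, t in zip(g1, T)]                 # residuals
        a11, a22 = sum(a * a for a in g1), sum(c * c for c in g2)
        a12 = sum(a * c for a, c in zip(g1, g2))
        r1, r2 = sum(a * x for a, x in zip(g1, e)), sum(c * x for c, x in zip(g2, e))
        det = a11 * a22 - a12 * a12
        d1, d2 = (a22 * r1 - a12 * r2) / det, (a11 * r2 - a12 * r1) / det
        step = 1.0
        while rss(b1 + step * d1, b2 + step * d2) > cur and step > 1e-8:
            step /= 2
        b1, b2 = b1 + step * d1, b2 + step * d2
        new = rss(b1, b2)
        if cur - new <= 1e-12 * cur:
            break
        cur = new
    return b1, b2

def sd(xs):
    m = sum(xs) / len(xs)
    return (sum((x - m) ** 2 for x in xs) / (len(xs) - 1)) ** 0.5

b1_hat, b2_hat = fit(V, W, T, 28.8755, 2.84373)
fitted = [b1_hat * v / (w - b2_hat) for v, w in zip(V, W)]
resid = [t - f for t, f in zip(T, fitted)]                      # e_j
mean_e = sum(resid) / len(resid)
resid = [e - mean_e for e in resid]                             # center at zero
rng = random.Random(1)
B = 200
boot = []
for _ in range(B):
    e_b = rng.choices(resid, k=len(resid))                      # e_{1,b}, ..., e_{n,b}
    T_b = [f + e for f, e in zip(fitted, e_b)]                  # (W_j, V_j) held fixed
    boot.append(fit(V, W, T_b, b1_hat, b2_hat))
se1, se2 = sd([b[0] for b in boot]), sd([b[1] for b in boot])
print(round(se1, 3), round(se2, 3))
```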

```
#=======================================
# Bootstrap II:
#   Treat the regressors as fixed and
#   resample from the residuals
#=======================================
# Center the residuals at zero and
# divide residuals by linear approximations
# to a multiple of the standard errors. These
# centered and scaled residuals approximately
# have the same first two moments as the
# random errors, but they are not quite
# uncorrelated.
grad.f <- deriv3(expr = Y ~ (b1*X1)/(X2-b2),
                 namevec = c("b1", "b2"),
                 function.arg = function(b1, b2, X1, X2, Y) NULL)
rs <- scale(resid(storm.fm), center=T, scale=F)
# Gradient of the model at the estimates bc
g2 <- grad.f(bc[1], bc[2], stormer$Viscosity,
             stormer$Wt, stormer$Time)
D <- attr(g2, "gradient")
# h = 1 - leverage values of the linearized model
h <- 1-diag(D%*%solve(t(D)%*%D)%*%t(D))
rs <- rs/sqrt(h)
dfe <- length(rs)-length(coef(storm.fm))
vr <- var(rs)
rs <- rs%*%sqrt(deviance(storm.fm)/dfe/vr)
```

```
# Create a function to use in fitting
# the model to bootstrap samples
storm.bf2 <- function(rs)
{ assign("Tim", fitted(storm.fm) + rs,
         frame = 1)
  nls(formula = Tim ~ (b1*Viscosity)/(Wt-b2),
      data = stormer,
      start = coef(storm.fm)
  )$parameters }

summary(storm.fm)$parameters
```

```
       Value Std. Error   t value
b1 29.401328  0.9155353 32.113813
b2  2.218226  0.6655234  3.333054
```

```
# Compute 1000 bootstrapped values of the
# regression parameters
storm.boot2 <- bootstrap(data=rs,
                         statistic=storm.bf2(rs), B=1000)
```

```
Forming replications 1 to 100
Forming replications 101 to 200
Forming replications 201 to 300
Forming replications 301 to 400
Forming replications 401 to 500
Forming replications 501 to 600
Forming replications 601 to 700
Forming replications 701 to 800
Forming replications 801 to 900
Forming replications 901 to 1000
```

```
b1.boot <- storm.boot2$rep[,1]
b2.boot <- storm.boot2$rep[,2]

# The BCa intervals may not be correctly
# computed by the following function
summary(storm.boot2)
```

```
Call:
bootstrap(data = rs, statistic = storm.bf2(rs), B = 1000)

Number of Replications: 1000

Summary Statistics:
   Observed    Bias   Mean     SE
b1   28.745  0.5719 29.317 0.8892
b2    2.432 -0.1682  2.264 0.6353

Empirical Percentiles:
    2.5%     5%    95%  97.5%
b1 27.58 27.847 30.690 30.924
b2  1.03  1.241  3.295  3.459

BCa Confidence Limits:
     2.5%     5%    95%  97.5%
b1 26.236 26.267 29.698 29.985
b2  1.316  1.524  3.563  3.683

Correlation of Replicates:
       b1      b2
b1 1.0000 -0.9194
```

```
# The following code is used to draw
# non-parametric estimated densities,
# normal densities, and histograms
# on the same graphic window.
par(fin=c(7.0,7.0),mex=1.3)
mm <- range(b1.boot)
min.int <- floor(mm[1])
max.int <- ceiling(mm[2])
truehist(b1.boot, xlim=c(min.int,max.int),
         density=.0001)
b1.boot.dns1 <- density(b1.boot, n=200,
                        width=width.SJ(b1.boot))
b1.boot.dns2 <- list(x = b1.boot.dns1$x,
    y = dnorm(b1.boot.dns1$x, mean(b1.boot),
              sqrt(var(b1.boot))))
# Draw a non-parametric density
lines(b1.boot.dns1, lty=3, lwd=2)
# Draw normal densities
lines(b1.boot.dns2, lty=1, lwd=2)
legend(27, -0.22, c("Nonparametric",
       "Normal approx."),
       lty=c(3,1), bty="n", lwd=2)

# Display the distribution for the other
# parameter estimate
par(fin=c(7.0,7.0),mex=1.3)
mm <- range(b2.boot)
min.int <- floor(mm[1])
max.int <- ceiling(mm[2])
truehist(b2.boot, xlim=c(min.int,max.int),
         density=.00001)
b2.boot.dns1 <- density(b2.boot, n=200,
                        width=width.SJ(b2.boot))
b2.boot.dns2 <- list(x = b2.boot.dns1$x,
    y = dnorm(b2.boot.dns1$x, mean(b2.boot),
              sqrt(var(b2.boot))))
lines(b2.boot.dns1, lty=3, lwd=2)
lines(b2.boot.dns2, lty=1, lwd=2)
legend(0.5, -0.25, c("Nonparametric",
       "Normal approx."), lty=c(3,1),
       bty="n", lwd=2)
```

Figure: histogram of b1.boot (Bootstrap II) with the nonparametric density estimate (dotted line) and the normal approximation (solid line).

Comparison of standard errors:

| Parameter | Asymptotic normal approx. | Random bootstrap | Fixed bootstrap |
|---|---|---|---|
| $\beta_1$ | 0.916 | 0.719 | 0.889 |
| $\beta_2$ | 0.666 | 0.842 | 0.635 |

Comparison of approximate 95% confidence intervals:

| Parameter | Asymptotic normal approx. ($\hat{\beta}_i \pm t_{21,.025}\,S_{\hat{\beta}_i}$) | Random bootstrap | Fixed bootstrap |
|---|---|---|---|
| $\beta_1$ | (27.50, 31.31) | (28.13, 30.40) | (27.42, 31.03) |
| $\beta_2$ | (0.83, 3.60) | (1.06, 3.51) | (1.03, 3.46) |

Figure: histogram of b2.boot (Bootstrap II) with the nonparametric density estimate and the normal approximation.