Finding Bayes Estimators X ) and ˜

advertisement

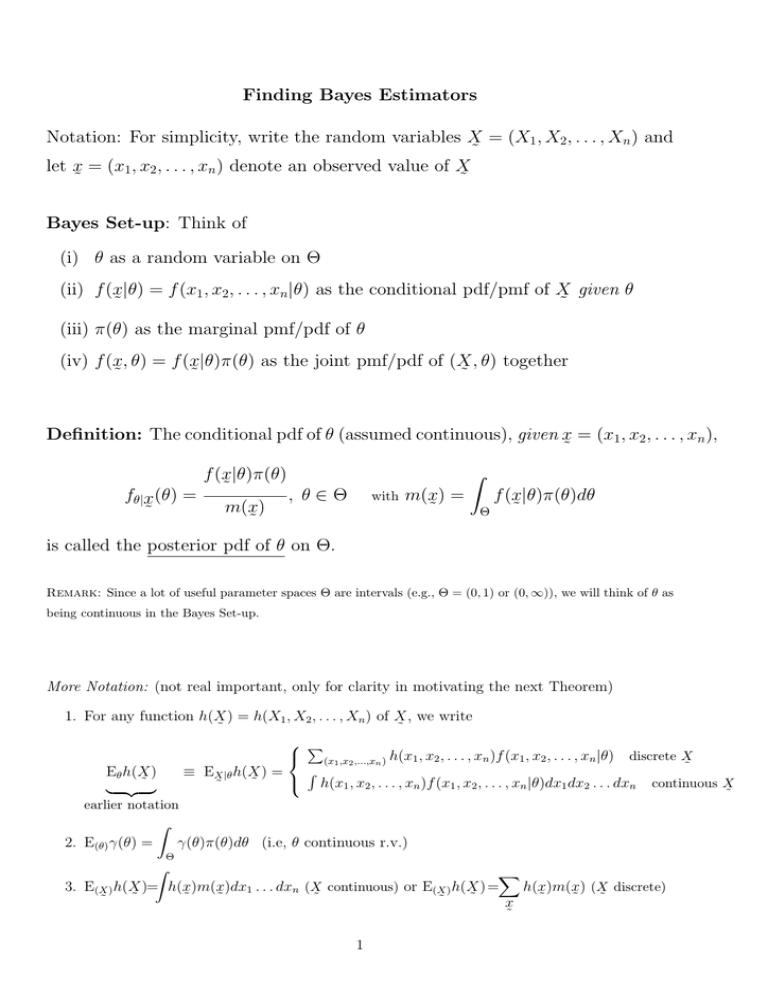

Finding Bayes Estimators

Notation: For simplicity, write the random variables as $\underset{\sim}{X} = (X_1, X_2, \ldots, X_n)$ and let $\underset{\sim}{x} = (x_1, x_2, \ldots, x_n)$ denote an observed value of $\underset{\sim}{X}$.

Bayes Set-up: Think of

(i) θ as a random variable on Θ

(ii) $f(\underset{\sim}{x}|\theta) = f(x_1, x_2, \ldots, x_n|\theta)$ as the conditional pdf/pmf of $\underset{\sim}{X}$ given $\theta$

(iii) π(θ) as the marginal pmf/pdf of θ

(iv) $f(\underset{\sim}{x}, \theta) = f(\underset{\sim}{x}|\theta)\,\pi(\theta)$ as the joint pmf/pdf of $(\underset{\sim}{X}, \theta)$ together

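Definition: The conditional pdf of $\theta$ (assumed continuous), given $\underset{\sim}{x} = (x_1, x_2, \ldots, x_n)$,

$$f_{\theta|\underset{\sim}{x}}(\theta) = \frac{f(\underset{\sim}{x}|\theta)\,\pi(\theta)}{m(\underset{\sim}{x})}, \quad \theta \in \Theta, \quad \text{with} \quad m(\underset{\sim}{x}) = \int_{\Theta} f(\underset{\sim}{x}|\theta)\,\pi(\theta)\,d\theta,$$

is called the posterior pdf of $\theta$ on $\Theta$.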
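The definition can be checked numerically. The following is a minimal sketch, not part of the original notes: it assumes a single Binomial(n, θ) observation with a Uniform(0, 1) prior, approximates m(x) by a midpoint rule on a grid over Θ = (0, 1), and divides the joint by it to get the posterior. The function names and the values x = 3, n = 10 are illustrative assumptions.

```python
import math

def binomial_pmf(x, n, theta):
    """f(x | theta) for X ~ Binomial(n, theta) (assumed model)."""
    return math.comb(n, x) * theta**x * (1.0 - theta)**(n - x)

def uniform_prior(theta):
    """pi(theta) for an assumed Uniform(0, 1) prior."""
    return 1.0 if 0.0 < theta < 1.0 else 0.0

def posterior_on_grid(x, n, num_points=2000):
    """Approximate the posterior pdf on a midpoint grid over (0, 1).

    Returns (grid, posterior_values, m_x, width), where m_x approximates
    m(x) = integral over Theta of f(x | theta) * pi(theta) d(theta).
    """
    width = 1.0 / num_points
    grid = [(i + 0.5) * width for i in range(num_points)]
    # Joint f(x, theta) = f(x | theta) * pi(theta) at each grid point.
    joint = [binomial_pmf(x, n, t) * uniform_prior(t) for t in grid]
    m_x = sum(joint) * width  # midpoint-rule approximation of m(x)
    posterior = [j / m_x for j in joint]
    return grid, posterior, m_x, width

# Illustrative data: x = 3 successes in n = 10 trials.
grid, posterior, m_x, width = posterior_on_grid(x=3, n=10)
total_mass = sum(posterior) * width
posterior_mean = sum(t * p for t, p in zip(grid, posterior)) * width
print(m_x, total_mass, posterior_mean)
```

Under these assumptions the posterior is the Beta(x + 1, n − x + 1) density, so the grid values can be compared against the closed forms m(x) = 1/(n + 1) and posterior mean (x + 1)/(n + 2).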

Remark: Since a lot of useful parameter spaces Θ are intervals (e.g., Θ = (0, 1) or (0, ∞)), we will think of θ as being continuous in the Bayes Set-up.

More Notation: (not really important, only for clarity in motivating the next Theorem)

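1. For any function $h(\underset{\sim}{X}) = h(X_1, X_2, \ldots, X_n)$ of $\underset{\sim}{X}$, we write

$$\underbrace{E_{\theta}\, h(\underset{\sim}{X})}_{\text{earlier notation}} \equiv E_{\underset{\sim}{X}|\theta}\, h(\underset{\sim}{X}) = \begin{cases} \displaystyle\sum_{(x_1, x_2, \ldots, x_n)} h(x_1, x_2, \ldots, x_n)\, f(x_1, x_2, \ldots, x_n|\theta) & \text{discrete } \underset{\sim}{X} \\[2ex] \displaystyle\int h(x_1, x_2, \ldots, x_n)\, f(x_1, x_2, \ldots, x_n|\theta)\, dx_1\, dx_2 \cdots dx_n & \text{continuous } \underset{\sim}{X} \end{cases}$$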

2. $E_{(\theta)}\, \gamma(\theta) = \displaystyle\int_{\Theta} \gamma(\theta)\,\pi(\theta)\,d\theta$ (i.e., $\theta$ is a continuous r.v.)

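3. $E_{(\underset{\sim}{X})}\, h(\underset{\sim}{X}) = \displaystyle\int h(\underset{\sim}{x})\, m(\underset{\sim}{x})\, dx_1 \cdots dx_n$ ($\underset{\sim}{X}$ continuous) or $E_{(\underset{\sim}{X})}\, h(\underset{\sim}{X}) = \displaystyle\sum_{\underset{\sim}{x}} h(\underset{\sim}{x})\, m(\underset{\sim}{x})$ ($\underset{\sim}{X}$ discrete)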

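Main idea: For an estimator $T = h(\underset{\sim}{X})$, recall the risk function of $T$ is $R_T(\theta) = E_{\theta} L(T, \theta) = E_{\underset{\sim}{X}|\theta} L(T, \theta)$. Then, the Bayes risk of $T$ is

$$BR_T = E_{(\theta)} R_T(\theta) = E_{(\theta)} \big[ E_{\underset{\sim}{X}|\theta} L(T, \theta) \big] = E_{(\underset{\sim}{X})} \big[ E_{\theta|\underset{\sim}{x}} L(T, \theta) \big] = E_{(\underset{\sim}{X})} \big[ E_{\theta|\underset{\sim}{x}} L(h(\underset{\sim}{x}), \theta) \big]$$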
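The middle equality in the Bayes risk chain swaps the order of the two expectations. A sketch of that step, assuming $\underset{\sim}{X}$ is continuous and the order of integration may be interchanged (for instance because $L \ge 0$), and writing $x$ for $(x_1, \ldots, x_n)$ and $dx$ for $dx_1 \cdots dx_n$ to keep the display short:

```latex
\begin{align*}
BR_T
  &= \int_{\Theta} \left[ \int L(h(x), \theta)\, f(x|\theta)\, dx \right] \pi(\theta)\, d\theta \\
  &= \int \int_{\Theta} L(h(x), \theta)\, f(x|\theta)\, \pi(\theta)\, d\theta\, dx \\
  &= \int \left[ \int_{\Theta} L(h(x), \theta)\, \frac{f(x|\theta)\, \pi(\theta)}{m(x)}\, d\theta \right] m(x)\, dx \\
  &= \int \left[ \int_{\Theta} L(h(x), \theta)\, f_{\theta|x}(\theta)\, d\theta \right] m(x)\, dx
   = E_{(X)} \big[ E_{\theta|x} L(h(x), \theta) \big].
\end{align*}
```

The third line multiplies and divides by $m(x)$, which is only meaningful at data values where $m(x) > 0$; values with $m(x) = 0$ contribute nothing to the outer integral.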

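To find an estimator $T = h(\underset{\sim}{X})$ to minimize the Bayes risk $BR_T$, it is enough, at each fixed data possibility $\underset{\sim}{x}$ of $\underset{\sim}{X}$, to pick the “$h(\underset{\sim}{x})$”-value that minimizes $E_{\theta|\underset{\sim}{x}} L(h(\underset{\sim}{x}), \theta)$.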

Theorem: A Bayes estimator is an estimator that minimizes the “posterior risk” $E_{\theta|\underset{\sim}{x}} L(h(\underset{\sim}{x}), \theta)$, over all estimators $T = h(\underset{\sim}{X})$, for fixed values $\underset{\sim}{x} = (x_1, x_2, \ldots, x_n)$ of $\underset{\sim}{X} = (X_1, X_2, \ldots, X_n)$.

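Corollary: Let $T_0$ denote the Bayes estimator of $\gamma(\theta)$.

(1). If $L(t, \theta) = \big(t - \gamma(\theta)\big)^2$, then $T_0 = E_{\theta|\underset{\sim}{x}}\, \gamma(\theta)$, the posterior mean of $\gamma(\theta)$.

(2). If $L(t, \theta) = \big|t - \gamma(\theta)\big|$, then $T_0 = \operatorname{median}\big(\gamma(\theta)\,|\,\underset{\sim}{x}\big)$, the posterior median of $\gamma(\theta)$.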
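Both parts of the Corollary can be illustrated with a brute-force search over candidate values t. The sketch below is an illustration under stated assumptions, not the notes' own method: it takes γ(θ) = θ, assumes a Beta(4, 8) posterior (what a Uniform(0, 1) prior and 3 successes in 10 Binomial trials would give), approximates the posterior risk by a midpoint sum, and compares the grid minimizers with the posterior mean and median. All names and grid sizes are hypothetical choices.

```python
import math

def beta_pdf(theta, a, b):
    """Density of Beta(a, b) at theta in (0, 1)."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm + (a - 1) * math.log(theta)
                    + (b - 1) * math.log(1.0 - theta))

# Assumed posterior: Beta(4, 8), i.e. x = 3, n = 10, Uniform(0, 1) prior.
A, B = 4, 8
NUM_POINTS = 1000
WIDTH = 1.0 / NUM_POINTS
GRID = [(i + 0.5) * WIDTH for i in range(NUM_POINTS)]
WEIGHTS = [beta_pdf(t, A, B) * WIDTH for t in GRID]  # posterior mass per cell

def posterior_risk(t, loss):
    """Approximate E_{theta|x} L(t, theta) by a midpoint sum."""
    return sum(loss(t, theta) * w for theta, w in zip(GRID, WEIGHTS))

def squared_error(t, theta):
    return (t - theta) ** 2

def absolute_error(t, theta):
    return abs(t - theta)

def best_candidate(loss, candidates):
    """Return the candidate t with the smallest approximate posterior risk."""
    return min(candidates, key=lambda t: posterior_risk(t, loss))

CANDIDATES = [i / 1000 for i in range(1, 1000)]  # t = 0.001, ..., 0.999

best_squared = best_candidate(squared_error, CANDIDATES)
best_absolute = best_candidate(absolute_error, CANDIDATES)

# Posterior mean by the same midpoint sum.
posterior_mean = sum(theta * w for theta, w in zip(GRID, WEIGHTS))

# Posterior median: first grid point where cumulative mass reaches 1/2.
cumulative = 0.0
posterior_median = None
for theta, w in zip(GRID, WEIGHTS):
    cumulative += w
    if cumulative >= 0.5:
        posterior_median = theta
        break

print(best_squared, posterior_mean)
print(best_absolute, posterior_median)
```

Up to the grid resolution, the squared-error minimizer should sit next to the posterior mean and the absolute-error minimizer next to the posterior median. A grid search is used only to keep the check transparent; it is not an efficient way to compute either estimator.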

Example/continued: $X \sim \text{Binomial}(n, \theta)$, $\theta \in (0, 1)$; uniform(0, 1) prior for $\theta$; $L(t, \theta) = (t - \theta)^2$. We found Bayes estimator $T_0 \overset{\text{Corollary (1)}}{=} \dfrac{X+1}{n+2}$ of $\gamma(\theta) = \theta$, but now try