Elements of Decision Theory

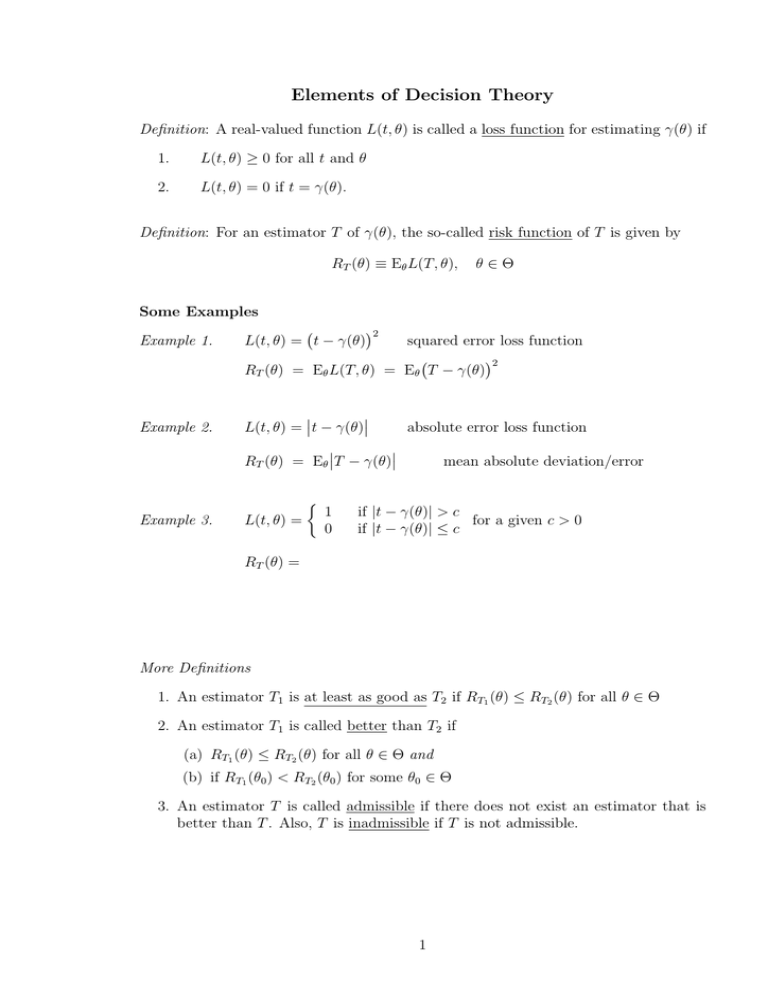

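Definition: A real-valued function $L(t, \theta)$ is called a loss function for estimating $\gamma(\theta)$ if
1. $L(t, \theta) \ge 0$ for all $t$ and $\theta$, and
2. $L(t, \theta) = 0$ if $t = \gamma(\theta)$.

Definition: For an estimator $T$ of $\gamma(\theta)$, the risk function of $T$ is
$$R_T(\theta) \equiv E_\theta\, L(T, \theta), \qquad \theta \in \Theta.$$

Some Examples

Example 1. Squared error loss function:
$$L(t, \theta) = \big(t - \gamma(\theta)\big)^2, \qquad R_T(\theta) = E_\theta L(T, \theta) = E_\theta \big(T - \gamma(\theta)\big)^2,$$
so the risk is the mean squared error $\mathrm{MSE}_\theta(T)$.

Example 2. Absolute error loss function:
$$L(t, \theta) = \big|t - \gamma(\theta)\big|, \qquad R_T(\theta) = E_\theta \big|T - \gamma(\theta)\big|,$$
so the risk is the mean absolute deviation (error).

Example 3. For a given $c > 0$,
$$L(t, \theta) = \begin{cases} 1 & \text{if } |t - \gamma(\theta)| > c, \\ 0 & \text{if } |t - \gamma(\theta)| \le c, \end{cases} \qquad R_T(\theta) = E_\theta L(T, \theta) = P_\theta\big(|T - \gamma(\theta)| > c\big).$$

More Definitions
1. An estimator $T_1$ is at least as good as $T_2$ if $R_{T_1}(\theta) \le R_{T_2}(\theta)$ for all $\theta \in \Theta$.
2. An estimator $T_1$ is called better than $T_2$ if
   (a) $R_{T_1}(\theta) \le R_{T_2}(\theta)$ for all $\theta \in \Theta$, and
   (b) $R_{T_1}(\theta_0) < R_{T_2}(\theta_0)$ for some $\theta_0 \in \Theta$.
3. An estimator $T$ is called admissible if there does not exist an estimator that is better than $T$; $T$ is inadmissible if it is not admissible.

Example: Suppose $X_1, X_2$ are iid Poisson($\theta$). For estimating $\gamma(\theta) = \theta$ under the loss $L(t, \theta) = (t - \theta)^2$, consider the estimators $T_1 = \bar X_2 = (X_1 + X_2)/2$ and $T_2 = 10$. Then
$$R_{T_2}(\theta) = E_\theta (T_2 - \theta)^2 = (10 - \theta)^2,$$
$$R_{T_1}(\theta) = E_\theta (T_1 - \theta)^2 = \mathrm{MSE}_\theta(T_1) = \mathrm{Var}_\theta(\bar X_2) = \theta/2,$$
because $T_1$ is unbiased for $\theta$ and $\mathrm{Var}_\theta(X_i) = \theta$. Neither estimator is better than the other: $R_{T_2}(10) = 0 < 5 = R_{T_1}(10)$, while $R_{T_1}(1) = 1/2 < 81 = R_{T_2}(1)$.

Remark: If $T_1$ is inadmissible, then we can find an estimator $T$ that is better than $T_1$. Hence, we need only consider the set of admissible estimators.

Remark: In general, a “best” estimator, i.e., one at least as good as every other estimator, does not exist: the constant estimator $T \equiv \gamma(\theta_0)$ has zero risk at $\theta_0$, so a best estimator would need zero risk at every $\theta$. One may
• restrict the class of estimators (e.g., consider only unbiased estimators, UEs) and look for the best within the smaller class (e.g., the UMVUE); or
• define another optimality criterion (e.g., the Bayes principle or the minimax principle) for ordering the risk functions, and select the best estimator under the new criterion (Bayes estimators, minimax estimators).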
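The risk functions in the Poisson example can be checked numerically. The sketch below is one possible way to do it in Python, not a method from these notes: it computes $R_T(\theta) = E_\theta L(T, \theta)$ exactly (up to truncating an infinite sum) by summing over the distribution of $S = X_1 + X_2 \sim$ Poisson($2\theta$). It assumes the estimator depends on the data only through $S$, which holds for both $T_1$ and $T_2$. The helper names (`risk`, `squared_error`, `zero_one`, `better_on_grid`) and the truncation point are illustrative choices, and `better_on_grid` only checks the "better than" definition at finitely many $\theta$ values.

```python
import math
from typing import Callable, Iterable

Estimator = Callable[[int], float]        # maps S = X1 + X2 to an estimate
Loss = Callable[[float, float], float]    # L(t, theta)


def poisson_pmf(k: int, mean: float) -> float:
    """P(S = k) for S ~ Poisson(mean), mean > 0, computed in log space."""
    return math.exp(k * math.log(mean) - mean - math.lgamma(k + 1))


def risk(estimator: Estimator, loss: Loss, theta: float) -> float:
    """R_T(theta) = E_theta L(T, theta) with T = estimator(S) and
    S = X1 + X2 ~ Poisson(2 * theta). The infinite sum is truncated far
    into the upper tail (an assumed cutoff), where the remaining
    probability is negligible."""
    mean = 2.0 * theta
    kmax = int(mean + 20.0 * math.sqrt(mean) + 50)
    return sum(poisson_pmf(k, mean) * loss(estimator(k), theta)
               for k in range(kmax + 1))


def squared_error(t: float, theta: float) -> float:
    return (t - theta) ** 2                # Example 1 with gamma(theta) = theta


def absolute_error(t: float, theta: float) -> float:
    return abs(t - theta)                  # Example 2 with gamma(theta) = theta


def zero_one(c: float) -> Loss:
    """Loss of Example 3: 1 if |t - theta| > c, else 0."""
    return lambda t, theta: 1.0 if abs(t - theta) > c else 0.0


def better_on_grid(est_a: Estimator, est_b: Estimator, loss: Loss,
                   thetas: Iterable[float], tol: float = 1e-9) -> bool:
    """Definition 2 ('T_a is better than T_b') checked only at the grid
    points; a True result is not a proof for every theta in Theta."""
    pairs = [(risk(est_a, loss, th), risk(est_b, loss, th)) for th in thetas]
    never_worse = all(ra <= rb + tol for ra, rb in pairs)                  # (a)
    strictly_better_somewhere = any(ra < rb - tol for ra, rb in pairs)     # (b)
    return never_worse and strictly_better_somewhere


def t1(s: int) -> float:
    return s / 2      # sample mean of X1, X2


def t2(s: int) -> float:
    return 10.0       # constant estimator, ignores the data


for theta in (1.0, 5.0, 8.0, 10.0, 12.5, 20.0):
    print(f"theta={theta:5.1f}  R_T1={risk(t1, squared_error, theta):8.4f}"
          f"  R_T2={risk(t2, squared_error, theta):8.4f}")

grid = [0.5 * i for i in range(1, 61)]     # theta = 0.5, 1.0, ..., 30.0
print(better_on_grid(t1, t2, squared_error, grid),
      better_on_grid(t2, t1, squared_error, grid))
```

The printed values agree with $\theta/2$ and $(10 - \theta)^2$ up to rounding, and both `better_on_grid` calls return False, so on this grid neither estimator is better than the other. Solving $(10 - \theta)^2 < \theta/2$ shows that $T_2$ has the smaller risk exactly when $8 < \theta < 12.5$. Passing `absolute_error` or `zero_one(c)` instead of `squared_error` gives the risks under Examples 2 and 3.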