STAT 510 Homework 6 Solutions Spring 2016


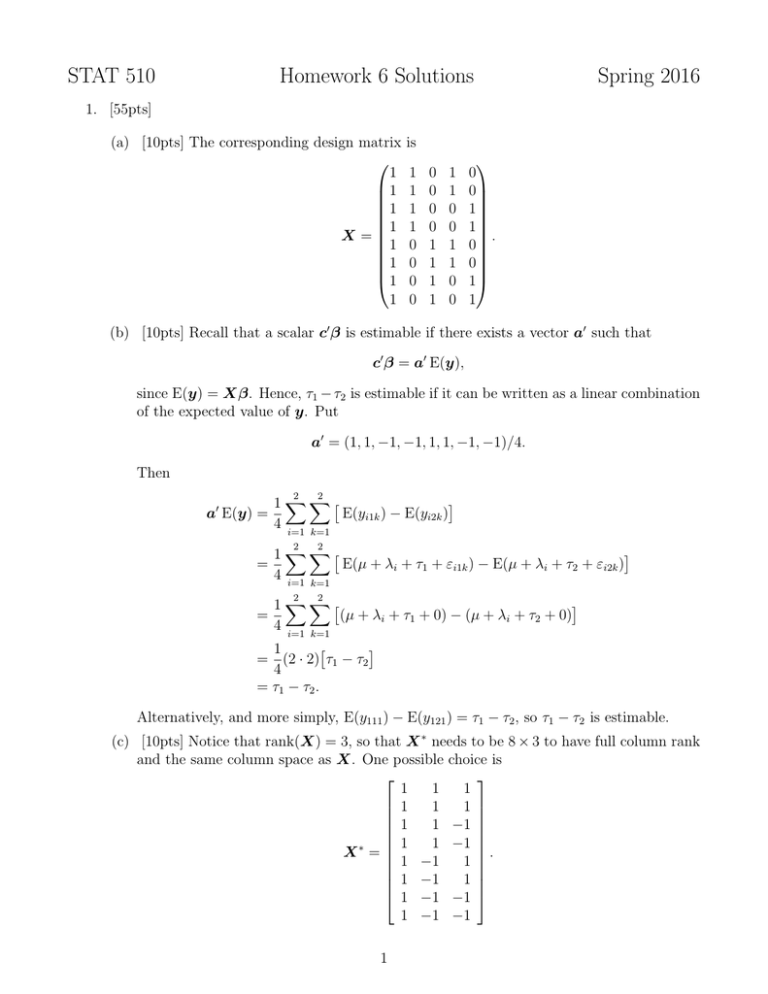

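1. [55pts]

(a) [10pts] With the observations ordered as $y = (y_{111}, y_{112}, y_{121}, y_{122}, y_{211}, y_{212}, y_{221}, y_{222})'$ and the columns corresponding to $\beta = (\mu, \lambda_1, \lambda_2, \tau_1, \tau_2)'$, the corresponding design matrix is

$$
X = \begin{bmatrix}
1 & 1 & 0 & 1 & 0 \\
1 & 1 & 0 & 1 & 0 \\
1 & 1 & 0 & 0 & 1 \\
1 & 1 & 0 & 0 & 1 \\
1 & 0 & 1 & 1 & 0 \\
1 & 0 & 1 & 1 & 0 \\
1 & 0 & 1 & 0 & 1 \\
1 & 0 & 1 & 0 & 1
\end{bmatrix}.
$$

(b) [10pts] Recall that a scalar $c'\beta$ is estimable if there exists a vector $a$ such that $c'\beta = a'E(y)$ for all $\beta$, where $E(y) = X\beta$. Hence $\tau_1 - \tau_2$ is estimable if it can be written as a linear combination of the elements of $E(y)$. Put $a' = (1, 1, -1, -1, 1, 1, -1, -1)/4$. Then

$$
\begin{aligned}
a'E(y) &= \frac{1}{4}\sum_{i=1}^{2}\sum_{k=1}^{2}\left[E(y_{i1k}) - E(y_{i2k})\right] \\
&= \frac{1}{4}\sum_{i=1}^{2}\sum_{k=1}^{2}\left[E(\mu + \lambda_i + \tau_1 + \varepsilon_{i1k}) - E(\mu + \lambda_i + \tau_2 + \varepsilon_{i2k})\right] \\
&= \frac{1}{4}\sum_{i=1}^{2}\sum_{k=1}^{2}\left[(\mu + \lambda_i + \tau_1 + 0) - (\mu + \lambda_i + \tau_2 + 0)\right] \\
&= \frac{1}{4}(2 \cdot 2)(\tau_1 - \tau_2) \\
&= \tau_1 - \tau_2.
\end{aligned}
$$

Alternatively, and more simply, $E(y_{111}) - E(y_{121}) = \tau_1 - \tau_2$, so $\tau_1 - \tau_2$ is estimable.

(c) [10pts] Notice that $\operatorname{rank}(X) = 3$ (columns 2 and 3 sum to column 1, as do columns 4 and 5, while columns 1, 2 and 4 are linearly independent), so $X^*$ needs to be $8 \times 3$ to have full column rank and the same column space as $X$. One possible choice is

$$
X^* = \begin{bmatrix}
1 & 1 & 1 \\
1 & 1 & 1 \\
1 & 1 & -1 \\
1 & 1 & -1 \\
1 & -1 & 1 \\
1 & -1 & 1 \\
1 & -1 & -1 \\
1 & -1 & -1
\end{bmatrix}.
$$

(d) [10pts] Let $\beta^* = (\beta_1^*, \beta_2^*, \beta_3^*)'$. Using $X^*$ as given in part (c), equating expected values ($X^*\beta^* = X\beta$) gives

$$
\begin{aligned}
\beta_1^* + \beta_2^* + \beta_3^* &= \mu + \lambda_1 + \tau_1, \\
\beta_1^* + \beta_2^* - \beta_3^* &= \mu + \lambda_1 + \tau_2, \\
\beta_1^* - \beta_2^* + \beta_3^* &= \mu + \lambda_2 + \tau_1, \\
\beta_1^* - \beta_2^* - \beta_3^* &= \mu + \lambda_2 + \tau_2.
\end{aligned}
$$

Adding all four equations, subtracting the last two from the first two, and subtracting the second from the first gives

$$
2\beta_1^* = 2\mu + \lambda_1 + \lambda_2 + \tau_1 + \tau_2, \qquad 2\beta_2^* = \lambda_1 - \lambda_2, \qquad 2\beta_3^* = \tau_1 - \tau_2,
$$

which implies

$$
\beta_1^* = \mu + \frac{\lambda_1 + \lambda_2}{2} + \frac{\tau_1 + \tau_2}{2}, \qquad \beta_2^* = \frac{\lambda_1 - \lambda_2}{2}, \qquad \beta_3^* = \frac{\tau_1 - \tau_2}{2}.
$$

(e) [15pts] Since $\tau_1 - \tau_2 = 2\beta_3^* = (0, 0, 2)\beta^*$,

$$
\begin{aligned}
\widehat{\tau_1 - \tau_2} &= (0, 0, 2)\,\hat\beta^*_{OLS} \\
&= (0, 0, 2)(X^{*\prime}X^*)^{-1}X^{*\prime}y \\
&= (0, 0, 2)\begin{bmatrix} 8 & 0 & 0 \\ 0 & 8 & 0 \\ 0 & 0 & 8 \end{bmatrix}^{-1} X^{*\prime}y \\
&= (0, 0, 2)\begin{bmatrix} 1/8 & 0 & 0 \\ 0 & 1/8 & 0 \\ 0 & 0 & 1/8 \end{bmatrix} X^{*\prime}y \\
&= (0, 0, 1/4)\begin{bmatrix}
1 & 1 & 1 & 1 & 1 & 1 & 1 & 1 \\
1 & 1 & 1 & 1 & -1 & -1 & -1 & -1 \\
1 & 1 & -1 & -1 & 1 & 1 & -1 & -1
\end{bmatrix} y \\
&= \frac{1}{4}(1, 1, -1, -1, 1, 1, -1, -1)\,(y_{111}, y_{112}, y_{121}, y_{122}, y_{211}, y_{212}, y_{221}, y_{222})' \\
&= \frac{1}{4}\sum_{i=1}^{2}\sum_{k=1}^{2}\left[y_{i1k} - y_{i2k}\right] \\
&= \bar{y}_{\cdot 1 \cdot} - \bar{y}_{\cdot 2 \cdot}.
\end{aligned}
$$

2. [15pts] By slide 51 of set 12, the BLUE of $\mu$ is a weighted average of independent linear unbiased estimators, where the weights are proportional to the inverse variances of those estimators.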
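As a numerical cross-check of this principle in the setting of Problem 2, the Python sketch below computes the BLUE coefficients two ways: by inverse-variance weighting of $\frac14\sum_{i=1}^4 y_i$ and $y_5$, and by generalized least squares on all five observations. It assumes the covariance structure used in this solution (variance 5 and covariance 1 among $y_1, \dots, y_4$, $\operatorname{Var}(y_5) = 4$, and $y_5$ uncorrelated with the others). The helper `solve` is a hypothetical exact Gauss-Jordan routine written only for this sketch.

```python
from fractions import Fraction


def solve(matrix, rhs):
    """Solve matrix @ x = rhs exactly via Gauss-Jordan elimination over Fractions."""
    n = len(matrix)
    # Augmented matrix [matrix | rhs] with exact rational entries.
    aug = [[Fraction(v) for v in row] + [Fraction(b)] for row, b in zip(matrix, rhs)]
    for col in range(n):
        # Swap a row with a nonzero entry in this column into the pivot position.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row so the pivot equals 1.
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n] for row in aug]


# Assumed covariance of (y1, ..., y5): 5 on the diagonal and 1 off the diagonal
# among y1..y4, Var(y5) = 4, and y5 uncorrelated with y1..y4.
V = [[5 if i == j else 1 for j in range(4)] + [0] for i in range(4)]
V.append([0, 0, 0, 0, 4])

# Route 1: GLS on all five observations, mu_hat = (1'V^{-1}1)^{-1} 1'V^{-1} y,
# so the coefficient on y_i is the i-th entry of V^{-1}1 divided by their sum.
w = solve(V, [1] * 5)
gls_coef = [wi / sum(w) for wi in w]

# Route 2: inverse-variance weighting of ybar = mean(y1..y4) and y5.
var_ybar = Fraction(sum(sum(row[:4]) for row in V[:4]), 16)  # 1'Sigma 1 / 16
var_y5 = Fraction(V[4][4])
prec_ybar, prec_y5 = 1 / var_ybar, 1 / var_y5
total = prec_ybar + prec_y5
iv_coef = [prec_ybar / total / 4] * 4 + [prec_y5 / total]

print(var_ybar, gls_coef == iv_coef)  # expected: 2 True
print([str(c) for c in iv_coef])      # expected: ['1/6', '1/6', '1/6', '1/6', '1/3']
```

Exact `Fraction` arithmetic is used so that the coefficients $1/6$ and $1/3$ can be compared without any floating-point tolerance.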
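We can divide $y$ into two subsets that each have homogeneous variances by considering $y_5$ separately from $y_1, \dots, y_4$. By the hint given, the BLUE of $\mu$ based only on $y_1, \dots, y_4$ is $\frac{1}{4}\sum_{i=1}^{4} y_i$. Clearly, the BLUE of $\mu$ based only on $y_5$ is $y_5$ itself. These two estimators are independent, with variances

$$
\begin{aligned}
\operatorname{Var}\left(\frac{1}{4}\sum_{i=1}^{4} y_i\right) &= \operatorname{Var}\left(\frac{1}{4}\mathbf{1}_{4\times 1}'(y_1, y_2, y_3, y_4)'\right) \\
&= \frac{1}{16}\mathbf{1}_{4\times 1}'\operatorname{Var}\left((y_1, y_2, y_3, y_4)'\right)\mathbf{1}_{4\times 1} \\
&= \frac{1}{16}\mathbf{1}_{4\times 1}'\begin{bmatrix} 5 & 1 & 1 & 1 \\ 1 & 5 & 1 & 1 \\ 1 & 1 & 5 & 1 \\ 1 & 1 & 1 & 5 \end{bmatrix}\mathbf{1}_{4\times 1} \\
&= \frac{32}{16} = 2, \\
\operatorname{Var}(y_5) &= 4.
\end{aligned}
$$

Then

$$
\begin{aligned}
\hat\mu_{BLUE} &= \frac{\dfrac{1}{\operatorname{Var}\left(\frac14\sum_{i=1}^4 y_i\right)}\cdot\dfrac14\sum_{i=1}^4 y_i + \dfrac{1}{\operatorname{Var}(y_5)}\, y_5}{\dfrac{1}{\operatorname{Var}\left(\frac14\sum_{i=1}^4 y_i\right)} + \dfrac{1}{\operatorname{Var}(y_5)}} \\
&= \frac{\frac12\cdot\frac14\sum_{i=1}^4 y_i + \frac14 y_5}{\frac12 + \frac14} \\
&= \frac16\sum_{i=1}^4 y_i + \frac13 y_5.
\end{aligned}
$$

3. [30pts]

(a) [15pts]

$$
\begin{aligned}
E\left(MS_{ou(xu,trt)}\right) &= \frac{1}{df_{ou(xu,trt)}}E\left(SS_{ou(xu,trt)}\right) \\
&= \frac{1}{tnm - tn}E\left(\sum_{i=1}^t\sum_{j=1}^n\sum_{k=1}^m (y_{ijk} - \bar y_{ij\cdot})^2\right) \\
&= \frac{1}{tnm - tn}\sum_{i=1}^t\sum_{j=1}^n\sum_{k=1}^m E\left(\left([\mu + \tau_i + u_{ij} + e_{ijk}] - [\mu + \tau_i + u_{ij} + \bar e_{ij\cdot}]\right)^2\right) \\
&= \frac{1}{tnm - tn}\sum_{i=1}^t\sum_{j=1}^n E\left(\sum_{k=1}^m (e_{ijk} - \bar e_{ij\cdot})^2\right) \\
&= \frac{1}{tnm - tn}\sum_{i=1}^t\sum_{j=1}^n (m-1)\sigma_e^2 \qquad \text{since } e_{ijk} \overset{iid}{\sim} N(0, \sigma_e^2) \\
&= \frac{1}{tnm - tn}\, tn(m-1)\sigma_e^2 \\
&= \sigma_e^2.
\end{aligned}
$$

The fifth line holds because $\sum_{k=1}^m (e_{ijk} - \bar e_{ij\cdot})^2$ is $m - 1$ times the sample variance of $m$ iid $N(0, \sigma_e^2)$ errors, and the sample variance is unbiased for $\sigma_e^2$.

(b) [15pts] From slide 6, the sum of squares can be written as $y'(I - P_3)y$, where

$$
\begin{aligned}
P_3 &= [I_{tn\times tn}\otimes \mathbf{1}_{m\times 1}]\left([I_{tn\times tn}\otimes \mathbf{1}_{m\times 1}]'[I_{tn\times tn}\otimes \mathbf{1}_{m\times 1}]\right)^{-1}[I_{tn\times tn}\otimes \mathbf{1}_{m\times 1}]' \\
&= [I_{tn\times tn}\otimes \mathbf{1}_{m\times 1}]\left(m I_{tn\times tn}\right)^{-1}[I_{tn\times tn}\otimes \mathbf{1}_{m\times 1}]' \\
&= \frac{1}{m} I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}.
\end{aligned}
$$

Let

$$
A = \frac{I - P_3}{tnm - tn} = \frac{I_{tnm\times tnm} - \frac{1}{m} I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}}{tn(m-1)}.
$$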
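The simplification of $P_3$ can be checked numerically for small sizes. The sketch below is plain Python with exact fractions; the sizes $t = 2$, $n = 3$, $m = 4$ are arbitrary, and the helpers `kron`, `matmul`, `transpose` and `identity` are written here for illustration only. It also confirms that $P_3$ is idempotent and that $\operatorname{tr}(I - P_3) = tn(m-1)$, the degrees of freedom in the denominator of $A$.

```python
from fractions import Fraction


def kron(a, b):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]


def matmul(a, b):
    """Matrix product of two list-of-rows matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def identity(k):
    return [[1 if i == j else 0 for j in range(k)] for i in range(k)]


t, n, m = 2, 3, 4                    # small, arbitrary sizes for illustration
tn = t * n
Z = kron(identity(tn), [[1]] * m)    # I_tn ⊗ 1_{m x 1}, a (tnm x tn) matrix

# Z'Z = m I_tn, so (Z'Z)^{-1} = I_tn / m.
ZtZ = matmul(transpose(Z), Z)
assert ZtZ == [[m * v for v in row] for row in identity(tn)]
ZtZ_inv = [[Fraction(v, m) for v in row] for row in identity(tn)]

# P3 from the projection formula versus the closed form (1/m) I_tn ⊗ 11'_{m x m}.
P3 = matmul(matmul(Z, ZtZ_inv), transpose(Z))
J = [[1] * m for _ in range(m)]
closed_form = [[Fraction(v, m) for v in row] for row in kron(identity(tn), J)]

df = sum(1 - P3[i][i] for i in range(t * n * m))    # tr(I - P3)
print(P3 == closed_form, matmul(P3, P3) == P3, df)  # expected: True True 18
```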
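Then, with $\Sigma = \operatorname{Var}(y) = \sigma_u^2 I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m} + \sigma_e^2 I_{tnm\times tnm}$ and by linearity of trace,

$$
\begin{aligned}
\operatorname{tr}(A\Sigma) &= \operatorname{tr}\left(\frac{I_{tnm\times tnm} - \frac1m I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}}{tn(m-1)}\left[\sigma_u^2 I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m} + \sigma_e^2 I_{tnm\times tnm}\right]\right) \\
&= \frac{1}{tn(m-1)}\operatorname{tr}\Big(I_{tnm\times tnm}\,\sigma_u^2(I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}) + I_{tnm\times tnm}\,\sigma_e^2 I_{tnm\times tnm} \\
&\qquad - \frac1m(I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m})(\sigma_u^2 I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}) - \frac1m(I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m})\,\sigma_e^2 I_{tnm\times tnm}\Big) \\
&= \frac{1}{tn(m-1)}\operatorname{tr}\Big(\sigma_u^2 I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m} + \sigma_e^2 I_{tnm\times tnm} \\
&\qquad - \frac{\sigma_u^2}{m}(I_{tn\times tn}I_{tn\times tn})\otimes(\mathbf{1}\mathbf{1}'_{m\times m}\mathbf{1}\mathbf{1}'_{m\times m}) - \frac{\sigma_e^2}{m} I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}\Big) \\
&= \frac{1}{tn(m-1)}\operatorname{tr}\Big(\sigma_u^2 I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m} + \sigma_e^2 I_{tnm\times tnm} \\
&\qquad - \frac{\sigma_u^2}{m} I_{tn\times tn}\otimes m\mathbf{1}\mathbf{1}'_{m\times m} - \frac{\sigma_e^2}{m} I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}\Big) \qquad \text{since } \mathbf{1}'_{m\times 1}\mathbf{1}_{m\times 1} = m \\
&= \frac{1}{tn(m-1)}\Big[\sigma_u^2\operatorname{tr}(I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}) + \sigma_e^2\operatorname{tr}(I_{tnm\times tnm}) \\
&\qquad - \sigma_u^2\operatorname{tr}(I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m}) - \frac{\sigma_e^2}{m}\operatorname{tr}(I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m})\Big] \\
&= \frac{1}{tn(m-1)}\Big[\sigma_e^2\operatorname{tr}(I_{tnm\times tnm}) - \frac{\sigma_e^2}{m}\operatorname{tr}(I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m})\Big] \\
&= \frac{1}{tn(m-1)}\Big[\sigma_e^2(tnm) - \frac{\sigma_e^2}{m}(tnm)\Big] \qquad \text{since } I_{tnm\times tnm} \text{ and } I_{tn\times tn}\otimes \mathbf{1}\mathbf{1}'_{m\times m} \text{ have 1's on the diagonal} \\
&= \sigma_e^2,
\end{aligned}
$$

and

$$
\begin{aligned}
E(y)'A\,E(y) &= E(y)'\,\frac{I_{tnm\times tnm} - \frac1m I_{tn\times tn}\otimes\mathbf{1}\mathbf{1}'_{m\times m}}{tn(m-1)}\,E(y) \\
&= \frac{1}{tn(m-1)}\left[E(y)'I_{tnm\times tnm}E(y) - \frac1m E(y)'(I_{tn\times tn}\otimes\mathbf{1}\mathbf{1}'_{m\times m})E(y)\right] \\
&= \frac{1}{tn(m-1)}\left[E(y)'E(y) - \frac1m\, m\, E(y)'E(y)\right] \\
&= 0.
\end{aligned}
$$

The third line uses $(I_{tn\times tn}\otimes\mathbf{1}\mathbf{1}'_{m\times m})E(y) = m\,E(y)$: because $E(y_{ijk}) = \mu + \tau_i$ does not depend on $k$, each block of $m$ consecutive entries of $E(y)$ is constant, and multiplying such a block by $\mathbf{1}\mathbf{1}'_{m\times m}$ replaces every entry by the block sum, that is, by $m$ times the common value.

Now, by slide 19 of set 12,

$$
E\left(MS_{ou(xu,trt)}\right) = E\left(y'\frac{I - P_3}{tnm - tn}\,y\right) = E(y'Ay) = \operatorname{tr}(A\Sigma) + E(y)'A\,E(y) = \sigma_e^2 + 0 = \sigma_e^2,
$$

which is the same result as in part (a).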
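The identity $E(y'Ay) = \operatorname{tr}(A\Sigma) + E(y)'A\,E(y)$ can also be evaluated exactly for a small example. The sketch below assumes arbitrary values ($t = 2$, $n = 2$, $m = 3$, $\mu = 10$, $\tau = (1, -1)$, $\sigma_u^2 = 5$, $\sigma_e^2 = 2$) that are not part of the assignment. It builds $\Sigma$, $A$ and $E(y)$ as defined in part (b) and should return $\sigma_e^2$ whatever values are chosen for $\mu$, $\tau$ and $\sigma_u^2$.

```python
from fractions import Fraction


def kron(a, b):
    """Kronecker product of two matrices stored as lists of rows."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]


def identity(k):
    return [[1 if i == j else 0 for j in range(k)] for i in range(k)]


# Arbitrary illustrative values (not from the assignment).
t, n, m = 2, 2, 3
mu, tau = Fraction(10), [Fraction(1), Fraction(-1)]
sigma_u2, sigma_e2 = Fraction(5), Fraction(2)

N, tn = t * n * m, t * n
I_N = identity(N)
IJ = kron(identity(tn), [[1] * m for _ in range(m)])  # I_tn ⊗ 11'_{m x m}

# Sigma = sigma_u^2 (I_tn ⊗ 11') + sigma_e^2 I_tnm
Sigma = [[sigma_u2 * IJ[r][c] + sigma_e2 * I_N[r][c] for c in range(N)] for r in range(N)]
# A = (I - P3) / (tn(m - 1)) with P3 = (1/m) I_tn ⊗ 11'
A = [[(I_N[r][c] - Fraction(IJ[r][c], m)) / (tn * (m - 1)) for c in range(N)]
     for r in range(N)]
# E(y_ijk) = mu + tau_i, stacked with k varying fastest, then j, then i.
Ey = [mu + tau[i] for i in range(t) for _ in range(n) for _ in range(m)]

trace_term = sum(A[r][c] * Sigma[c][r] for r in range(N) for c in range(N))  # tr(A Sigma)
quad_term = sum(Ey[r] * A[r][c] * Ey[c] for r in range(N) for c in range(N))  # E(y)'A E(y)
print(trace_term, quad_term, trace_term + quad_term)  # expected: 2 0 2
```

Changing `mu`, `tau` or `sigma_u2` leaves the printed result unchanged, which mirrors the cancellation of the $\sigma_u^2$ terms and the vanishing quadratic form in the derivation.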