Utah State University Department of Instructional Technology Needs Assessment

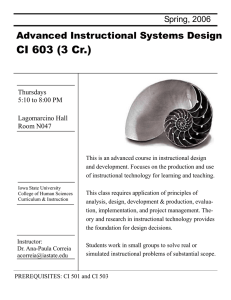
