Periodic Array

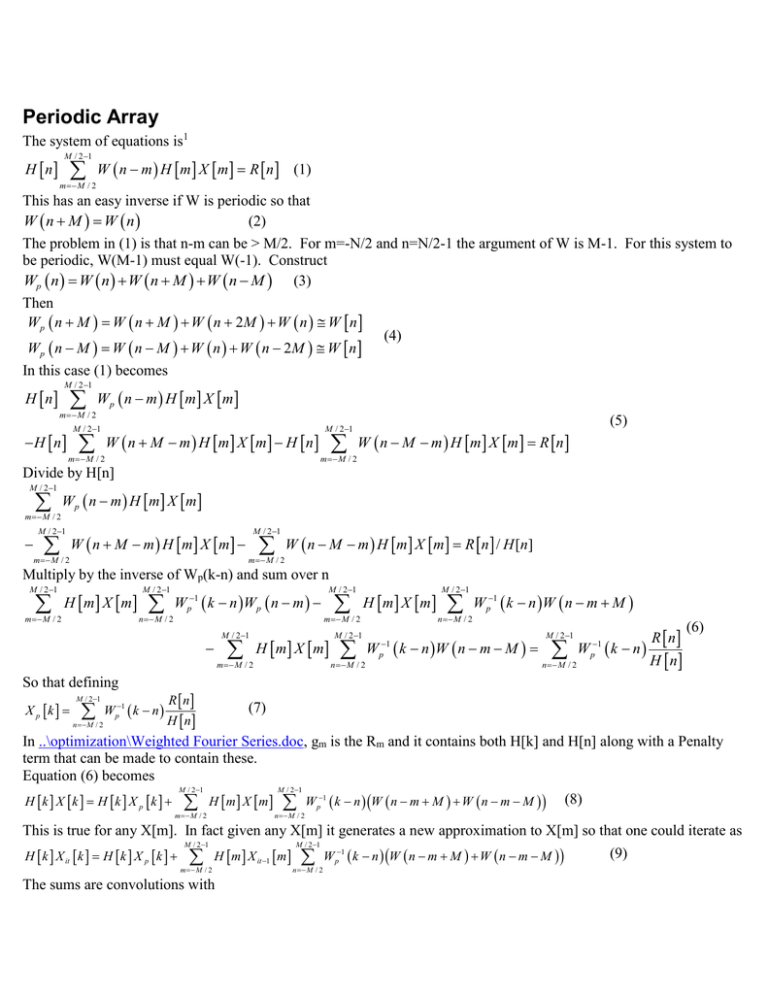

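The system of equations is¹

$$H[n] \sum_{m=-M/2}^{M/2-1} W[n-m]\, H[m]\, X[m] = R[n] \tag{1}$$

This has an easy inverse if $W$ is periodic, so that

$$W[n+M] = W[n] \tag{2}$$

The problem in (1) is that $n-m$ can be larger than $M/2$. For $m=-M/2$ and $n=M/2-1$ the argument of $W$ is $M-1$. For this system to be periodic, $W(M-1)$ must equal $W(-1)$. Construct

$$W_p[n] = W[n] + W[n+M] + W[n-M] \tag{3}$$

Then

$$\begin{aligned} W_p[n+M] &= W[n+M] + W[n+2M] + W[n] \approx W[n] \\ W_p[n-M] &= W[n-M] + W[n] + W[n-2M] \approx W[n] \end{aligned} \tag{4}$$

In this case (1) becomes

$$H[n] \sum_{m=-M/2}^{M/2-1} W_p[n-m]\, H[m]\, X[m] - H[n] \sum_{m=-M/2}^{M/2-1} W[n+M-m]\, H[m]\, X[m] - H[n] \sum_{m=-M/2}^{M/2-1} W[n-M-m]\, H[m]\, X[m] = R[n] \tag{5}$$

Divide by $H[n]$:

$$\sum_{m=-M/2}^{M/2-1} W_p[n-m]\, H[m]\, X[m] - \sum_{m=-M/2}^{M/2-1} W[n+M-m]\, H[m]\, X[m] - \sum_{m=-M/2}^{M/2-1} W[n-M-m]\, H[m]\, X[m] = \frac{R[n]}{H[n]}$$

Multiply by the inverse $W_p^{-1}[k-n]$ and sum over $n$:

$$\sum_{m=-M/2}^{M/2-1} H[m] X[m] \sum_{n=-M/2}^{M/2-1} W_p^{-1}[k-n]\, W_p[n-m] - \sum_{m=-M/2}^{M/2-1} H[m] X[m] \sum_{n=-M/2}^{M/2-1} W_p^{-1}[k-n]\, W[n-m+M] - \sum_{m=-M/2}^{M/2-1} H[m] X[m] \sum_{n=-M/2}^{M/2-1} W_p^{-1}[k-n]\, W[n-m-M] = \sum_{n=-M/2}^{M/2-1} W_p^{-1}[k-n]\, \frac{R[n]}{H[n]} \tag{6}$$

Because $\sum_n W_p^{-1}[k-n]\, W_p[n-m] = \delta_{km}$, the first term reduces to $H[k] X[k]$. So that, defining $X_p[k]$ through

$$H[k]\, X_p[k] = \sum_{n=-M/2}^{M/2-1} W_p^{-1}[k-n]\, \frac{R[n]}{H[n]} \tag{7}$$

In ..\optimization\Weighted Fourier Series.doc, $g_m$ plays the role of $R_m$; it contains both $H[k]$ and $H[n]$, along with a penalty term that can be made to contain these.

Equation (6) becomes

$$H[k] X[k] = H[k] X_p[k] + \sum_{m=-M/2}^{M/2-1} H[m] X[m] \sum_{n=-M/2}^{M/2-1} W_p^{-1}[k-n] \left( W[n-m+M] + W[n-m-M] \right) \tag{8}$$

This holds for any $X[m]$ that satisfies (1). Moreover, given any trial $X[m]$, the right-hand side generates a new approximation to $X[m]$, so one could iterate as

$$H[k] X_{it}[k] = H[k] X_p[k] + \sum_{m=-M/2}^{M/2-1} H[m] X_{it-1}[m] \sum_{n=-M/2}^{M/2-1} W_p^{-1}[k-n] \left( W[n-m+M] + W[n-m-M] \right) \tag{9}$$

The sums are convolutions. With

$$w_s[t_i] = \sum_{m=-M/2}^{M/2-1} \left( W[m+M] + W[m-M] \right) \exp(j 2\pi f_m t_i), \qquad t_i = \frac{T i}{M}, \quad f_m = \frac{m}{T} \tag{10}$$

and

$$hx_{it-1}[t_i] = \sum_{m=-M/2}^{M/2-1} H[m]\, X_{it-1}[m] \exp(j 2\pi f_m t_i), \qquad t_i = \frac{T i}{M}, \quad f_m = \frac{m}{T} \tag{11}$$

Note that time is defined in terms of $T/M$, not $T/N$.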
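The splitting in (3) through (9) can be tried numerically on a small case. The following is a minimal sketch under stated assumptions, not the author's implementation: indices run over 0..M-1 modulo M rather than -M/2..M/2-1, `y[m]` stands for the product H[m]X[m], `b[n]` stands for R[n]/H[n], the kernel `W` is a hypothetical test function that vanishes for lags of magnitude M or more, and a naive DFT stands in for an FFT to keep the example dependency-free. The wrap-around sum is evaluated directly here instead of through the transforms in (10) and (11).

```python
import cmath

def dft(x, sign=-1):
    """Naive O(M^2) DFT; sign=+1 gives the unnormalised inverse transform."""
    M = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * k * m / M) for m in range(M))
            for k in range(M)]

def solve_periodic(kernel, b):
    """Solve sum_m kernel[(n-m) mod M] * y[m] = b[n] by division in the DFT domain."""
    M = len(b)
    K, B = dft(kernel), dft(b)
    Y = [B[k] / K[k] for k in range(M)]
    return [v / M for v in dft(Y, sign=+1)]

def iterate_toeplitz(W, b, n_iter=50):
    """Solve sum_m W(n-m) y[m] = b[n] using the periodic splitting of (3)-(9)."""
    M = len(b)
    # Periodic kernel of eq. (3), tabulated for lags 0..M-1.
    Wp = [W(l) + W(l + M) + W(l - M) for l in range(M)]
    # Periodic-only solution, the analogue of H[k] X_p[k] in eq. (7).
    y_p = solve_periodic(Wp, b)
    y = list(y_p)
    for _ in range(n_iter):
        # Wrap-around terms W(n-m+M) + W(n-m-M) applied to the previous iterate.
        wrap = [sum((W(n - m + M) + W(n - m - M)) * y[m] for m in range(M))
                for n in range(M)]
        # Apply the periodic inverse and add to the periodic solution, eq. (9).
        corr = solve_periodic(Wp, wrap)
        y = [p + c for p, c in zip(y_p, corr)]
    return y

M = 8

def W(l):
    # Hypothetical test kernel: fast decay, zero for |l| >= M.
    return 0.2 ** abs(l) if abs(l) < M else 0.0

y_true = [complex(m - 3, 0.5 * m) for m in range(M)]
# Right-hand side of the non-periodic (Toeplitz) system.
b = [sum(W(n - m) * y_true[m] for m in range(M)) for n in range(M)]
y = iterate_toeplitz(W, b)
max_err = max(abs(u - v) for u, v in zip(y, y_true))
```

The iteration converges in this example because the wrap-around terms are small compared with the periodic kernel; a slowly decaying kernel may not satisfy that condition.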
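The convolution over $m$ in (9) is then evaluated as

$$\frac{1}{T} \sum_{m=-M/2}^{M/2-1} H[m]\, X_{it-1}[m] \left( W[n-m+M] + W[n-m-M] \right) = \frac{T}{M} \sum_{i=-M/2}^{M/2-1} hx_{it-1}[t_i]\, w_s[t_i] \exp(-j 2\pi f_n t_i) \equiv HW_sX_{it-1}[f_n] \tag{12}$$

Then with

$$w_p^{-1}[t_i] = \sum_{m=-M/2}^{M/2-1} W_p^{-1}[m] \exp(j 2\pi f_m t_i) \tag{13}$$

$$\frac{1}{T} \sum_{n=-M/2}^{M/2-1} W_p^{-1}[k-n]\, HW_sX_{it-1}[n] = \frac{T}{M} \sum_{i=-M/2}^{M/2-1} hw_sx_{it-1}[t_i]\, w_p^{-1}[t_i] \exp(-j 2\pi f_k t_i) \tag{14}$$

where $hw_sx_{it-1}[t_i]$ is the transform of $HW_sX_{it-1}[n]$, formed in the same way as (11).

The FFTs in (10) and (13) need to be made once for every new choice of $M$. The FFTs in (11) and (14) need to be made for every iteration. Since this starts from (5), which is merely a restatement of (1), any $X[m]$ can be used as the first iteration. Finally

$$H[k] X_{it}[k] = H[k] X_p[k] + HW_sX_{it-1}W_p^{-1}[k] \tag{15}$$

where $HW_sX_{it-1}W_p^{-1}[k]$ denotes the double sum in (9), evaluated through (12) and (14).

Error in the solution

The set of equations (1) arises from minimizing $\chi^2$. $R[n]$ is the derivative of $\chi^2$ with respect to the $n$'th coefficient. In a first-order expansion

$$\chi^2 = \chi_0^2 + \sum_{n=-M/2}^{M/2-1} \left( D_n - D_{n,0} \right) R[n] \tag{1}$$

Assume that the error in the final steps is dominated by the error in solving for $D_n - D_{n,0}$ rather than by truncation of the expansion in (1). After solving for $X[n] = D_n - D_{n,0}$,

$$H[n] \sum_{m=-M/2}^{M/2-1} W[n-m]\, H[m]\, X[m] - R[n] = \epsilon_n \tag{2}$$

So that after the final step

$$\chi^2 = \chi_0^2 + \sum_{n=-M/2}^{M/2} \ldots$$

Mixing

The natural way to solve (8) is to start with the first term and then iterate. But the equation set in (1) can be solved directly if all but the first $L$ terms are taken to be zero. Thus it makes sense to include $X_b[k]$ in the solution. Find $a_1$ to $a_K$ by minimizing

$$\chi^2_{err} = \sum_{n=-M/2}^{M/2-1} \epsilon_n(a)\, \epsilon_n^*(a) \tag{1}$$

$$H[n] \sum_{m=-M/2}^{M/2-1} W[n-m]\, H[m] \sum_{k=1}^{K} a_k X_k[m] - R[n] = \epsilon_n(a) \tag{2}$$

Each sum over $m$ is a convolution

$$\frac{1}{T} \sum_{m=-M/2}^{M/2-1} W[n-m]\, H[m]\, X_k[m] = \frac{T}{M} \sum_{i=-M/2}^{M/2-1} w[t_i]\, hx_k[t_i] \exp(-j 2\pi f_n t_i) \tag{3}$$

So that, writing $(W * HX_k)[n]$ for the quantity in (3),

$$T\, H[n] \sum_{k=1}^{K} a_k\, (W * HX_k)[n] - R[n] = \epsilon_n(a) \tag{4}$$

Then

$$\frac{\partial \chi^2_{err}}{\partial a_{k'}} = \sum_{n=-M/2}^{M/2-1} \frac{\partial \epsilon_n(a)}{\partial a_{k'}}\, \epsilon_n^*(a) + \text{c.c.} \tag{5}$$

And

$$\frac{\partial^2 \chi^2_{err}}{\partial a_{k'}\, \partial a_{k''}} = \sum_{n=-M/2}^{M/2-1} \frac{\partial \epsilon_n(a)}{\partial a_{k'}}\, \frac{\partial \epsilon_n^*(a)}{\partial a_{k''}} + \text{c.c.} \tag{6}$$

There are $K^2$ terms in (6). Because $\epsilon_n(a)$ is linear in $a$, $\chi^2_{err}$ is quadratic, and the equation to solve, with the first derivative evaluated at $a = 0$, is

$$-\frac{\partial \chi^2_{err}}{\partial a_k} = \sum_{k'=1}^{K} \frac{\partial^2 \chi^2_{err}}{\partial a_k\, \partial a_{k'}}\, a_{k'} \tag{7}$$

The matrix is symmetric and positive definite, so a Cholesky factorization is appropriate. For very large arrays $M \sim 10^{3}$ to $10^{6}$, so $K \sim 10^{2}$ is appropriate.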
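The mixing step in (1) through (7) amounts to a small least-squares problem for the coefficients. The sketch below assumes real coefficients `a_k` and assumes the convolved candidates have already been formed, so that `columns[k][n]` holds H[n] times the sum over m of W[n-m] H[m] X_k[m]; the function names and the test vectors are hypothetical. The common factor of 2 from the c.c. terms cancels on both sides of (7), so the normal equations are written without it.

```python
import math

def cholesky(G):
    """Lower-triangular L with L L^T = G for a symmetric positive definite G."""
    K = len(G)
    L = [[0.0] * K for _ in range(K)]
    for i in range(K):
        for j in range(i + 1):
            s = G[i][j] - sum(L[i][p] * L[j][p] for p in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L

def cholesky_solve(G, g):
    """Solve G a = g by forward then backward substitution on the Cholesky factor."""
    L = cholesky(G)
    K = len(g)
    z = [0.0] * K
    for i in range(K):
        z[i] = (g[i] - sum(L[i][p] * z[p] for p in range(i))) / L[i][i]
    a = [0.0] * K
    for i in reversed(range(K)):
        a[i] = (z[i] - sum(L[p][i] * a[p] for p in range(i + 1, K))) / L[i][i]
    return a

def mixing_coefficients(columns, R):
    """Real a_k minimising sum_n |sum_k a_k columns[k][n] - R[n]|^2."""
    N = len(R)
    # Matrix of second derivatives, eq. (6), up to the common factor of 2.
    G = [[sum((ck[n].conjugate() * cj[n]).real for n in range(N)) for cj in columns]
         for ck in columns]
    # Minus the first derivatives at a = 0, eq. (5), up to the same factor.
    g = [sum((ck[n].conjugate() * R[n]).real for n in range(N)) for ck in columns]
    return cholesky_solve(G, g)

# Two hypothetical candidate columns and a right-hand side built from them.
c0 = [1 + 1j, 2 + 0j, 0.5j, -1 + 0j]
c1 = [0j, 1 - 1j, 3 + 0j, 2j]
R = [2.0 * u - 0.5 * v for u, v in zip(c0, c1)]
a = mixing_coefficients([c0, c1], R)
```

Here `a` recovers the weights used to build `R`, since the right-hand side lies exactly in the span of the two candidates. The matrix is positive definite only when the candidate columns are linearly independent.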
In practice this allows us to start with $X_p$, $X_{\text{tridiagonal}}$, and $X_{\text{short band}}$. These are combined using (7) to give the best starting array, which is then used in (15) to generate a new $X$ that can be added to the mixture.

¹ This system of equations usually occurs as part of a Newton-Raphson iteration. In this case $R$ is the set of residuals, which is constantly getting smaller. These interact somewhat with the approximation of $X$ below, causing one set to want a band-like solution and the next set to want a periodic solution. Also, since $R$ is in principle going to zero, highly accurate solutions at each step are not needed.