11: STEEPEST DESCENT AND CONJUGATE GRADIENT


Math 639

(updated: January 2, 2012)

Suppose that $A$ is a SPD $n \times n$ real matrix and, as usual, we consider iteratively solving $Ax = b$. By now, you should understand that the goal of any iterative method is to drive down (a norm of) the error $e_i = x - x_i$ as rapidly as possible. We consider a method of the following form:

$$x_{i+1} = x_i + \alpha_i p_i.$$

Here $p_i \in \mathbb{R}^n$ is a "search direction" while $\alpha_i$ is a real number which we are free to choose. It is immediate (subtract this equation from $x = x$) that

$$e_{i+1} = e_i - \alpha_i p_i. \tag{11.1}$$

We denote the "residual" by $r_i = b - Ax_i$. A simple manipulation shows that

$$r_i = Ae_i \quad\text{and}\quad r_{i+1} = r_i - \alpha_i A p_i.$$

The first method that we shall develop takes $p_i$ to be the residual $r_i$. The idea is then to try to find the best possible choice for $\alpha_i$. Ideally, we should choose $\alpha_i$ so that it results in the maximum error reduction, i.e., $\|e_{i+1}\|$ should be as small as possible. For arbitrary norms, this goal is not a viable one. The problem is that we cannot assume that we know $e_i$ at any step of the iteration. Indeed, since we always have $x_i$ available, knowing $e_i$ is tantamount to knowing the solution since $x = x_i + e_i$.

It is instructive to see what happens with the wrong choice of norm. Suppose that we attempt to choose $\alpha_i$ so that $\|e_{i+1}\|_{\ell^2}$ is minimal, i.e.,

$$\|e_{i+1}\|_{\ell^2} = \min_{\alpha \in \mathbb{R}} \|e_i - \alpha r_i\|_{\ell^2}.$$

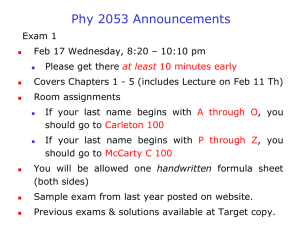

The above problem can be solved geometrically and its solution is illustrated in Figure 1. Clearly, $\alpha_i$ should be chosen so that the error $e_{i+1}$ is orthogonal to $r_i$, i.e.,

$$(e_{i+1}, r_i) = 0.$$

Here $(v, w) \equiv v \cdot w$ denotes the dot inner product on $\mathbb{R}^n$. A simple algebraic manipulation using the properties of the inner product and (11.1) gives

$$(e_i - \alpha_i r_i, r_i) = 0 \quad\text{or}\quad \alpha_i = \frac{(e_i, r_i)}{(r_i, r_i)}. \tag{11.2}$$

Of course, this method is not computable: we do not know $e_i$, so the numerator in the definition of $\alpha_i$ in (11.2) is not available.

Figure 1. Minimal error. (The figure shows the vectors $e_i$, $e_{i+1}$, and $\alpha_i r_i$, with $e_{i+1}$ orthogonal to $\alpha_i r_i$.)

We can fix up the above method by introducing a different norm or, more precisely, a different inner product. Recall from earlier classes that inner products not only provide norms but also give rise to a (different) notion of angle. We shall get a computable algorithm by replacing the dot inner product above with the $A$-inner product $(v, w)_A \equiv (Av, w)$, i.e., we choose $\alpha_i$ so that

$$\|e_{i+1}\|_A = \min_{\alpha \in \mathbb{R}} \|e_i - \alpha r_i\|_A. \tag{11.3}$$

The solution of this problem is to make $e_{i+1}$ $A$-orthogonal to $r_i$, i.e.,

$$(e_{i+1}, r_i)_A = 0.$$

Repeating the above computations (but with the $A$-inner product) gives

$$(e_i - \alpha_i r_i, r_i)_A = 0 \quad\text{or}\quad \alpha_i = \frac{(e_i, r_i)_A}{(r_i, r_i)_A}, \quad\text{i.e.,}\quad \alpha_i = \frac{(Ae_i, r_i)}{(Ar_i, r_i)} = \frac{(r_i, r_i)}{(Ar_i, r_i)}. \tag{11.4}$$

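We have now obtained a computable method. Clearly, the residual $r_i = b - Ax_i$ and $\alpha_i$ are computable without explicitly knowing $x$ or $e_i$. We can easily check that this choice of $\alpha_i$ solves (11.3). Indeed, writing $e_{i+1} = e_i - \alpha_i r_i = (e_i - \alpha r_i) + (\alpha - \alpha_i) r_i$ and using $A$-orthogonality and the Schwarz inequality,

$$\begin{aligned} \|e_{i+1}\|_A^2 = (e_{i+1}, e_{i+1})_A &= (e_{i+1}, e_i - \alpha r_i + (\alpha - \alpha_i) r_i)_A \\ &= (e_{i+1}, e_i - \alpha r_i)_A \le \|e_{i+1}\|_A \, \|e_i - \alpha r_i\|_A \end{aligned} \tag{11.5}$$

holds for any $\alpha \in \mathbb{R}$. Clearly if $\|e_{i+1}\|_A = 0$ then (11.3) holds. Otherwise, (11.3) follows by dividing (11.5) by $\|e_{i+1}\|_A$.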
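The minimality property (11.3) can also be checked numerically. The following is a minimal sketch in plain Python and is not part of the original notes: the helper names `matvec`, `dot`, `a_norm_sq`, and `sd_step_length`, the 2 × 2 test matrix, and the chosen exact solution are all assumptions made for illustration. The exact solution is used only to inspect the error; the step length itself is computed from the residual alone.

```python
def matvec(A, v):
    """Return the product A v for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(v, w):
    """Dot inner product (v, w)."""
    return sum(x * y for x, y in zip(v, w))


def a_norm_sq(A, v):
    """Squared A-norm, i.e. (v, v)_A = (A v, v)."""
    return dot(matvec(A, v), v)


def sd_step_length(A, r):
    """Step length alpha_i = (r_i, r_i) / (A r_i, r_i), as in (11.4)."""
    return dot(r, r) / dot(matvec(A, r), r)


# Hypothetical test data: a small SPD matrix and a solution chosen by hand,
# so that the error can be inspected (a real solver never sees it).
A = [[4.0, 1.0], [1.0, 3.0]]
x_exact = [1.0, -2.0]
b = matvec(A, x_exact)
x0 = [0.0, 0.0]

r0 = [bi - yi for bi, yi in zip(b, matvec(A, x0))]
alpha0 = sd_step_length(A, r0)          # uses only the residual
e0 = [xe - xi for xe, xi in zip(x_exact, x0)]
e1 = [ei - alpha0 * ri for ei, ri in zip(e0, r0)]

# (e_1, r_0)_A should vanish up to rounding.
a_orth = dot(matvec(A, e1), r0)

# Squared A-norm of the new error for alpha0 and for a few other step lengths.
best = a_norm_sq(A, e1)
others = [
    a_norm_sq(A, [ei - a * ri for ei, ri in zip(e0, r0)])
    for a in (0.0, 0.2, 0.6, 1.0)
]
```

Under these assumptions, `a_orth` should be zero up to rounding, and `best` should not exceed any entry of `others`.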

The algorithm which we have just derived is known as the steepest descent method and is summarized in the following:

Algorithm 1. (Steepest Descent). Let $A$ be a SPD $n \times n$ matrix. Given an initial iterate $x_0$, define for $i = 0, 1, \ldots$,

$$x_{i+1} = x_i + \alpha_i r_i, \qquad r_i = b - Ax_i,$$

and

$$\alpha_i = \frac{(r_i, r_i)}{(Ar_i, r_i)}. \tag{11.6}$$
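Algorithm 1 translates almost line by line into code. The sketch below is one possible plain Python rendering, not the notes' own implementation: the function name `steepest_descent`, the fixed step count `num_steps`, and the 2 × 2 test system are assumptions made for illustration. For clarity it recomputes $r_i = b - Ax_i$ from the definition at every step, which costs two matrix-vector products per step.

```python
def matvec(A, v):
    """Return the product A v for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(v, w):
    """Dot inner product (v, w)."""
    return sum(x * y for x, y in zip(v, w))


def steepest_descent(A, b, x0, num_steps):
    """Run num_steps steps of Algorithm 1 and return the final iterate."""
    x = list(x0)
    for _ in range(num_steps):
        r = [bi - yi for bi, yi in zip(b, matvec(A, x))]   # r_i = b - A x_i
        rr = dot(r, r)
        if rr == 0.0:
            break                                          # x already solves A x = b
        alpha = rr / dot(matvec(A, r), r)                  # (11.6)
        x = [xi + alpha * ri for xi, ri in zip(x, r)]      # x_{i+1} = x_i + alpha_i r_i
    return x


# Hypothetical test system 4x + y = 1, x + 3y = 2, with solution (1/11, 7/11).
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
x_sd = steepest_descent(A, b, [0.0, 0.0], num_steps=50)
```

A variant could instead update the residual through $r_{i+1} = r_i - \alpha_i A r_i$ and so reuse the product $Ar_i$; the version above favors readability over cost.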

Proposition 1. Let $A$ be a SPD $n \times n$ matrix and $\{e_i\}$ be the sequence of errors corresponding to the steepest descent algorithm. Then

$$\|e_{i+1}\|_A \le \frac{K - 1}{K + 1} \|e_i\|_A$$

where $K$ is the spectral condition number of $A$.

Proof. Since $e_{i+1}$ is the minimizer in (11.3) and $e_i - \tau r_i = (I - \tau A) e_i$,

$$\|e_{i+1}\|_A \le \|(I - \tau A) e_i\|_A$$

for any real $\tau$. Taking $\tau = 2/(\lambda_1 + \lambda_n)$ as in the proposition of Class 7 and applying that proposition completes the proof.
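The bound of Proposition 1 can be observed on a small example. The following plain Python sketch is only an illustration under stated assumptions: the diagonal matrix (whose eigenvalues 1 and 10 can be read off, so $K = 10$), the exact solution, the starting point, and all variable names are hypothetical choices, not taken from the notes.

```python
import math


def matvec(A, v):
    """Return the product A v for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(v, w):
    """Dot inner product (v, w)."""
    return sum(x * y for x, y in zip(v, w))


def a_norm(A, v):
    """A-norm, i.e. the square root of (A v, v)."""
    return math.sqrt(dot(matvec(A, v), v))


# Assumed test problem: diagonal SPD matrix with eigenvalues 1 and 10.
A = [[1.0, 0.0], [0.0, 10.0]]
K = 10.0
bound = (K - 1.0) / (K + 1.0)

x_exact = [1.0, 1.0]
b = matvec(A, x_exact)
x = [0.0, 0.0]

ratios = []
for _ in range(10):
    norm_before = a_norm(A, [xe - xi for xe, xi in zip(x_exact, x)])
    r = [bi - yi for bi, yi in zip(b, matvec(A, x))]
    alpha = dot(r, r) / dot(matvec(A, r), r)
    x = [xi + alpha * ri for xi, ri in zip(x, r)]
    norm_after = a_norm(A, [xe - xi for xe, xi in zip(x_exact, x)])
    ratios.append(norm_after / norm_before)   # observed reduction factor

# Every observed factor should stay at or below (K - 1) / (K + 1).
all_within_bound = all(ratio <= bound + 1e-12 for ratio in ratios)
```

For this starting point the observed factors are noticeably smaller than the bound, which is consistent with the proposition being a worst-case estimate.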

Remark 1. Note that $\lambda_1$ and $\lambda_n$ only appear in the analysis. We do not need any eigenvalue estimates for implementation of the steepest descent method.

Remark 2. As already mentioned, it is not practical to attempt to make the optimal choice with respect to other norms as the error is not explicitly known. However, applying $A$ at least once eliminates this drawback, since $Ae_i = r_i$ is computable from $x_i$ and $b$. For example, one could design a method which minimized

$$\|Ae_i\|_{\ell^2}.$$

One could also propose to minimize some other norm, e.g.,

$$\|Ae_i\|_{\ell^\infty}.$$

Although this is feasible, since the $\ell^\infty$ norm does not come from an inner product, the computation of the parameter $\alpha_i$ ends up being a difficult nonlinear problem.


It is interesting to note that the steepest descent method is the first example (in this course) of an iterative method that is not linear. Note that $e_{i+1}$ can be expressed directly from $e_i$ (without knowing $x_i$ or $b$) since one simply substitutes $r_i = Ae_i$ to compute $\alpha_i$ and uses

$$e_{i+1} = e_i - \alpha_i r_i.$$

Thus, there is a mapping $e_i \to e_{i+1}$; however, it is NOT LINEAR. This can be illustrated by considering the $2 \times 2$ matrix

$$A = \begin{pmatrix} 1 & 0 \\ 0 & 2 \end{pmatrix}.$$

For either $e_0^1 = (1, 0)^t$ or $e_0^2 = (0, 1)^t$, a direct computation gives $e_1^j = (0, 0)^t$ for $j = 1, 2$ (do it!). Here $e_1^j$ is the error after one step of steepest descent is applied to $e_0^j$. In contrast, for $e_0 = e_0^1 + e_0^2 = (1, 1)^t$, we find

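$$Ae_0 = r_0 = \begin{pmatrix} 1 \\ 2 \end{pmatrix}, \qquad Ar_0 = \begin{pmatrix} 1 \\ 4 \end{pmatrix}, \qquad \alpha_0 = \frac{5}{9},$$

$$e_1 = \begin{pmatrix} 4/9 \\ -1/9 \end{pmatrix} \ne \begin{pmatrix} 0 \\ 0 \end{pmatrix} + \begin{pmatrix} 0 \\ 0 \end{pmatrix} = e_1^1 + e_1^2.$$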
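The "do it!" computations above can be reproduced exactly with rational arithmetic. The sketch below uses Python's standard `fractions` module; the function name `sd_error_map` and the variable names are assumptions made for illustration. It applies one steepest descent step directly to an error vector, using $r_i = Ae_i$.

```python
from fractions import Fraction


def matvec(A, v):
    """Return the product A v for a matrix stored as a list of rows."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def dot(v, w):
    """Dot inner product (v, w)."""
    return sum(x * y for x, y in zip(v, w))


def sd_error_map(A, e):
    """Map e_i to e_{i+1} = e_i - alpha_i r_i with r_i = A e_i."""
    r = matvec(A, e)
    rr = dot(r, r)
    if rr == 0:
        return list(e)                      # zero error stays zero
    alpha = rr / dot(matvec(A, r), r)       # (r_i, r_i) / (A r_i, r_i)
    return [ei - alpha * ri for ei, ri in zip(e, r)]


A = [[Fraction(1), Fraction(0)], [Fraction(0), Fraction(2)]]
e0_1 = [Fraction(1), Fraction(0)]
e0_2 = [Fraction(0), Fraction(1)]
e0_sum = [u + v for u, v in zip(e0_1, e0_2)]

e1_1 = sd_error_map(A, e0_1)      # error after one step from e0_1
e1_2 = sd_error_map(A, e0_2)      # error after one step from e0_2
e1_sum = sd_error_map(A, e0_sum)  # error after one step from e0_1 + e0_2

# Linearity would require e1_sum == e1_1 + e1_2; here it fails.
is_additive = e1_sum == [u + v for u, v in zip(e1_1, e1_2)]
```

With these inputs, `e1_1` and `e1_2` are both the zero vector while `e1_sum` equals $(4/9, -1/9)^t$, so `is_additive` is `False`, in agreement with the hand computation.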

The steepest descent method makes the error $e_{i+1}$ $A$-orthogonal to $r_i$. Unfortunately, if this step results in little change, then $r_{i+1}$ lies in almost the same direction as $r_i$, so the step to $e_{i+2}$ is not very effective either since $e_{i+1}$ is already $A$-orthogonal to $r_i$ and almost $A$-orthogonal to $r_{i+1}$. This is a shortcoming of the steepest descent method.

The fix is simple. We generalize the algorithm and let $p_i$ be the direction which we use to compute $e_{i+1}$. As usual, we make $e_{i+1}$ $A$-orthogonal to $p_i$. The idea is to preserve this orthogonality when going to $e_{i+2}$. Since $e_{i+1}$ is already $A$-orthogonal to $p_i$, $e_{i+2}$ will remain $A$-orthogonal to $p_i$ only if our new search direction $p_{i+1}$ is also $A$-orthogonal to $p_i$. Thus, instead of using $r_{i+1}$ as our next search direction, we use the component of $r_{i+1}$ which is $A$-orthogonal to $p_i$, i.e.,

$$p_{i+1} = r_{i+1} - \beta_i p_i$$

where $\beta_i$ is chosen so that

$$(p_{i+1}, p_i)_A = 0.$$

A simple computation gives (do it!)

$$\beta_i = \frac{(r_{i+1}, p_i)_A}{(p_i, p_i)_A}.$$

We continue by making $e_{i+2}$ $A$-orthogonal to $p_{i+1}$, i.e.,

$$x_{i+2} = x_{i+1} + \alpha_{i+1} p_{i+1}$$

with $\alpha_{i+1}$ satisfying

$$(e_{i+1} - \alpha_{i+1} p_{i+1}, p_{i+1})_A = 0 \quad\text{or}\quad \alpha_{i+1} = \frac{(r_{i+1}, p_{i+1})}{(Ap_{i+1}, p_{i+1})}.$$

As both $e_{i+1}$ and $p_{i+1}$ are $A$-orthogonal to $p_i$ and $e_{i+2} = e_{i+1} - \alpha_{i+1} p_{i+1}$, $e_{i+2}$ is $A$-orthogonal to both $p_i$ and $p_{i+1}$. The above discussion leads to the following algorithm.

Algorithm 2. (Conjugate Gradient). Let $A$ be a SPD $n \times n$ matrix and $x_0 \in \mathbb{R}^n$ (the initial iterate) and $b \in \mathbb{R}^n$ (the right hand side) be given. Start by setting $p_0 = r_0 = b - Ax_0$. Then for $i = 0, 1, \ldots$, define

$$\begin{aligned} x_{i+1} &= x_i + \alpha_i p_i, \quad\text{where } \alpha_i = \frac{(r_i, p_i)}{(Ap_i, p_i)}, \\ r_{i+1} &= r_i - \alpha_i A p_i, \\ p_{i+1} &= r_{i+1} - \beta_i p_i, \quad\text{where } \beta_i = \frac{(r_{i+1}, Ap_i)}{(Ap_i, p_i)}. \end{aligned} \tag{11.7}$$

Notice that we have moved the matrix-vector evaluation in the above inner products so that it is clear that only one matrix-vector evaluation, namely $Ap_i$, is required per iterative step after startup.

We illustrate pseudo code for the conjugate gradient algorithm below. We have implicitly assumed that $A(X)$ is a routine which returns the result of $A$ applied to $X$ and that $IP(\cdot, \cdot)$ returns the result of the inner product. Here $k$ is the number of iterations; $X$ is $x_0$ on input and $x_k$ on return.

FUNCTION CG(X, B, A, k, IP)
    R = P = B - A(X);
    FOR j = 1, 2, . . . , k DO {
        AP = A(P); al = IP(R, P)/IP(P, AP);
        X = X + al * P; R = R - al * AP;
        be = IP(R, AP)/IP(P, AP);
        P = R - be * P;
    }
    RETURN
END

The above code is somewhat terse but was included to illustrate the fact that one can implement CG with exactly 3 extra vectors, $R$, $P$, and $AP$. An actual code would include extra checks for consistency and convergence. For example, a tolerance might be passed and the residual might be tested against it, causing the routine to return when the desired tolerance was achieved. Also, for consistency, one would check to see that $(Ap, p) > 0$, for when this is negative or zero, it is a sure sign that the matrix is either no good (not SPD) or that you have iterated to convergence (if $(Ap, p) = 0$).
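As an illustration of the checks just described, here is one way the pseudo code might look in plain Python. This is a sketch under stated assumptions rather than a reference implementation: the function name `conjugate_gradient`, the parameters `max_steps` and `tol`, the choice of testing the $\ell^2$ norm of the residual, and the decision to raise `ValueError` when $(Ap, p) \le 0$ are all hypothetical design choices. As in the pseudo code, the matrix enters only through a routine (`apply_A`) that applies it to a vector.

```python
import math


def dot(v, w):
    """Dot inner product (v, w)."""
    return sum(x * y for x, y in zip(v, w))


def conjugate_gradient(apply_A, b, x0, max_steps, tol=1e-10):
    """Run Algorithm 2; return (x, number of steps actually taken)."""
    x = list(x0)
    r = [bi - yi for bi, yi in zip(b, apply_A(x))]   # r_0 = b - A x_0
    p = list(r)                                      # p_0 = r_0
    for step in range(max_steps):
        if math.sqrt(dot(r, r)) <= tol:
            return x, step                           # residual small enough
        Ap = apply_A(p)                              # the one mat-vec per step
        pAp = dot(p, Ap)
        if pAp <= 0.0:
            # Consistency check from the text: (Ap, p) must be positive.
            raise ValueError("(Ap, p) <= 0: A is not SPD or p has vanished")
        alpha = dot(r, p) / pAp
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        beta = dot(r, Ap) / pAp
        p = [ri - beta * pi for ri, pi in zip(r, p)]
    return x, max_steps


# Hypothetical test system 4x + y = 1, x + 3y = 2, with solution (1/11, 7/11).
A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]


def apply_A(v):
    """Apply the test matrix A to the vector v."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


x_cg, steps_used = conjugate_gradient(apply_A, b, [0.0, 0.0], max_steps=10)
```

Beyond the iterate itself, this version keeps only the three work vectors `r`, `p`, and `Ap`, matching the storage count noted above. A production code would likely also guard against a tolerance that is unreachable in floating point.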