THE CREATION AND UTILIZATION OF PRIMARY CLASSROOM

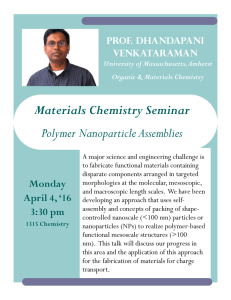
