Introduction to Probability Random Variables Bayes Rule Continuous Random Variables


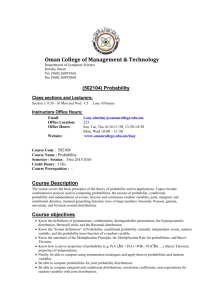

Introduction to Probability

Random Variables

Bayes Rule

Continuous Random Variables

Different Types of Distributions

References

Handling Multivariate Distributions

Transformations of Random Variables

Information Theory - Introduction

Introduction to Machine Learning

CSE 474/574: Introduction to Probabilistic Methods

Varun Chandola <chandola@buffalo.edu>


Outline

1. Introduction to Probability
2. Random Variables
3. Bayes Rule
   - More About Conditional Independence
4. Continuous Random Variables
5. Different Types of Distributions
6. Handling Multivariate Distributions
7. Transformations of Random Variables
8. Information Theory - Introduction


What is Probability? [3, 1]

Probability that a coin will land heads is 50% (see note 1)

What does this mean?

Note 1: Dr. Persi Diaconis showed that a coin is 51% likely to land facing the same way up as it started.


Frequentist Interpretation

Probability is the fraction of times an event is observed in n repeated trials, as n grows large

What if the event can only occur once?

- My winning next month's Powerball.
- Polar ice caps melting by year 2020.


Bayesian Interpretation

Probability quantifies our uncertainty about an event

Useful for making decisions under uncertainty

- Should I put in an offer for a sports car?


What is a Random Variable (X )?

X can take any value from a set 𝒳

Discrete random variable: 𝒳 is finite or countably infinite

Continuous random variable: 𝒳 is uncountably infinite

P(X = x) or P(x) is the probability of X taking value x; "X = x" is an event

What is a distribution?

Examples

1. Coin toss (𝒳 = {heads, tails})
2. Six-sided die (𝒳 = {1, 2, 3, 4, 5, 6})


Notation, Notation, Notation

X - random variable (bold X if multivariate)

x - a specific value taken by the random variable (bold x if multivariate)

P(X = x) or P(x) is the probability of the event X = x

p(x) is either the probability mass function (discrete) or the probability density function (continuous) for the random variable X, evaluated at x

- Probability mass (or density) at x


Basic Rules - Quick Review

For two events A and B:

P(A ∨ B) = P(A) + P(B) − P(A ∧ B)

Joint Probability

P(A, B) = P(A ∧ B) = P(A|B)P(B)

Also known as the product rule

Conditional Probability

P(A|B) = P(A, B) / P(B)


Chain Rule of Probability

Given D random variables, {X1, X2, ..., XD}

P(X1, X2, ..., XD) = P(X1) P(X2|X1) P(X3|X1, X2) ... P(XD|X1, X2, ..., XD−1)


Marginal Distribution

Given P(A, B), what is P(A)?

Sum P(A, B) over all values of B

$$P(A) = \sum_b P(A, B = b) = \sum_b P(A \mid B = b)\, P(B = b)$$

Sum rule
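The sum rule can be sketched in a few lines of Python. The joint table below is a made-up illustration (its values are assumptions, chosen only so that they sum to 1), and `marginal_a` is a hypothetical helper name.

```python
# Hypothetical joint distribution P(A, B) with A in {0, 1} and B in {"x", "y", "z"}.
# The numbers are illustrative assumptions; they only need to sum to 1.
joint = {
    (0, "x"): 0.10, (0, "y"): 0.20, (0, "z"): 0.10,
    (1, "x"): 0.30, (1, "y"): 0.05, (1, "z"): 0.25,
}


def marginal_a(joint):
    """Sum rule: P(A = a) is the sum over b of P(A = a, B = b)."""
    p_a = {}
    for (a, _b), p in joint.items():
        p_a[a] = p_a.get(a, 0.0) + p
    return p_a


p_a = marginal_a(joint)
print(p_a)
```

Marginalizing over B leaves a valid distribution over A, so the resulting values again sum to 1.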


Bayes Rule or Bayes Theorem

Computing P(X = x|Y = y):

Bayes Theorem

$$P(X = x \mid Y = y) = \frac{P(X = x, Y = y)}{P(Y = y)} = \frac{P(X = x)\, P(Y = y \mid X = x)}{\sum_{x'} P(X = x')\, P(Y = y \mid X = x')}$$


Example

Medical Diagnosis

Random event 1: A test is positive or negative (X)

Random event 2: A person has cancer (Y), yes or no

What we know:

1. Test has accuracy of 80%
2. This is the fraction of times the test is positive when the person has cancer
3. P(X = 1|Y = 1) = 0.8
4. Prior probability of having cancer is 0.4%
5. P(Y = 1) = 0.004

Question?

If I test positive, does it mean that I have an 80% chance of having cancer?


Base Rate Fallacy

Concluding "80%" ignores the prior information

What we need is:

P(Y = 1|X = 1) = ?

More information:

- False positive (alarm) rate for the test
- P(X = 1|Y = 0) = 0.1

$$P(Y = 1 \mid X = 1) = \frac{P(X = 1 \mid Y = 1)\, P(Y = 1)}{P(X = 1 \mid Y = 1)\, P(Y = 1) + P(X = 1 \mid Y = 0)\, P(Y = 0)}$$
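Plugging the numbers from the slides into Bayes' theorem shows how small the posterior is. The sketch below uses only the three quantities given above (0.8, 0.1, 0.004); the function name `posterior_positive` is a hypothetical choice for illustration.

```python
def posterior_positive(sensitivity, false_positive_rate, prior):
    """P(Y = 1 | X = 1) for a binary test X and a binary condition Y."""
    # Denominator: P(X = 1) = P(X=1|Y=1) P(Y=1) + P(X=1|Y=0) P(Y=0)
    evidence = sensitivity * prior + false_positive_rate * (1.0 - prior)
    return sensitivity * prior / evidence


p = posterior_positive(sensitivity=0.8, false_positive_rate=0.1, prior=0.004)
print(f"P(Y = 1 | X = 1) = {p:.4f}")  # about 0.031
```

With a prior of 0.4%, a positive test raises the probability of cancer to roughly 3%, far below 80%, because false positives among the many healthy people dominate.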


Classification Using Bayes Rule

Given input example X, find the true class

P(Y = c|X)

Y is the random variable denoting the true class

Assuming the class-conditional probability is known

P(X|Y = c)

Applying Bayes Rule

$$P(Y = c \mid X) = \frac{P(Y = c)\, P(X \mid Y = c)}{\sum_{c'} P(Y = c')\, P(X \mid Y = c')}$$
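A minimal sketch of this classification rule, assuming the priors and the class-conditional likelihoods at the observed input are already available as dictionaries. The class names and all numbers are invented for illustration, and `class_posterior` is a hypothetical helper.

```python
def class_posterior(priors, likelihoods):
    """Return P(Y = c | X = x) for every class c.

    priors[c]      = P(Y = c)
    likelihoods[c] = P(X = x | Y = c), evaluated at the observed x
    """
    joint = {c: priors[c] * likelihoods[c] for c in priors}
    evidence = sum(joint.values())  # sum over c' of P(Y = c') P(x | Y = c')
    return {c: joint[c] / evidence for c in joint}


# Hypothetical two-class problem; all numbers are illustrative assumptions.
priors = {"spam": 0.3, "ham": 0.7}
likelihoods = {"spam": 0.05, "ham": 0.01}

post = class_posterior(priors, likelihoods)
predicted = max(post, key=post.get)  # class with the highest posterior
print(post, predicted)
```

The denominator is the same for every class, so the predicted class could also be found by comparing the unnormalized products P(Y = c) P(X|Y = c).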


Independence

One random variable does not depend on another

A ⊥ B ⇐⇒ P(A, B) = P(A)P(B)

Joint written as a product of marginals

Conditional Independence

A ⊥ B|C ⇐⇒ P(A, B|C ) = P(A|C )P(B|C )


More About Conditional Independence

Alice and Bob live in the same town but far away from each other

Alice drives to work and Bob takes the bus

Event A - Alice comes late to work

Event B - Bob comes late to work

Event C - A snowstorm has hit the town

P(A|C) - Probability that Alice comes late to work given there is a snowstorm

Now if I know that Bob has also come late to work, will it change the probability that Alice comes late to work?

What if I do not observe C? Will B have any impact on the probability of A happening?
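One way to explore these questions is a small numeric model. The sketch below assumes that A and B are conditionally independent given C and uses invented probabilities (none of them come from the slides); it then compares P(A), P(A|B), P(A|C) and P(A|B, C).

```python
from itertools import product

# Assumed numbers, for illustration only.
P_C = {True: 0.2, False: 0.8}          # P(C = c): snowstorm or not
P_A_GIVEN_C = {True: 0.7, False: 0.1}  # P(A = True | C = c): Alice late
P_B_GIVEN_C = {True: 0.6, False: 0.2}  # P(B = True | C = c): Bob late


def bern(p_true, value):
    """Probability of a boolean value under a Bernoulli with P(True) = p_true."""
    return p_true if value else 1.0 - p_true


# Joint P(A, B, C) built so that A and B are independent given C.
joint = {
    (a, b, c): P_C[c] * bern(P_A_GIVEN_C[c], a) * bern(P_B_GIVEN_C[c], b)
    for a, b, c in product([True, False], repeat=3)
}


def prob(pred):
    """Total probability of the outcomes (a, b, c) that satisfy pred."""
    return sum(p for (a, b, c), p in joint.items() if pred(a, b, c))


p_a = prob(lambda a, b, c: a)
p_a_given_b = prob(lambda a, b, c: a and b) / prob(lambda a, b, c: b)
p_a_given_c = prob(lambda a, b, c: a and c) / prob(lambda a, b, c: c)
p_a_given_bc = prob(lambda a, b, c: a and b and c) / prob(lambda a, b, c: b and c)

print(p_a, p_a_given_b)            # B changes our belief about A when C is unobserved
print(p_a_given_c, p_a_given_bc)   # once C is known, B adds nothing
```

Under these assumptions, learning that Bob is late raises the probability that Alice is late only while the snowstorm is unobserved; once C is known, B carries no further information about A.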


Continuous Random Variables

X is continuous

Can take any value

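How does one define probability?

- For a discrete variable, Σ_x P(X = x) = 1; for a continuous variable any single value has probability zero, so we work with intervals instead

Probability that X lies in an interval (a, b]?

P(a < X ≤ b) = P(X ≤ b) − P(X ≤ a)

F(q) = P(X ≤ q) is the cumulative distribution function

P(a < X ≤ b) = F(b) − F(a)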


Probability Density

Probability Density Function

$$p(x) = \frac{\partial}{\partial x} F(x)$$

$$P(a < X \le b) = \int_a^b p(x)\, dx$$

Can p(x) be greater than 1?
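A quick numeric check suggests the answer is yes: a density is not a probability, and only its integral must equal 1. The sketch below assumes a uniform distribution on [0, 0.5], which is not from the slides, and uses a simple midpoint rule for the integral.

```python
def uniform_pdf(x, lo, hi):
    """Density of a uniform distribution on [lo, hi]."""
    return 1.0 / (hi - lo) if lo <= x <= hi else 0.0


def integrate(f, a, b, steps=10_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width


density = uniform_pdf(0.25, 0.0, 0.5)  # density value at one point: 2.0
total = integrate(lambda x: uniform_pdf(x, 0.0, 0.5), 0.0, 0.5)
print(density, total)
```

The density equals 2 everywhere on the interval, yet the total probability mass is still 1 because the interval has length 0.5.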


Some Useful Statistics

Quantiles

$F^{-1}(\alpha)$: value $x_\alpha$ such that $F(x_\alpha) = \alpha$

α quantile of F

What is $F^{-1}(0.5)$?

What are quartiles?


Expectation

Expected value of a random variable

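E[X]

What value does X take on average? (This need not be the most likely value.)

For discrete variables

$$\mathbb{E}[X] \triangleq \sum_{x \in \mathcal{X}} x\, p(x)$$

For continuous variables

$$\mathbb{E}[X] \triangleq \int_{\mathcal{X}} x\, p(x)\, dx$$

Mean of X (µ)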


Variance

Spread of the distribution

$$\mathrm{var}[X] \triangleq \mathbb{E}[(X - \mu)^2] = \mathbb{E}[X^2] - \mu^2$$
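As a worked example, the mean and variance of a fair six-sided die can be computed exactly. The sketch below assumes a uniform pmf over the faces 1 to 6 and uses exact fractions so that the two variance formulas can be compared without rounding.

```python
from fractions import Fraction

# Assumed pmf: a fair six-sided die.
pmf = {face: Fraction(1, 6) for face in range(1, 7)}

# E[X] = sum over x of x p(x)
mean = sum(x * p for x, p in pmf.items())

# var[X] from the definition E[(X - mu)^2]
var_def = sum((x - mean) ** 2 * p for x, p in pmf.items())

# var[X] from the shortcut E[X^2] - mu^2
var_short = sum(x * x * p for x, p in pmf.items()) - mean ** 2

print(mean, var_def, var_short)  # 7/2 35/12 35/12
```

Note that the mean 7/2 is not a value the die can actually show, which is why the expectation should be read as an average rather than as the most likely outcome.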


What is a Probability Distribution?

Discrete

- Binomial, Bernoulli
- Multinomial, Multinoulli
- Poisson
- Empirical

Continuous

- Gaussian (Normal)
- Degenerate pdf
- Laplace
- Gamma
- Beta
- Pareto


Binomial Distribution

X = Number of heads observed in n coin tosses

Parameters: n, θ

X ∼ Bin(n, θ)

Probability mass function (pmf)

$$\mathrm{Bin}(k \mid n, \theta) \triangleq \binom{n}{k} \theta^k (1 - \theta)^{n - k}$$

Bernoulli Distribution

Binomial distribution with n = 1

Only one parameter (θ)
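The binomial pmf translates directly into code. This is a minimal sketch using only the Python standard library; the function names are hypothetical and the parameter values (n = 10, θ = 0.5) are chosen for illustration.

```python
from math import comb


def binomial_pmf(k, n, theta):
    """Bin(k | n, theta): probability of k heads in n tosses with P(heads) = theta."""
    return comb(n, k) * theta ** k * (1.0 - theta) ** (n - k)


def bernoulli_pmf(x, theta):
    """Bernoulli pmf as the special case n = 1 (x is 0 or 1)."""
    return binomial_pmf(x, 1, theta)


probs = [binomial_pmf(k, 10, 0.5) for k in range(11)]
print(sum(probs))             # the pmf sums to 1 over k = 0..n
print(bernoulli_pmf(1, 0.3))  # 0.3
```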


Multinomial Distribution

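Models n rolls of a K-sided die

Random variable x = (x1, x2, ..., xK), where xj is the number of times side j shows up

Parameters: n, θ

θ ∈ ℝ^K

θj - probability that the j-th side shows up

$$\mathrm{Mu}(\mathbf{x} \mid n, \boldsymbol{\theta}) \triangleq \binom{n}{x_1, x_2, \ldots, x_K} \prod_{j=1}^{K} \theta_j^{x_j}$$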

Multinoulli Distribution

Multinomial distribution with n = 1

x is a vector of 0s and 1s with only one bit set to 1

Only one parameter (θ)
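The multinomial pmf can be sketched the same way, assuming the counts are given as a list whose entries sum to n. The function name and the example inputs are illustrative assumptions.

```python
from math import factorial, prod


def multinomial_pmf(counts, theta):
    """Mu(x | n, theta) where counts[j] is how often side j appeared and n = sum(counts)."""
    n = sum(counts)
    # Multinomial coefficient n! / (x_1! x_2! ... x_K!), kept as an exact integer.
    coeff = factorial(n)
    for x in counts:
        coeff //= factorial(x)
    return coeff * prod(t ** x for t, x in zip(theta, counts))


# Fair six-sided die rolled 6 times, each side showing exactly once.
print(multinomial_pmf([1] * 6, [1 / 6] * 6))

# Multinoulli: n = 1, so the count vector has a single 1.
print(multinomial_pmf([0, 1, 0], [0.2, 0.5, 0.3]))  # 0.5
```

With K = 2 the same function reduces to the binomial pmf, for example `multinomial_pmf([3, 7], [0.5, 0.5])` equals Bin(3 | 10, 0.5).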


Gaussian (Normal) Distribution

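$$\mathcal{N}(x \mid \mu, \sigma^2) \triangleq \frac{1}{\sqrt{2\pi\sigma^2}} \exp\left(-\frac{1}{2\sigma^2}(x - \mu)^2\right)$$

[Figure: plot of the pdf; x axis from −4 to 6, y axis from 0 to 0.4]

Parameters:

1. µ = E[X]
2. σ² = var[X] = E[(X − µ)²]

X ∼ N(µ, σ²) ⇔ p(X = x) = N(x|µ, σ²)

X ∼ N(0, 1) ⇐ X is a standard normal random variable

Cumulative distribution function:

$$\Phi(x; \mu, \sigma^2) \triangleq \int_{-\infty}^{x} \mathcal{N}(z \mid \mu, \sigma^2)\, dz$$

[Figure: plot of the cdf; x axis from −4 to 6, y axis from 0 to 1]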
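Both the Gaussian pdf and its cdf can be evaluated with the Python standard library. The sketch below expresses the cdf through the error function, using the identity Φ(x; µ, σ²) = ½[1 + erf((x − µ)/√(2σ²))]; the function names are hypothetical.

```python
from math import erf, exp, pi, sqrt


def normal_pdf(x, mu=0.0, sigma2=1.0):
    """N(x | mu, sigma2), parameterized by the variance sigma2."""
    return exp(-((x - mu) ** 2) / (2.0 * sigma2)) / sqrt(2.0 * pi * sigma2)


def normal_cdf(x, mu=0.0, sigma2=1.0):
    """Phi(x; mu, sigma2), written in terms of the error function."""
    return 0.5 * (1.0 + erf((x - mu) / sqrt(2.0 * sigma2)))


print(normal_pdf(0.0))   # peak of the standard normal, about 0.399
print(normal_cdf(0.0))   # 0.5 by symmetry
print(normal_cdf(1.96))  # about 0.975
```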


References

[1] E. Jaynes and G. Bretthorst. Probability Theory: The Logic of Science. Cambridge University Press, Cambridge, 2003.

[2] K. B. Petersen and M. S. Pedersen. The Matrix Cookbook, Nov. 2012. Version 20121115.

[3] L. Wasserman. All of Statistics: A Concise Course in Statistical Inference (Springer Texts in Statistics). Springer, Oct. 2004.


Joint Probability Distributions

Multiple related random variables

p(x1 , x2 , . . . , xD ) for D > 1 variables (X1 , X2 , . . . , XD )

Discrete random variables?

Continuous random variables?

What do we measure?

Covariance

How does X vary with respect to Y

For linear relationship:

cov[X, Y] ≜ E[(X − E[X])(Y − E[Y])] = E[XY] − E[X]E[Y]


Covariance and Correlation

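X is a d-dimensional random vector

$$\mathrm{cov}[\mathbf{X}] \triangleq \mathbb{E}\left[(\mathbf{X} - \mathbb{E}[\mathbf{X}])(\mathbf{X} - \mathbb{E}[\mathbf{X}])^\top\right] = \begin{pmatrix} \mathrm{var}[X_1] & \mathrm{cov}[X_1, X_2] & \cdots & \mathrm{cov}[X_1, X_d] \\ \mathrm{cov}[X_2, X_1] & \mathrm{var}[X_2] & \cdots & \mathrm{cov}[X_2, X_d] \\ \vdots & \vdots & \ddots & \vdots \\ \mathrm{cov}[X_d, X_1] & \mathrm{cov}[X_d, X_2] & \cdots & \mathrm{var}[X_d] \end{pmatrix}$$

Covariances can be between −∞ and ∞

Normalized covariance ⇒ Correlation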


Correlation

Pearson Correlation Coefficient

$$\mathrm{corr}[X, Y] \triangleq \frac{\mathrm{cov}[X, Y]}{\sqrt{\mathrm{var}[X]\, \mathrm{var}[Y]}}$$

What is corr[X, X]?

−1 ≤ corr[X, Y] ≤ 1

When is corr[X, Y] = 1?

- Y = aX + b with a > 0
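The questions on this slide can be checked numerically on a small sample. The sketch below computes the sample version of the Pearson coefficient (population-style normalization by the sample size) on made-up data; the helper names and data values are assumptions.

```python
from math import sqrt


def mean(values):
    return sum(values) / len(values)


def cov(xs, ys):
    """Sample covariance with 1/N normalization."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / len(xs)


def corr(xs, ys):
    """Pearson correlation: covariance normalized by both standard deviations."""
    return cov(xs, ys) / sqrt(cov(xs, xs) * cov(ys, ys))


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys_up = [2.0 * x + 1.0 for x in xs]     # Y = aX + b with a > 0
ys_down = [-3.0 * x + 4.0 for x in xs]  # Y = aX + b with a < 0

print(corr(xs, xs))       # 1.0
print(corr(xs, ys_up))    # 1.0
print(corr(xs, ys_down))  # -1.0
```

A linear relationship with a negative slope gives a correlation of −1, so the sign of a matters for the answer.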


Multivariate Gaussian Distribution

Most widely used joint probability distribution

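$$\mathcal{N}(\mathbf{x} \mid \boldsymbol{\mu}, \boldsymbol{\Sigma}) \triangleq \frac{1}{(2\pi)^{D/2} |\boldsymbol{\Sigma}|^{1/2}} \exp\left[-\frac{1}{2} (\mathbf{x} - \boldsymbol{\mu})^\top \boldsymbol{\Sigma}^{-1} (\mathbf{x} - \boldsymbol{\mu})\right]$$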


Linear Transformations of Random Variables

What is the distribution of f(X) (X ∼ p())?

Linear transformation:

- Scalar output: $Y = \mathbf{a}^\top \mathbf{X} + b$. E[Y]? var[Y]?
- Vector output: $\mathbf{Y} = \mathbf{A}\mathbf{X} + \mathbf{b}$. E[Y]? cov[Y]?

The Matrix Cookbook [2]

http://orion.uwaterloo.ca/~hwolkowi/matrixcookbook.pdf

Available on Piazza


General Transformations

f () is not linear

Example: X - discrete

$$Y = f(X) = \begin{cases} 1 & \text{if } X \text{ is even} \\ 0 & \text{if } X \text{ is odd} \end{cases}$$

What is pmf for Y?

$$p_Y(y) = \sum_{x : f(x) = y} p_X(x)$$
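The pmf of a transformed discrete variable can be computed by accumulating mass over all x that map to the same y. The sketch below assumes X is a fair six-sided die, which the slide does not specify, and applies the even/odd indicator from the example.

```python
from fractions import Fraction


def transformed_pmf(p_x, f):
    """p_Y(y) = sum of p_X(x) over all x with f(x) = y."""
    p_y = {}
    for x, p in p_x.items():
        y = f(x)
        p_y[y] = p_y.get(y, 0) + p
    return p_y


# Assumed distribution for X: a fair six-sided die.
p_x = {x: Fraction(1, 6) for x in range(1, 7)}

# f(X) = 1 if X is even, 0 if X is odd.
p_y = transformed_pmf(p_x, lambda x: 1 if x % 2 == 0 else 0)
print(p_y)
```

Three faces are even and three are odd, so each value of Y receives half of the probability mass.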


General Transformations for Continuous Variables

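For continuous variables, work with cdf (assuming f is monotonically increasing, so that it is invertible)

$$F_Y(y) \triangleq P(Y \le y) = P(f(X) \le y) = P(X \le f^{-1}(y)) = F_X(f^{-1}(y))$$

For pdf

$$p_Y(y) \triangleq \frac{d}{dy} F_Y(y) = \frac{d}{dy} F_X(f^{-1}(y)) = \frac{dx}{dy} \frac{d}{dx} F_X(x) = \frac{dx}{dy}\, p_X(x)$$

where $x = f^{-1}(y)$

Example

- Let X be Uniform(−1, 1)
- Let Y = X²
- $p_Y(y) = \frac{1}{2} y^{-1/2}$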
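The density in the example can be sanity-checked numerically. Because Y = X² is not monotonic on (−1, 1), the sketch below does not use the inverse directly; it integrates the claimed density over an interval and compares the result with the probability obtained from X itself. The interval endpoints are arbitrary choices.

```python
from math import sqrt


def p_y(y):
    """Claimed density of Y = X^2 for X ~ Uniform(-1, 1), valid for 0 < y <= 1."""
    return 0.5 * y ** -0.5


def integrate(f, a, b, steps=100_000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width


a, b = 0.04, 0.81  # arbitrary interval inside (0, 1]

# Probability of a < Y <= b from the density of Y.
from_density = integrate(p_y, a, b)

# Same probability computed from X: a < X^2 <= b iff sqrt(a) < |X| <= sqrt(b),
# and |X| is uniform on (0, 1), so the probability is sqrt(b) - sqrt(a).
direct = sqrt(b) - sqrt(a)

print(from_density, direct)  # both close to 0.7
```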


Monte Carlo Approximation

Generate N samples from distribution for X

For each sample $x_i$, i ∈ [1, N], compute $f(x_i)$

Use the empirical distribution of the $f(x_i)$ as an approximation to the true distribution

Approximate Expectation

$$\mathbb{E}[f(X)] = \int f(x)\, p(x)\, dx \approx \frac{1}{N} \sum_{i=1}^{N} f(x_i)$$
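A minimal sketch of the Monte Carlo estimate, assuming X ~ Uniform(−1, 1) and f(x) = x², for which the exact expectation is 1/3. The seed, sample size and function names are arbitrary choices for illustration.

```python
import random


def monte_carlo_expectation(f, sampler, n):
    """Approximate E[f(X)] by the average of f over n samples drawn by sampler()."""
    return sum(f(sampler()) for _ in range(n)) / n


rng = random.Random(0)  # fixed seed so the run is repeatable

estimate = monte_carlo_expectation(
    f=lambda x: x * x,
    sampler=lambda: rng.uniform(-1.0, 1.0),
    n=100_000,
)
print(estimate)  # close to the exact value 1/3
```

The error of such an estimate shrinks roughly like 1/√N, so quadrupling the number of samples halves the typical error.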


Introduction to Information Theory

Quantifying uncertainty of a random variable

Entropy

H(X) or H(p)

$$H(X) \triangleq -\sum_{k=1}^{K} p(X = k) \log_2 p(X = k)$$

[Figure: plot with both axes running from 0 to 1]

Variable with maximum entropy?

Lowest entropy?
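The two questions can be explored with a short entropy function. The sketch below follows the definition above with base-2 logarithms and uses the usual convention that terms with zero probability contribute nothing; the example distributions are assumptions.

```python
from math import log2


def entropy(p):
    """H(p) in bits; terms with p_k = 0 are skipped (0 log 0 is taken as 0)."""
    return sum(-pk * log2(pk) for pk in p if pk > 0)


print(entropy([0.25, 0.25, 0.25, 0.25]))  # uniform over 4 states: 2.0 bits
print(entropy([0.5, 0.5]))                # fair coin: 1.0 bit
print(entropy([1.0, 0.0, 0.0, 0.0]))      # all mass on one state: 0 bits
```

For a fixed number of states K, the uniform distribution gives the largest value (log₂ K), and a distribution that puts all its mass on one state gives zero.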


Comparing Two Distributions

Kullback-Leibler Divergence (or KL Divergence or relative entropy)

$$\mathrm{KL}(p \| q) \triangleq \sum_{k=1}^{K} p(k) \log \frac{p(k)}{q(k)} = \sum_k p(k) \log p(k) - \sum_k p(k) \log q(k) = -H(p) + H(p, q)$$

H(p, q) is the cross-entropy

Is KL-divergence symmetric?

Important fact: H(p, q) ≥ H(p)
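These properties can be checked on a small example. The sketch below assumes base-2 logarithms (the slide leaves the base unspecified), requires q(k) > 0 wherever p(k) > 0, and uses two invented distributions.

```python
from math import log2


def cross_entropy(p, q):
    """H(p, q) = -sum_k p(k) log2 q(k); assumes q(k) > 0 wherever p(k) > 0."""
    return sum(-pk * log2(qk) for pk, qk in zip(p, q) if pk > 0)


def entropy(p):
    """H(p) is the cross-entropy of p with itself."""
    return cross_entropy(p, p)


def kl(p, q):
    """KL(p || q) = sum_k p(k) log2(p(k) / q(k))."""
    return sum(pk * log2(pk / qk) for pk, qk in zip(p, q) if pk > 0)


# Illustrative distributions over two states.
p = [0.5, 0.5]
q = [0.9, 0.1]

print(kl(p, q), kl(q, p))                          # different values: not symmetric
print(kl(p, q), cross_entropy(p, q) - entropy(p))  # same value: KL = H(p, q) - H(p)
```

Since KL(p||q) is never negative, the identity KL = H(p, q) − H(p) is what gives H(p, q) ≥ H(p).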


Mutual Information

What does learning about one variable X tell us about another, Y ?

Correlation?

Mutual Information

$$I(X; Y) = \sum_x \sum_y p(x, y) \log \frac{p(x, y)}{p(x)\, p(y)}$$

I(X ; Y ) = I(Y ; X )

I(X ; Y ) ≥ 0, equality iff X ⊥ Y
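A short sketch of the mutual information computation from a joint table, assuming base-2 logarithms so the result is in bits. The two joint distributions are invented: one where X and Y are independent fair bits, and one where Y is an exact copy of X.

```python
from math import log2


def mutual_information(joint):
    """I(X; Y) in bits, from a dict mapping (x, y) to p(x, y)."""
    p_x, p_y = {}, {}
    for (x, y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p  # marginal p(x)
        p_y[y] = p_y.get(y, 0.0) + p  # marginal p(y)
    return sum(
        p * log2(p / (p_x[x] * p_y[y]))
        for (x, y), p in joint.items()
        if p > 0
    )


# Assumed examples.
independent = {(x, y): 0.25 for x in (0, 1) for y in (0, 1)}
copy = {(0, 0): 0.5, (1, 1): 0.5}

print(mutual_information(independent))  # 0.0: knowing X says nothing about Y
print(mutual_information(copy))         # 1.0: X determines Y, one full bit
```

Swapping the roles of x and y in the joint table leaves the sum unchanged, which is the symmetry I(X; Y) = I(Y; X).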
