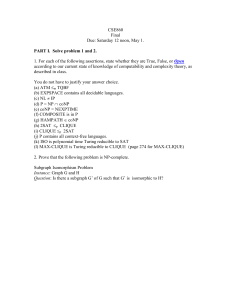

CSE596 Problem Set 10 Answer Key Fall 2015


Note Change: The Review Session now runs 10am–noon on Monday, in Davis 113A.

(A) Show that for any language $B$, if $\mathrm{NP}^B = \mathrm{NP}$ then $B \in \mathrm{NP} \cap \text{co-NP}$. This looks weird until you think of two particular polynomial-time oracle machines $M_1$, $M_2$ (both of which are deterministic, not just NOTMs) such that for any language $B$, $L(M_1^B) = B$ and $L(M_2^B) = {?}$ (you say it). (3 pts. checkoff credit, since the subject of polynomial-time Turing reductions and oracles won’t be lectured on until Wednesday. The converse also holds and is just a bit trickier.)

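Answer: Make $M_1$ on any input $x$ submit $x$ to its oracle and accept iff the answer is yes, and make $M_2$ submit $x$ to its oracle and accept iff the answer is no. So $L(M_2^B) = \tilde{B}$ for any language $B$, which yields the unconditionally true fact that $\tilde{B} \in \mathrm{NP}^B$ for any $B$. So if $\mathrm{NP}^B = \mathrm{NP}$ then $\tilde{B} \in \mathrm{NP}$, which makes $B \in \text{co-NP}$. Since obviously $B = L(M_1^B) \in \mathrm{NP}^B$, having $\mathrm{NP}^B = \mathrm{NP}$ makes $B \in \mathrm{NP}$, so putting both parts together makes $B \in \mathrm{NP} \cap \text{co-NP}$. (Alternate proof: clearly $B, \tilde{B} \in \mathrm{P}^B \subseteq \mathrm{NP}^B = \mathrm{NP}$, so $B \in \mathrm{NP} \cap \text{co-NP}$.)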
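As an illustration only, the two machines can be modeled as ordinary functions that receive the oracle as a callable. The names `m1`, `m2`, and the sample language `even_length` are hypothetical and chosen for this sketch; a membership test for $B$ stands in for the oracle tape.

```python
from typing import Callable

# An oracle for a language B is modeled as a membership test on strings.
Oracle = Callable[[str], bool]

def m1(x: str, oracle: Oracle) -> bool:
    """Deterministic machine M1: ask the oracle about x, accept iff it says yes."""
    return oracle(x)

def m2(x: str, oracle: Oracle) -> bool:
    """Deterministic machine M2: ask the oracle about x, accept iff it says no."""
    return not oracle(x)

# Hypothetical sample language B: strings of even length.
def even_length(x: str) -> bool:
    return len(x) % 2 == 0

for w in ["", "a", "ab", "abc"]:
    # On every input exactly one of the two machines accepts.
    print(repr(w), m1(w, even_length), m2(w, even_length))
```

Each machine makes a single oracle query and does constant extra work, so both are deterministic polynomial-time oracle machines, and on every input they give opposite answers.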

(1) Define 2SAT to be the language of satisfiable 2CNF formulas, i.e. satisfiable Boolean formulas in conjunctive normal form with at most two literals per clause.

(a) Show that 2SAT belongs to P (15 pts.).

(b) Show that 2SAT is NL-hard under logspace many-one reductions ($\le^{\log}_m$). (Hint: give a log-space reduction from the complement of GAP to 2SAT, and then argue on the basis of NL being closed under complementation. 21 pts., for 36 total on the problem.)

Answer. (a) The key trick is to regard a two-literal clause $(u \vee v)$ as the implication $\bar{u} \to v$ together with its contrapositive, $\bar{v} \to u$. (Recall that $u$ can be a negative literal $\bar{x}_i$, and then $\bar{u} = x_i$.) Now these implications form themselves naturally into a directed graph $G = (V, E)$ with $V = \{ x_1, \bar{x}_1, \ldots, x_n, \bar{x}_n \}$ (same as $U$ in the last problem!) and the implications as edges. We claim that a 2CNF formula $\varphi$ is satisfiable iff the corresponding graph $G_\varphi$ has no cycle that includes some node $u$ together with its negation $\bar{u}$.

If $G_\varphi$ has such a cycle, then $u \Rightarrow \bar{u}$ follows by a chain of implications that follows the path from $u$ to $\bar{u}$ along the cycle, and $\bar{u} \Rightarrow u$ follows by the rest of the cycle. This is very much like the contradiction “$d \in D \to d \notin D \to d \in D \ldots$” at the heart of the proof of “$D \notin \mathrm{RE}$” by diagonalization, and it means that $\varphi$ itself implies a contradiction and must always be false. To show this more concretely, let $t$ be any truth assignment to the variables, and suppose that $t$ makes $u$ = true and $\bar{u}$ = false. Somewhere along the path from $u$ to $\bar{u}$ there must be an edge $(v, w)$ that goes from a node $v$ made true to a node $w$ made false. Then the corresponding implication $v \to w$ is false, and so the assignment $t$ fails to satisfy the corresponding clause $(\bar{v} \vee w)$. The case where $t$ makes $u$ = false and $\bar{u}$ = true is handled symmetrically, using the path from $\bar{u}$ back to $u$.

Conversely, if there is no such cycle, then let us select any node $u$ in $G_\varphi$. If there is no path from $u$ to $\bar{u}$, then set $u$ = true; else set $u$ = false, in which case there is no path from $\bar{u}$ to $u$ (that would complete a forbidden cycle) and the steps below apply with the roles of $u$ and $\bar{u}$ exchanged. When there is no path from $u$ to $\bar{u}$, the node sets

$A_u = \{ v : \text{there is a path from } u \text{ to } v \}$

and

$B_{\bar{u}} = \{ v : \text{there is a path from } v \text{ to } \bar{u} \}$

are disjoint. Set all the literals in $A_u$ true, and all those in $B_{\bar{u}}$ false. If there is any node $u'$ left over, repeat the process, setting $u'$ = true or false depending on whether there is a path from $u'$ to $\bar{u}'$, and doing likewise to $A_{u'}$ and $B_{\bar{u}'}$ as before. Continue in this way until all nodes have been assigned truth values. Doing so never gives an edge going from a true node to a false one, because all edges into false nodes from previous iterations are exhausted in the definition of $B_{\bar{u}}$, while such an edge in a current iteration with $u'$ and its negation $\bar{u}'$ implies a path from $u'$ to $\bar{u}'$. Thus every clause corresponding to an edge in $G_\varphi$ is satisfied by the resulting truth assignment.

Hence we have proved that the following algorithm really does determine whether $\varphi$ is satisfiable: build $G_\varphi$ and test for each literal $u$ whether there is a path to $\bar{u}$ and also a path from $\bar{u}$ to $u$ that would complete a cycle. This means running breadth-first search at most $2n$ times, and gives an $O(n^2)$ (i.e., polynomial) time algorithm.
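The argument for part (a) can be sketched as a short program. This is a minimal illustration under stated assumptions, not a reference solution: literals are encoded as nonzero integers (`i` for $x_i$, `-i` for $\bar{x}_i$), a one-literal clause $(u)$ is written as the pair `(u, u)`, and the function names `implication_graph`, `reachable`, and `solve_2sat` are hypothetical.

```python
from collections import deque

def implication_graph(n, clauses):
    """Build the graph on literals 1..n and -1..-n from a list of 2-literal clauses."""
    graph = {lit: set() for v in range(1, n + 1) for lit in (v, -v)}
    for a, b in clauses:
        graph[-a].add(b)  # (a or b) read as: not a implies b
        graph[-b].add(a)  # contrapositive: not b implies a
    return graph

def reachable(graph, start):
    """Nodes reachable from start (start included), by breadth-first search."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in graph[queue.popleft()]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

def solve_2sat(n, clauses):
    """Return None if unsatisfiable, else a dict {variable: bool} satisfying all clauses."""
    graph = implication_graph(n, clauses)
    reach = {lit: reachable(graph, lit) for lit in graph}  # 2n searches in total
    for v in range(1, n + 1):
        if -v in reach[v] and v in reach[-v]:
            return None  # a cycle through x_v and its negation
    value = {}
    for v in range(1, n + 1):
        if v in value:
            continue
        # Choose u = x_v if x_v does not lead to its negation, else u = negated x_v.
        u = v if -v not in reach[v] else -v
        for lit in reach[u]:          # the set A_u
            if lit not in value:
                value[lit] = True
                value[-lit] = False   # the negations make up B for the negated u
    return {v: value[v] for v in range(1, n + 1)}

print(solve_2sat(3, [(1, 2), (-1, 3), (-2, -3)]))
print(solve_2sat(2, [(1, 2), (-1, 2), (1, -2), (-1, -2)]))
```

The second call uses all four clauses over two variables, which forces a cycle through $x_1$ and $\bar{x}_1$, so the sketch reports unsatisfiability by returning `None`.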

Technotes. Actually, we have shown that 2SAT is in co-NL, since when $\varphi$ is unsatisfiable, a nondeterministic logspace machine can guess a literal $x_i$ and guess and follow a sequence of clauses that correspond to the edges in a path from $x_i$ to $\bar{x}_i$ and a return path from $\bar{x}_i$ to $x_i$. Since NL is closed under complements (Immerman–Szelepcsényi Theorem), we get 2SAT ∈ NL, and from (b) it will follow that 2SAT is NL-complete. An alternative algorithm strategy is to combine every pair of clauses of the form $(u \vee v)$ and $(\bar{u} \vee w)$ and obtain the new clause $(v \vee w)$. Adding this so-called resolvent clause to $\varphi$ doesn’t change whether $\varphi$ is satisfiable, since the new clause is implied by the other two. Keep doing this until you already have all possible resolvent clauses; this must happen within $\binom{2n}{2} \simeq 2n^2$ iterations since that’s how many possible binary clauses there are. Then you can show that $\varphi$ is satisfiable iff you never get a pair of clauses $(u \vee u)$ and $(\bar{u} \vee \bar{u})$ from this process. Actually, what this process of resolution has done is define the transitive closure of the graph $G_\varphi$ above, and clearly $G_\varphi$ has a path from $u$ to $\bar{u}$ iff its transitive closure has $(u, \bar{u})$ as an edge. Hence this argument can proceed along similar lines to the featured one. The reason why this process of resolution doesn’t work efficiently for 3SAT is that the resolvent of two 3CNF clauses is a 4CNF clause, and the blowup gets worse from there.
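The resolution strategy can be sketched in a few lines. This is an illustrative sketch only: clauses are stored as frozensets of integer literals (`i` for $x_i$, `-i` for $\bar{x}_i$), so that $(u \vee u)$ becomes the one-element set `{u}`, and the names `other` and `satisfiable_by_resolution` are hypothetical.

```python
def other(clause, lit):
    """The literal of a clause besides lit; for a clause (u or u) that is u itself."""
    rest = clause - {lit}
    return next(iter(rest)) if rest else lit

def satisfiable_by_resolution(clauses):
    """Close a 2CNF clause set under resolution, then look for (u or u) and (not u or not u)."""
    known = {frozenset(c) for c in clauses}
    while True:
        new = set()
        for c1 in known:
            for c2 in known:
                for u in c1:
                    if -u in c2:
                        # Resolve (u or v) with (not u or w) to obtain (v or w).
                        new.add(frozenset({other(c1, u), other(c2, -u)}))
        if new <= known:      # no new resolvents: the closure is complete
            break
        known |= new
    units = {next(iter(c)) for c in known if len(c) == 1}
    return not any(-u in units for u in units)

print(satisfiable_by_resolution([(1, 2), (-1, 2)]))
print(satisfiable_by_resolution([(1, 2), (-1, 2), (1, -2), (-1, -2)]))
```

Every resolvent again has at most two literals, which is why the clause set stays polynomially bounded here; the sketch makes no attempt to be efficient beyond that.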

(2) Suppose we have a Turing machine $M$ that on any input $x$ calls itself recursively on some shorter strings $y_1, \ldots, y_k$. Here $k$ may depend on $x$ and may even be zero: $M(x)$ might give a yes/no answer without recursing (note $M(\lambda)$ cannot recurse). Suppose that $M$ runs in polynomial time $p(n)$ apart from the recursive calls, which entails also that $k \le p(n)$. Show that $L(M)$ belongs to PSPACE.

Answer: To convert the oracle TM $M$ into a non-oracle TM $M'$, we can imitate an execution stack on one new worktape by storing “blocks,” each of which includes a string $y$ on which a recursive call is being made. The other worktapes of $M$ are enhanced by $M'$ to include a record of the current state $q$ and the position $i$ of the scanned character on that tape at the time a recursive call is made. As we have seen with other theorems, this adds a minimal amount of space so the usage per frame is still $O(p(n))$.

Whenever $M$ makes a recursive call $M(y)$, the machine $M'$ writes a frame divider # on every tape, copies $y$ from the query tape to the stack tape where it is treated as a read-only input, and pretends the other worktapes are freshly blank beginning on the cell after the #. Thus $M'$ can simulate $M(y)$ directly. Either $M(y)$ will make a recursive call, which $M'$ handles by repeating the above, or it will return a yes/no value to its caller. In the latter case, $M'$ blanks the parts to the right of the rightmost # (which are left over from the current frame) and pops the #, on each worktape plus the execution stack tape. $M'$ can then march its own tape heads to the positions where $M$ had them at the time the oracle call was made, load the saved state, and continue the previous frame.

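No other space is needed to complete the computation. Because each recursive call is on a strictly shorter string, the stack holds at most one frame for each input length $m \le n$, so the space used on any tape is at most order-of $\sum_{m=1}^{n} p(m)$, which is $O(n\,p(n))$. Thus $M'$ runs in space a polynomial of degree at most one more than the degree of $p$, so $L(M') \in \mathrm{PSPACE}$.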
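The frame discipline described above can be mimicked in software. The sketch below is a loose analogy rather than a Turing machine simulation: it assumes $M$ is modeled as a Python generator that yields each shorter string it wants to recurse on and receives the yes/no answer back, and the names `run_with_explicit_stack` and `even_as` are hypothetical. It also reports the peak number of frames, which never exceeds $n + 1$ for an input of length $n$.

```python
from typing import Callable, Generator, List, Tuple

# Hypothetical model of M: a generator that yields each shorter string it wants
# to recurse on, receives the yes/no answer via send(), and returns its verdict.
Machine = Callable[[str], Generator[str, bool, bool]]

def run_with_explicit_stack(machine: Machine, x: str) -> Tuple[bool, int]:
    """Evaluate machine(x) with a hand-managed stack; return (answer, peak depth)."""
    stack: List[Tuple[str, Generator[str, bool, bool]]] = [(x, machine(x))]
    peak = 1
    pending = None  # None: top frame is fresh; a bool: answer owed to the top frame
    while True:
        arg, frame = stack[-1]
        try:
            query = next(frame) if pending is None else frame.send(pending)
        except StopIteration as done:
            stack.pop()                          # pop the finished frame
            pending = bool(done.value)
            if not stack:
                return pending, peak
            continue
        if len(query) >= len(arg):
            raise ValueError("recursive calls must be on shorter strings")
        stack.append((query, machine(query)))    # push a fresh frame for M(query)
        peak = max(peak, len(stack))
        pending = None

def even_as(x: str):
    """Sample machine: accepts strings with an even number of 'a' characters."""
    if x == "":
        return True          # the empty string is answered without recursing
    rest = yield x[1:]       # one recursive call on a shorter string
    return (not rest) if x[0] == "a" else rest

print(run_with_explicit_stack(even_as, "abaa"))
```

The saved generator objects play the role of the stored state and head positions, and popping a finished frame corresponds to erasing everything to the right of the rightmost #.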

Technote: In the proof of Savitch’s Theorem for a given language $A$ in nondeterministic space $s(n)$, we build a deterministic $M'$ that makes recursive calls that determine the predicate $R_k(I, J) \equiv$ “$I$ can go to $J$ by a (nondeterministic) path of at most $2^k$ steps,” for $k = 0$ to (basically) $s(n)$. The recursive calls are $R_{k-1}(I, Y_j)$ and $R_{k-1}(Y_j, J)$ for all possible IDs $Y_j$, which are generated by a big deterministic loop over all the IDs. The current frame pops with a “true” return iff both calls return “true”; otherwise it increments the loop and does the two calls for $Y_{j+1}$, or if the loop exits it pops with a “false” return value. In this case the $Y_j$’s aren’t shorter, but we know the depth of the recursion is bounded by $s(n)$. Hence the same analysis gives an overall space bound of $O(s(n) \cdot s(n))$, thus placing $A$ into $\mathrm{DSPACE}[s(n)^2]$.
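The recursion for $R_k$ can be sketched on an explicit graph. This is only an illustration under an assumption: the IDs are modeled as the keys of a dictionary `graph` that maps each node to its set of one-step successors, and the name `reach` is hypothetical. It takes exponential time on purpose; the point is that each frame stores only $I$, $J$, $k$, and the loop variable.

```python
def reach(graph, i, j, k):
    """R_k(i, j): True iff j can be reached from i by a path of at most 2**k steps."""
    if k == 0:
        return i == j or j in graph[i]       # zero steps or one step
    # Deterministic loop over every candidate midpoint y.
    for y in graph:
        if reach(graph, i, y, k - 1) and reach(graph, y, j, k - 1):
            return True                      # both halves succeeded for this y
    return False                             # loop exhausted without success

# Hypothetical example: a directed path 0 -> 1 -> 2 -> 3.
path = {0: {1}, 1: {2}, 2: {3}, 3: set()}
print(reach(path, 0, 3, 2))   # asks about paths of at most 4 steps
print(reach(path, 0, 3, 1))   # asks about paths of at most 2 steps
```

The recursion depth equals `k`, so with frames of size proportional to one ID the total space is the depth times the frame size, matching the $s(n) \cdot s(n)$ bound.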