Dr. Juyang Weng, Dr. Shaoyun Chen, Michigan State University

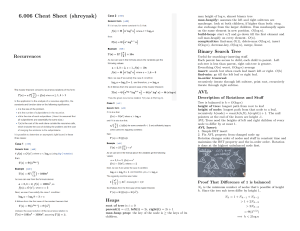

PROS

Efficient for predictable cases

Easier to understand

Computationally inexpensive

CONS

Not generic

Not able to deal with every possible case

Potentially huge number of exhaustive cases.

[1]

Each leaf node represents a sample (X, Y)

Each node represents a set of data points with increased similarity

One of the central ideas in Shoslif’s approach

Given X, find f(X) at the corresponding leaf node after traversal.

Building a Regression Partition Tree

Take the sample space S.

Divide the space into b cells, each a child of the root.

The analysis performs automatic derivation of features (discussed later).

Continue to do this until the leaf nodes have a single data point or many data points with virtually the same Y.
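The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes b = 2, and the split rule (cut at the mean along the coordinate with the largest spread) is a placeholder for the feature derivation discussed later. The names `Node`, `build_rpt`, `count_leaves` and the parameter `y_tol` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vector = Tuple[float, ...]
Sample = Tuple[Vector, float]  # one training pair (X, Y)


@dataclass
class Node:
    center: Vector                       # mean of the X vectors in this cell
    children: List["Node"] = field(default_factory=list)
    y: Optional[float] = None            # stored output, set only at leaves


def mean_vector(xs: List[Vector]) -> Vector:
    n = len(xs)
    return tuple(sum(x[i] for x in xs) / n for i in range(len(xs[0])))


def build_rpt(samples: List[Sample], y_tol: float = 1e-3) -> Node:
    xs = [x for x, _ in samples]
    ys = [y for _, y in samples]
    center = mean_vector(xs)
    # Stop when the cell holds one point or all Y values are virtually equal.
    if len(samples) == 1 or max(ys) - min(ys) <= y_tol:
        return Node(center=center, y=sum(ys) / len(ys))
    # Assumed split rule (b = 2): cut at the mean of the widest coordinate.
    dim = max(range(len(center)),
              key=lambda i: max(x[i] for x in xs) - min(x[i] for x in xs))
    left = [s for s in samples if s[0][dim] <= center[dim]]
    right = [s for s in samples if s[0][dim] > center[dim]]
    if not left or not right:            # identical X vectors: cannot split
        return Node(center=center, y=sum(ys) / len(ys))
    return Node(center=center,
                children=[build_rpt(left, y_tol), build_rpt(right, y_tol)])


def count_leaves(node: Node) -> int:
    if not node.children:
        return 1
    return sum(count_leaves(child) for child in node.children)


samples = [((0.0, 0.0), 1.0), ((0.1, 0.0), 1.0),
           ((1.0, 1.0), 5.0), ((1.0, 0.9), 5.0)]
tree = build_rpt(samples)
```

In this toy data set the two clusters already share the same Y, so the recursion stops after a single split and each child becomes a leaf.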

Input: X’

Output: control signal Y

Recursively compare the input with the center of each child node.

Proceed toward the child whose center is closest to the input until you reach a leaf node.

Use the control signal stored at that leaf.

Explore the top k paths to find the top k nearest centers.
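The retrieval procedure can be sketched as a beam search over the tree. This is an illustrative reading of the slide, assuming Euclidean distance between the input and node centers; the `Node` class, `query_top_k`, and the hand-built tree are hypothetical.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vector = Tuple[float, ...]


@dataclass
class Node:
    center: Vector
    children: List["Node"] = field(default_factory=list)
    y: Optional[float] = None            # control signal, set only at leaves


def query_top_k(root: Node, x: Vector, k: int = 1) -> List[Node]:
    """Follow the k most promising paths and return up to k nearest leaves."""
    frontier = [root]
    leaves: List[Node] = []
    while frontier:
        candidates: List[Node] = []
        for node in frontier:
            if node.children:
                candidates.extend(node.children)
            else:
                leaves.append(node)
        # Keep only the k children whose centers are closest to the input.
        candidates.sort(key=lambda n: math.dist(n.center, x))
        frontier = candidates[:k]
    leaves.sort(key=lambda n: math.dist(n.center, x))
    return leaves[:k]


# Hand-built two-level tree with made-up steering values.
a1, a2 = Node((0.0, 0.0), y=-1.0), Node((0.2, 0.1), y=-0.5)
b1, b2 = Node((1.0, 1.0), y=1.0), Node((0.8, 0.9), y=0.5)
root = Node((0.5, 0.5), children=[Node((0.0, 0.0), children=[a1, a2]),
                                  Node((1.0, 1.0), children=[b1, b2])])

best = query_top_k(root, (0.1, 0.1), k=1)
top2 = query_top_k(root, (0.1, 0.1), k=2)
```

With k = 1 the search commits to a single path; a larger k guards against an early wrong turn near a cell boundary at the cost of more distance computations.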

Feature Selection: selects features from a set of human-defined features.

Feature Extraction: extracts selected features from images.

Feature Derivation: derives features from high-dimensional vector inputs.

Using Principal Component Analysis (PCA), Shoslif recursively partitions the space S, working within the subspace S’ where the training samples lie.

Computes the principal component vectors:

◦ $V_1, V_2, V_3, V_4, \ldots, V_N$

MEF: Most Expressive Features

They explain the variation in the sample set

The hyperplane that has $V_1$ as its normal and that passes through the centroid of the samples forms a partition.

Samples on one side of the hyperplane go to one side of the tree; samples on the other side go to the other.
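As a rough sketch of the MEF split, the first principal component can be estimated by power iteration on the sample covariance matrix, and each sample assigned to a side by the sign of its projection. This is a pure-Python illustration under simplifying assumptions (small dense data, a fixed iteration count, an arbitrary start vector); the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def centroid(points: Sequence[Vector]) -> List[float]:
    n = len(points)
    return [sum(p[i] for p in points) / n for i in range(len(points[0]))]


def first_principal_component(points: Sequence[Vector], iters: int = 200):
    """Return (centroid, unit vector approximating the top eigenvector)."""
    c = centroid(points)
    d = len(c)
    centered = [[p[i] - c[i] for i in range(d)] for p in points]
    cov = [[sum(r[i] * r[j] for r in centered) / len(points)
            for j in range(d)] for i in range(d)]
    # Asymmetric start vector so it is unlikely to be orthogonal to V_1.
    v = [1.0 / (i + 1) for i in range(d)]
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:                  # all samples identical
            break
        v = [x / norm for x in w]
    return c, v


def split_by_v1(points: Sequence[Vector]):
    """Partition by the hyperplane through the centroid with normal V_1."""
    c, v1 = first_principal_component(points)
    left, right = [], []
    for p in points:
        side = sum((p[i] - c[i]) * v1[i] for i in range(len(c)))
        (left if side <= 0 else right).append(p)
    return left, right


pts = [(-2.0, 0.1), (-1.0, -0.1), (1.0, 0.1), (2.0, -0.1)]
_, v1 = first_principal_component(pts)
left, right = split_by_v1(pts)
```

The sign of an eigenvector is arbitrary, so which group ends up "left" or "right" carries no meaning; only the grouping does.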

PCA LDA

[1]

We can do better with class information.

MDF: Most Discriminating Features

Similar to PCA

This method cuts more along the class boundaries.

Differences

MEF: samples spread out widely, and the samples of different classes tend to mix together.

MDF: samples are clustered more tightly, and the samples from different classes are farther apart.
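The difference can be illustrated with a simplified two-class, two-dimensional case using Fisher's linear discriminant, $w \propto S_w^{-1}(m_A - m_B)$. This is a reduced special case chosen for illustration, not the multi-class procedure used in [1]; the data and function names are made up.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def mean2(pts: List[Point]) -> Point:
    n = len(pts)
    return (sum(p[0] for p in pts) / n, sum(p[1] for p in pts) / n)


def scatter2(pts: List[Point], m: Point) -> Tuple[float, float, float]:
    """Entries (sxx, sxy, syy) of the scatter matrix around the mean m."""
    sxx = sum((p[0] - m[0]) ** 2 for p in pts)
    sxy = sum((p[0] - m[0]) * (p[1] - m[1]) for p in pts)
    syy = sum((p[1] - m[1]) ** 2 for p in pts)
    return sxx, sxy, syy


def fisher_direction(class_a: List[Point], class_b: List[Point]) -> Point:
    ma, mb = mean2(class_a), mean2(class_b)
    axx, axy, ayy = scatter2(class_a, ma)
    bxx, bxy, byy = scatter2(class_b, mb)
    sxx, sxy, syy = axx + bxx, axy + bxy, ayy + byy   # within-class scatter
    det = sxx * syy - sxy * sxy
    if det == 0.0:
        raise ValueError("within-class scatter matrix is singular")
    dx, dy = ma[0] - mb[0], ma[1] - mb[1]
    # Multiply the 2x2 inverse of S_w by the difference of class means.
    wx = (syy * dx - sxy * dy) / det
    wy = (-sxy * dx + sxx * dy) / det
    norm = math.hypot(wx, wy)
    return (wx / norm, wy / norm)


def project(p: Point, w: Point) -> float:
    return p[0] * w[0] + p[1] * w[1]


# Both classes are stretched along x but separated along y.
class_a = [(-2.0, 0.5), (0.0, 0.6), (2.0, 0.4)]
class_b = [(-2.0, -0.5), (0.0, -0.4), (2.0, -0.6)]
w = fisher_direction(class_a, class_b)
```

Here most of the variance lies along x, so a variance-based (MEF) direction would mix the two classes, while the discriminant direction points almost entirely along y and keeps them apart.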

Using a model similar to a Markov chain model

$S_t$: state at time $t$

At time $t$, the system is at state $S_t$ and observes image $X_t$. It outputs control vector $Y_{t+1}$ and enters the next state $S_{t+1}$.

$(S_{t+1}, Y_{t+1}) = f(S_t, X_t)$
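The state-based loop can be sketched as follows. In the system described here f is realized by the learned tree; in this sketch a small lookup table stands in for it, and the state names, symbolic observations, and steering values are all hypothetical.

```python
from typing import Callable, Dict, Iterable, List, Tuple

State = str
Observation = str     # stands in for the image X_t
Control = float       # stands in for the control vector Y_{t+1}
Transition = Callable[[State, Observation], Tuple[State, Control]]


def run_controller(f: Transition, s0: State,
                   observations: Iterable[Observation]
                   ) -> Tuple[State, List[Control]]:
    """Apply (S_{t+1}, Y_{t+1}) = f(S_t, X_t) once per observation."""
    state = s0
    controls: List[Control] = []
    for x in observations:
        state, y = f(state, x)
        controls.append(y)
    return state, controls


# Hypothetical transition table used in place of the learned mapping.
TABLE: Dict[Tuple[State, Observation], Tuple[State, Control]] = {
    ("straight", "corridor"): ("straight", 0.0),
    ("straight", "corner"): ("turning", 0.5),
    ("turning", "corner"): ("turning", 0.5),
    ("turning", "corridor"): ("straight", 0.0),
}


def table_f(state: State, x: Observation) -> Tuple[State, Control]:
    return TABLE[(state, x)]


final_state, controls = run_controller(
    table_f, "straight", ["corridor", "corner", "corner", "corridor"])
```

Because the next state depends on the current one, the same observation can lead to different controls at different points along the route.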

[1]

A special state A (ambiguous) indicates that local visual attention is needed.

E.g., the trainer defined this state for a segment right before a turn, when the image area that revealed the visual difference between turn types was mainly in a small part of the scene.

State A directs the system to look at such landmarks through a prespecified image subwindow so that the system issues the correct steering action before it is too late.
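One way to picture the attention mechanism: when the state is A, only a prespecified subwindow of the image is passed on for analysis. The sketch below assumes the image is a list of pixel rows and the window is given as (top, left, height, width); these conventions and the function names are illustrative assumptions.

```python
from typing import List, Tuple

Image = List[List[int]]
Window = Tuple[int, int, int, int]   # (top, left, height, width), assumed


def crop(image: Image, window: Window) -> Image:
    top, left, height, width = window
    return [row[left:left + width] for row in image[top:top + height]]


def select_input(state: str, image: Image, window: Window) -> Image:
    """In the ambiguous state "A", attend only to the subwindow."""
    return crop(image, window) if state == "A" else image


# 4x4 test image whose pixel values are 0..15 in row-major order.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
attended = select_input("A", image, (1, 2, 2, 2))
unchanged = select_input("straight", image, (1, 2, 2, 2))
```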

Batch learning: all the training data are available at the time the system learns.

Incremental learning: training samples are available only one at a time.

Samples are discarded once they have been used.

Memory is required to store each image only once.

Similar images are discarded.

Step 1

• Query the current RPT.

Step 2

• If the difference between the current RPT’s output and the desired control signal exceeds a prespecified tolerance, go to Step 3; otherwise, go to Step 1.

Step 3

• Shoslif learns the current sample to update the RPT.
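The three steps can be sketched as a loop over a stream of samples. To keep the example short, a nearest-neighbor memory stands in for the RPT; that substitution, the class name `NearestNeighborMemory`, and the scalar control signal are assumptions made for illustration.

```python
import math
from typing import Iterable, List, Optional, Tuple

Vector = Tuple[float, ...]


class NearestNeighborMemory:
    """Stand-in for the RPT: answers with the Y of the nearest stored X."""

    def __init__(self) -> None:
        self.samples: List[Tuple[Vector, float]] = []

    def query(self, x: Vector) -> Optional[float]:
        if not self.samples:
            return None
        return min(self.samples, key=lambda s: math.dist(s[0], x))[1]

    def learn(self, x: Vector, y: float) -> None:
        self.samples.append((x, y))


def incremental_train(model: NearestNeighborMemory,
                      stream: Iterable[Tuple[Vector, float]],
                      tolerance: float) -> int:
    """Return how many samples were actually learned."""
    learned = 0
    for x, y_desired in stream:
        y_out = model.query(x)                               # Step 1
        if y_out is None or abs(y_out - y_desired) > tolerance:  # Step 2
            model.learn(x, y_desired)                        # Step 3
            learned += 1
        # Otherwise the sample is already well covered and is discarded.
    return learned


model = NearestNeighborMemory()
stream = [((0.0,), 0.0), ((0.1,), 0.02), ((1.0,), 1.0), ((0.9,), 0.95)]
learned = incremental_train(model, stream, tolerance=0.1)
```

Of the four samples in this toy stream, only the two that the model could not already predict within tolerance are stored.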

Compared Shoslif with feedforward neural networks and radial basis function networks for approximating stateless appearance-based navigation systems.

Shoslif did significantly better than both methods.

Extension to face detection, speech recognition and vision-based robot arm action learning.

Shoslif performs better in benign scenes.

The state-based method allows more flexibility.

However, many states still need to be specified for different environment types.

1. Dr. Juyang Weng and Dr. Shaoyun Chen, “Visual Learning with Navigation as an Example,” IEEE Intelligent Systems, September/October 2000.