SHARE: Study of Hubble Archive & Reprocessing Enhancements

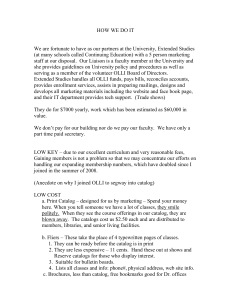
