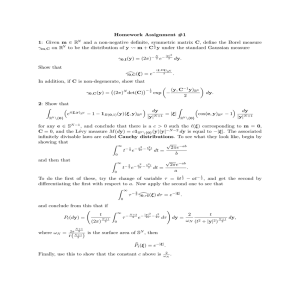

Homework Assignment #5


1: A family $\{X(t) : t \ge 0\}$ of square integrable, $\mathbb{R}$-valued random variables on a probability space $(\Omega, \mathcal{F}, \mathbb{P})$ is called a centered Gaussian process if its span in $L^2(\mathbb{P}; \mathbb{R})$ is a centered Gaussian family. Given such a process, define its covariance function $C(s,t) \equiv \mathbb{E}^{\mathbb{P}}\bigl[X(s)X(t)\bigr]$.

(i) Given $0 \le t_0 < \cdots < t_n$, let $\mu_{t_0,\dots,t_n}$ be the distribution of $\bigl(X(t_0), \dots, X(t_n)\bigr)$ and express the Fourier transform of $\mu_{t_0,\dots,t_n}$ in terms of $C$. In particular, conclude that $C$ uniquely determines the distribution of $\{X(t) : t \ge 0\}$ as a stochastic process.

(ii) Assuming that for each $T > 0$ there exist a $K(T) < \infty$ and an $\alpha_T > 0$ such that

$$C(t,t) - 2C(s,t) + C(s,s) \le K(T)\,|t - s|^{\alpha_T} \quad \text{for } s, t \in [0,T],$$

show that there exists a continuous version, in the sense that there exists a family $\{\tilde{X}(t) : t \ge 0\}$ such that $t \mapsto \tilde{X}(t)$ is continuous and $\tilde{X}(t) = X(t)$ (a.s., $\mathbb{P}$) for each $t \ge 0$.

(iii) Assume that $C(s,s) > 0$, and show that $X(t) - \frac{C(s,t)}{C(s,s)} X(s)$ is independent of $X(s)$ and therefore that, for any Borel measurable $\varphi : \mathbb{R} \longrightarrow [0,\infty)$,

$$\int_{\mathbb{R}} \varphi\!\left( \frac{C(s,t)}{C(s,s)}\, X(s) + \sqrt{C(t,t) - \frac{C(s,t)^2}{C(s,s)}}\; y \right) \gamma_{0,1}(dy)$$

is the conditional expectation of $\varphi\bigl(X(t)\bigr)$ given $\sigma(\{X(s)\})$.
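The formula in (iii) can be explored numerically. The following is a minimal sketch, not part of the assignment: the function names, the trapezoid quadrature, and the cutoff at 8 standard deviations are all illustrative assumptions. It computes the regression coefficient $C(s,t)/C(s,s)$, the residual variance under the square root, and the Gaussian integral for a given $\varphi$.

```python
import math

def conditional_law(c_ss, c_st, c_tt):
    """Return (slope, resid_var): given X(s), X(t) is N(slope * X(s), resid_var)."""
    if c_ss <= 0.0:
        raise ValueError("C(s, s) must be strictly positive")
    slope = c_st / c_ss
    resid_var = c_tt - c_st ** 2 / c_ss
    return slope, resid_var

def residual_covariance(c_ss, c_st):
    """Cov(X(t) - slope * X(s), X(s)) = C(s,t) - slope * C(s,s)."""
    slope = c_st / c_ss
    return c_st - slope * c_ss

def conditional_expectation(phi, x_s, c_ss, c_st, c_tt, n=4000, cutoff=8.0):
    """Approximate the integral in (iii) at X(s) = x_s by the trapezoid rule.

    The quadrature and the cutoff are assumptions made for this sketch.
    """
    slope, resid_var = conditional_law(c_ss, c_st, c_tt)
    scale = math.sqrt(max(resid_var, 0.0))
    step = 2.0 * cutoff / n
    total = 0.0
    for k in range(n + 1):
        y = -cutoff + k * step
        weight = step if 0 < k < n else step / 2.0
        density = math.exp(-0.5 * y * y) / math.sqrt(2.0 * math.pi)
        total += weight * density * phi(slope * x_s + scale * y)
    return total

# Illustration with the Brownian covariance C(s,t) = min(s,t) at s = 1, t = 3.
slope, resid_var = conditional_law(1.0, 1.0, 3.0)
second_moment = conditional_expectation(lambda u: u * u, 0.5, 1.0, 1.0, 3.0)
```

For $\varphi(u) = u^2$ the integral should equal the squared conditional mean plus the residual variance, which gives a quick consistency check on the square-root term.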

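(iv) Show that $\{X(t) : t \ge 0\}$ is Markov in the sense that, for all $0 \le s < t$ and non-negative Borel measurable $\varphi$'s,

$$\mathbb{E}^{\mathbb{P}}\bigl[\varphi\bigl(X(t)\bigr) \,\big|\, \sigma\bigl(\{X(\tau) : \tau \in [0,s]\}\bigr)\bigr] = \mathbb{E}^{\mathbb{P}}\bigl[\varphi\bigl(X(t)\bigr) \,\big|\, \sigma\bigl(\{X(s)\}\bigr)\bigr],$$

if and only if $C(\tau,t)\,C(s,s) = C(s,t)\,C(\tau,s)$ for all $0 \le \tau \le s < t$.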
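The covariance identity in (iv) is easy to test on a finite grid of times. The sketch below is only a numerical illustration under stated assumptions: `markov_defect` is a hypothetical helper, the grid is arbitrary, and the squared-exponential covariance is an outside example chosen to show a failure of the identity. The Ornstein-Uhlenbeck covariance used here is the one stated in Problem 2.

```python
import math

def bm_cov(s, t):
    """Brownian covariance min(s, t)."""
    return min(s, t)

def ou_cov(s, t):
    """Ornstein-Uhlenbeck covariance from Problem 2."""
    return (math.exp(-abs(s - t)) - math.exp(-(s + t))) / 2.0

def squared_exp_cov(s, t):
    """Illustrative covariance exp(-(s - t)^2); not taken from the assignment."""
    return math.exp(-(s - t) ** 2)

def markov_defect(cov, times):
    """Largest |C(tau,t) C(s,s) - C(s,t) C(tau,s)| over grid points tau <= s < t."""
    grid = sorted(times)
    worst = 0.0
    for i, tau in enumerate(grid):
        for j in range(i, len(grid)):
            s = grid[j]
            for t in grid[j + 1:]:
                gap = abs(cov(tau, t) * cov(s, s) - cov(s, t) * cov(tau, s))
                worst = max(worst, gap)
    return worst

grid = [0.5, 1.0, 2.0, 3.0]
defects = {
    "brownian": markov_defect(bm_cov, grid),
    "ornstein_uhlenbeck": markov_defect(ou_cov, grid),
    "squared_exponential": markov_defect(squared_exp_cov, grid),
}
```

A defect near zero on a grid is only evidence, not a proof, that the identity holds for all $\tau \le s < t$; a clearly positive defect does show that the identity fails.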

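(v) Take $\Omega = C\bigl([0,\infty); \mathbb{R}\bigr)$, $\mathcal{F} = \mathcal{B}_\Omega$, and $\mathbb{P} = \mathcal{W}$. Let $h : [0,\infty)^2 \longrightarrow \mathbb{R}$ be a Borel measurable function for which

$$\int_0^\infty |h(t,\tau)|^2 \, d\tau < \infty \quad \text{for all } t \ge 0,$$

and set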

$$X(t) = \int_0^\infty h(t,\tau)\, dw(\tau).$$

Show that $\{X(t) : t \ge 0\}$ is a centered Gaussian process with covariance

$$C(s,t) = \int_0^\infty h(s,\tau)\, h(t,\tau)\, d\tau.$$

Show that this process will have a continuous version if for each $T > 0$ there exist a $K(T) < \infty$ and an $\alpha_T > 0$ such that

$$\int_0^\infty |h(t,\tau) - h(s,\tau)|^2 \, d\tau \le K(T)\,(t - s)^{\alpha_T} \quad \text{for } 0 \le s < t \le T.$$

2: Aside from Brownian motion, the most famous Gaussian process is the Ornstein-Uhlenbeck process. Namely, continue in the setting of (v) above, and, for $x \in \mathbb{R}$ and $w \in C\bigl([0,\infty); \mathbb{R}\bigr)$, show that there is precisely one solution $X(\,\cdot\,, x)(w)$ to the integral equation

$$X(t,x)(w) = x + w(t) - \int_0^t X(\tau,x)(w)\, d\tau. \tag{1}$$

In fact, use Riemann-Stieltjes integration to show that

$$X(t,x) = e^{-t} x + e^{-t} \int_0^t e^{\tau}\, dw(\tau).$$

In particular, conclude that $\{X(t,0) : t \ge 0\}$ is a centered Gaussian process with covariance

$$C(s,t) = \frac{e^{-|s-t|} - e^{-(s+t)}}{2}.$$
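The claim that $e^{-t}x + e^{-t}\int_0^t e^{\tau}\,dw(\tau)$ solves $X(t,x) = x + w(t) - \int_0^t X(\tau,x)\,d\tau$ is pathwise, so it can be sanity-checked on a single deterministic path. The sketch below is an illustration under assumptions: it uses the smooth stand-in path $w(t) = \sin t$ (a Brownian path is not smooth), midpoint-tagged Riemann-Stieltjes sums, and a trapezoid rule for the time integral. The helper names are hypothetical.

```python
import math

def ou_path(x, w, t_max, n):
    """Evaluate e^{-t} x + e^{-t} * int_0^t e^tau dw(tau) on a uniform grid.

    The Stieltjes integral is approximated by sums with midpoint tags.
    Assumes w(0) = 0, as for every path in the assignment's setting.
    """
    step = t_max / n
    times = [k * step for k in range(n + 1)]
    stieltjes = 0.0
    values = [x]
    for k in range(n):
        midpoint = times[k] + step / 2.0
        stieltjes += math.exp(midpoint) * (w(times[k + 1]) - w(times[k]))
        values.append(math.exp(-times[k + 1]) * (x + stieltjes))
    return times, values

def integral_equation_residual(x, w, times, values):
    """Max over the grid of |X(t) - (x + w(t) - int_0^t X dtau)| (trapezoid rule)."""
    running = 0.0
    worst = 0.0
    for k in range(1, len(times)):
        running += 0.5 * (values[k - 1] + values[k]) * (times[k] - times[k - 1])
        worst = max(worst, abs(values[k] - (x + w(times[k]) - running)))
    return worst

times, values = ou_path(1.0, math.sin, 2.0, 2000)
residual = integral_equation_residual(1.0, math.sin, times, values)
```

For $w = \sin$ the Stieltjes integral has the closed form $\bigl(e^{t}(\cos t + \sin t) - 1\bigr)/2$, which gives an independent check on the discretization.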

Although one can use (iv) above to check that $\{X(t,0) : t \ge 0\}$ is Markov, there is a better way. Namely, show that, for $0 \le s < t$,

$$X(t,x)(w) = e^{-(t-s)} X(s,x)(w) + e^{-t} \int_s^t e^{\tau}\, dw(\tau) = X\bigl(t-s,\, X(s,x)(w)\bigr)(\delta_s w),$$

where $\delta_s w(t) = w(s+t) - w(s)$, and conclude that

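$$\mathbb{E}^{\mathcal{W}}\bigl[\varphi\bigl(X(t,x)\bigr) \,\big|\, \mathcal{B}_s\bigr] = \int_{\mathbb{R}} \varphi(y)\, p\bigl(t-s,\, X(s,x),\, y\bigr)\, dy,$$

where

$$p(\tau,x,y) = \bigl(\pi(1 - e^{-2\tau})\bigr)^{-\frac{1}{2}} \exp\!\left( -\frac{(y - e^{-\tau}x)^2}{1 - e^{-2\tau}} \right).$$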
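The kernel $p(\tau,x,y)$ should be the Gaussian density in $y$ with mean $e^{-\tau}x$ and variance $(1 - e^{-2\tau})/2$, the latter being $C(\tau,\tau)$ for the covariance stated above. The following sketch checks this by plain quadrature; the grid size and integration window are assumptions made for the illustration, and `density_moments` is a hypothetical helper.

```python
import math

def p(tau, x, y):
    """Transition density from the assignment, for tau > 0."""
    spread = 1.0 - math.exp(-2.0 * tau)  # equals twice the variance
    return math.exp(-(y - math.exp(-tau) * x) ** 2 / spread) / math.sqrt(math.pi * spread)

def density_moments(tau, x, n=20000, half_width=10.0):
    """Return (mass, mean, variance) of y -> p(tau, x, y) via the trapezoid rule."""
    center = math.exp(-tau) * x
    step = 2.0 * half_width / n
    m0 = m1 = m2 = 0.0
    for k in range(n + 1):
        y = center - half_width + k * step
        weight = step if 0 < k < n else step / 2.0
        mass = weight * p(tau, x, y)
        m0 += mass
        m1 += y * mass
        m2 += y * y * mass
    mean = m1 / m0
    return m0, mean, m2 / m0 - mean ** 2

mass, mean, variance = density_moments(0.7, 1.5)
```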

Finally, show that $\{X(t,0) : t \ge 0\}$ has the same distribution as

$$\left\{ e^{-t}\, w\!\left( \frac{e^{2t} - 1}{2} \right) : t \ge 0 \right\}.$$
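Since both processes are centered Gaussian, part (i) of Problem 1 reduces the last claim to a comparison of covariance functions. The sketch below compares them on a small grid; it assumes only that Brownian motion has covariance $\min(s,t)$, and the function names and the grid are illustrative.

```python
import math

def ou_cov(s, t):
    """Covariance of X(t, 0) as stated in Problem 2."""
    return (math.exp(-abs(s - t)) - math.exp(-(s + t))) / 2.0

def time_changed_bm_cov(s, t):
    """Covariance of e^{-t} w((e^{2t} - 1) / 2), using Cov(w(a), w(b)) = min(a, b)."""
    def clock(u):
        return (math.exp(2.0 * u) - 1.0) / 2.0
    return math.exp(-(s + t)) * min(clock(s), clock(t))

grid = [0.0, 0.25, 0.5, 1.0, 2.0, 3.5]
max_gap = max(abs(ou_cov(s, t) - time_changed_bm_cov(s, t)) for s in grid for t in grid)
```

Agreement on a grid is a sanity check only; the exercise still asks for the identity for all $s, t \ge 0$.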